# Radix Sort

## Background
Radix Sort is a non-comparison-based, stable sorting algorithm that conventionally uses counting sort as a subroutine.

Radix Sort performs counting sort several times on the numbers, starting with the least-significant segment and moving
to the most-significant segment. What a 'segment' refers to is explained below.

10 | | -### Segments |

11 | | -The definition of a 'segment' is user defined and defers from implementation to implementation. |

12 | | -It is most commonly defined as a bit chunk. |

| 9 | +### Idea |

| 10 | +The definition of a 'segment' is user-defined and could vary depending on implementation. |

13 | 11 |

|

14 | | -For example, if we aim to sort integers, we can sort each element |

15 | | -from the least to most significant digit, with the digits being our 'segments'. |

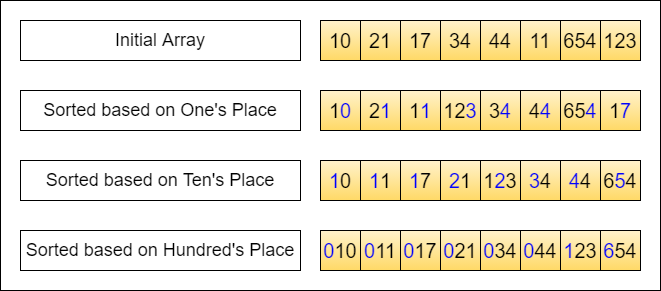

| 12 | +Let's consider sorting an array of integers. We interpret the integers in base-10 as shown below. |

| 13 | +Here, we treat each digit as a 'segment' and sort (counting sort as a sub-routine here) the elements |

| 14 | +from the least significant digit (right) to most significant digit (left). In other words, the sub-routine sort is just |

| 15 | +focusing on 1 digit at a time. |

<div align="center">
  <img src="../../../../../../docs/assets/images/RadixSort.png" width="65%">
  <br>
  Credits: Level Up Coding
</div>

The astute would note that a **stable version of counting sort** has to be used here; otherwise, the relative ordering
established by previous segments might get disrupted when sorting on subsequent segments.
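
To make the idea concrete, here is a minimal Python sketch of base-10 LSD radix sort. It is not the project's own implementation. `counting_sort_by_digit` and `radix_sort_base10` are hypothetical names chosen for illustration, and the sketch assumes non-negative integers. The `stable` flag exists only to show what goes wrong. With `stable=False`, the counting pass scans left to right, which reverses elements that share a digit.

```python
def counting_sort_by_digit(arr, exp, stable=True):
    """One counting-sort pass keyed on the decimal digit selected by exp (1, 10, 100, ...).

    stable=True scans the input right to left, so elements with equal digits keep their
    relative order. stable=False scans left to right instead, which reverses elements that
    share a digit (a deliberately broken variant, for comparison only).
    """
    count = [0] * 10
    for x in arr:
        count[(x // exp) % 10] += 1
    # Prefix sums: count[d] becomes one past the last output slot for digit d.
    for d in range(1, 10):
        count[d] += count[d - 1]
    out = [0] * len(arr)
    for x in (reversed(arr) if stable else arr):
        d = (x // exp) % 10
        count[d] -= 1  # fill each digit's slots from the back
        out[count[d]] = x
    return out


def radix_sort_base10(arr, stable=True):
    """LSD radix sort for non-negative integers, one decimal digit per pass."""
    result = list(arr)
    if not result:
        return result
    largest = max(result)
    exp = 1
    while largest // exp > 0:  # one pass per digit of the largest element
        result = counting_sort_by_digit(result, exp, stable)
        exp *= 10
    return result


print(radix_sort_base10([170, 45, 75, 90, 802, 24, 2, 66]))  # [2, 24, 45, 66, 75, 90, 170, 802]
print(radix_sort_base10([12, 11], stable=False))            # [12, 11]: the second pass undoes the first
```

In the unstable run, the first pass correctly orders 11 before 12. The second pass then sees two equal tens digits and swaps them back.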

### Segment Size
Naturally, the choice of a single base-10 digit as the segment is an arbitrary one. The concept of Radix Sort
remains the same regardless of the segment size, allowing for flexibility in its implementation.

In practice, numbers are often interpreted in their binary representation, with the 'segment' commonly defined as a
bit chunk of a specified size (usually 8 bits/1 byte, though this number could vary for optimization).

For our implementation, we utilize the binary representation of elements, partitioning them into 8-bit segments.
Given that an integer is typically represented in 32 bits, this results in four segments per integer.
By applying the sorting subroutine to each segment across all integers, we can efficiently sort the array.
This method requires sorting the array four times in total, once for each 8-bit segment.
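
The Python sketch below illustrates the 8-bit scheme described above. It assumes non-negative integers that fit in 32 unsigned bits; signed values would need extra handling that this sketch omits. `radix_sort_bytes` is a hypothetical name, not the project's function. The sketch also allocates its second buffer once and swaps the two buffers after each pass, in the spirit of the space optimization noted under Complexity Analysis.

```python
def radix_sort_bytes(arr):
    """LSD radix sort over 8-bit segments for non-negative integers below 2**32."""
    SEGMENT_BITS = 8
    RADIX = 1 << SEGMENT_BITS  # 256 possible values per segment
    MASK = RADIX - 1           # 0xFF selects the lowest 8 bits
    if any(x < 0 or x >= 1 << 32 for x in arr):
        raise ValueError("sketch assumes values in [0, 2**32)")

    src = list(arr)
    dst = [0] * len(src)  # allocated once, reused by every pass
    for shift in range(0, 32, SEGMENT_BITS):  # shifts 0, 8, 16, 24: four passes
        count = [0] * RADIX
        for x in src:
            count[(x >> shift) & MASK] += 1
        for d in range(1, RADIX):
            count[d] += count[d - 1]
        for x in reversed(src):  # right-to-left keeps the pass stable
            d = (x >> shift) & MASK
            count[d] -= 1
            dst[count[d]] = x
        src, dst = dst, src  # this pass's output becomes the next pass's input
    return src


print(radix_sort_bytes([305419896, 0, 4294967295, 256, 255, 1]))
# [0, 1, 255, 256, 305419896, 4294967295]
```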

### Implementation Invariant
At the end of the *i-th* iteration, the elements are sorted by the numeric value formed by their *i* least-significant
segments.
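
One way to sanity-check this invariant is to snapshot the array after each pass and confirm it is ordered by its lowest *i* segments. The sketch below uses the hypothetical helpers `radix_passes` and `check_invariant`. It also uses Python's stable `sorted()` with a segment key as a stand-in for the counting-sort subroutine. That keeps the focus on the invariant rather than on efficiency.

```python
def radix_passes(arr, segment_bits=8, total_bits=32):
    """Yield a snapshot of the array after each LSD pass, least significant segment first."""
    mask = (1 << segment_bits) - 1
    current = list(arr)
    for shift in range(0, total_bits, segment_bits):
        # sorted() is stable, so it stands in for the stable counting-sort pass here.
        current = sorted(current, key=lambda x: (x >> shift) & mask)
        yield current


def check_invariant(arr, segment_bits=8, total_bits=32):
    """Assert that after pass i the array is ordered by its i least-significant segments."""
    for i, snapshot in enumerate(radix_passes(arr, segment_bits, total_bits), start=1):
        low_mask = (1 << (segment_bits * i)) - 1  # keeps only the lowest i segments
        keys = [x & low_mask for x in snapshot]
        assert keys == sorted(keys), f"invariant broken after pass {i}"
    return True


data = [0x0302, 0x0101, 0x0201, 0x0103]
for i, snapshot in enumerate(radix_passes(data, total_bits=16), start=1):
    print(i, [hex(x) for x in snapshot])
# 1 ['0x101', '0x201', '0x302', '0x103']
# 2 ['0x101', '0x103', '0x201', '0x302']
```

After pass 1, the array is ordered by the low byte only, so `0x103` still sits last. After pass 2, both segments have been processed and the array is fully sorted.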

### Common Misconception
While Radix Sort is a non-comparison-based algorithm,
it still requires a total ordering among the elements to be effective.
Although it does not compare elements directly, Radix Sort achieves ordering by processing elements
based on individual segments or digits. This process depends on counting sort, which organizes elements into a
frequency map according to a **predefined, ascending order** of those segments.

## Complexity Analysis
Let b-bit words be broken into r-bit pieces, and let n be the number of elements.

*b/r* represents the number of segments and hence the number of counting sort passes. Note that each pass
of counting sort takes *O(2^r + n)* time (more commonly written as *O(k + n)*, where k is the range of key values,
which is 2^r here).

**Time**: *O((b/r) * (2^r + n))*

**Space**: *O(2^r + n)* <br>
Note that our implementation includes a slight space optimization: it creates one additional array at the start and
then alternates between the original array and this copy, writing the results of each pass of the subroutine into
whichever one was not just read from. This avoids allocating a fresh array for every pass.

### Choosing r
Previously, we said the number of segments is flexible. Indeed, it is, but for better performance, r needs to be
carefully chosen. The optimal choice of r is slightly smaller than log n, which can be justified with differentiation.
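
The differentiation can be sketched as follows. It treats r as a continuous variable and b/r as exact, which ignores the fact that a partial segment still costs a full pass:

```math
\begin{aligned}
T(r) &= \frac{b}{r}\left(2^r + n\right) \\
\frac{dT}{dr} &= \frac{b\left(r\,2^r \ln 2 - 2^r - n\right)}{r^2} = 0
\;\Longrightarrow\; 2^r\,(r \ln 2 - 1) = n \\
r &= \log_2 n - \log_2(r \ln 2 - 1) \;<\; \log_2 n \quad \text{when } r \ln 2 > 2
\end{aligned}
```

For instance, with n = 10^6 the condition 2^r (r ln 2 - 1) = n is met between r = 16 and r = 17. Both values are below log2 n ≈ 19.9.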

Briefly, setting r = log n gives 2^r = n, so the time complexity simplifies to *(b/log n)(2n)*. <br>
For numbers in the range 0 to n^m, the number of bits is b = log(n^m) = m log n, <br>
so the expression further simplifies to *O(mn)*.
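
The trade-off can also be explored numerically. This Python sketch evaluates the cost model above for every r from 1 to b, using the hypothetical helpers `radix_cost` and `best_r`. Unlike the formula in the text, it rounds the number of passes up to ceil(b/r). That rounding is an assumption about how a partial final segment is handled.

```python
import math


def radix_cost(n, b, r):
    """Cost model from the text: (passes) * (2^r + n), with passes rounded up to ceil(b / r)."""
    passes = math.ceil(b / r)
    return passes * ((1 << r) + n)


def best_r(n, b=32):
    """Segment width r in [1, b] with the lowest modelled cost (ties go to the smaller r)."""
    return min(range(1, b + 1), key=lambda r: radix_cost(n, b, r))


for n in (1_000, 1_000_000):
    print(n, best_r(n), round(math.log2(n), 1))
# 1000 8 10.0
# 1000000 16 19.9
```

In both cases, the best r under this model is somewhat below log2 n, consistent with the rule of thumb above.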

## Notes
- Radix Sort doesn't compare elements against each other, which can make it faster than comparison-based sorting
  algorithms like QuickSort or MergeSort for large datasets with a small range of key values.
  - Useful for large sets of numeric data, especially if stability is important
  - Also works well for data that can be divided into segments of equal size, with the ordering between elements known

- Radix Sort's efficiency is closely tied to the number of digits in the largest element, so its performance
  might not be optimal on small datasets that include elements with significantly more digits than the
  others. This scenario introduces more sorting passes than needed, diminishing the algorithm's overall efficiency.
  - Avoid for datasets with sparse data

- Our implementation uses bit masking. If you are unsure how it works, do check
  [this](https://cheever.domains.swarthmore.edu/Ref/BinaryMath/NumSys.html) out.
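
As a quick illustration of the masking involved, this Python sketch uses a hypothetical `segments` helper. It assumes non-negative values and 8-bit segments over 32 bits. Each segment is shifted down to the lowest bits and then kept with the mask `0xFF`.

```python
def segments(x, segment_bits=8, total_bits=32):
    """Split a non-negative integer into segments, least significant first."""
    mask = (1 << segment_bits) - 1  # 0xFF for 8-bit segments
    return [(x >> shift) & mask for shift in range(0, total_bits, segment_bits)]


value = 0x12345678
parts = segments(value)
print([hex(p) for p in parts])  # ['0x78', '0x56', '0x34', '0x12']
# Shifting each segment back into place and OR-ing them restores the value.
restored = 0
for i, p in enumerate(parts):
    restored |= p << (8 * i)
print(restored == value)  # True
```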
