What happened to Linear hypernetwork Activation? #3761

-

|

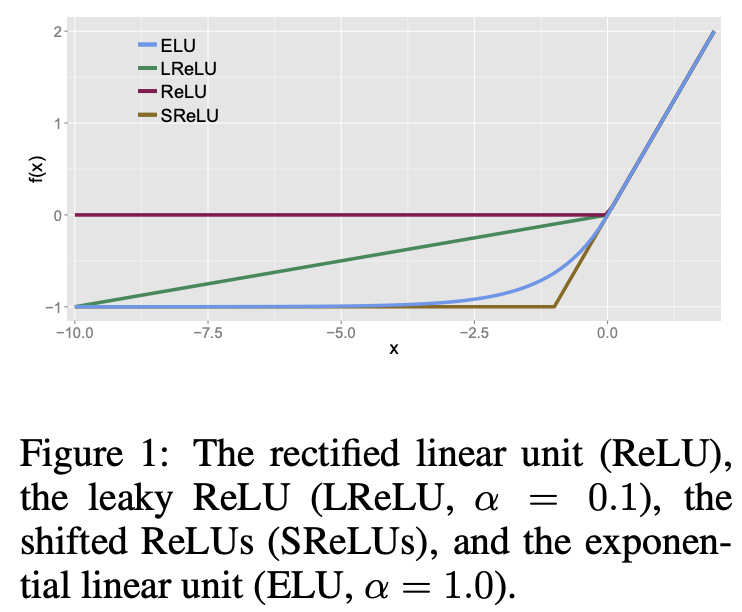

What happened to the Linear hypernetwork activation? There used to be Linear, relu, leakyrelu, etc., but now there are something like 28 different options. To put it simply: what settings should I choose to get the same results as before hypernetworks had these options, back when it was just like the embeddings tab and it was just linear? I am a simple person. I just want a simple one-click button; I miss that. Now I don't even know what to choose to train.

Beta Was this translation helpful? Give feedback.

Replies: 3 comments 1 reply

-

|

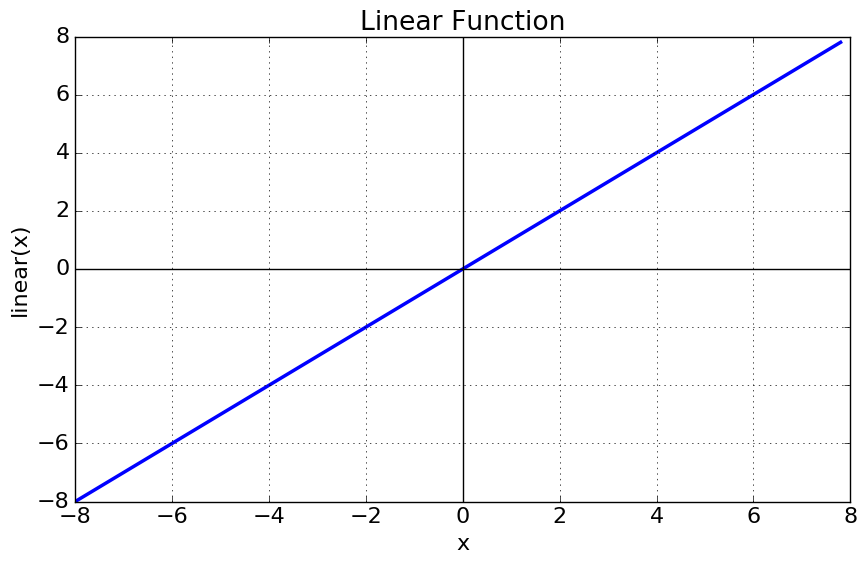

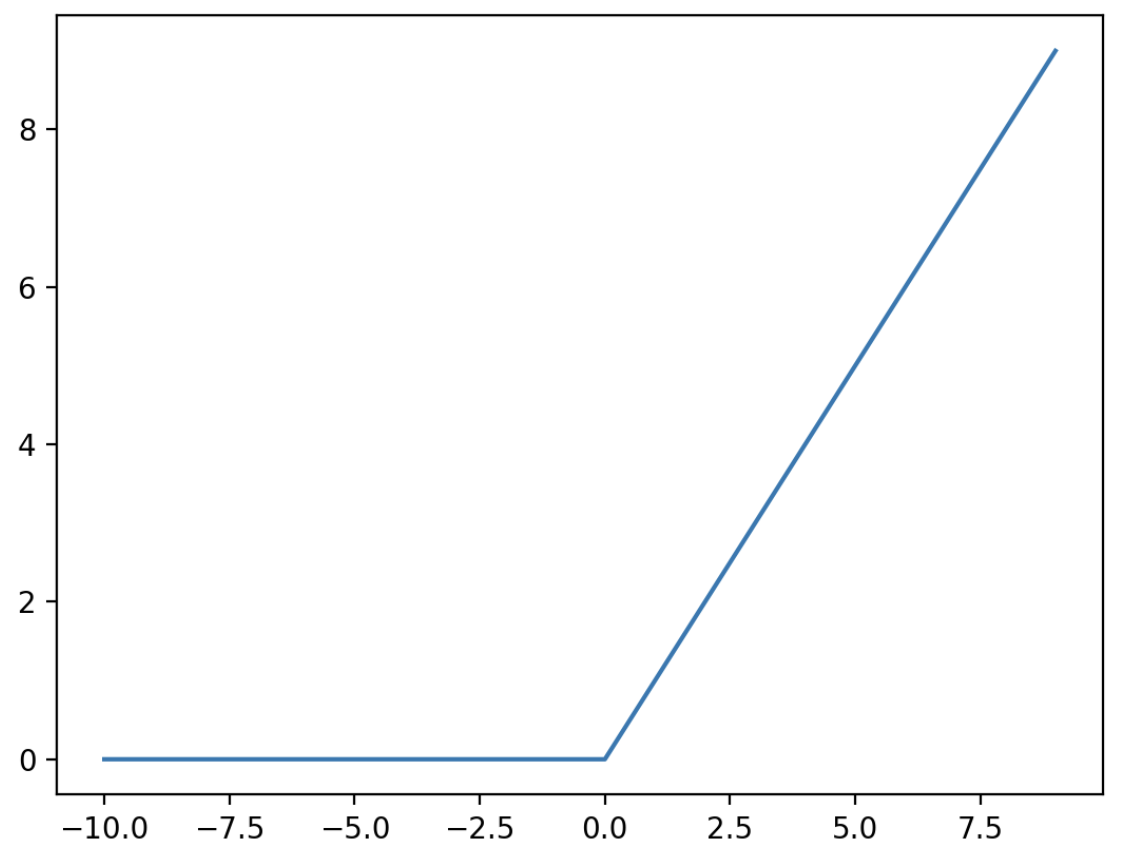

I'm trying to understand all that stuff right now, but I'm no programmer, and it turns out all those functions are math, and I'm no mathematician. So I don't really understand it, but it seems that ReLU behaves linearly (at least for positive inputs), so try it. Reference: https://en.wikipedia.org/wiki/Activation_function
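To make the difference concrete, here is a minimal pure-Python sketch (not the web UI's actual code) of an identity ("linear") activation next to ReLU and LeakyReLU. The function names are illustrative, and the 0.01 LeakyReLU slope is an assumption chosen to match PyTorch's default `negative_slope`. ReLU matches the linear activation only for inputs >= 0; negative inputs are clamped to zero.

```python
def linear(x):
    # Identity activation: the output equals the input everywhere
    return x

def relu(x):
    # ReLU: passes non-negative inputs through, zeroes out negatives
    return max(0.0, x)

def leaky_relu(x, negative_slope=0.01):
    # LeakyReLU: like ReLU, but negatives are scaled by a small slope instead of zeroed
    return x if x >= 0 else negative_slope * x

# Compare the three activations on a few sample inputs
for x in [-2.0, -0.5, 0.0, 0.5, 2.0]:
    print(f"x={x:+.1f}  linear={linear(x):+.3f}  relu={relu(x):+.3f}  leaky={leaky_relu(x):+.3f}")
```

On the positive side all three agree; they only differ in how they treat negative inputs.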

See #3717. However, if you are looking for a linear activation, just make your structure

Use RReLU if you want something close to linear. It's a version of LeakyReLU with a random negative slope.
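A minimal pure-Python sketch of RReLU, assuming the semantics of PyTorch's `torch.nn.RReLU` (defaults `lower=1/8`, `upper=1/3`): during training each negative input is scaled by a slope drawn uniformly from `[lower, upper]`, and at inference the fixed midpoint slope `(lower + upper) / 2` is used. The `rrelu` function here is hypothetical, written for illustration, and whether the web UI's RReLU option uses exactly these defaults is an assumption.

```python
import random

def rrelu(x, lower=1/8, upper=1/3, training=True, rng=random):
    """Randomized leaky ReLU for a single float (illustrative sketch)."""
    if x >= 0:
        # Positive side: identical to a linear (identity) activation
        return x
    if training:
        # Training: sample a fresh negative slope from U[lower, upper]
        slope = rng.uniform(lower, upper)
    else:
        # Inference: use the fixed midpoint slope (1/8 + 1/3) / 2 = 11/48
        slope = (lower + upper) / 2
    return slope * x

rng = random.Random(0)  # seeded so the sampled slopes are reproducible
print([round(rrelu(-1.0, rng=rng), 3) for _ in range(3)])  # random values between -1/3 and -1/8
print(round(rrelu(-4.8, training=False), 3))  # -1.1
print(rrelu(2.0))  # 2.0
```

Positive inputs pass through unchanged, so it is linear there; negative inputs are scaled by roughly 0.125 to 0.333 rather than zeroed, which places it between ReLU and a purely linear activation.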
