Replies: 5 comments 11 replies

---

I agree that changing Adam's hyperparameters can help training. But where did the factor of 10 come from?
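
The thread doesn't say where the factor of 10 came from. As background only, assume it is a constant multiplier $c > 0$ on the loss, and assume PyTorch-style Adam: learning rate $\eta$, bias-corrected moment estimates $\hat m_t$ and $\hat v_t$, and eps $\epsilon$ added after the square root. Scaling the loss scales every gradient by $c$, so $\hat m_t \to c\,\hat m_t$ and $\sqrt{\hat v_t} \to c\sqrt{\hat v_t}$:

$$
\Delta\theta_t = -\eta\,\frac{c\,\hat m_t}{c\sqrt{\hat v_t} + \epsilon}
= -\eta\,\frac{\hat m_t}{\sqrt{\hat v_t} + \epsilon / c}
\approx
\begin{cases}
-\eta\,\hat m_t / \sqrt{\hat v_t}, & \epsilon \ll c\sqrt{\hat v_t} \\
-(c\,\eta / \epsilon)\,\hat m_t, & \epsilon \gg c\sqrt{\hat v_t}
\end{cases}
$$

Under these assumptions, multiplying the loss by $c$ is the same as dividing eps by $c$. It behaves like a $c$-times larger learning rate only when eps dominates the denominator. With the default eps of 1e-8 it does almost nothing.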

---

Can we export

---

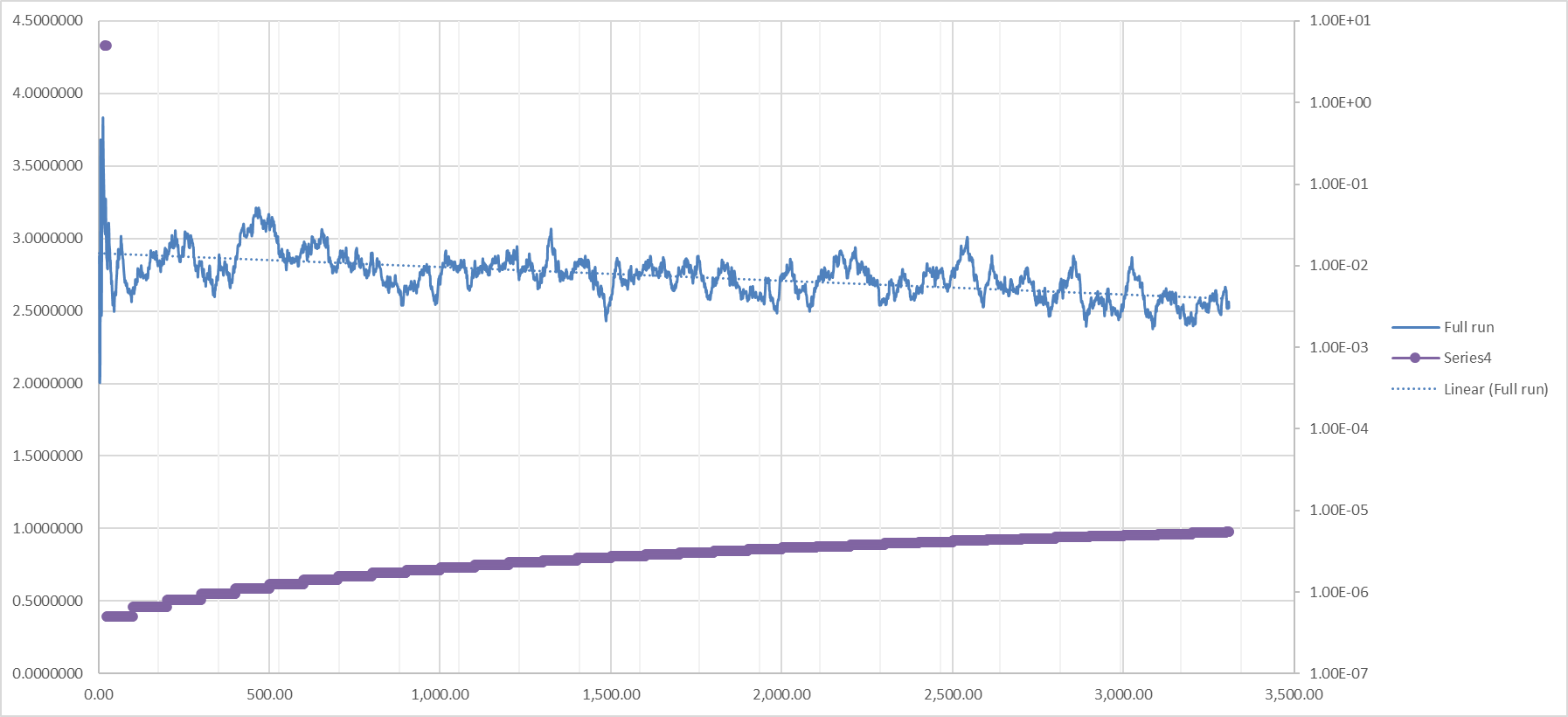

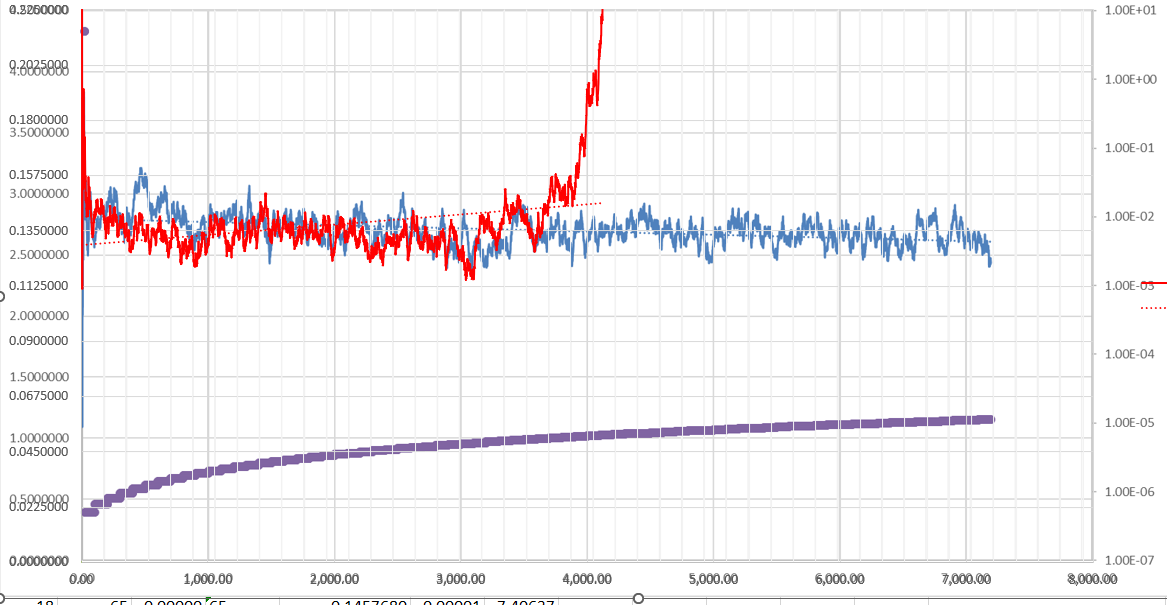

I finally found something really promising. Fair warning: I did it with `loss = loss * 20`, which, as discussed above, is equivalent to 20 times the default learning rate. The right axis shows the linearly increasing learning rate. In the end the loss just gradually decreases and converges, so the learning rate is finally high enough.
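
One way to check when "`loss * 20` equals 20 times the learning rate" holds is to replay the Adam update by hand. Below is a minimal pure-Python sketch. It assumes PyTorch-style Adam (eps added after the square root of the bias-corrected second moment) and a hypothetical sequence of small gradients. It is not the webui's training code. It compares the last update with and without scaling every gradient by 20, which is what multiplying the loss by 20 does to them.

```python
import math


def adam_updates(grads, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Replay plain Adam on one scalar parameter and return each step's update.

    eps is added after the square root of the bias-corrected second moment,
    the same placement torch.optim.Adam uses.
    """
    m = v = 0.0
    updates = []
    for t, g in enumerate(grads, start=1):
        m = beta1 * m + (1 - beta1) * g          # first moment (EMA of g)
        v = beta2 * v + (1 - beta2) * g * g      # second moment (EMA of g^2)
        m_hat = m / (1 - beta1 ** t)             # bias corrections
        v_hat = v / (1 - beta2 ** t)
        updates.append(lr * m_hat / (math.sqrt(v_hat) + eps))
    return updates


grads = [0.002, 0.001, 0.003, 0.0015]  # hypothetical small gradients
scale = 20                              # what loss = loss * 20 does to every gradient

ratios = {}
for eps in (1e-8, 1.0):
    base = adam_updates(grads, eps=eps)
    scaled = adam_updates([g * scale for g in grads], eps=eps)
    ratios[eps] = scaled[-1] / base[-1]
    print(f"eps={eps:g}: last update grew by a factor of {ratios[eps]:.2f}")
```

With eps=1e-8 the ratio stays at about 1, so the scaling changes almost nothing. With eps=1 it comes out a bit under 20, because sqrt(v_hat) is not completely negligible next to eps. The equivalence only holds while eps dominates the denominator.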

---

And I did find something, but now I have no frame of reference to say whether it is remarkable, since none of my previous attempts at making hypernetworks produced results I would consider good enough.

Result at step 1400 with 143 training pictures:

Closest training picture:

My guess is that if I continued from there with a much lower learning rate, I would get some details in and it would be nice, but:

---

eps=1 sounds crazy to me. Even 0.1 does. The default is 1e-8, and it should be a very small number, because the purpose of eps is really just to prevent division by zero: you get a very large number, but not infinity.
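
To make the role of eps concrete, here is a tiny pure-Python sketch of the normalized direction Adam applies, m_hat / (sqrt(v_hat) + eps). It assumes PyTorch-style eps placement. `adam_direction` is a hypothetical helper, not a library function. The sketch shows the division-by-zero guard and what a large eps does to a small gradient.

```python
import math


def adam_direction(m_hat, v_hat, eps):
    """Normalized Adam step direction m_hat / (sqrt(v_hat) + eps)."""
    return m_hat / (math.sqrt(v_hat) + eps)


# A parameter that has only ever seen zero gradients has m_hat = v_hat = 0.
try:
    adam_direction(0.0, 0.0, eps=0.0)
    zero_eps_raises = False
except ZeroDivisionError:  # plain Python floats raise; float tensors would give nan
    zero_eps_raises = True

guarded = adam_direction(0.0, 0.0, eps=1e-8)  # 0 / 1e-8 is 0.0, no error

# A small, steady gradient of 1e-3 (so m_hat = 1e-3 and v_hat = 1e-6).
tiny_eps = adam_direction(1e-3, 1e-6, eps=1e-8)  # about 1.0: sign-like step
big_eps = adam_direction(1e-3, 1e-6, eps=1.0)    # about 1e-3: roughly 1000x smaller

print("eps=0 raises ZeroDivisionError:", zero_eps_raises)
print("eps=1e-8 with zero history:", guarded)
print(f"eps=1e-8 direction: {tiny_eps:.6f}")
print(f"eps=1    direction: {big_eps:.6f}")
```

With eps=1 the step becomes roughly proportional to the raw gradient, which is close to plain SGD with momentum. That loses Adam's per-parameter normalization, which is why the default eps is tiny.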

---

I was absolutely wrong about everything here, but I found the real issue in the newer thread.
