Replies: 10 comments 34 replies

-

|

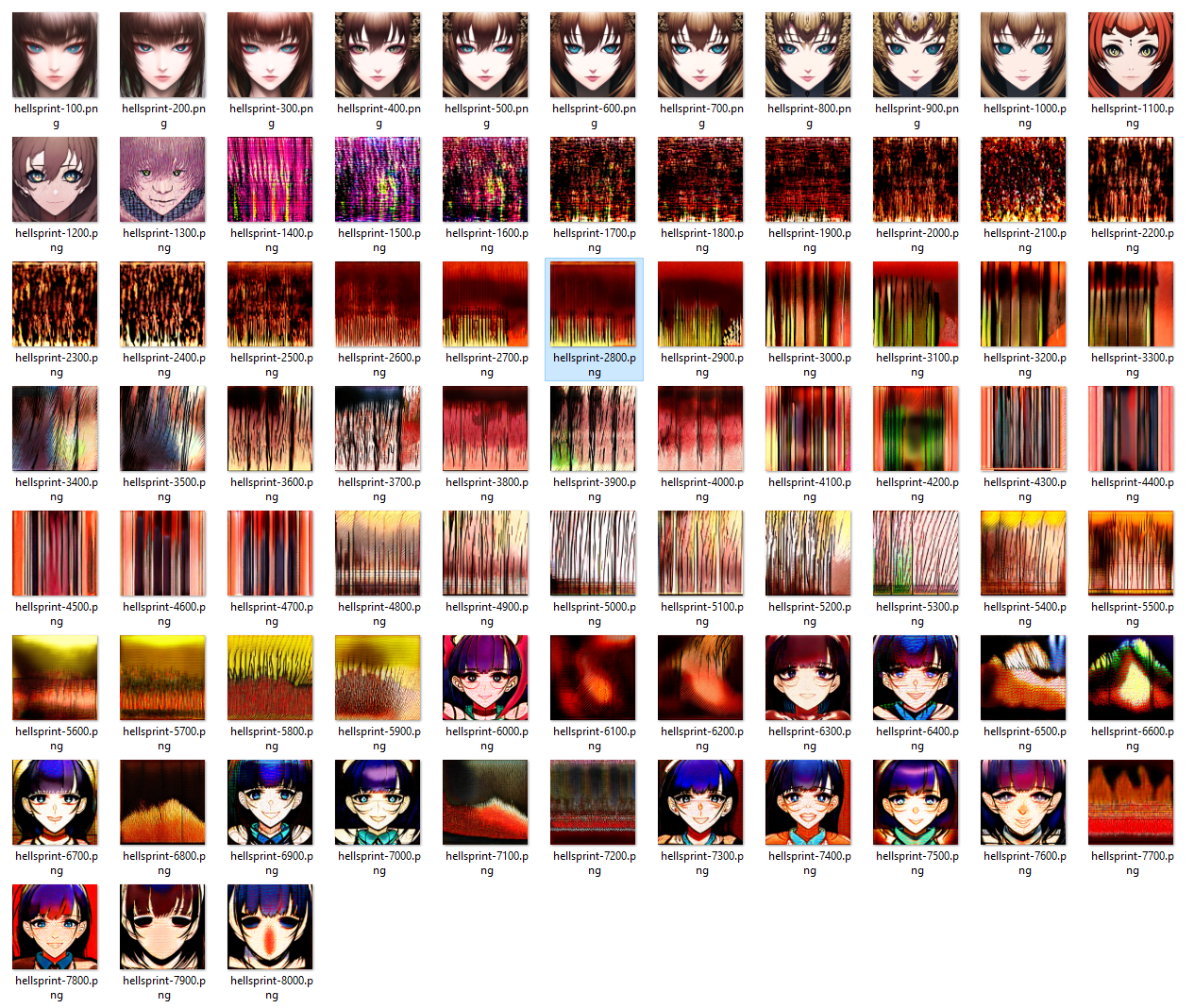

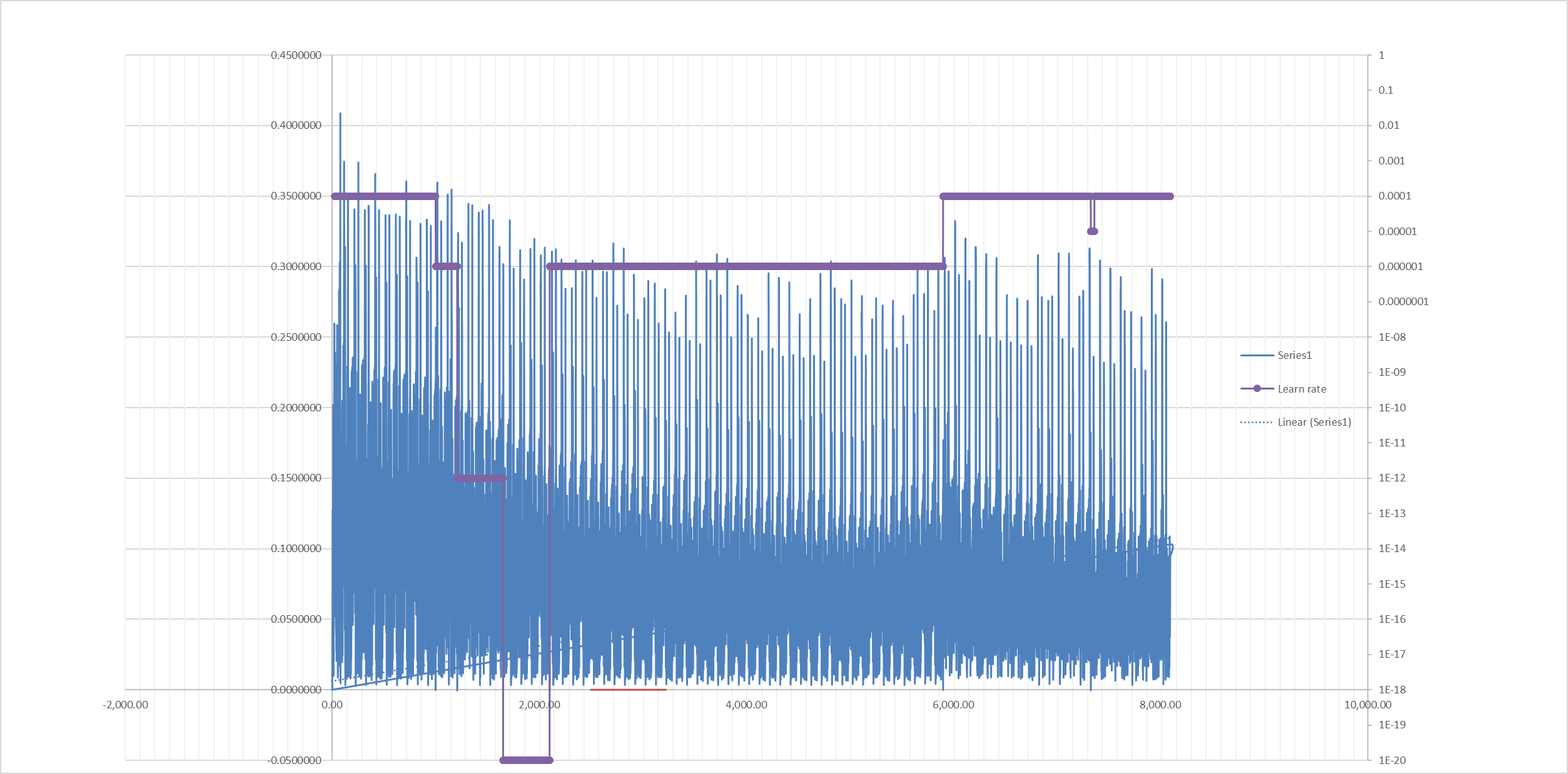

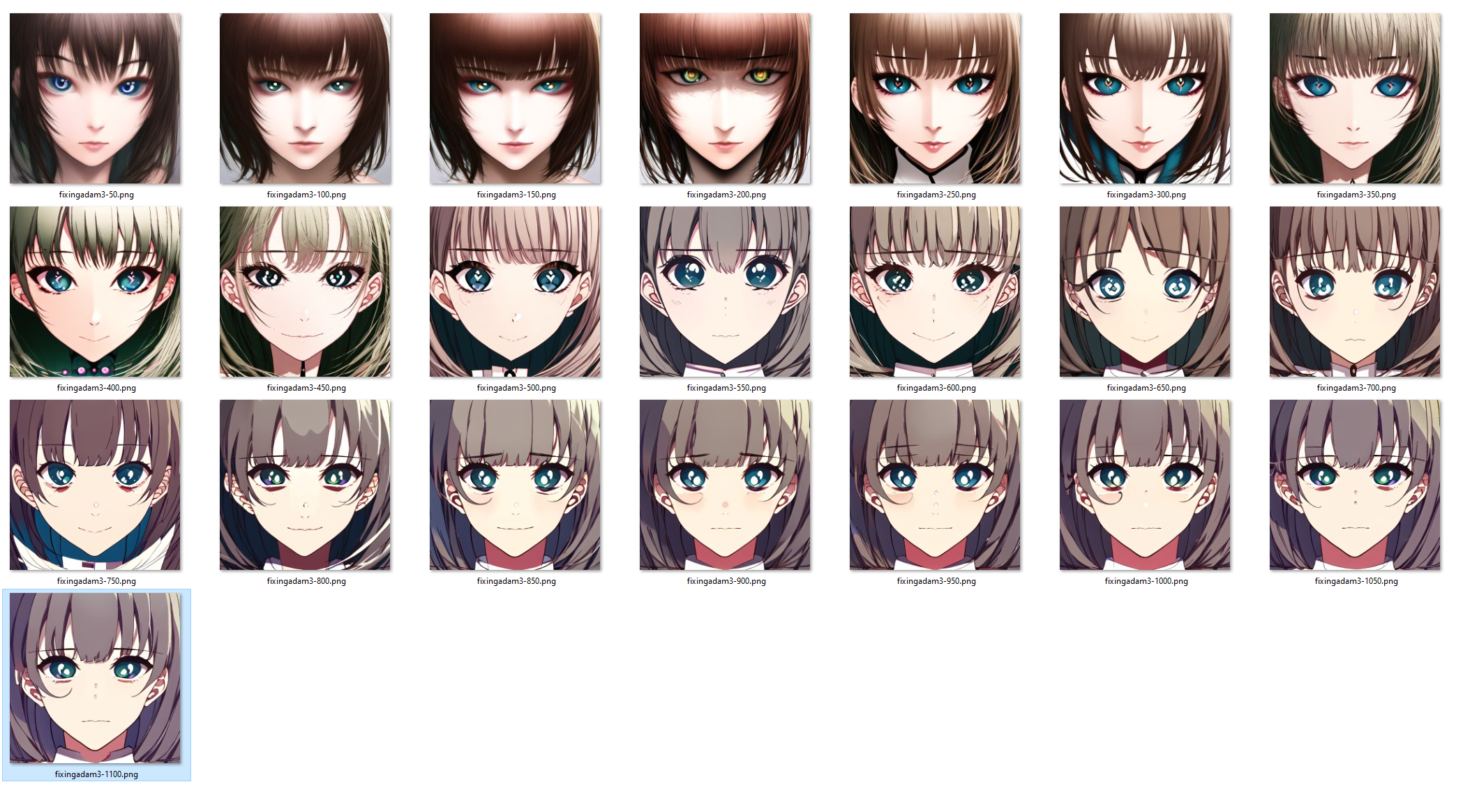

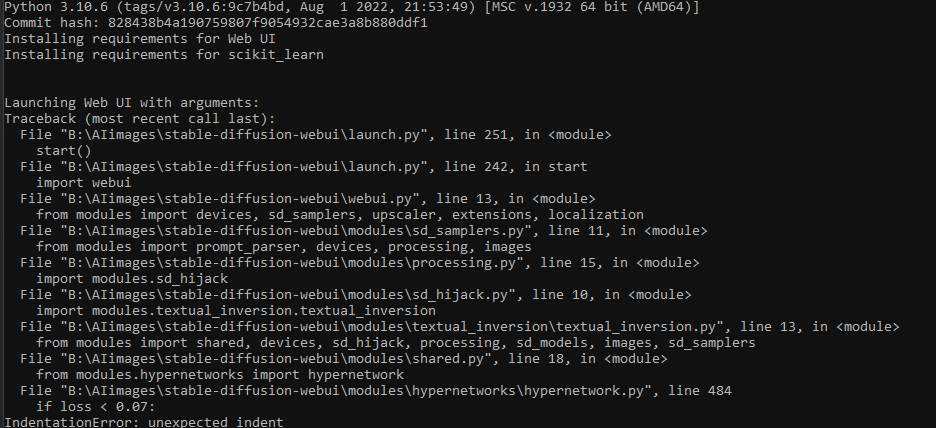

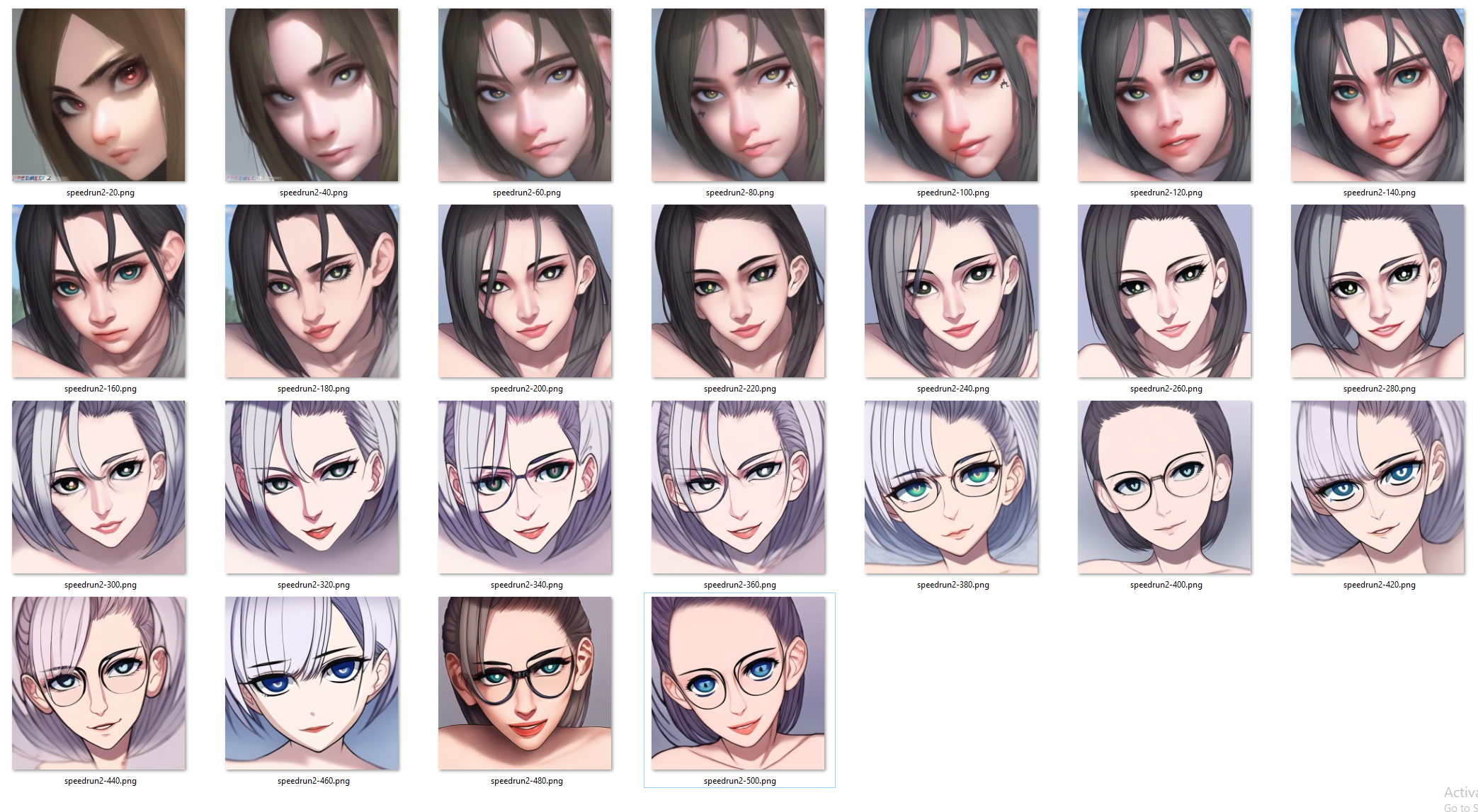

And the sequel is already here: I was happy because there is a face at 48800. But then I died in hell because my loss shot up. See? Overfitting and gradient explosions are two different things that both result in pretty colors. |


-

|

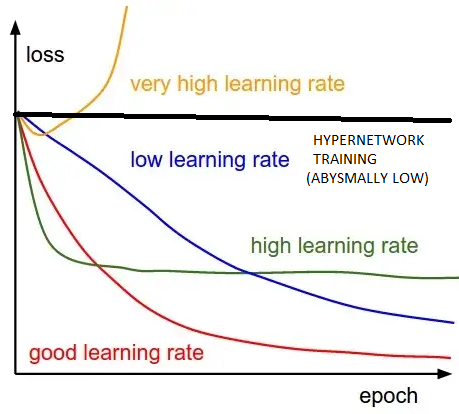

And I got out of hell: I'm not going to wait for it to stabilize and show perfect pictures again, because the optimizer is still very subpar for this. But you can see that you can get back from blobs to actual shapes: the weights are normalizing and overfitting becomes less severe, to the point where you start seeing things again. This also confirmed what I thought about how this works and how current training worked, and why training at a high learning rate was better. Setting a low learning rate (1e-20 on my graph) gives the algorithm a lot of room to approach the global minimum and get closer to the training points, which creates much worse overfitting (the hell pictures). Setting the learning rate high makes it less likely to move closer to the data points, which is why I got a picture again at 6000 after I raised the learning rate to 1e-4. At 7400 I removed the optimizer data to make it harder for the algorithm to find a result closer to the training points, and it probably helped. Next I will try to play around with the beta parameters of Adam to remove or even reverse the learning rate decrease it does when it is stuck, because this makes it more likely to move and destroy the normalization. |


-

|

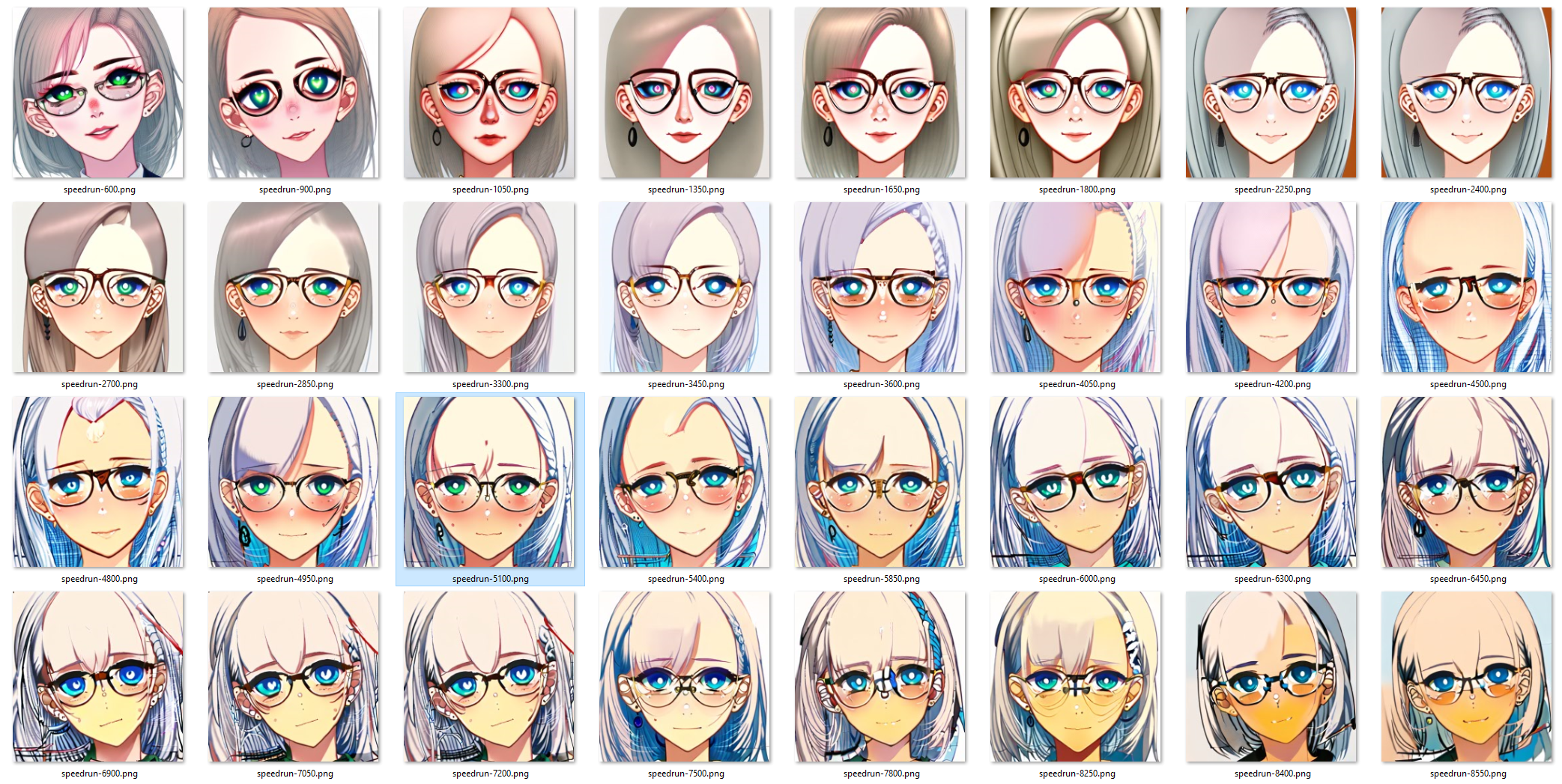

I did what I said and changed the optimizer to:

(EPS is probably not even necessary, a weight decay of 0.1 is nice, and beta is the default beta.) And I am completely blown away by the results. It finally works quickly and doesn't go into overfitting. I probably need to fine-tune it a bit from here, but this is exactly what was lacking in the training. |
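The optimizer snippet from this comment did not survive, so the following is not the author's code. It is only a minimal pure-Python sketch of what one AdamW update does to a single scalar weight, assuming the commonly used default betas of (0.9, 0.999) and the weight decay of 0.1 mentioned above. The function name `adamw_step` and the learning rate are illustrative assumptions.

```python
import math

def adamw_step(w, grad, m, v, t, lr=1e-3, betas=(0.9, 0.999),
               eps=1e-8, weight_decay=0.1):
    """One AdamW update for a single scalar weight; returns (w, m, v).

    Hypothetical helper for illustration only; lr is an assumed value.
    """
    beta1, beta2 = betas
    # Decoupled weight decay: shrink the weight directly, independent of the gradient.
    w = w * (1.0 - lr * weight_decay)
    # Exponential moving averages of the gradient and the squared gradient.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    # Bias correction for the zero-initialised averages (t starts at 1).
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    # Adaptive gradient step.
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v
```

The point of the sketch is the first line of the update: the decay term pulls the weight toward zero on every step regardless of the gradient, which is the normalizing pressure discussed in this thread.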


-

|

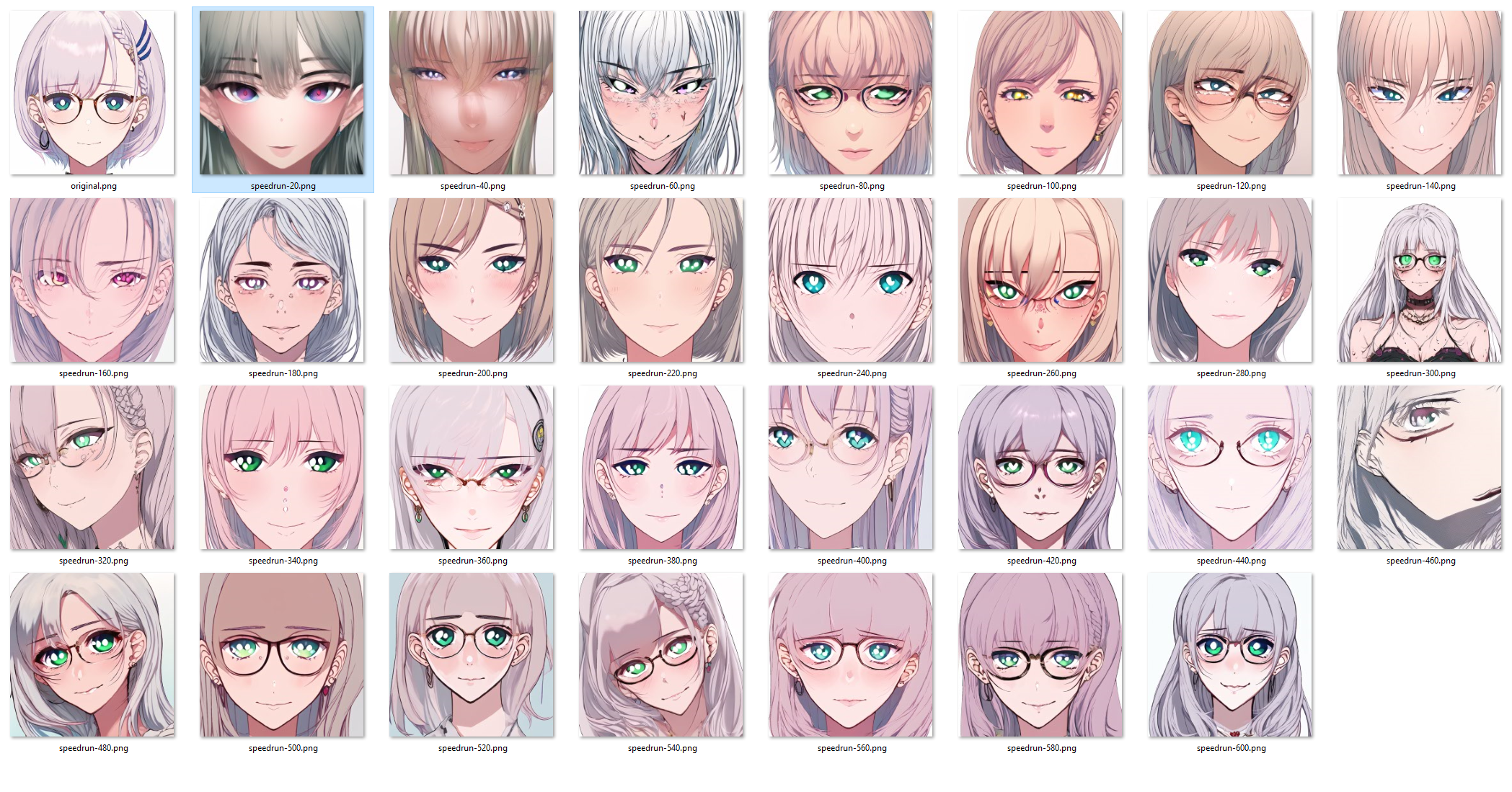

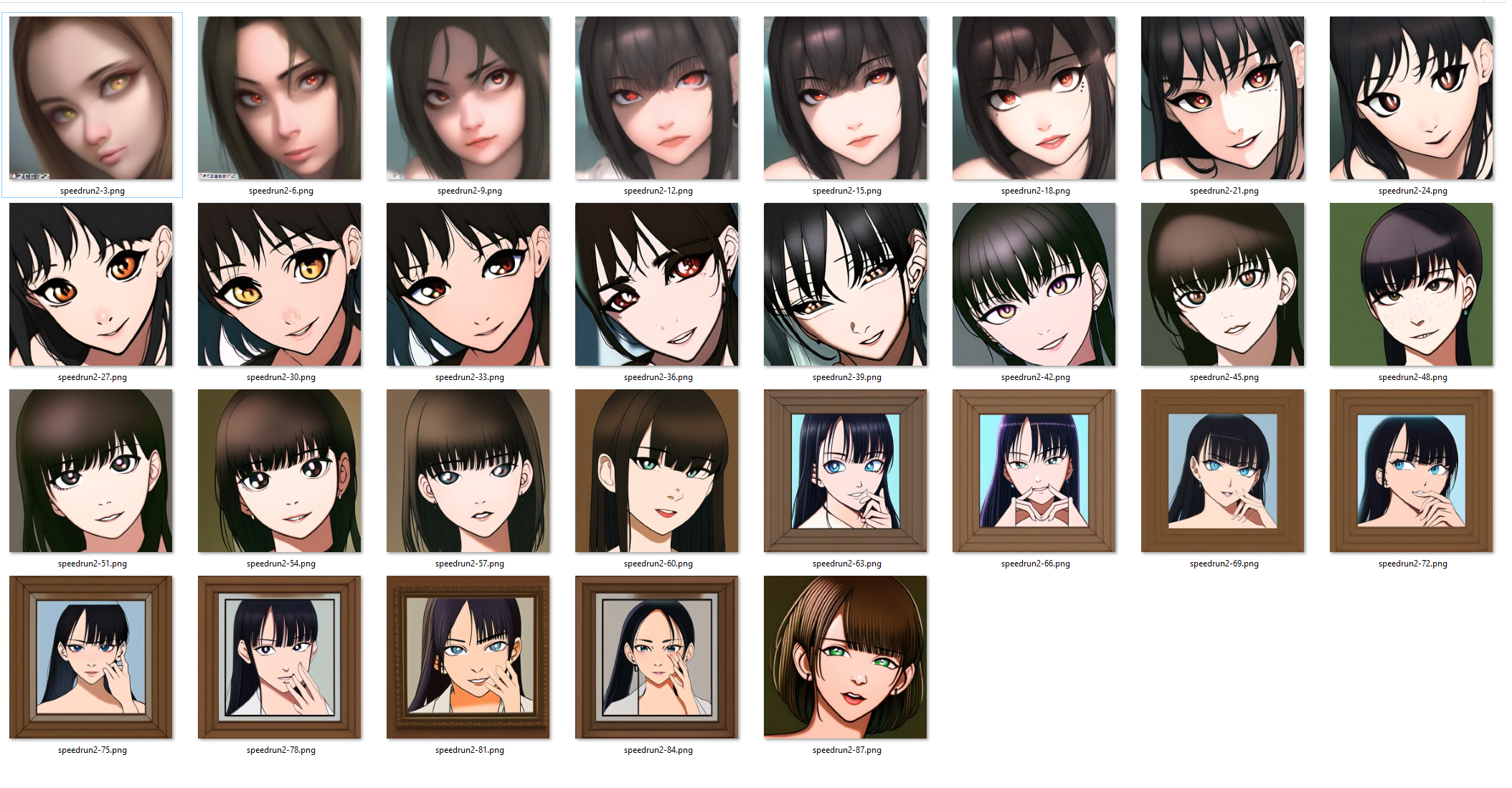

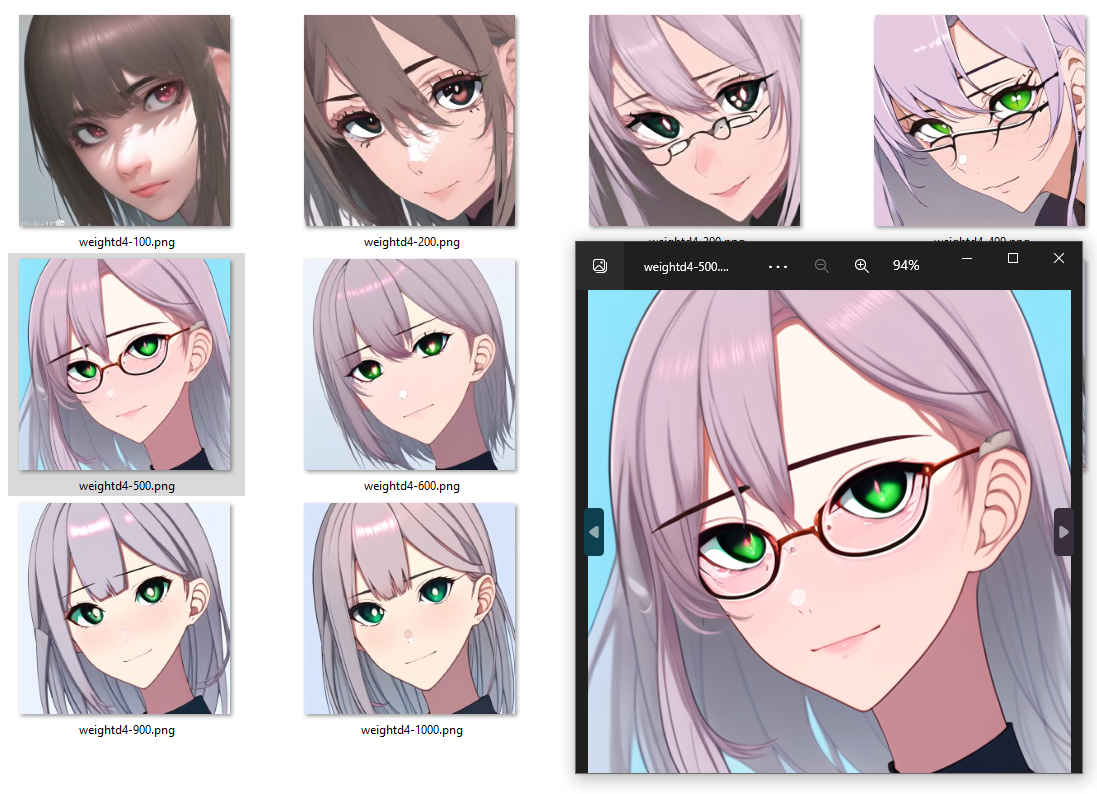

Here is a one-picture, no-tag speedrun. Can we agree that this works? (Actually, one-picture learning is more complex than I thought...) |


-


-

|

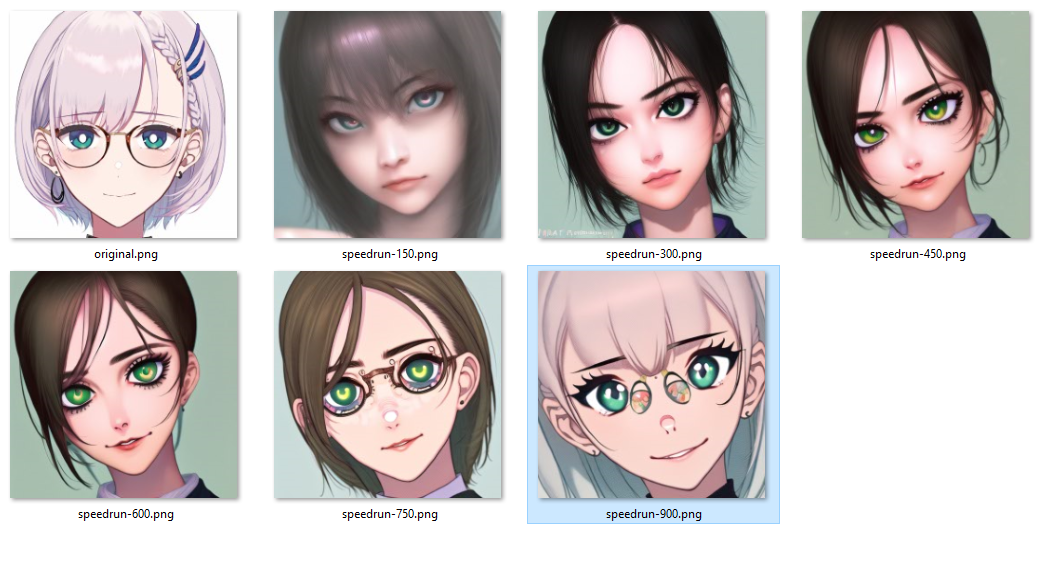

Loss clamping creates a new kind of death: death by oversimplification. On the other hand, if you go the reverse route and decrease the weight decay to 0.0001 with loss clamping: OK, I got nothing. I was hoping that with a small weight decay I would get the inverse of current training, where you go into overfitting and then hit eject before your network simplifies to nothing. But I have no idea what this is. I broke something. Some of this is nice, and it is only 50 steps. Possibly my patchwork implementation is wrong. Maybe it should be forced to do some steps so it doesn't kill itself like this. Maybe more playing around with weight decay and this limit, plus maybe adding dropout? I am going to take a break because this is too complex for me. |


-

|

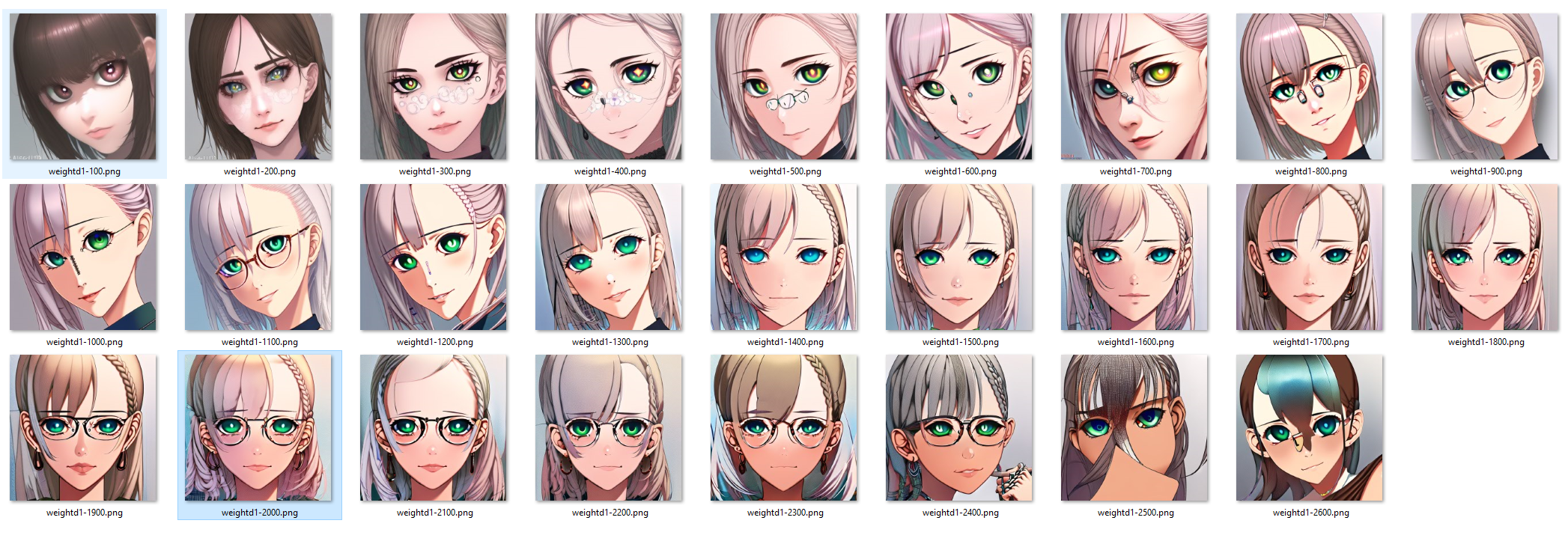

Posting my results here. I'm not super into hypernetworks (because they take so long to train) so I don't have much to compare this to quality-wise, but this seems like a big win for quick hypernetwork training. Here's what I did:

IMO it looks best after 1 epoch. |


-

|

I have no idea what any of this means, but I'm glad there are anime math nerds doing the good work so we dirt-eating artists can make better trainings :D |


-

|

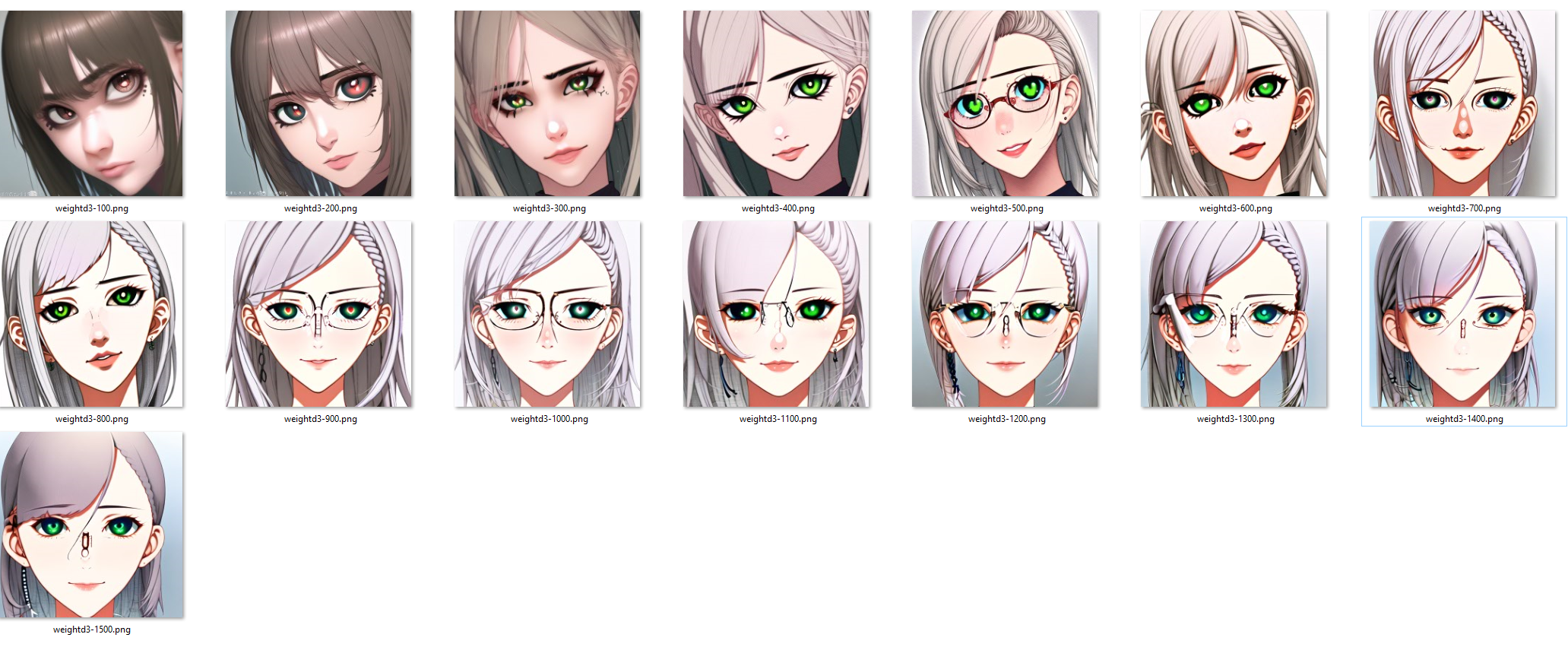

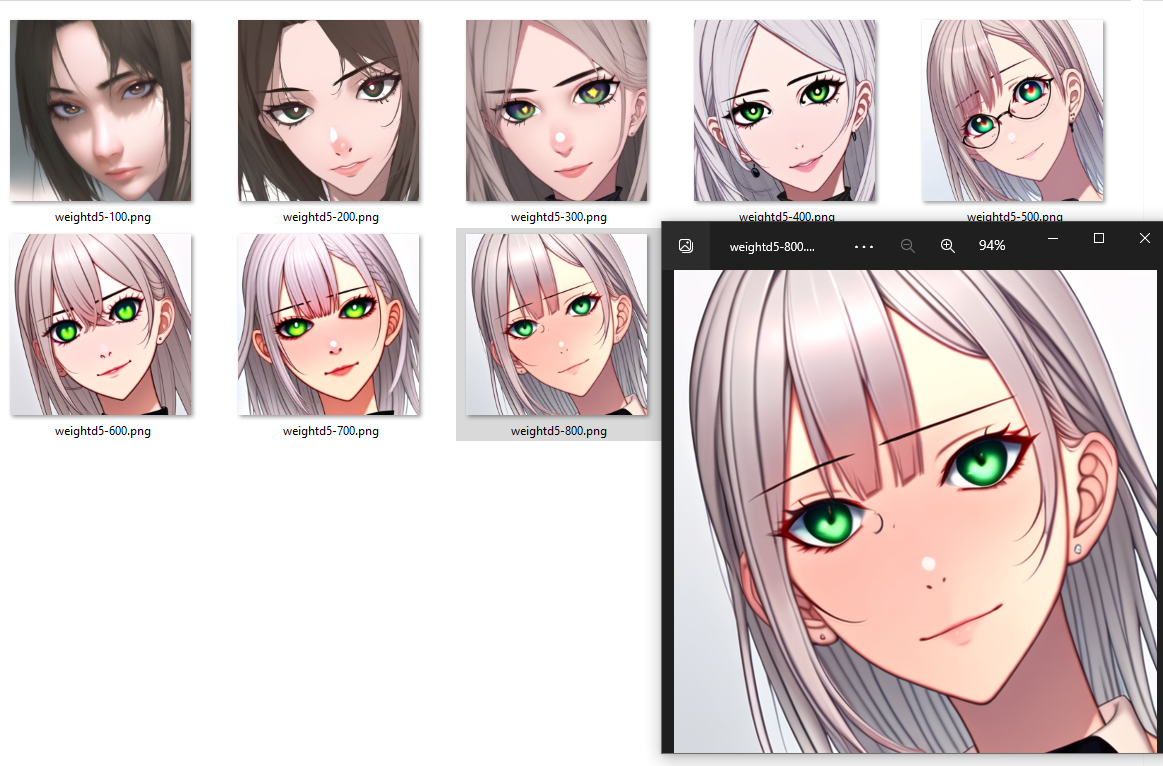

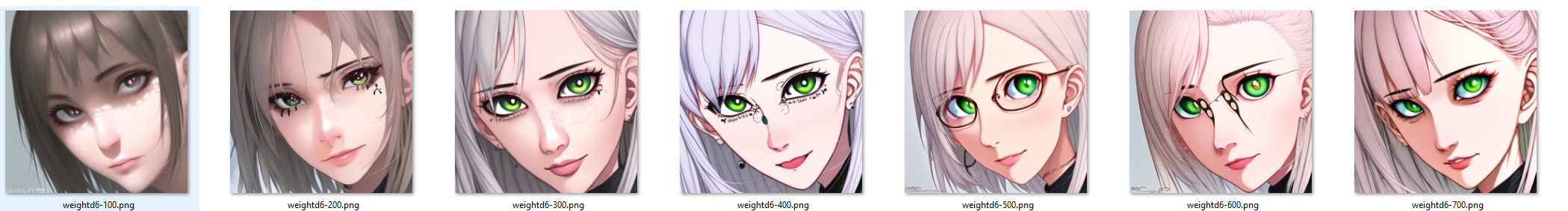

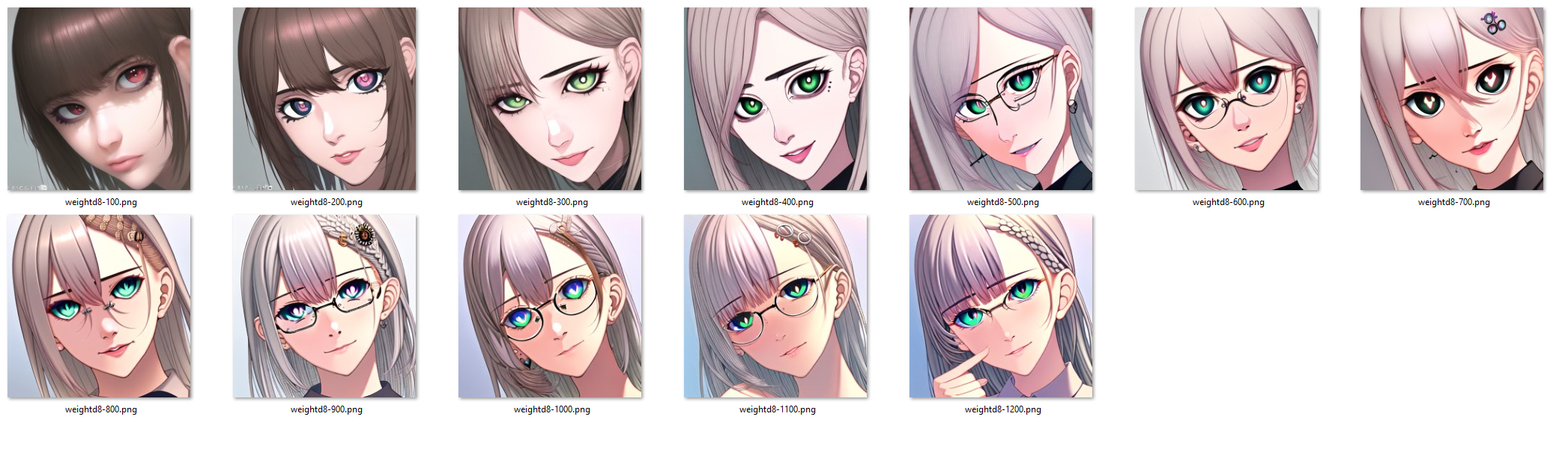

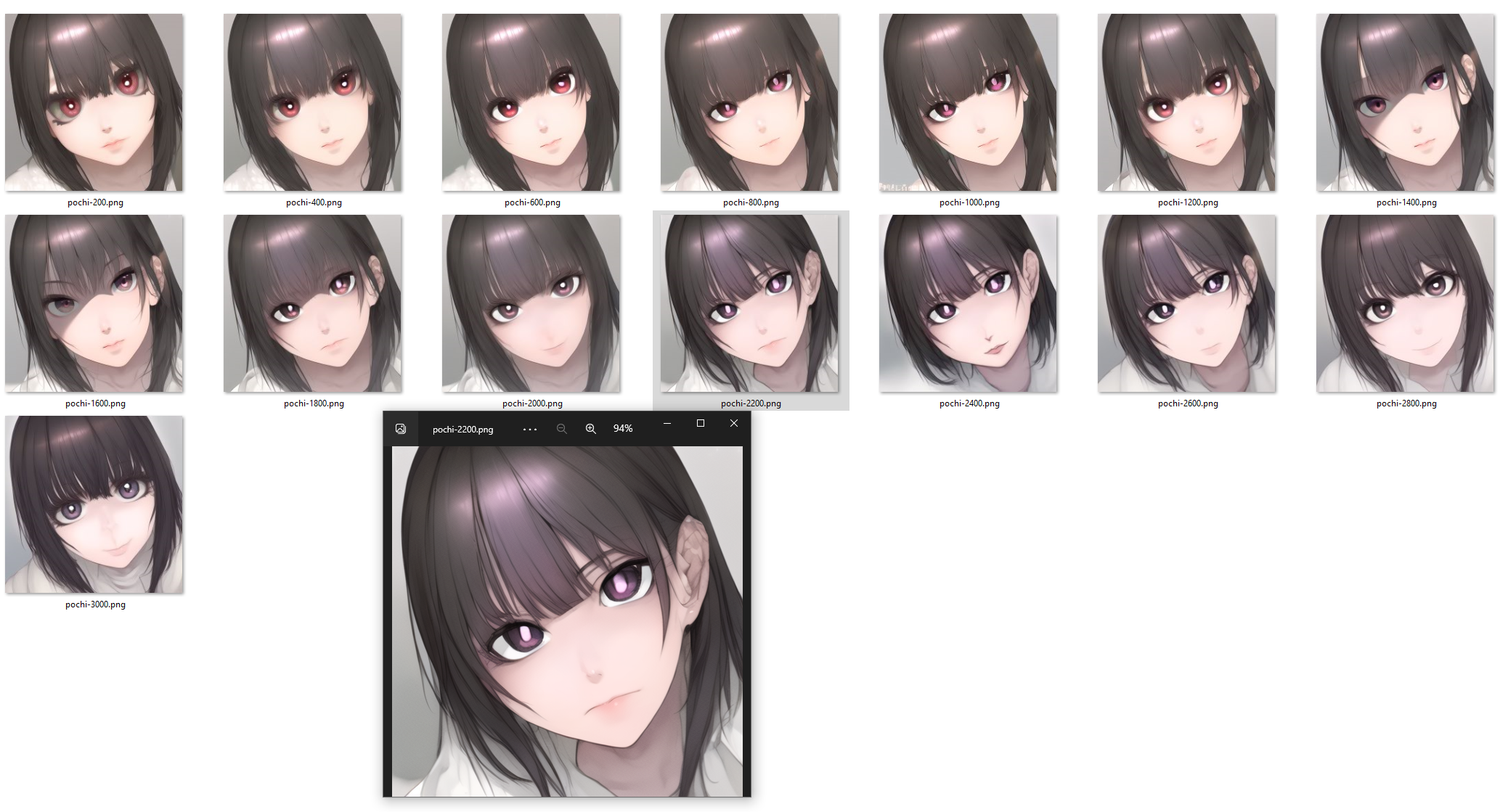

I need to stop working on this but I can't... Here is how weight decay with loss clamp works (once again with that 1 face picture as the data set, without tags):

- Weight decay 0.1
- Weight decay 0.2
- Weight decay 0.4 (I was expecting it to be too big already, but it is still kind of good and probably better than 0.2)
- Weight decay 2 (I really wanted to illustrate what happens when it is too big, but I can't... It is still kind of good, but I think it is starting to show)
- Weight decay 10 (I was about to say that finally it is too big, but then step 500 happened and it only kept getting better)
- Weight decay 200 (I just don't know anymore... I mean, I was thinking 0.1 - 1 would be the optimal range for this.)
- Weight decay 9001 (could have stopped at step 500)
- Weight decay 1e20 (yes, actually that big)

Actually, I got what I wanted 11 hours ago in the post "death by simplification": I simply wrote the loss clamp incorrectly and it never ran a backward pass on the loss. What you see there is pure normalization from the initial weights (at least I think so; I could be wrong, but it looks like it to me).
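To make the "pure normalization" idea concrete, here is a small sketch of what happens to one weight when the gradient term is always zero and only decoupled weight decay acts. It assumes the optimizer still applies its decay step when no useful gradient arrives, which may not match the run described here. The name `decay_only` and the learning rate are hypothetical.

```python
def decay_only(w0, steps, lr=1e-3, weight_decay=0.1):
    """Weight after `steps` updates when the gradient term is always zero.

    Illustrative assumption: each step multiplies the weight by (1 - lr * weight_decay).
    """
    w = w0
    for _ in range(steps):
        w *= 1.0 - lr * weight_decay  # only the decoupled decay term acts
    return w
```

Under that assumption the weight follows w0 * (1 - lr * weight_decay) ** steps, so with these values it shrinks geometrically toward zero without ever looking at the data.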

I should also specify that while the same trend will carry over to all hypernetwork trainings, the optimal value for weight decay will probably be different each time, depending on the size of the network, the number of pictures, noise, etc. Refer back to the article and the section that starts with:

|


-

|

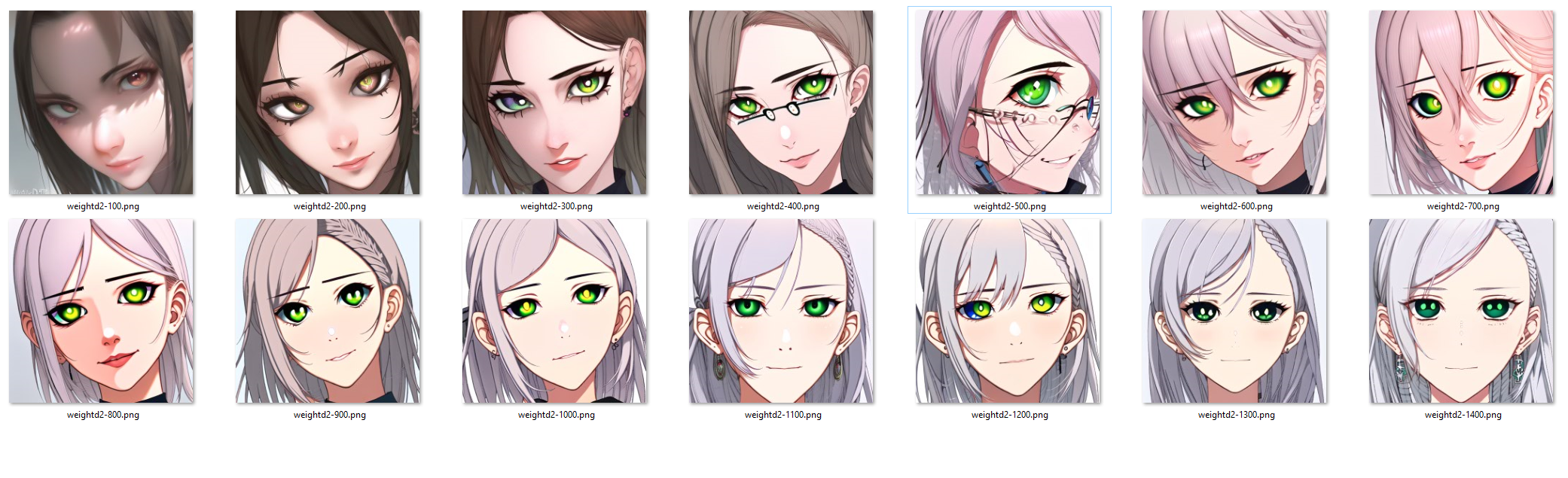

548 untagged images with mirrors (because why not). 1, 1.5, 1.5, 1.5, 1.5, 1, softsign, weight decay 2. The only problem I have with those is that the colors are bland, but I guess my prompt was also bland and nondescript. The curious thing is that it exploded shortly after that, and that was a gradient explosion and not overfitting (even when I added gradient clipping), which could be because of a weight decay above 1. |
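For readers unfamiliar with gradient clipping, the following is a minimal pure-Python sketch of clipping by global L2 norm. It is not the clipping code used in this run; the function name `clip_by_global_norm` and the flat-list representation of gradients are assumptions made for illustration.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Scale a flat list of gradient values so their L2 norm is at most max_norm.

    Returns (clipped_grads, original_norm). Hypothetical helper for illustration.
    """
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm or total == 0.0:
        # Already within the limit: return an unchanged copy.
        return list(grads), total
    scale = max_norm / total
    return [g * scale for g in grads], total
```

Logging the returned original norm is one way to tell a gradient explosion (the norm spikes) apart from overfitting (the norm stays moderate while previews degrade). Clipping only bounds the gradient, so it does not limit the separate weight decay term.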



-

#2670 (comment)

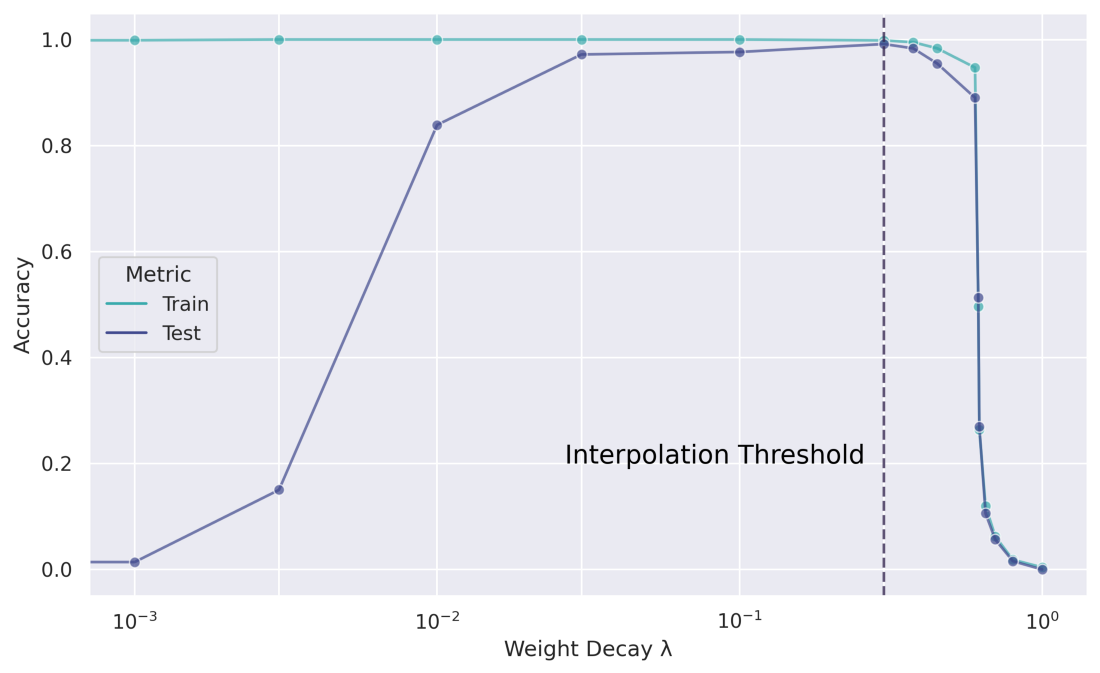

I started the discussion there, but it is a bit chaotic as I was still trying to figure it out. Now I have a much firmer grasp and I will demonstrate everything on a run I am doing right now. First of all, mandatory reading I posted in that thread: https://towardsdatascience.com/weight-decay-and-its-peculiar-effects-66e0aee3e7b8

What is described there is happening during hypernetwork training, but it is happening very poorly, and it is clear to me that the results are very random, just because of how AdamW is unintentionally mishandling the training, which may be very specific to how training works right now. There was some truth to what I wrote, but I needed to do more runs and I still need to do more. I will rewrite it again after I get the result I want.

For now the most important takeaways I got from sitting on this:

You can both undertrain and overtrain a network. Overtraining will happen quickly with a complex network, the default weight decay, and a small picture dataset (20). Undertraining will happen quickly with a simple network, a high weight decay, and a big picture dataset. That is what was happening in my trainings: too big a dataset (150 or so) for too small a network, even when I was sometimes using 300 MB.

An alternative way of training to the one posted in the main hypernetwork style training thread, which other people and I got working:

1. Make a picture or two in the middle of the first epoch.
2. Run training for up to 3000-5000 steps (the whole point of this method is that it is quick and should give fairly nice results) with whatever learning rate you want. It doesn't matter that much, to be honest, as long as it is slightly below the rate at which you see the loss increase from the start.
3. See if you like the first pictures from the middle of the first epoch more. If you do, then you need to increase the size of the network further, but that may make the training take longer.

Training this way should never lead to overcooking. What you should see is that at some point the training loss will start to increase. But before that happens you will probably see the quality of the previews drop, so you need to stop before the quality of the pictures starts to decay.
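As a rough aid for spotting the point where the training loss turns upward, here is a minimal sketch that flags the first step at which a moving average of the loss rises above the best average seen so far. The function name `first_rising_step` and the window size are illustrative assumptions, not part of the method above.

```python
def first_rising_step(losses, window=3):
    """Return the index where the moving-average loss first rises above
    its best value so far, or None if it never does.

    Hypothetical helper; `losses` is a list of per-step training losses.
    """
    best = float("inf")
    for i in range(window, len(losses) + 1):
        avg = sum(losses[i - window:i]) / window
        if avg > best:
            return i - 1  # index of the last loss in the window that rose
        best = min(best, avg)
    return None
```

Since the previews are expected to degrade before the loss rises, the returned step is best read as a latest possible stopping point. The previews remain the real signal for when to stop.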

All of the above is directly tied to the weight norm of the parameters, that is, how smooth the functions of your network are. So what I said before this rewrite about it being useful still stands. It would be great to be able to see it during training, as it tells you whether the network is headed for simplification or not.
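A minimal sketch of the quantity in question, assuming the network weights are available as plain lists of numbers (one flat list per layer). The name `weight_norm` is hypothetical, and a real training loop would read the values from the framework's parameter tensors instead.

```python
import math

def weight_norm(layers):
    """L2 norm over every value in a list of flat per-layer weight lists."""
    return math.sqrt(sum(w * w for layer in layers for w in layer))
```

Logging this number every few hundred steps would show whether it is shrinking (the network heading toward simplification) or growing (heading toward overfitting), which is the trend described above.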

I want to believe that neither this method nor the one in the original hypernetwork styles thread is the absolute best, and I am going to keep trying to get the best method I have in mind to work.
