Photography Best Practices for SD #5305

Replies: 4 comments 4 replies

-

|

These are valid points from an artistic perspective for sure. However, stable diffusion is not, in any way, an intelligent system. It has a certain "understanding" of how words relate to images and a very clever way of converting that into visible results. Problem is, due to technical reasons, the way it was originally trained was an enormous pile of initially 95% garbage images, which were then cropped to squares without any subject centering. So most standard 2:3, 3:4 or even 9:16 ratio portraits got top of the head cut off. Hence all that crappy framing, lacking lighting and other issues (stock image watermarks, yay!) Edit: A little more info on this. You can see and search some (12096835 million to be exact (at the moment of writing)) of the images (or type of, who knows what they filtered and how) that were used to train the system here: https://laion-aesthetic.datasette.io/laion-aesthetic-6pls/images It seems like the most realistic way of getting decent results is relying on custom trained models. Other than that, filtering in good examples and filtering out bad ones with keywords can help. But it is not a completely reliable solution. Edit: The way you word it, sounds like a 3D system that arranges content based on keywords then letting you light and frame it nicely. That may be possible in the future (Nvidia has some early examples of single objects being generated that way), but for now that level of control seems a good distance away. |

Beta Was this translation helpful? Give feedback.

-

|

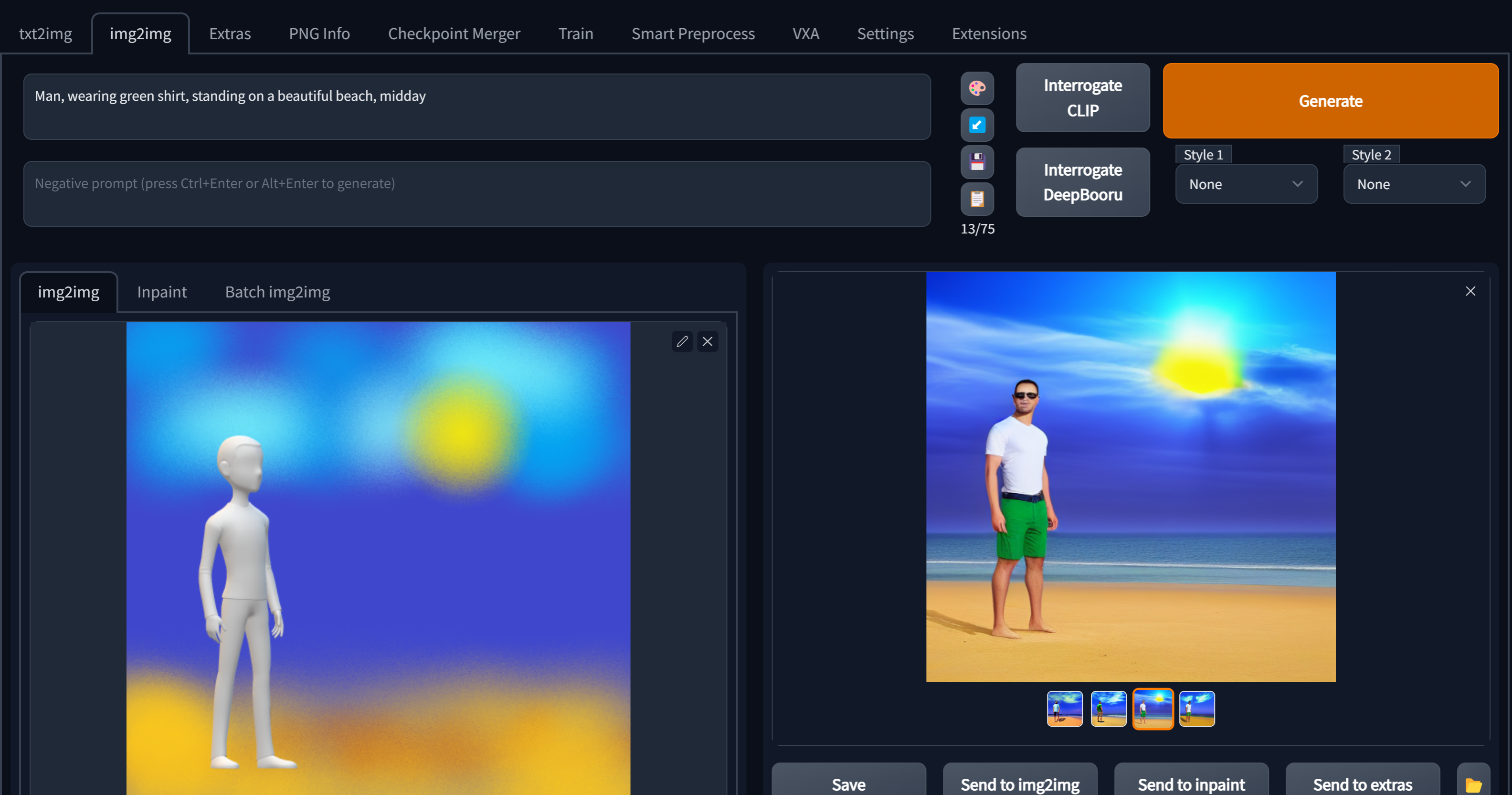

For proper placement exactly as you want it, something like img2img with the inpainting model or depth masks or something would make sense. Like draw a blob somewhere on a white canvas and then run that through img2img, telling it what the blob is supposed to be |

Beta Was this translation helpful? Give feedback.

-

|

What you want is precision and control in general. It's a holy grail of image generation, and just like the holy grail it might be unreachable by using text to image alone. Not just because the model doesn't understand the natural language well - I'm sure it will improve greatly, but because treating the model in a functional way (send words - receive image) is inherently limited in explaining artistic intent. Even if you put a real human on the other side, who will never analyze requests or give feedback, just blindly following them. Text to image alone is more of a toy, it's not well suited to getting things done. "Prompt engineering" is a gimmick - it scales poorly, and explaining your intent with higher order tools than text is faster and more convenient than trying to write Finnish in a way that would yield coherent Chinese poems after Google Translate. Which is similar to what txt2img does. TL;DR: one sketch for img2img is worth a thousand tokens. One depth guide is worth ten thousand. Now, the closest thing to what you want that you can do right now, is to use the 2.0's depth2img feature. Use any 3D software to quickly setup the rough geometry with directional lighting. Use free assets, there are plenty of royalty-free human rigs etc. Don't bother with details, anatomy, haircuts, physically correct rendering or anything like that - the model will deal with all that, it just needs a rough guide. Then feed the draft render and/or a depth buffer of your 3D scene into the model as a guide for your prompts. To get the style you want, use any of the available training techniques on your reference pictures, not clever prompt tricks as they quickly become too complex to manage if you want control. Reference images can be the output of SD as well! Textual inversion is worth a shot, as despite 2.0 prompt understanding being possibly gimped, all the training images are still there for your styling needs (sans NSFW, it requires Dreambooth and alike), so you'll be able to train your embedding easily. Thinking of it more, image gen needs some kind of a hybrid 2D/3D software toolkit software with a pipeline like this:

The model can possibly be further combined with something like this for temporal stability, making animation possible. Using tagged geometry as in the aforementioned paper, one could semantically tag the specific objects or areas of the 3D scene to better guide the model ("this is a middle-aged man", "this is a house roof", "this is a Volvo semi", "this is a mirror" etc). This would make complex scenes possible without overloading the textual input. I imagine one would need to train a new model from scratch with such use in mind. Such software would revolutionize content creation, just like other "unconventional" tools like ZBrush revolutionized their respective areas. |

Beta Was this translation helpful? Give feedback.

-

|

I guess the simple thing is: Stable diffusion is a magic box of math that you throw words into and get images out of. It doesn't have an understanding of what things are, but it knows what words are close in meaning and what images they might represent. And then it knows how to take some gaussian noise, some parameters, and a prompt to somehow make an at-least-somewhat-coherent image. |

Beta Was this translation helpful? Give feedback.

Uh oh!

There was an error while loading. Please reload this page.

-

One thing I noticed about SD output is that it's truly missing some of the simple things that make photography really great. Two of them are framing and lighting.

For example, just telling SD to put one or both eyes on the top third of the frame would significantly improve quality of output. Much of the time, we've got bodies with heads cut off at the neck. This would eliminate that problem completely for all "shapes" (human, animal, etc.) that have eyes. It would be much more complicated to add in some rules about rendering the frame around the subject where the frame would bisect their joints (shoulders and knees are common locations for this).

When rendering subjects that don't have eyes, where you want the focus of the piece to draw attention to the subject, your subject's center would be placed at a bottom third, right third or left third. This is best when the subject is small such as the nugget of gold in a piece of rock, the sunset, or a mouse. There may be times where the subject would be best at the top third such as a moon or sun in the sky.

Another good way to increase the control (and thus quality) of output is to place lighting such as the sun. If there were parameters to control sun altitude (100 would be high noon overhead sun, 10 would be sunset at the horizon and 5 would be dusk and 0 would be night) and placement in relation to the subject (front would be 0 degrees, right would be 90 degrees, back would be 180, left of subject would be 270).

This is my first time posting anything on Github so please pardon me for my noobishness.

Beta Was this translation helpful? Give feedback.

All reactions