Replies: 13 comments 43 replies

-

Is it worth trying? It seems like a lot of steps to install...

-

What's the benefit of this? Or is this a gimmick?

-

|

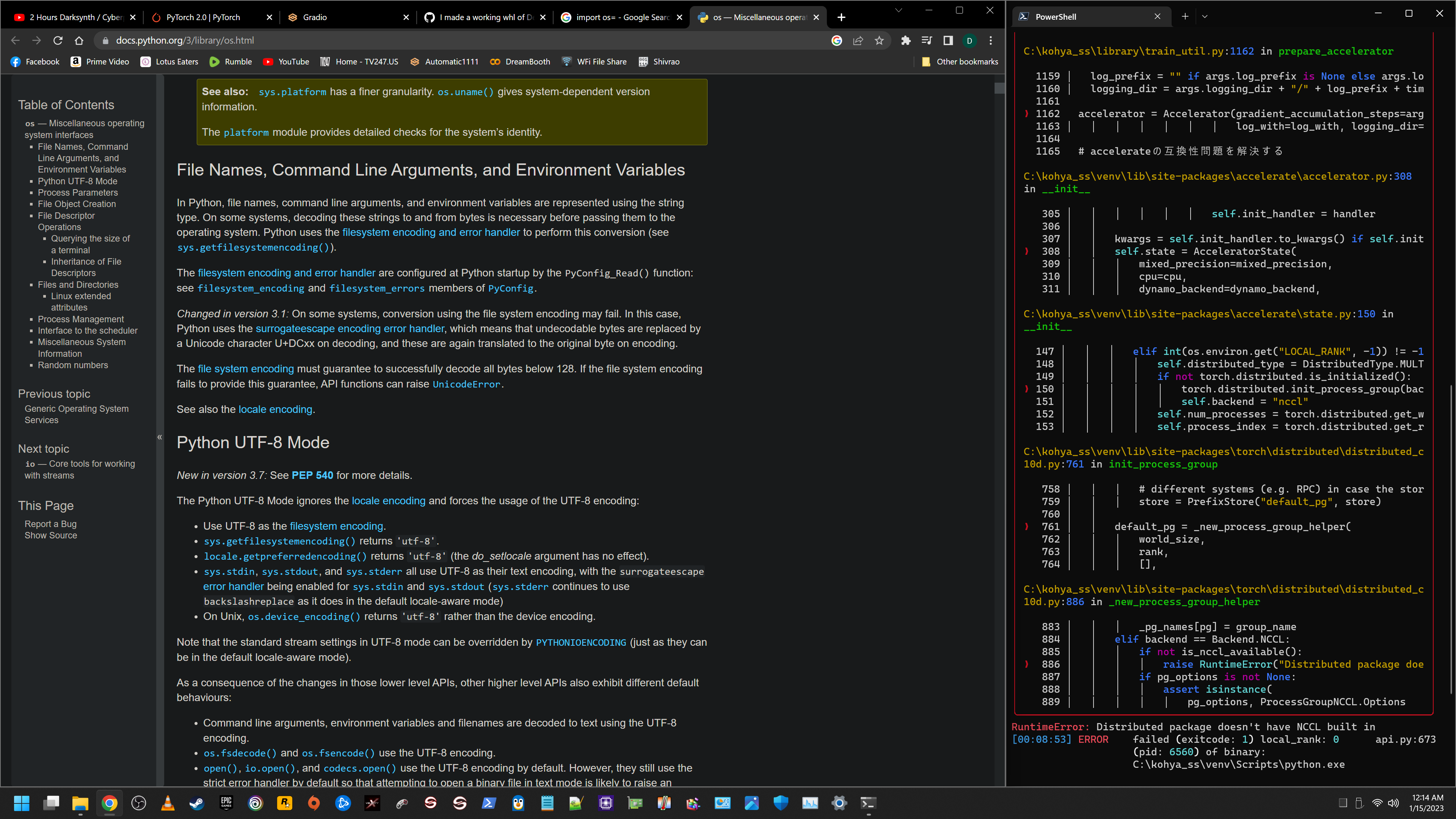

i've encountered a new error that wasn't there last night about torch.distributed.elastic training that has me hesitant to post it and risk breaking anybody else's models in the meantime... i've already found the right bit of code to insert to fix it bc it's still looking for a Linux backend. now I just need to figure out where to put it so torch looks for Windows "gloo" instead. it's gotta be either in accelerate or torch... |
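
For anyone following along, here is a minimal sketch of what "make torch look for gloo on Windows" could look like. This is not the actual fix from the post: `pick_backend` and `init_distributed` are hypothetical helper names, and the address and port defaults are assumptions for a single-machine run. It only uses `torch.distributed.init_process_group`, which accepts a `backend` argument.

```python
import os
import sys


def pick_backend(platform: str = sys.platform, cuda_available: bool = False) -> str:
    """Return a torch.distributed backend name for the given platform.

    NCCL is not built for Windows, so Windows always falls back to gloo.
    """
    if platform.startswith("win"):
        return "gloo"
    return "nccl" if cuda_available else "gloo"


def init_distributed(rank: int, world_size: int) -> None:
    """Initialise the default process group with a platform-appropriate backend."""
    # Imported lazily so pick_backend() can be used without torch installed.
    import torch
    import torch.distributed as dist

    # Assumed single-machine defaults; the env:// rendezvous reads these.
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29500")

    backend = pick_backend(sys.platform, torch.cuda.is_available())
    dist.init_process_group(backend=backend, rank=rank, world_size=world_size)
```

Whether accelerate or torch is the right place to hook this in is exactly the open question above; the sketch only shows the backend choice itself.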

-

I have good news and bad news... The problem never had anything to do with my install at all, because I was able to recreate the exact same error without deepspeed installed, from a zipped backup I made right before installing it... that's the good news... The bad news is I was able to recreate it simply by enabling any sort of distributed training via accelerate config... so that means the likely culprit is either the Triton module, my primary suspect, or xformers, or both, because the two are tied together...

-

Nope... it's still throwing the same error with distributed training on and both triton and xformers deleted entirely.

-

The culprit has got to be accelerate... I just did a fresh install from zip, with the only change being "--xformers" added to the command args on line 178 of launch.py.

-

I'm friggin exhausted, but here's the whl file for anyone else who wants to give it a go:

-

I'm pretty sure I've done everything I can on this from my end until Windows has better support for distributed training baked into it, because everything is trying to look for a Linux-only backend that NVIDIA has no plans to ever migrate over, while torch distributed only really supports NCCL for GPU and gloo for CPU, with little to no support for MPI, the one type of distributed backend Windows supports... I hate Linux, and WSL Linux is even more of a headache... Oh well, for now I guess.
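
To see which of those backends a given torch build actually ships with, a small probe like the one below may help. It is a sketch, not part of the original workflow: `available_backends` is a hypothetical helper name, and it relies only on the `is_available`, `is_nccl_available`, `is_gloo_available` and `is_mpi_available` functions in `torch.distributed`.

```python
def available_backends() -> dict:
    """Report which torch.distributed backends this torch build was compiled with."""
    none_available = {"nccl": False, "gloo": False, "mpi": False}
    try:
        import torch.distributed as dist
    except ImportError:
        # torch is not installed in this environment.
        return none_available

    if not dist.is_available():
        # torch was built without the distributed package at all.
        return none_available

    return {
        "nccl": bool(dist.is_nccl_available()),
        "gloo": bool(dist.is_gloo_available()),
        "mpi": bool(dist.is_mpi_available()),
    }


if __name__ == "__main__":
    for name, present in available_backends().items():
        print(f"{name}: {'available' if present else 'not available'}")
```

Running it inside the webui venv shows what that particular install can use, which should narrow down whether the missing piece is in torch itself or in how accelerate picks a backend.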

-

Hi @PleezDeez

-

With the recent Triton build for Windows, I was able to get deepspeed to build on Windows with proper sparse attention support, and I integrated it into my 1111. I have a whl for it that I'm going to upload tonight after a family gathering, when I have more time to write a proper install guide, but for now I'll leave the requirements I was working with.

CUDA Toolkit 11.7, Python 3.10.8, the most recent xformers build (0.0.16dev424), Deepspeed 0.8.0, torch 1.13cu117, py_cpuinfo, and Triton 2.0.0 with any metadata in it and the dist-info folder saying it's 1.0.0.

On top of that, I copied the contents of venv/lib/site-packages/triton into and over the contents of the triton folder in xformers that it conflicts with, and made a few other metadata edits to numba, tensorflow_intel, and open_clip_torch to make them accept numpy 1.23.3. I had to change line 176 of launch.py and replace the torch it asks for there with the pip install command for torch 1.13cu117. I also had to make the accelerate config file in WSL and then copy it over to Windows, to avoid a ton of errors that pop up after enabling deepspeed in the config menu, but it accepted the WSL parameters without issue.

CPU offload is working now with Triton as well, but there's still no NVMe offload, because I have no way to get it to address the drives.
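
Since the setup depends on a specific set of versions, a quick check of the venv before troubleshooting can save time. The following is only a sketch: `EXPECTED`, `installed_version` and `check_environment` are hypothetical names, the distribution names are assumed to match the PyPI names, and the versions are the ones quoted in the post.

```python
from importlib import metadata

# Versions quoted in the post, matched by prefix (e.g. "1.13" also matches "1.13.1+cu117").
# Triton is left out on purpose: the post describes hand-edited metadata for it,
# so the version reported for it would not be meaningful.
EXPECTED = {
    "deepspeed": "0.8.0",
    "xformers": "0.0.16",
    "torch": "1.13",
    "numpy": "1.23.3",
}


def installed_version(dist_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_environment(expected=EXPECTED):
    """Map each package name to (found_version, matches_expected_prefix)."""
    report = {}
    for name, wanted in expected.items():
        found = installed_version(name)
        ok = found is not None and found.startswith(wanted)
        report[name] = (found, ok)
    return report


if __name__ == "__main__":
    for name, (found, ok) in check_environment().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name:10s} wanted {EXPECTED[name]:8s} found {found or 'not installed'} [{status}]")
```

Run it with the venv's own Python so it reports what the webui will actually import.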
