
It seems to be related to the L2 and L3 caches. AFAIK, on the 13900K the Performance cores have 2 MB of L2 cache per core, while the Efficient cores share 4 MB of L2 cache per cluster.
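
On Linux, one way to check this split on your own machine is to read the cache topology the kernel exposes under `/sys/devices/system/cpu/cpuN/cache/index*/`. The sketch below uses a hypothetical `cache_info` helper, written for illustration, that lists each cache's level, type, size and the logical CPUs sharing it. On a 13900K you would expect a P-core's L2 to be shared only by its two hyperthreads and an E-core's L2 by its whole cluster, but that is an expectation to verify, not a measured result.

```python
from pathlib import Path


def _read(directory, name):
    # Each sysfs cache attribute is a one-line text file, e.g. "2048K\n".
    return (directory / name).read_text().strip()


def cache_info(cpu=0, root="/sys/devices/system/cpu"):
    """Return (level, type, size, shared_cpu_list) for every cache of one logical CPU.

    `root` is a parameter only so the function can be pointed at a copy of
    the sysfs tree for testing; on a real system leave it at the default.
    """
    base = Path(root) / f"cpu{cpu}" / "cache"
    return [
        (int(_read(index, "level")), _read(index, "type"),
         _read(index, "size"), _read(index, "shared_cpu_list"))
        for index in sorted(base.glob("index*"))
    ]
```

For example, `cache_info(0)` and `cache_info(16)` compare a P-core with what is, assuming the usual 13900K numbering, an E-core; the L2 entry's `shared_cpu_list` shows which logical CPUs share that cache.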


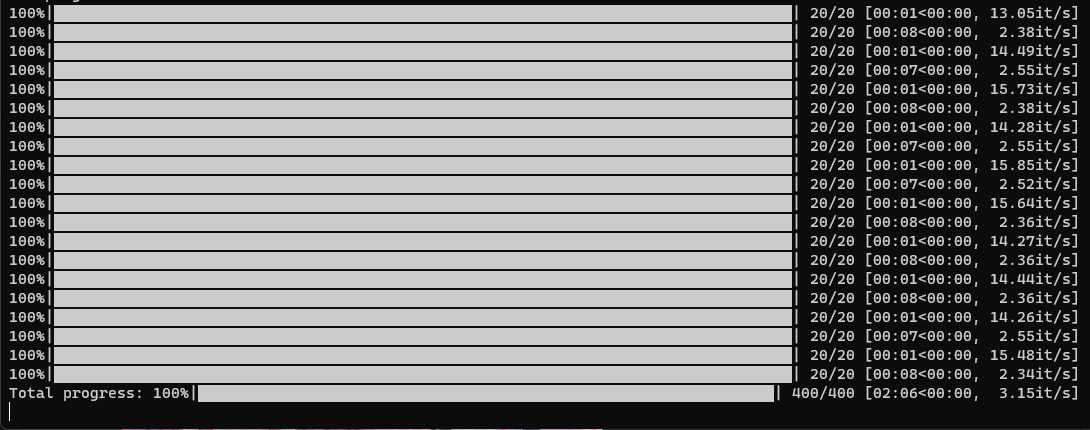

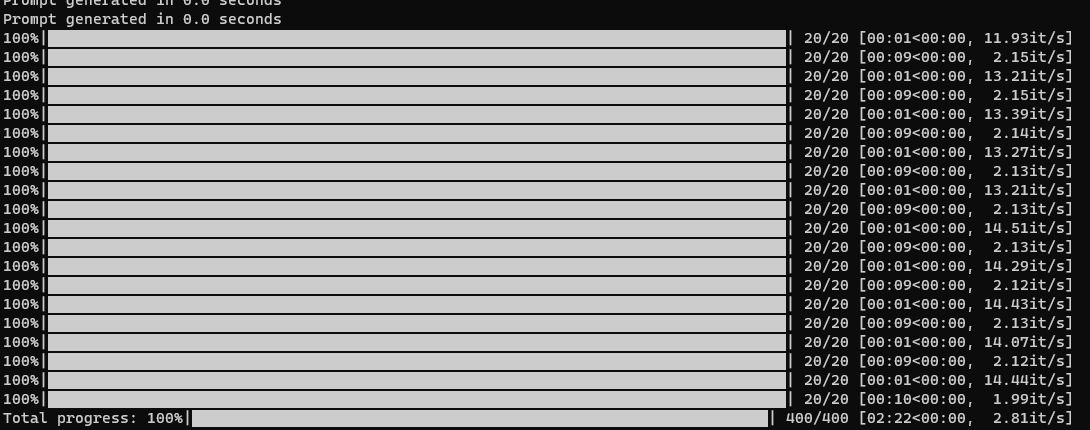

Using a 5800X3D with a -30 Curve Optimizer setting in the BIOS; no idea if the extra L3 cache helps. On Windows 11 with an RTX 3080 12GB, using Torch 2, compiled xformers, updated DLLs and `--opt-channelslast`. The first image is with just an undervolt: the max I saw was 16 it/s. The second is with an unnecessarily paranoid Afterburner profile (70% power limit, -350 memory underclock and a regular undervolt): the max I saw was about 14.5 it/s. Seems in line with these benchmarks: In both cases, with prompts longer than 75 tokens I've seen it drop to about 8-9 it/s, and to 2-2.8 it/s with hires fix from 512x512 to 1024x1024. Edited the OG post because pictures are better.
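
To put those iteration rates in wall-clock terms, seconds per image is simply steps divided by it/s. A minimal sketch, assuming a 20-step sampler (the step count is an assumption; the post doesn't state one):

```python
def seconds_per_image(steps, its):
    """Sampling time for one image at a given iterations-per-second rate."""
    return steps / its


def slowdown(fast_its, slow_its):
    """Fraction of throughput lost going from fast_its to slow_its."""
    return 1 - slow_its / fast_its


STEPS = 20  # assumed step count, not taken from the post
for label, its in [("undervolt only", 16.0), ("paranoid profile", 14.5)]:
    print(f"{label:>16}: {its:5.1f} it/s -> {seconds_per_image(STEPS, its):.2f} s/image")
print(f"power-limit profile costs {slowdown(16.0, 14.5):.1%} of throughput")
```

At 20 steps that works out to about 1.25 s versus 1.38 s per image, i.e. the power-limited profile gives up a bit over 9% of throughput.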


Someone suggested the reason CPU performance affects this is something called "CPU scheduling" vs. "GPU scheduling", which appears to be available only in the Windows drivers. I've found multiple people asking for GPU scheduling on Linux without getting it. I'm not sure why NVIDIA isn't providing this, or whether this "scheduling" lives in the nvidia kernel module or in libcuda.so. I know I got about a 2% speedup by compiling PyTorch with `-march=native` to leverage my Raptor Lake processor. I may try the same thing by building my own kernel modules from https://github.com/NVIDIA/open-gpu-kernel-modules/
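
`-march=native` tells GCC/Clang to target every instruction-set extension the build machine reports, so a binary built that way may not run on older CPUs. On x86 Linux those extensions appear in the `flags` line of `/proc/cpuinfo`. The sketch below, with hypothetical helper names, parses that line and reports a few common extensions; which of them actually matter for PyTorch's CPU-side code is an open question, not something the post measured.

```python
def cpu_flags(cpuinfo_text):
    """Return the set of ISA flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()  # e.g. non-x86 kernels use a different key


# A few x86 extensions that -march=native can enable when the CPU has them.
INTERESTING = ["sse4_2", "avx", "avx2", "fma", "avx_vnni", "avx512f"]


def report(path="/proc/cpuinfo"):
    """Map each extension in INTERESTING to whether this CPU advertises it."""
    with open(path) as f:
        flags = cpu_flags(f.read())
    return {name: name in flags for name in INTERESTING}
```

Calling `report()` on the build machine shows which of those extensions a `-march=native` build could use there.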


I have really low performance, probably due to my R7 1800X. This Thursday I'll upgrade to an R9 5900X and report whether the speed changes. It's really surprising to me, because the CPU looks "barely" used during image generation.


(Linux user here) There's something going on with Python, as any generation maxes out a core. I guess this is what you call scheduling? No matter what size of generation I do, one core is always maxed out. I also want to report that the negative prompt slows down generation a lot on slower CPUs. This becomes less apparent the larger the image you generate, as the GPU becomes the bottleneck.
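
A quick way to confirm that the pegged core belongs to the Python process itself is to compare the process's CPU time with wall-clock time around a piece of work: a ratio near 1.0 means one core was busy the whole time, near 0 means the process was mostly waiting. A minimal standard-library sketch; `cpu_wall_ratio` is a hypothetical helper, and wrapping a real generation call with it is left as an assumption about how you would hook it in.

```python
import time


def cpu_wall_ratio(fn, *args, **kwargs):
    """Run fn and return (result, process CPU seconds / wall-clock seconds).

    process_time() sums CPU time over all threads of this process, so a
    ratio above 1.0 means more than one core was busy on average.
    """
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    result = fn(*args, **kwargs)
    cpu = time.process_time() - cpu_start
    wall = time.perf_counter() - wall_start
    return result, cpu / wall


def spin(seconds):
    # Busy loop: stands in for work that keeps a core pegged.
    end = time.perf_counter() + seconds
    while time.perf_counter() < end:
        pass


_, busy = cpu_wall_ratio(spin, 0.2)
_, idle = cpu_wall_ratio(time.sleep, 0.2)
print(f"busy loop: {busy:.2f}  sleep: {idle:.2f}")
```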


One thing I noticed: on the first launch after a boot/reboot, my speed stays at about 8 it/s, but switching to a different model increases it to 14 it/s. Switching back to the model that was loaded at launch, or to any other model, also keeps the increased speed. Maybe there is a difference between how models are loaded at launch and how they're loaded through the dropdown in the UI.


Damn! I wish I had googled Python profiling tools at the beginning of the day instead of near the end. It was so easy. The only downside is that results can be skewed by asynchronous operations. This can be corrected by:

`13207464 function calls (11804587 primitive calls) in 25.760 seconds`

`Ordered by: internal time`

`ncalls  tottime  percall  cumtime  percall filename:lineno(function)`
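
For anyone wanting to reproduce this, the standard-library `cProfile` and `pstats` modules produce exactly that kind of report, and sorting by `"tottime"` gives the "Ordered by: internal time" view. A minimal sketch with a stand-in workload (`fake_step` is hypothetical; in practice you would profile the generation call instead). Because CUDA work is launched asynchronously, GPU time tends to be attributed to whichever Python call ends up waiting for it, which is the skew mentioned above.

```python
import cProfile
import io
import pstats


def fake_step(n):
    # Stand-in for one sampling step: some Python-level work.
    return sum(i * i for i in range(n))


def profile_report(fn, *args, top=10):
    """Profile fn(*args) and return the pstats report sorted by internal time."""
    profiler = cProfile.Profile()
    profiler.enable()
    fn(*args)
    profiler.disable()
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("tottime").print_stats(top)
    return out.getvalue()


print(profile_report(lambda: [fake_step(10_000) for _ in range(20)]))
```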


@aifartist Not directly related, but it definitely has an impact, especially on lower-end CPUs: #6932 (comment)


And one more dead end: I tried compiling a native Python 3.11, which is supposed to be much faster than Python 3.10. Well, building Python went without issues, but...


The program that runs each AI iteration needs to do the matrix multiplications/additions and apply the activation functions. Depending on what type of activation function is implemented, and how many there are, it's most likely done by the CPU, because the CPU is better at doing different things at the same time and is built to do complex math in fewer steps than the GPU. The CPU is also busy reading the data from RAM or the SSD, processing it, and then transferring the data along with the instructions to the GPU.


There are those with a 4090 who get near the 39 it/s I get and those who don't. Yesterday, trying to figure out why someone else was only getting 34 it/s, I wondered whether a slower CPU would make any difference compared with my 5.8 GHz i9-13900K. I didn't think I had a good way to test that. Then I realized I had a CPU that was both fast and slow: Raptor Lake chips have two kinds of cores, Performance (P) and Efficient (E) cores. I used the Linux `taskset` command to bind the A1111 threads to the slower E-cores, and my it/s dropped from 39 to 27.5!
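
`taskset -c <cpus> <command>` sets the CPU affinity of the process it launches; Python exposes the same Linux call as `os.sched_setaffinity`. Below is a minimal sketch of the experiment with a purely CPU-bound stand-in workload (helper names are hypothetical). The assumption that logical CPUs 16-31 are the E-cores matches the usual numbering on a 13900K under Linux, but check `lscpu --extended` before relying on it.

```python
import os
import time

# Assumption: on a 13900K, Linux usually numbers the 16 P-core hyperthreads
# 0-15 and the 16 E-cores 16-31. Verify with `lscpu --extended`.
P_CORES = set(range(0, 16))
E_CORES = set(range(16, 32))


def pin_to_cpus(wanted):
    """Restrict the caller to `wanted` CPUs, like `taskset -c`.

    pid 0 means "the caller"; threads started afterwards inherit the mask.
    Returns the affinity actually in effect.
    """
    target = set(wanted) & os.sched_getaffinity(0)
    if not target:
        raise ValueError(f"none of CPUs {sorted(wanted)} are available here")
    os.sched_setaffinity(0, target)
    return os.sched_getaffinity(0)


def busy_work(n):
    # Purely CPU-bound Python loop standing in for the host-side work.
    total = 0
    for i in range(n):
        total += i * i
    return total


def time_on(cpus, n=2_000_000):
    """Time busy_work(n) while pinned to `cpus`, then restore the old mask."""
    original = os.sched_getaffinity(0)
    try:
        pin_to_cpus(cpus)
        start = time.perf_counter()
        busy_work(n)
        return time.perf_counter() - start
    finally:
        os.sched_setaffinity(0, original)
```

Comparing `time_on(P_CORES)` with `time_on(E_CORES)` gives a rough single-thread speed ratio between the two core types; restoring the original mask in `finally` keeps the experiment from leaving the process pinned.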

Why does the CPU speed make such a huge difference for what should be a largely GPU operation? Today I'm going to start drilling down to find out.

Ideas would be welcome. I even tried binary-patching a `pause` instruction I found during CPU profiling into a NOP. It kind of looks like a lot of time is spent in some polling loop; a pause instruction is often added to such loops, and spinlock implementations typically do that. However, this didn't speed it up. Hmm, what should I try next...
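
A polling loop like that would also explain a permanently maxed-out core: a thread that busy-waits burns a full core while doing no useful work, whereas a blocking wait costs almost nothing. The CUDA runtime can spin on the CPU while waiting for the GPU, depending on the scheduling flags passed to `cudaSetDeviceFlags` (`cudaDeviceScheduleSpin`, `cudaDeviceScheduleYield`, `cudaDeviceScheduleBlockingSync`, or the default `cudaDeviceScheduleAuto`); whether that is what A1111 hits here is untested. The x86 `pause` instruction is only a hint that makes each spin iteration cheaper (less power, fewer resources taken from the sibling hyperthread); it doesn't decide how long the loop waits, which may be why NOP-ing it out didn't help. Python can't emit `pause`, so this sketch, with a hypothetical `measure_wait` helper, only illustrates the spin-versus-block difference using threads.

```python
import threading
import time


def measure_wait(spin, delay=0.2):
    """Return CPU seconds burned by a thread that waits `delay` s for a signal."""
    ready = threading.Event()
    result = {}

    def waiter():
        start = time.thread_time()  # CPU time of this thread only
        if spin:
            # Busy-poll: keeps re-checking the flag, so the core stays at 100%.
            while not ready.is_set():
                pass
        else:
            # Blocking wait: the OS parks the thread until it is signalled.
            ready.wait()
        result["cpu"] = time.thread_time() - start

    t = threading.Thread(target=waiter)
    t.start()
    time.sleep(delay)  # stand-in for "the GPU is still busy"
    ready.set()
    t.join()
    return result["cpu"]


print(f"spin: {measure_wait(True):.3f} s CPU, block: {measure_wait(False):.3f} s CPU")
```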
