diff --git a/.github/workflows/ci-main.yml b/.github/workflows/ci-main.yml

index d49831f..a93dfee 100644

--- a/.github/workflows/ci-main.yml

+++ b/.github/workflows/ci-main.yml

@@ -49,6 +49,12 @@ jobs:

run: poetry run mypy src tests

- name: All tests (unit + integration + system)

- run: |

- poetry run pytest \

- --disable-warnings

+ run: poetry run pytest --disable-warnings --cov=asyncflow --cov-report=xml

+

+ - name: Upload coverage to Codecov

+ uses: codecov/codecov-action@v4

+ with:

+ files: coverage.xml

+ flags: tests

+ fail_ci_if_error: true

+ token: ${{ secrets.CODECOV_TOKEN }}

diff --git a/CHANGELOG.MD b/CHANGELOG.MD

new file mode 100644

index 0000000..20a987b

--- /dev/null

+++ b/CHANGELOG.MD

@@ -0,0 +1,115 @@

+# Changelog

+

+All notable changes to this project will be documented in this file.

+

+The format is based on [Keep a Changelog](https://keepachangelog.com/en/1.1.0/).

+

+## \[Unreleased]

+

+### Planned

+

+* **Network baseline upgrade** (sockets, RAM per connection, keep-alive).

+* **New metrics and visualization improvements** (queue wait times, service histograms).

+* **Monte Carlo analysis** with confidence intervals.

+

+---

+

+## \[0.1.1] – 2025-08-29

+

+### Added

+

+* **Event Injection (runtime-ready):**

+

+ * Declarative events with `start` / `end` markers (server down/up, network spike start/end).

+ * Runtime scheduler integrated with SimPy, applying events at the right simulation time.

+ * Deterministic latency **offset handling** for network spikes (phase 1).

+

+* **Improved Server Model:**

+

+ * Refined CPU + I/O handling with clearer queue accounting.

+ * Ready queue length now explicitly updated on contention.

+ * I/O queue metrics improved with better protection against mis-counting edge cases.

+ * Enhanced readability and maintainability in endpoint step execution flow.

+

+### Documentation

+

+* Expanded examples on event injection in YAML.

+* Inline comments clarifying queue management logic.

+

+### Notes

+

+* This is still an **alpha-series** release, but now supports scenario-driven **event injection** and a more faithful **server runtime model**, paving the way for the upcoming network baseline upgrade.

+

+---

+

+## \[0.1.0a2] – 2025-08-17

+

+### Fixed

+

+* **Quickstart YAML in README**: corrected field to ensure a smooth first run for new users.

+

+### Notes

+

+* Minor docs polish only; no runtime changes.

+

+---

+

+## \[0.1.0a1] – 2025-08-17

+

+### Changed

+

+* Repository aligned with the **PyPI 0.1.0a1** build.

+* Packaging metadata tidy-up in `pyproject.toml`.

+

+### CI

+

+* Main workflow now also triggers on **push** to `main`.

+

+### Notes

+

+* No functional/runtime changes.

+

+---

+

+## \[v0.1.0-alpha] – 2025-08-17

+

+**First public alpha** of AsyncFlow — a SimPy-based, **event-loop-aware** simulator for async distributed systems.

+

+### Highlights

+

+* **Event-loop model** per server: explicit **CPU** (blocking), **I/O waits** (non-blocking), **RAM** residency.

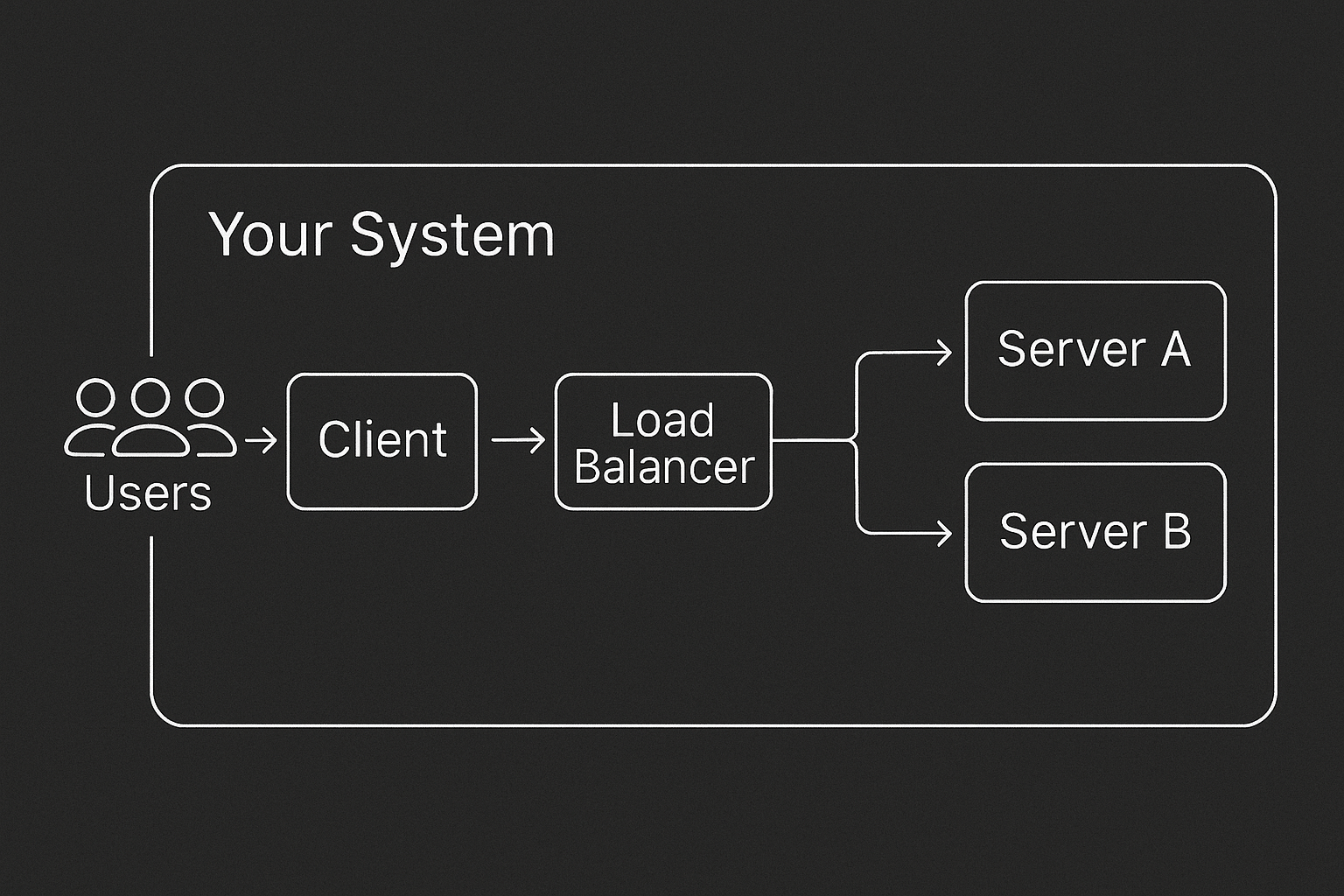

+* **Topology graph**: generator → client → (LB, optional) → servers; multi-server via **round-robin**; **stochastic network latency** and optional dropouts.

+* **Workload**: stochastic traffic via simple RV configs (Poisson defaults).

+

+### Metrics & Analyzer

+

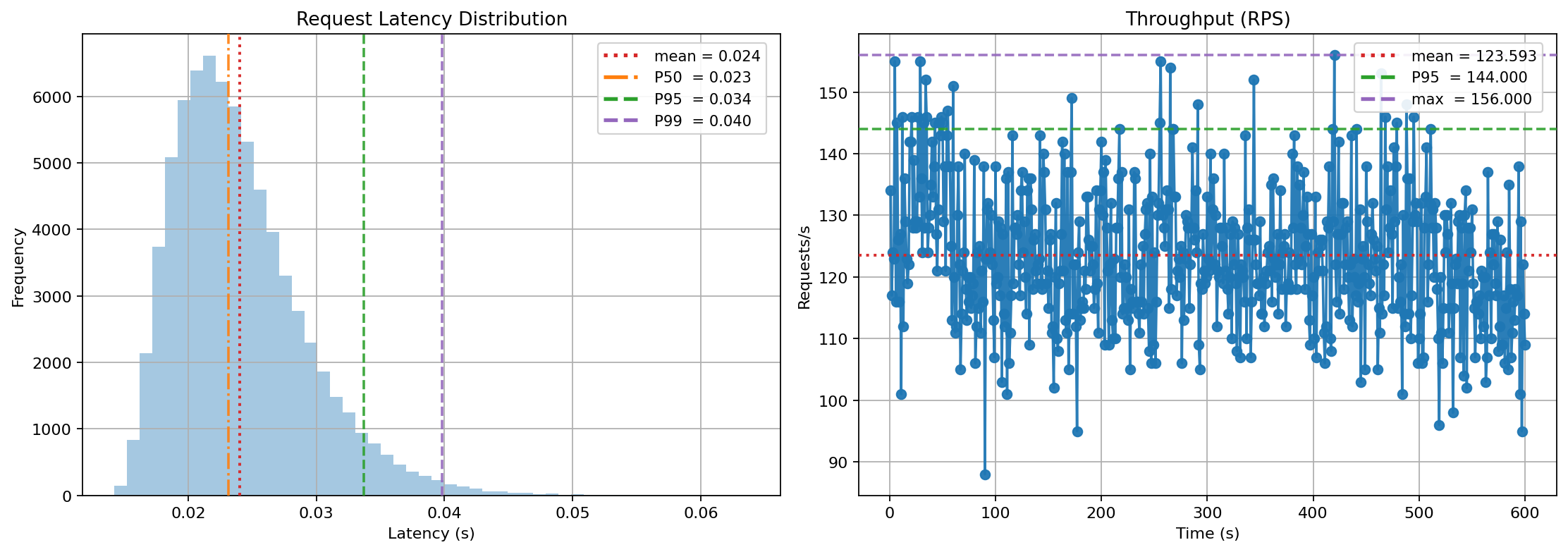

+* **Event metrics**: `RqsClock` (end-to-end latency).

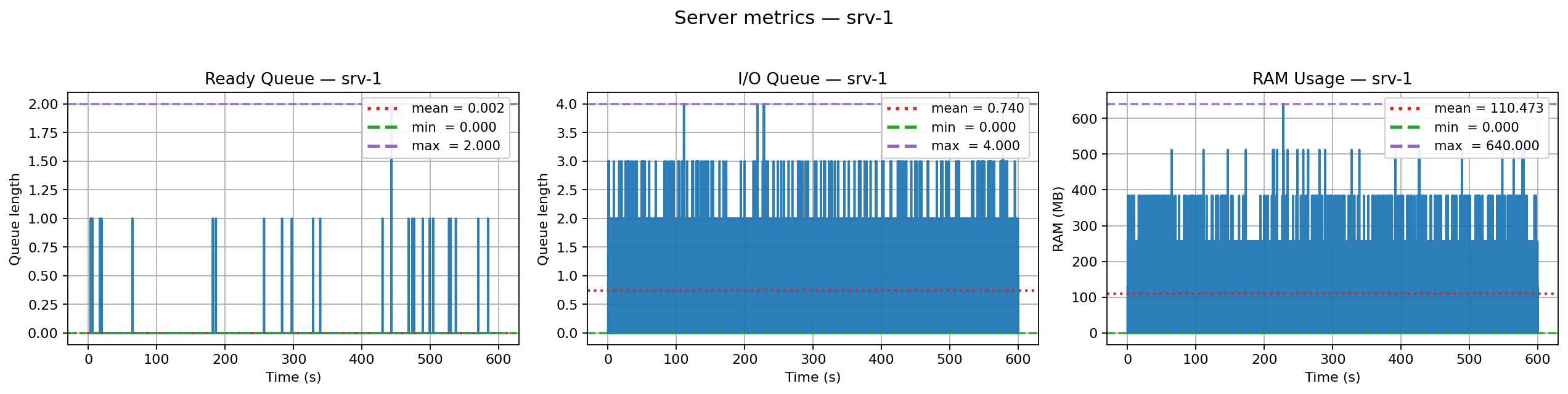

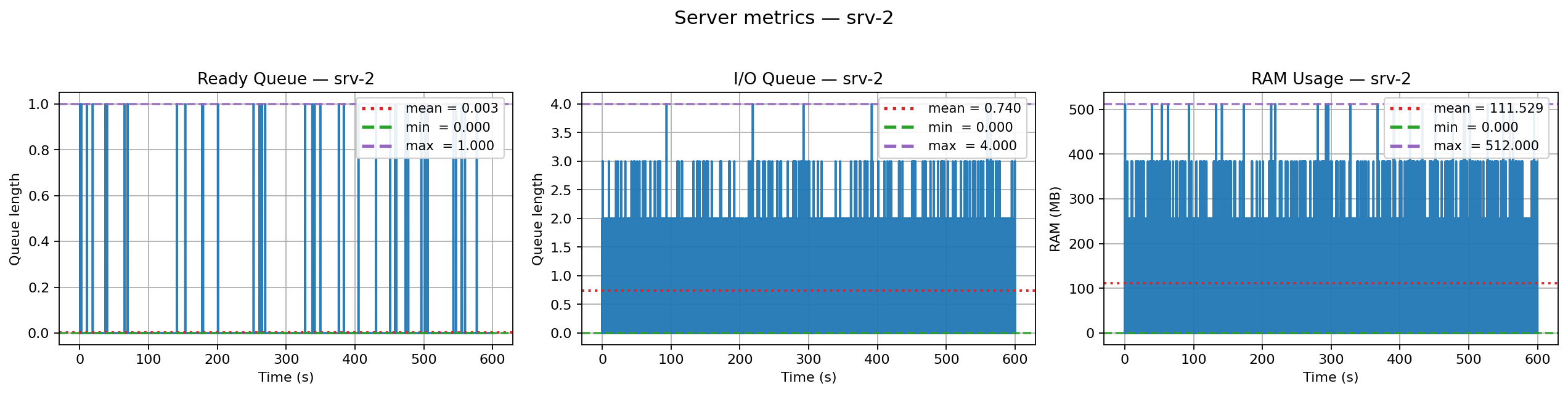

+* **Sampled metrics**: `ready_queue_len`, `event_loop_io_sleep`, `ram_in_use`, `edge_concurrent_connection`.

+* **Analyzer API** (`ResultsAnalyzer`):

+

+ * `get_latency_stats()`, `get_throughput_series()`

+ * Plots: `plot_latency_distribution()`, `plot_throughput()`

+ * Per-server: `plot_single_server_ready_queue()`, `plot_single_server_io_queue()`, `plot_single_server_ram()`

+ * Compact dashboards.

+

+### Examples

+

+* YAML quickstart (single server).

+* Pythonic builder:

+

+ * Single server.

+ * **Load balancer + two servers** example with saved figures.

+

+### Tooling & CI

+

+* One-shot setup scripts (`dev_setup`, `quality_check`, `run_tests`, `run_sys_tests`) for Linux/macOS/Windows.

+* GitHub Actions: Ruff + MyPy + Pytest; **system tests gate merges** into `main`.

+

+### Compatibility

+

+* **Python 3.12+** (Linux/macOS/Windows).

+* Install from PyPI: `pip install asyncflow-sim`.

+

+

+

+

diff --git a/README.md b/README.md

index 4622987..b084df7 100644

--- a/README.md

+++ b/README.md

@@ -1,12 +1,12 @@

-# AsyncFlow — Event-Loop Aware Simulator for Async Distributed Systems

+# AsyncFlow: Scenario-Driven Simulator for Async Systems

Created and maintained by @GioeleB00.

[](https://pypi.org/project/asyncflow-sim/)

[](https://pypi.org/project/asyncflow-sim/)

[](LICENSE)

-[](#)

+[](https://codecov.io/gh/AsyncFlow-Sim/AsyncFlow)

[](https://github.com/astral-sh/ruff)

[](https://mypy-lang.org/)

[](https://docs.pytest.org/)

@@ -14,27 +14,65 @@ Created and maintained by @GioeleB00.

-----

-AsyncFlow is a discrete-event simulator for modeling and analyzing the performance of asynchronous, distributed backend systems built with SimPy. You describe your system's topology—its servers, network links, and load balancers—and AsyncFlow simulates the entire lifecycle of requests as they move through it.

+**AsyncFlow** is a scenario-driven simulator for **asynchronous distributed backends**.

+You don’t “predict the Internet” — you **declare scenarios** (network RTT + jitter, resource caps, failure events) and AsyncFlow shows the operational impact: concurrency, queue growth, socket/RAM pressure, latency distributions. This means you can evaluate architectures before implementation: test scaling strategies, network assumptions, or failure modes without writing production code.

-It provides a **digital twin** of your service, modeling not just the high-level architecture but also the low-level behavior of each server's **event loop**, including explicit **CPU work**, **RAM residency**, and **I/O waits**. This allows you to run realistic "what-if" scenarios that behave like production systems rather than toy benchmarks.

+At its core, AsyncFlow is **event-loop aware**:

+

+* **CPU work** blocks the loop,

+* **RAM residency** ties up memory until release,

+* **I/O waits** free the loop just like in real async frameworks.

+

+With the new **event injection engine**, you can explore *what-if* dynamics: network spikes, server outages, degraded links, all under your control.

+

+---

### What Problem Does It Solve?

-Modern async stacks like FastAPI are incredibly performant, but predicting their behavior under real-world load is difficult. Capacity planning often relies on guesswork, expensive cloud-based load tests, or discovering bottlenecks only after a production failure. AsyncFlow is designed to replace that uncertainty with **data-driven forecasting**, allowing you to understand how your system will perform before you deploy a single line of code.

+Predicting how an async system will behave under real-world load is notoriously hard. Teams often rely on rough guesses, over-provisioning, or painful production incidents. **AsyncFlow replaces guesswork with scenario-driven simulations**: you declare the conditions (network RTT, jitter, resource limits, injected failures) and observe the consequences on latency, throughput, and resource pressure.

+

+---

+

+### Why Scenario-Driven? *Design Before You Code*

+

+AsyncFlow doesn’t need your backend to exist.

+You can model your architecture with YAML or Python, run simulations, and explore bottlenecks **before writing production code**.

+This scenario-driven approach lets you stress-test scaling strategies, network assumptions, and failure modes safely and repeatably.

+

+---

+

+### How Does It Work?

-### How Does It Work? An Example Topology

+AsyncFlow represents your system as a **directed graph of components**, for example: clients, load balancers, servers—connected by network edges with configurable latency models. Each server is **event-loop aware**: CPU work blocks, RAM stays allocated, and I/O yields the loop, just like real async frameworks. You can define topologies via **YAML** or a **Pythonic builder**.

-AsyncFlow models your system as a directed graph of interconnected components. A typical setup might look like this:

+

-

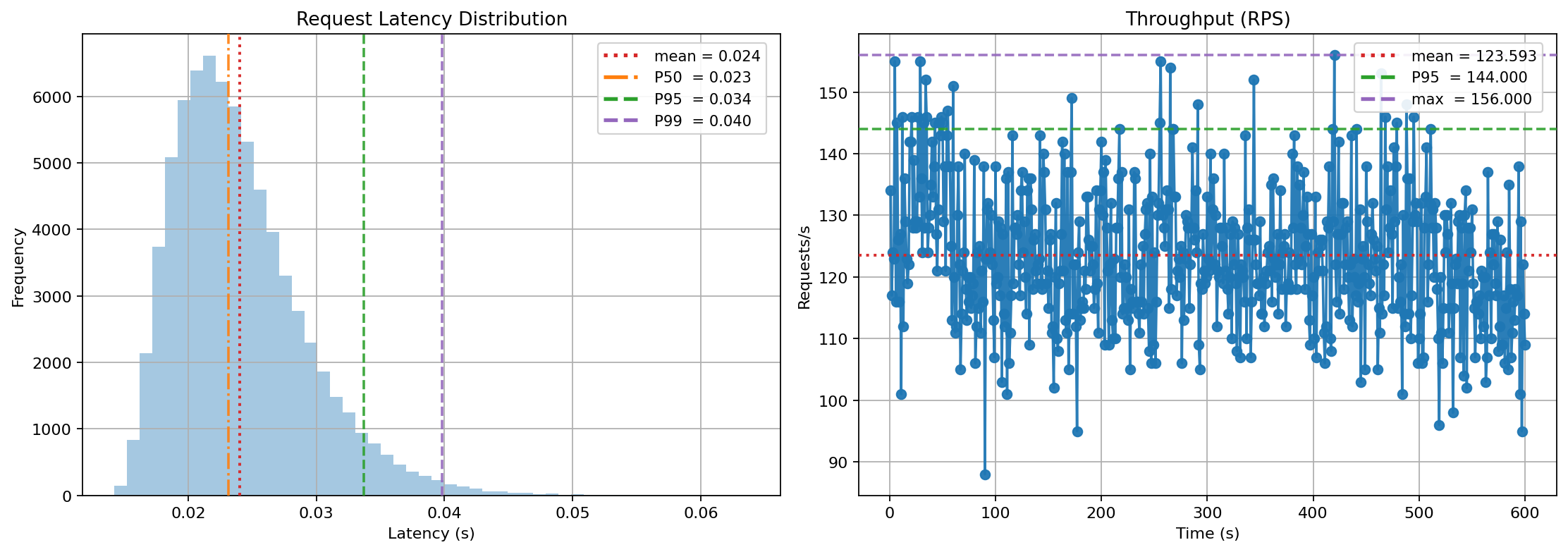

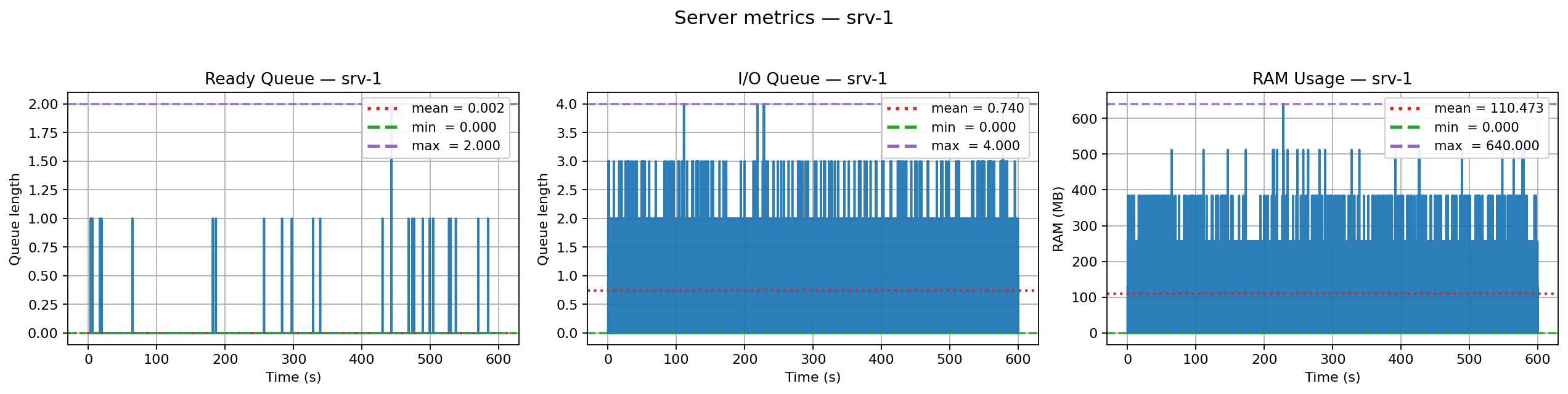

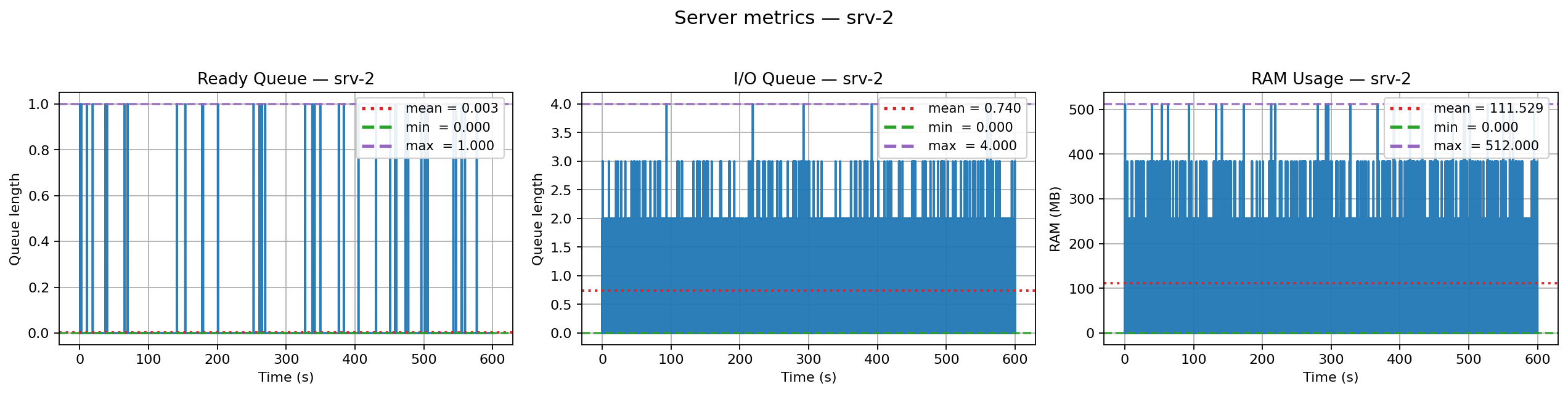

+Run the simulation and inspect the outputs:

+

+

+

+  +

+

+

+

+

+  +

+

+

+

+

+  +

+

+

+

+

+

+---

### What Questions Can It Answer?

-By running simulations on your defined topology, you can get quantitative answers to critical engineering questions, such as:

+With scenario simulations, AsyncFlow helps answer questions such as:

+

+* How does **p95 latency** shift if active users double?

+* What happens when a **client–server edge** suffers a 20 ms spike for 60 seconds?

+* Will a given endpoint pipeline — CPU parse → RAM allocation → DB I/O — still meet its **SLA at 40 RPS**?

+* How many sockets and how much RAM will a load balancer need under peak conditions?

- * How does **p95 latency** change if active users increase from 100 to 200?

- * What is the impact on the system if the **client-to-server network latency** increases by 3ms?

- * Will a specific API endpoint—with a pipeline of parsing, RAM allocation, and database I/O—hold its **SLA at a load of 40 requests per second**?

---

## Installation

@@ -167,7 +205,7 @@ You’ll get latency stats in the terminal and a PNG with four charts (latency d

**Want more?**

-For ready-to-run scenarios—including examples using the Pythonic builder and multi-server topologies—check out the `examples/` directory in the repository.

+For ready-to-run scenarios including examples using the Pythonic builder and multi-server topologies, check out the `examples/` directory in the repository.

## Development

@@ -279,97 +317,28 @@ bash scripts/run_sys_tests.sh

Executes **pytest** with a terminal coverage summary (no XML, no slowest list).

+## Current Limitations (v0.1.1)

+AsyncFlow is still in alpha. The current release has some known limitations that are already on the project roadmap:

-## What AsyncFlow Models (v0.1)

-

-AsyncFlow provides a detailed simulation of your backend system. Here is a high-level overview of the core components it models. For a deeper technical dive into the implementation and design rationale, follow the links to the internal documentation.

-

-* **Async Event Loop:** Simulates a single-threaded, non-blocking event loop per server. **CPU steps** block the loop, while **I/O steps** are non-blocking, accurately modeling `asyncio` behavior.

- * *(Deep Dive: `docs/internals/runtime-and-resources.md`)*

-

-* **System Resources:** Models finite server resources, including **CPU cores** and **RAM (MB)**. Requests must acquire these resources, creating natural back-pressure and contention when the system is under load.

- * *(Deep Dive: `docs/internals/runtime-and-resources.md`)*

-

-* **Endpoints & Request Lifecycles:** Models server endpoints as a linear sequence of **steps**. Each step is a distinct operation, such as `cpu_bound_operation`, `io_wait`, or `ram` allocation.

- * *(Schema Definition: `docs/internals/simulation-input.md`)*

-

-* **Network Edges:** Simulates the connections between system components. Each edge has a configurable **latency** (drawn from a probability distribution) and an optional **dropout rate** to model packet loss.

- * *(Schema Definition: `docs/internals/simulation-input.md` | Runtime Behavior: `docs/internals/runtime-and-resources.md`)*

-

-* **Stochastic Workload:** Generates user traffic based on a two-stage sampling model, combining the number of active users and their request rate per minute to produce a realistic, fluctuating load (RPS) on the system.

- * *(Modeling Details with mathematical explanation and clear assumptions: `docs/internals/requests-generator.md`)*

-

-* **Metrics & Outputs:** Collects two types of data: **time-series metrics** (e.g., `ready_queue_len`, `ram_in_use`) and **event-based data** (`RqsClock`). This raw data is used to calculate final KPIs like **p95/p99 latency** and **throughput**.

- * *(Metric Reference: `docs/internals/metrics`)*

-

-## Current Limitations (v0.1)

-

-* Network realism: base latency + optional drops (no bandwidth/payload/TCP yet).

-* Single event loop per server: no multi-process/multi-node servers yet.

-* Linear endpoint flows: no branching/fan-out within an endpoint.

-* No thread-level concurrency; modeling OS threads and scheduler/context switching is out of scope.”

-* Stationary workload: no diurnal patterns or feedback/backpressure.

-* Sampling cadence: very short spikes can be missed if `sample_period_s` is large.

-

-

-## Roadmap (Order is not indicative of priority)

-

-This roadmap outlines the key development areas to transform AsyncFlow into a comprehensive framework for statistical analysis and resilience modeling of distributed systems.

-

-### 1. Monte Carlo Simulation Engine

-

-**Why:** To overcome the limitations of a single simulation run and obtain statistically robust results. This transforms the simulator from an "intuition" tool into an engineering tool for data-driven decisions with confidence intervals.

-

-* **Independent Replications:** Run the same simulation N times with different random seeds to sample the space of possible outcomes.

-* **Warm-up Period Management:** Introduce a "warm-up" period to be discarded from the analysis, ensuring that metrics are calculated only on the steady-state portion of the simulation.

-* **Ensemble Aggregation:** Calculate means, standard deviations, and confidence intervals for aggregated metrics (latency, throughput) across all replications.

-* **Confidence Bands:** Visualize time-series data (e.g., queue lengths) with confidence bands to show variability over time.

-

-### 2. Realistic Service Times (Stochastic Service Times)

-

-**Why:** Constant service times underestimate tail latencies (p95/p99), which are almost always driven by "slow" requests. Modeling this variability is crucial for a realistic analysis of bottlenecks.

-

-* **Distributions for Steps:** Allow parameters like `cpu_time` and `io_waiting_time` in an `EndpointStep` to be sampled from statistical distributions (e.g., Lognormal, Gamma, Weibull) instead of being fixed values.

-* **Per-Request Sampling:** Each request will sample its own service times independently, simulating the natural variability of a real-world system.

-

-### 3. Component Library Expansion

-

-**Why:** To increase the variety and realism of the architectures that can be modeled.

-

-* **New System Nodes:**

- * `CacheRuntime`: To model caching layers (e.g., Redis) with hit/miss logic, TTL, and warm-up behavior.

- * `APIGatewayRuntime`: To simulate API Gateways with features like rate-limiting and authentication caching.

- * `DBRuntime`: A more advanced model for databases featuring connection pool contention and row-level locking.

-* **New Load Balancer Algorithms:** Add more advanced routing strategies (e.g., Weighted Round Robin, Least Response Time).

-

-### 4. Fault and Event Injection

-

-**Why:** To test the resilience and behavior of the system under non-ideal conditions, a fundamental use case for Site Reliability Engineering (SRE).

-

-* **API for Scheduled Events:** Introduce a system to schedule events at specific simulation times, such as:

- * **Node Down/Up:** Turn a server off and on to test the load balancer's failover logic.

- * **Degraded Edge:** Drastically increase the latency or drop rate of a network link.

- * **Error Bursts:** Simulate a temporary increase in the rate of application errors.

-

-### 5. Advanced Network Modeling

+* **Network model** — only base latency + jitter/spikes.

+ Bandwidth, queuing, and protocol-level details (HTTP/2 streams, QUIC, TLS handshakes) are not yet modeled.

-**Why:** To more faithfully model network-related bottlenecks that are not solely dependent on latency.

+* **Server model** — single event loop per server.

+ Multi-process or multi-threaded execution is not yet supported.

-* **Bandwidth and Payload Size:** Introduce the concepts of link bandwidth and request/response size to simulate delays caused by data transfer.

-* **Retries and Timeouts:** Model retry and timeout logic at the client or internal service level.

+* **Endpoint flows** — endpoints are linear pipelines.

+ Branching/fan-out (e.g. service calls to DB + cache) will be added in future versions.

-### 6. Complex Endpoint Flows

+* **Workload generation** — stationary workloads only.

+ No support yet for diurnal patterns, feedback loops, or adaptive backpressure.

-**Why:** To model more realistic business logic that does not follow a linear path.

+* **Overload policies** — no explicit handling of overload conditions.

+ Queue caps, deadlines, timeouts, rate limiting, and circuit breakers are not yet implemented.

-* **Conditional Branching:** Introduce the ability to have conditional steps within an endpoint (e.g., a different path for a cache hit vs. a cache miss).

-* **Fan-out / Fan-in:** Model scenarios where a service calls multiple downstream services in parallel and waits for their responses.

+* **Sampling cadence** — very short events may be missed if the `sample_period_s` is too large.

-### 7. Backpressure and Autoscaling

-**Why:** To simulate the behavior of modern, adaptive systems that react to load.

-* **Dynamic Rate Limiting:** Introduce backpressure mechanisms where services slow down the acceptance of new requests if their internal queues exceed a certain threshold.

-* **Autoscaling Policies:** Model simple Horizontal Pod Autoscaler (HPA) policies where the number of server replicas increases or decreases based on metrics like CPU utilization or queue length.

+📌 See the [ROADMAP](./ROADMAP.md) for planned features and upcoming milestones.

diff --git a/ROADMAP.md b/ROADMAP.md

new file mode 100644

index 0000000..0fc9666

--- /dev/null

+++ b/ROADMAP.md

@@ -0,0 +1,65 @@

+# **AsyncFlow Roadmap**

+

+AsyncFlow is designed as a **scenario-driven simulator for capacity planning**. Its purpose is not to “predict the Internet,” but to give engineers and researchers a way to test how backend systems behave under controlled, reproducible what-if conditions. The roadmap reflects a balance between realism, clarity, and usability: each step extends the tool while keeping its scope transparent and focused.

+

+---

+

+## **1. Network Baseline Upgrade**

+

+The first milestone is to move beyond a purely abstract latency model and introduce a more realistic network layer. Instead of only attaching a fixed RTT, AsyncFlow will account for socket capacity and per-connection memory usage at each node (servers and load balancers). This brings the simulator closer to operational limits, where resource saturation, rather than bandwidth, becomes the bottleneck.

+

+**Impact:** users will see how socket pressure and memory constraints affect latency, throughput, and error rates under different scenarios.

+

+---

+

+## **2. Richer Metrics and Visualization**

+

+Next, the focus shifts to **observability**. The simulator will expose finer metrics such as RAM queue lengths, CPU waiting times, and service durations. Visualizations will be improved with richer charts, event markers, and streamlined dashboards.

+

+**Impact:** enables clearer attribution of slowdowns whether they stem from CPU contention, memory limits, or network pressure and makes results easier to communicate.

+

+---

+

+## **3. Monte Carlo Analysis**

+

+Simulations are inherently variable. This milestone adds **multi-run Monte Carlo support**, allowing users to quantify uncertainty in latency, throughput, and utilization metrics. Results will be presented with confidence intervals and bands over time series, turning AsyncFlow into a decision-making tool rather than a single-run experiment.

+

+**Impact:** supports risk-aware capacity planning by highlighting ranges and probabilities, not just averages.

+

+---

+

+## **4. Databases and Caches**

+

+Once the core network and metric layers are mature, AsyncFlow will expand into modeling **stateful backends**. Simple but powerful abstractions for databases and caches will be introduced: connection pools, cache hit/miss dynamics, and latency distributions.

+

+**Impact:** this step unlocks realistic end-to-end scenarios, where system behavior is dominated not just by servers and edges, but by datastore capacity and caching efficiency.

+

+---

+

+## **5. Overload Policies and Resilience**

+

+With the main components in place, the simulator will introduce **control policies**: queue caps, deadlines, circuit breakers, rate limiting, and similar mechanisms. These features make it possible to test how systems protect themselves under overload, and to compare resilience strategies side by side.

+

+**Impact:** users will gain insight into not just when a system fails, but how gracefully it degrades.

+

+---

+

+## **6. Reinforcement Learning Playground**

+

+The final planned milestone is a **research-oriented playground** where AsyncFlow serves as a training and evaluation environment for intelligent load-balancing and autoscaling strategies. With a Gym-like interface, researchers can train RL agents and benchmark them against established baselines in controlled, reproducible conditions.

+

+**Impact:** bridges capacity planning with modern adaptive control, turning AsyncFlow into both an educational tool and a research testbed.

+

+---

+

+## **Vision**

+

+At each step, AsyncFlow stays true to its philosophy: **clarity over exhaustiveness, scenarios over prediction**. The roadmap builds toward a platform that is useful across three domains:

+

+* **Education**, to illustrate principles of latency, concurrency, and resilience.

+* **Pre-production planning**, to evaluate system limits before deployment.

+* **Research**, to test new algorithms and policies in a safe, transparent environment.

+

+---

+

+

diff --git a/docs/api/event-injection.md b/docs/api/event-injection.md

new file mode 100644

index 0000000..9156364

--- /dev/null

+++ b/docs/api/event-injection.md

@@ -0,0 +1,246 @@

+# EventInjection — Public API Documentation

+

+## Overview

+

+`EventInjection` declares a **time-bounded event** that affects a component in the simulation. Each event targets either a **server** or a **network edge**, and is delimited by a `start` marker and an `end` marker.

+

+Supported families (per code):

+

+* **Server availability**: `SERVER_DOWN` → `SERVER_UP`

+* **Network latency spike (deterministic offset in seconds)**: `NETWORK_SPIKE_START` → `NETWORK_SPIKE_END`

+ For network spikes, the `Start` marker carries the amplitude in seconds via `spike_s`.

+

+Strictness:

+

+* Models use `ConfigDict(extra="forbid", frozen=True)`

+ → unknown fields are rejected; instances are immutable at runtime.

+

+---

+

+## Data Model

+

+### `Start`

+

+* `kind: Literal[SERVER_DOWN, NETWORK_SPIKE_START]`

+ Event family selector.

+* `t_start: NonNegativeFloat`

+ Start time in **seconds** from simulation start; **≥ 0.0**.

+* `spike_s: PositiveFloat | None`

+ **Required** and **> 0** **only** when `kind == NETWORK_SPIKE_START`.

+ **Forbidden** (must be omitted/`None`) for any other kind.

+

+### `End`

+

+* `kind: Literal[SERVER_UP, NETWORK_SPIKE_END]`

+ Must match the start family (see invariants).

+* `t_end: PositiveFloat`

+ End time in **seconds**; **> 0.0**.

+

+### `EventInjection`

+

+* `event_id: str`

+ Unique identifier within the simulation payload.

+* `target_id: str`

+ Identifier of the affected component (server or edge) as defined in the topology.

+* `start: Start`

+ Start marker.

+* `end: End`

+ End marker.

+

+---

+

+## Validation & Invariants (as implemented)

+

+### Within `EventInjection`

+

+1. **Family coherence**

+

+ * `SERVER_DOWN` → `SERVER_UP`

+ * `NETWORK_SPIKE_START` → `NETWORK_SPIKE_END`

+ Any other pairing raises:

+

+ ```

+ The event {event_id} must have as value of kind in end {expected}

+ ```

+2. **Temporal ordering**

+

+ * `t_start < t_end` (with `t_start ≥ 0.0`, `t_end > 0.0`)

+ Error:

+

+ ```

+ The starting time for the event {event_id} must be smaller than the ending time

+ ```

+3. **Network spike parameter**

+

+ * If `start.kind == NETWORK_SPIKE_START` ⇒ `start.spike_s` **must** be provided and be a positive float (seconds).

+ Error:

+

+ ```

+ The field spike_s for the event {event_id} must be defined as a positive float (seconds)

+ ```

+ * Otherwise (`SERVER_DOWN`) ⇒ `start.spike_s` **must be omitted** / `None`.

+ Error:

+

+ ```

+ Event {event_id}: spike_s must be omitted for non-network events

+ ```

+

+### Enforced at `SimulationPayload` level

+

+4. **Unique event IDs**

+ Error:

+

+ ```

+ The id's representing different events must be unique

+ ```

+5. **Target existence & compatibility**

+

+ * For server events (`SERVER_DOWN`), `target_id` must refer to a **server**.

+ * For network spikes (`NETWORK_SPIKE_START`), `target_id` must refer to an **edge**.

+ Errors:

+

+ ```

+ The target id {target_id} related to the event {event_id} does not exist

+ ```

+

+ ```

+ The event {event_id} regarding a server does not have a compatible target id

+ ```

+

+ ```

+ The event {event_id} regarding an edge does not have a compatible target id

+ ```

+6. **Times within simulation horizon** (with `T = sim_settings.total_simulation_time`)

+

+ * `t_start >= 0.0` and `t_start <= T`

+ * `t_end <= T`

+ Errors:

+

+ ```

+ Event '{event_id}': start time t_start={t:.6f} must be >= 0.0

+ Event '{event_id}': start time t_start={t:.6f} exceeds simulation horizon T={T:.6f}

+ Event '{event_id}': end time t_end={t:.6f} exceeds simulation horizon T={T:.6f}

+ ```

+7. **Global liveness rule (servers)**

+ The payload is rejected if **all servers are down at the same moment**.

+ Implementation detail: the timeline is ordered so that, at identical timestamps, **`END` is processed before `START`** to avoid transient all-down states.

+ Error:

+

+ ```

+ At time {time:.6f} all servers are down; keep at least one up

+ ```

+

+---

+

+## Runtime Semantics (summary)

+

+* **Server events**: the targeted server is unavailable between the start and end markers; the system enforces that at least one server remains up at all times.

+* **Network spike events**: the targeted edge’s latency sampler is deterministically **shifted by `spike_s` seconds** during the event window (additive congestion model). The underlying distribution is not reshaped—samples are translated by a constant offset.

+

+*(This reflects the agreed model: deterministic additive offset on edges.)*

+

+---

+

+## Units & Precision

+

+* All times and offsets are in **seconds** (floating-point).

+* Provide values with the precision your simulator supports; microsecond-level precision is acceptable if needed.

+

+---

+

+## Authoring Guidelines

+

+* **Do not include `spike_s`** for non-network events.

+* Use **stable, meaningful `event_id`** values for auditability.

+* Keep events within the **simulation horizon**.

+* When multiple markers share the same timestamp, rely on the engine’s **END-before-START** ordering for determinism.

+

+---

+

+## Examples

+

+### 1) Valid — Server maintenance window

+

+```yaml

+event_id: ev-maint-001

+target_id: srv-1

+start: { kind: SERVER_DOWN, t_start: 120.0 }

+end: { kind: SERVER_UP, t_end: 240.0 }

+```

+

+### 2) Valid — Network spike on an edge (+8 ms)

+

+```yaml

+event_id: ev-spike-008ms

+target_id: edge-12

+start: { kind: NETWORK_SPIKE_START, t_start: 10.0, spike_s: 0.008 }

+end: { kind: NETWORK_SPIKE_END, t_end: 25.0 }

+```

+

+### 3) Invalid — Missing `spike_s` for a network spike

+

+```yaml

+event_id: ev-missing-spike

+target_id: edge-5

+start: { kind: NETWORK_SPIKE_START, t_start: 5.0 }

+end: { kind: NETWORK_SPIKE_END, t_end: 15.0 }

+```

+

+Error:

+

+```

+The field spike_s for the event ev-missing-spike must be defined as a positive float (seconds)

+```

+

+### 4) Invalid — `spike_s` present for a server event

+

+```yaml

+event_id: ev-bad-spike

+target_id: srv-2

+start: { kind: SERVER_DOWN, t_start: 50.0, spike_s: 0.005 }

+end: { kind: SERVER_UP, t_end: 60.0 }

+```

+

+Error:

+

+```

+Event ev-bad-spike: spike_s must be omitted for non-network events

+```

+

+### 5) Invalid — Mismatched families

+

+```yaml

+event_id: ev-bad-kinds

+target_id: edge-1

+start: { kind: NETWORK_SPIKE_START, t_start: 5.0, spike_s: 0.010 }

+end: { kind: SERVER_UP, t_end: 15.0 }

+```

+

+Error:

+

+```

+The event ev-bad-kinds must have as value of kind in end NETWORK_SPIKE_END

+```

+

+### 6) Invalid — Start not before End

+

+```yaml

+event_id: ev-bad-time

+target_id: srv-2

+start: { kind: SERVER_DOWN, t_start: 300.0 }

+end: { kind: SERVER_UP, t_end: 300.0 }

+```

+

+Error:

+

+```

+The starting time for the event ev-bad-time must be smaller than the ending time

+```

+

+---

+

+## Notes for Consumers

+

+* The schema is **strict**: misspelled fields (e.g., `t_strat`) are rejected.

+* The engine may combine multiple active network spikes on the same edge by **summing** their `spike_s` values while they overlap (handled by runtime bookkeeping).

+* This document describes exactly what is present in the provided code and validators; no additional fields or OpenAPI metadata are assumed.

diff --git a/docs/internals/edges-events-injection.md b/docs/internals/edges-events-injection.md

new file mode 100644

index 0000000..210d190

--- /dev/null

+++ b/docs/internals/edges-events-injection.md

@@ -0,0 +1,277 @@

+# Edge Event Injection: Architecture & Operations

+

+This document explains how **edge-level events** (e.g., deterministic latency spikes) are modeled, centralized, and injected into the simulation. It covers:

+

+* Data model (start/end markers & validation)

+* The **central event runtime** (timeline, cumulative offsets, live adapters)

+* How **SimulationRunner** wires everything

+* How **EdgeRuntime** consumes the adapters during delivery

+* Ordering, correctness guarantees, and trade-offs

+* Extension points and maintenance tips

+

+---

+

+## 1) Conceptual Model

+

+### What’s an “edge event”?

+

+An edge event is a **time-bounded effect** applied to a specific network edge (link). Today we support **latency spikes**: while the event is active, the edge’s transit time is increased by a fixed offset (`spike_s`) in seconds.

+

+### Event markers

+

+Events are defined with two **markers**:

+

+* `Start` (`kind` in `{NETWORK_SPIKE_START, SERVER_DOWN}`)

+* `End` (`kind` in `{NETWORK_SPIKE_END, SERVER_UP}`)

+

+Validation guarantees:

+

+* **Kind pairing** is coherent (e.g., `NETWORK_SPIKE_START` ↔ `NETWORK_SPIKE_END`).

+* **Time ordering**: `t_start < t_end`.

+* For network spike events, **`spike_s` is required** and positive.

+

+> These guarantees are enforced by the Pydantic models and their `model_validator`s in the schema layer, *before* runtime.

+

+---

+

+## 2) Centralized Event Registry: `EventInjectionRuntime`

+

+`EventInjectionRuntime` centralizes all event logic and exposes **live read-only views** (adapters) to edge actors.

+

+### Responsibilities & Data

+

+* **Input**:

+

+ * `events: list[EventInjection] | None`

+ * `edges: list[Edge]`, `servers: list[Server]`, `env: simpy.Environment`

+* **Internal state**:

+

+ * `self._edges_events: dict[event_id, dict[edge_id, float]]`

+ Mapping from event → edge → spike amplitude (`spike_s`).

+ This allows multiple events per edge and distinguishes overlapping events.

+ * `self._edges_spike: dict[edge_id, float]`

+ **Cumulative** spike currently active per edge (updated at runtime).

+ * `self._edges_affected: set[edge_id]`

+ All edges that are ever impacted by at least one event.

+ * `self._edges_timeline: list[tuple[time, event_id, edge_id, mark]]`

+ Absolute timestamps (`time`) with `mark ∈ {start, end}` for **edges**.

+ * (We also construct a server timeline, reserved for future server-side effects.)

+

+> If `events` is `None` or empty, the runtime initializes to empty sets/maps and **does nothing** when started.

+

+### Build step (performed in `__init__`)

+

+1. Early return if there are no events (keeps empty adapters).

+2. Partition events by **target type** (edge vs server).

+3. For each **edge** event:

+

+ * Record `spike_s` in `self._edges_events[event_id][edge_id]`.

+ * Append `(t_start, event_id, edge_id, start)` and `(t_end, event_id, edge_id, end)` to the **edge timeline**.

+ * Add `edge_id` to `self._edges_affected`.

+4. **Sort** timelines by `(time, mark == start, event_id, edge_id)` so that at equal time, **end** is processed **before start**.

+ (Because `False < True`, `end` precedes `start`.)

+

+### Runtime step (SimPy process)

+

+The coroutine `self._assign_edges_spike()`:

+

+* Iterates the ordered timeline of **absolute** timestamps.

+* Converts absolute `t_event` to relative waits via `dt = t_event - last_t`.

+* After waiting `dt`, applies the state change:

+

+ * On **start**: `edges_spike[edge_id] += delta`

+ * On **end**: `edges_spike[edge_id] -= delta`

+

+This gives a continuously updated, **cumulative** spike per edge, enabling **overlapping events** to stack linearly.

+

+### Public adapters (read-only views)

+

+* `edges_spike: dict[str, float]` — current cumulative spike per edge.

+* `edges_affected: set[str]` — edges that may ever be affected.

+

+These are **shared** with `EdgeRuntime` instances, so updates made by the central process are immediately visible to the edges **without any signaling or copying**.

+

+---

+

+## 3) Wiring & Lifecycle: `SimulationRunner`

+

+`SimulationRunner` orchestrates creation, wiring, and startup order.

+

+### Build phase

+

+1. Build node runtimes (request generator, client, servers, optional load-balancer).

+2. Build **edge runtimes** (`EdgeRuntime`) with their target boxes (stores).

+3. **Build events**:

+

+ * If `simulation_input.events` is empty/None → **skip** (no process, no adapters).

+ * Else:

+

+ * Construct **one** `EventInjectionRuntime`.

+ * Extract adapters: `edges_affected`, `edges_spike`.

+ * Attach these **same objects** to **every** `EdgeRuntime`.

+ (EdgeRuntime performs a membership check; harmless for unaffected edges.)

+

+> We deliberately attach adapters to all edges for simplicity. This is O(1) memory for references, and O(1) runtime per delivery (one membership + dict lookup). If desired, the runner could pass adapters **only** to affected edges—this would save a branch per delivery at the cost of more conditional wiring logic.

+

+### Start phase (order matters)

+

+* `EventInjectionRuntime.start()` — **first**

+ Ensures that the spike timeline is active before edges start delivering; the first edge transport will see the correct offset when due.

+* Start all other actors.

+* Start the metric collector (RAM / queues / connections snapshots).

+* `env.run(until=total_simulation_time)` to advance the clock.

+

+### Why this order?

+

+* Prevents race conditions where the first edge message observes stale (`0.0`) spike at time ≈ `t_start`.

+* Keeps the architecture deterministic and easy to reason about.

+

+---

+

+## 4) Edge Consumption: `EdgeRuntime`

+

+Each edge has:

+

+* `edges_affected: Container[str] | None`

+* `edges_spike: Mapping[str, float] | None`

+

+During `_deliver(state)`:

+

+1. Sample base latency from the configured RV.

+2. If adapters are present **and** `edge_id ∈ edges_affected`:

+

+ * Read `spike = edges_spike.get(edge_id, 0.0)`

+ * `effective = base_latency + spike`

+3. `yield env.timeout(effective)`

+

+No further coordination required: the **central** process updates `edges_spike` as time advances, so each delivery observes the **current** spike.

+

+---

+

+## 5) Correctness & Guarantees

+

+* **Temporal correctness**: Absolute → relative time conversion (`dt = t_event - last_t`) ensures the process applies changes at the exact timestamps. Sorting ensures **END** is processed before **START** when times coincide, so zero-length events won’t “leak” positive offset.

+* **Coherence**: Pydantic validators enforce event pairing and time ordering.

+* **Immutability**: Marker models are frozen; unknown fields are forbidden.

+* **Overlap**: Multiple events on the same edge stack linearly (`+=`/`-=`).

+

+---

+

+## 6) Performance & Trade-offs

+

+### Centralized vs Distributed

+

+* **Chosen**: one central `EventInjectionRuntime` with live adapters.

+

+ * **Pros**: simple mental model; single source of truth; O(1) read for edges; no per-edge coroutines; minimal memory traffic.

+ * **Cons**: single process to maintain (but it’s lightweight); edges branch on membership.

+

+* **Alternative A**: deliver the **full** event runtime object to each edge.

+

+ * **Cons**: wider API surface; tighter coupling; harder to evolve; edges would get capabilities they don’t need (SRP violation).

+

+* **Alternative B**: per-edge local event processes.

+

+ * **Cons**: one coroutine per edge (N processes), more scheduler overhead, duplicated logic & sorting.

+

+### Passing adapters to *all* edges vs only affected edges

+

+* **Chosen**: pass to all edges.

+

+ * **Pros**: wiring stays uniform; negligible memory; O(1) branch in `_deliver`.

+ * **Cons**: trivial per-delivery branch even for unaffected edges.

+* **Alternative**: only affected edges receive adapters.

+

+ * **Pros**: removes one branch at delivery.

+ * **Cons**: more conditional wiring, more moving parts for little gain.

+

+---

+

+## 7) Sequence Overview

+

+```

+SimulationRunner.run()

+ ├─ _build_rqs_generator()

+ ├─ _build_client()

+ ├─ _build_servers()

+ ├─ _build_load_balancer()

+ ├─ _build_edges()

+ ├─ _build_events()

+ │ └─ EventInjectionRuntime(...):

+ │ - build _edges_events, _edges_affected

+ │ - build & sort _edges_timeline

+ │

+ ├─ _start_events()

+ │ └─ start _assign_edges_spike() (central timeline process)

+ │

+ ├─ _start_all_processes() (edges, client, servers, etc.)

+ ├─ _start_metric_collector()

+ └─ env.run(until = T)

+```

+

+During `EdgeRuntime._deliver()`:

+

+```

+base = sample(latency_rv)

+if adapters_present and edge_id in edges_affected:

+ spike = edges_spike.get(edge_id, 0.0)

+ effective = base + spike

+else:

+ effective = base

+yield env.timeout(effective)

+```

+

+---

+

+## 8) Extensibility

+

+* **Other edge effects**: add new event kinds and store per-edge state (e.g., drop-rate bumps) in `_edges_events` and update logic in `_assign_edges_spike()`.

+* **Server outages**: server timeline is already scaffolded; add a server process to open/close resources (e.g., capacity=0 during downtime).

+* **Non-deterministic spikes**: swap `float` `spike_s` for a small sampler (callable) and apply the sampled value at each **start**, or at each **delivery** (define semantics).

+* **Per-edge filtering in runner** (micro-optimization): only wire adapters to affected edges.

+

+---

+

+## 9) Operational Notes & Best Practices

+

+* **Start order** matters: always start `EventInjectionRuntime` *before* edges.

+* **Adapters must be shared** (not copied) to preserve live updates.

+* **Keep `edges_spike` additive** (no negative values unless you introduce “negative spikes” intentionally).

+* **Time units**: seconds everywhere; keep it consistent with sampling.

+* **Validation first**: reject malformed events early (schema layer), *not* in runtime.

+

+---

+

+## 10) Glossary

+

+* **Adapter**: a minimal, read-only view (e.g., `Mapping[str, float]`, `Container[str]`) handed to edges to observe central state without owning it.

+* **Timeline**: sorted list of `(time, event_id, edge_id, mark)` where `mark ∈ {start, end}`.

+* **Spike**: deterministic latency offset to be added to the sampled base latency.

+

+---

+

+## 11) Example (end-to-end)

+

+**YAML (conceptual)**

+

+```yaml

+events:

+ - event_id: ev-spike-1

+ target_id: edge-42

+ start: { kind: NETWORK_SPIKE_START, t_start: 12.0, spike_s: 0.050 }

+ end: { kind: NETWORK_SPIKE_END, t_end: 18.0 }

+```

+

+**Runtime effect**

+

+* From `t ∈ [12, 18)`, `edge-42` adds **+50 ms** to its sampled latency.

+* Overlapping events stack: `edges_spike["edge-42"]` is the **sum** of active spikes.

+

+---

+

+## 12) Summary

+

+* We centralize event logic in **`EventInjectionRuntime`** and expose **live adapters** to edges.

+* Edges read **current cumulative spikes** at delivery time—**no coupling** and **no extra processes per edge**.

+* The runner keeps the flow simple and deterministic: **build → wire → start events → start actors → run**.

+* The architecture is **extensible**, **testable**, and **performant** for realistic workloads.

diff --git a/docs/internals/server-events-injection.md b/docs/internals/server-events-injection.md

new file mode 100644

index 0000000..8bc7165

--- /dev/null

+++ b/docs/internals/server-events-injection.md

@@ -0,0 +1,203 @@

+# Server Event Injection — End-to-End Design & Rationale

+

+This document explains how **server-level events** (planned outages) are modeled and executed across all layers of the simulation stack. It complements the Edge Event Injection design.

+

+---

+

+## 1) Goals

+

+* Hide outage semantics from the load balancer algorithms: **they see only the current set of edges**.

+* Keep **runtime cost O(1)** per transition (down/up).

+* Preserve determinism and fairness when servers rejoin.

+* Centralize event logic; avoid per-server coroutines and ad-hoc flags.

+

+---

+

+## 2) Participants (layers)

+

+* **Schema / Validation (Pydantic)**: validates `EventInjection` objects (pairing, order, target existence).

+* **SimulationRunner**: builds runtimes; owns the **single shared** `OrderedDict[str, EdgeRuntime]` used by the LB (`_lb_out_edges`).

+* **EventInjectionRuntime**: central event engine; builds the **server timeline** and a **reverse index** `server_id → (edge_id, EdgeRuntime)`; mutates `_lb_out_edges` at runtime.

+* **LoadBalancerRuntime**: reads `_lb_out_edges` to select the next edge (RR / least-connections). **No outage logic inside.**

+* **EdgeRuntime (LB→Server edges)**: unaffected by server outages; disappears from the LB’s choice set while the server is down.

+* **ServerRuntime**: unaffected structurally; no extra checks for “am I down?”.

+* **SimPy Environment**: schedules the central outage coroutine.

+* **Metric Collector**: optional; observes effects but is not part of the mechanism.

+

+---

+

+## 3) Data & Structures

+

+* **`_lb_out_edges: OrderedDict[str, EdgeRuntime]`**

+ Single shared map of **currently routable** LB→server edges.

+

+ * Removal/Insertion/Move are **O(1)**.

+ * Aliased into both `LoadBalancerRuntime` and `EventInjectionRuntime`.

+

+* **`_servers_timeline: list[tuple[time, event_id, server_id, mark]]`**

+ Absolute timestamps, sorted by `(time, mark == start, event_id, server_id)` so **END precedes START** when equal.

+

+* **`_edge_by_server: dict[str, tuple[str, EdgeRuntime]]`**

+ Reverse index built from `_lb_out_edges` at initialization.

+

+---

+

+## 4) Build-time Responsibilities

+

+* **SimulationRunner**

+

+ 1. Build LB and pass it `_lb_out_edges` (empty at first).

+ 2. Build edges; when wiring LB→Server, insert that edge into `_lb_out_edges`.

+ 3. Build `EventInjectionRuntime`, passing:

+

+ * validated `events`

+ * `servers` and `edges` (IDs for sanity checks)

+ * aliased `_lb_out_edges`

+

+* **EventInjectionRuntime.**init****

+

+ * Partition events; construct ` _servers_timeline`.

+ * Sort timeline (END before START at equal `time`).

+ * Build ` _edge_by_server` by scanning `_lb_out_edges` (edge target → server\_id).

+

+---

+

+## 5) Run-time Responsibilities

+

+* **EventInjectionRuntime.\_assign\_server\_state()**

+

+ * Iterate the server timeline with absolute→relative waits: `dt = t_event − last_t`, then `yield env.timeout(dt)`.

+ * On `SERVER_DOWN` (START):

+ `lb_out_edges.pop(edge_id, None)`

+ * On `SERVER_UP` (END):

+

+ ```

+ lb_out_edges[edge_id] = edge_runtime

+ lb_out_edges.move_to_end(edge_id) # fairness on rejoin

+ ```

+

+* **LoadBalancerRuntime**

+

+ * For each request, read `_lb_out_edges` and apply the chosen algorithm. If a server is down, its edge simply **isn’t there**.

+

+* **EdgeRuntime & ServerRuntime**

+

+ * No additional work: outage is reflected entirely by presence/absence of the LB→server edge.

+

+---

+

+## 6) Sequence Overview (all layers)

+

+```

+User YAML ──► Schema/Validation

+ │ (pairing, ordering, target checks)

+ ▼

+ SimulationRunner

+ │ _lb_out_edges: OrderedDict[...] (shared object)

+ │ build LB, edges (LB→S inserted into _lb_out_edges)

+ │ build EventInjectionRuntime(..., lb_out_edges=alias)

+ │

+ ├─ _start_events()

+ │ └─ EventInjectionRuntime.start()

+ │ └─ start _assign_server_state() (SimPy proc)

+ │

+ ├─ _start_all_processes()

+ │ ├─ LoadBalancerRuntime.start()

+ │ ├─ EdgeRuntime.start() (if any process)

+ │ └─ ServerRuntime.start()

+ │

+ └─ env.run(until=T)

+

+Runtime progression (example):

+t=5s EventInjectionRuntime: SERVER_DOWN(S1)

+ └─ _edge_by_server[S1] -> (edge-S1, edge_rt)

+ └─ _lb_out_edges.pop("edge-S1") # O(1)

+

+t=7s LoadBalancerRuntime picks next edge

+ └─ "edge-S1" not present → never selected

+

+t=10s EventInjectionRuntime: SERVER_UP(S1)

+ └─ _lb_out_edges["edge-S1"] = edge_rt # O(1)

+ └─ _lb_out_edges.move_to_end("edge-S1") # fairness

+

+t>10s LoadBalancerRuntime now sees edge-S1 again

+ └─ RR/LC proceeds as usual

+```

+

+---

+

+## 7) Correctness & Determinism

+

+* **Exact timing**: absolute→relative conversion ensures transitions happen at precise timestamps.

+* **END before START** at identical times prevents spuriously “stuck down” outcomes for back-to-back events.

+* **Fair rejoin**: `move_to_end` reintroduces the server in a predictable RR position (least recently used).

+ (Least-connections remains deterministic because the edge reappears with its current connection count.)

+* **Availability constraint**: schema can enforce “at least one server up,” avoiding degenerate LB states.

+

+---

+

+## 8) Design Choices & Rationale

+

+* **Mutate the edge set, not the algorithm**

+ Removing/adding the LB→server edge keeps LB code **pure** and reusable; no conditional branches for “down servers”.

+* **Single shared `OrderedDict`**

+

+ * O(1) for remove/insert/rotate.

+ * Aliasing between LB and injector removes the need for signaling or copies.

+* **Centralized coroutine**

+ One SimPy process for server outages scales better than per-server processes; simpler mental model.

+* **Reverse index `server_id → edge`**

+ Constant-time resolution; avoids coupling servers to LB or vice-versa.

+

+---

+

+## 9) Performance

+

+* **Build**:

+

+ * Timeline construction: O(#server-events)

+ * Sort: O(#server-events · log #server-events)

+* **Run**:

+

+ * Each transition: O(1) (pop/set/move)

+ * LB pick: unchanged (RR O(1), LC O(n))

+* **Space**:

+

+ * Reverse index: O(#servers with LB edges)

+ * Timeline: O(#server-events)

+

+---

+

+## 10) Failure Modes & Guards

+

+* Unknown server in an event → rejected by schema (or ignored with a log if you prefer leniency).

+* Concurrent DOWN/UP at same timestamp → resolved by timeline ordering (END first).

+* All servers down → disallowed by schema (or handled by LB guard if you opt in later).

+* Missing reverse mapping (no LB) → injector safely no-ops.

+

+---

+

+## 11) Extensibility

+

+* **Multiple LB instances**: make the reverse index `(lb_id, server_id) → edge_id`, or pass per-LB `lb_out_edges`.

+* **Partial capacity**: instead of removing edges, attach capacity/weight and have the LB respect it (requires extending LB policy).

+* **Dynamic scale-out**: adding new servers at runtime is the same operation as “UP” with a previously unseen edge.

+

+---

+

+## 12) Operational Notes

+

+* Start the **event coroutine** before LB to avoid off-by-one delivery at `t_start`.

+* Keep `_lb_out_edges` the **only source of truth** for routable edges.

+* If you also use edge-level spikes, both coroutines can run concurrently; they are independent.

+

+---

+

+## 13) Summary

+

+We model server outages by **mutating the LB’s live edge set** via a centralized event runtime:

+

+* **O(1)** down/up transitions by `pop`/`set` on a shared `OrderedDict`.

+* LB algorithms remain untouched and deterministic.

+* A single SimPy coroutine drives the timeline; a reverse index resolves targets in constant time.

+* The design is minimal, performant, and easy to extend to richer failure models.

diff --git a/examples/builder_input/event_injection/lb_two_servers.py b/examples/builder_input/event_injection/lb_two_servers.py

new file mode 100644

index 0000000..8af411f

--- /dev/null

+++ b/examples/builder_input/event_injection/lb_two_servers.py

@@ -0,0 +1,240 @@

+"""

+AsyncFlow builder example — LB + 2 servers (medium load) with events.

+

+Topology

+ generator → client → LB → srv-1

+ └→ srv-2

+ srv-1 → client

+ srv-2 → client

+

+Workload

+ ~40 rps (120 users × 20 req/min ÷ 60).

+

+Events

+ - Edge spike on client→LB (+15 ms) @ [100s, 160s]

+ - srv-1 outage @ [180s, 240s]

+ - Edge spike on LB→srv-2 (+20 ms) @ [300s, 360s]

+ - srv-2 outage @ [360s, 420s]

+ - Edge spike on gen→client (+10 ms) @ [480s, 540s]

+

+Outputs

+ PNGs saved under `lb_two_servers_events_plots/` next to this script:

+ - dashboard (latency + throughput)

+ - per-server plots: ready queue, I/O queue, RAM

+"""

+

+from __future__ import annotations

+

+from pathlib import Path

+

+import matplotlib.pyplot as plt

+import simpy

+

+# Public builder API

+from asyncflow import AsyncFlow

+from asyncflow.components import Client, Server, Edge, Endpoint, LoadBalancer

+from asyncflow.settings import SimulationSettings

+from asyncflow.workload import RqsGenerator

+

+# Runner + Analyzer

+from asyncflow.metrics.analyzer import ResultsAnalyzer

+from asyncflow.runtime.simulation_runner import SimulationRunner

+

+

+def build_and_run() -> ResultsAnalyzer:

+ """Build the scenario via the builder and run the simulation."""

+ # ── Workload (generator) ───────────────────────────────────────────────

+ generator = RqsGenerator(

+ id="rqs-1",

+ avg_active_users={"mean": 120},

+ avg_request_per_minute_per_user={"mean": 20},

+ user_sampling_window=60,

+ )

+

+ # ── Client ────────────────────────────────────────────────────────────

+ client = Client(id="client-1")

+

+ # ── Servers (identical endpoint: CPU 2ms → RAM 128MB → IO 12ms) ───────

+ endpoint = Endpoint(

+ endpoint_name="/api",

+ steps=[

+ {"kind": "initial_parsing", "step_operation": {"cpu_time": 0.002}},

+ {"kind": "ram", "step_operation": {"necessary_ram": 128}},

+ {"kind": "io_wait", "step_operation": {"io_waiting_time": 0.012}},

+ ],

+ )

+ srv1 = Server(

+ id="srv-1",

+ server_resources={"cpu_cores": 1, "ram_mb": 2048},

+ endpoints=[endpoint],

+ )

+ srv2 = Server(

+ id="srv-2",

+ server_resources={"cpu_cores": 1, "ram_mb": 2048},

+ endpoints=[endpoint],

+ )

+

+ # ── Load Balancer ─────────────────────────────────────────────────────

+ lb = LoadBalancer(

+ id="lb-1",

+ algorithms="round_robin",

+ server_covered=["srv-1", "srv-2"],

+ )

+

+ # ── Edges (exponential latency) ───────────────────────────────────────

+ e_gen_client = Edge(

+ id="gen-client",

+ source="rqs-1",

+ target="client-1",

+ latency={"mean": 0.003, "distribution": "exponential"},

+ )

+ e_client_lb = Edge(

+ id="client-lb",

+ source="client-1",

+ target="lb-1",

+ latency={"mean": 0.002, "distribution": "exponential"},

+ )

+ e_lb_srv1 = Edge(

+ id="lb-srv1",

+ source="lb-1",

+ target="srv-1",

+ latency={"mean": 0.002, "distribution": "exponential"},

+ )

+ e_lb_srv2 = Edge(

+ id="lb-srv2",

+ source="lb-1",

+ target="srv-2",

+ latency={"mean": 0.002, "distribution": "exponential"},

+ )

+ e_srv1_client = Edge(

+ id="srv1-client",

+ source="srv-1",

+ target="client-1",

+ latency={"mean": 0.003, "distribution": "exponential"},

+ )

+ e_srv2_client = Edge(

+ id="srv2-client",

+ source="srv-2",

+ target="client-1",

+ latency={"mean": 0.003, "distribution": "exponential"},

+ )

+

+ # ── Simulation settings ───────────────────────────────────────────────

+ settings = SimulationSettings(

+ total_simulation_time=600,

+ sample_period_s=0.05,

+ enabled_sample_metrics=[

+ "ready_queue_len",

+ "event_loop_io_sleep",

+ "ram_in_use",

+ "edge_concurrent_connection",

+ ],

+ enabled_event_metrics=["rqs_clock"],

+ )

+

+ # ── Assemble payload + events via builder ─────────────────────────────

+ payload = (

+ AsyncFlow()

+ .add_generator(generator)

+ .add_client(client)

+ .add_servers(srv1, srv2)

+ .add_load_balancer(lb)

+ .add_edges(

+ e_gen_client,

+ e_client_lb,

+ e_lb_srv1,

+ e_lb_srv2,

+ e_srv1_client,

+ e_srv2_client,

+ )

+ .add_simulation_settings(settings)

+ # Events

+ .add_network_spike(

+ event_id="ev-spike-1",

+ edge_id="client-lb",

+ t_start=100.0,

+ t_end=160.0,

+ spike_s=0.015, # +15 ms

+ )

+ .add_server_outage(

+ event_id="ev-srv1-down",

+ server_id="srv-1",

+ t_start=180.0,

+ t_end=240.0,

+ )

+ .add_network_spike(

+ event_id="ev-spike-2",

+ edge_id="lb-srv2",

+ t_start=300.0,

+ t_end=360.0,

+ spike_s=0.020, # +20 ms

+ )

+ .add_server_outage(

+ event_id="ev-srv2-down",

+ server_id="srv-2",

+ t_start=360.0,

+ t_end=420.0,

+ )

+ .add_network_spike(

+ event_id="ev-spike-3",

+ edge_id="gen-client",

+ t_start=480.0,

+ t_end=540.0,

+ spike_s=0.010, # +10 ms

+ )

+ .build_payload()

+ )

+

+ # ── Run ───────────────────────────────────────────────────────────────

+ env = simpy.Environment()

+ runner = SimulationRunner(env=env, simulation_input=payload)

+ results: ResultsAnalyzer = runner.run()

+ return results

+

+

+def main() -> None:

+ res = build_and_run()

+ print(res.format_latency_stats())

+

+ # Output directory next to this script

+ script_dir = Path(__file__).parent

+ out_dir = script_dir / "lb_two_servers_events_plots"

+ out_dir.mkdir(parents=True, exist_ok=True)

+

+ # Dashboard (latency + throughput)

+ fig, axes = plt.subplots(1, 2, figsize=(14, 5))

+ res.plot_base_dashboard(axes[0], axes[1])

+ fig.tight_layout()

+ dash_path = out_dir / "lb_two_servers_events_dashboard.png"

+ fig.savefig(dash_path)

+ print(f"Saved: {dash_path}")

+

+ # Per-server plots

+ for sid in res.list_server_ids():

+ # Ready queue

+ f1, a1 = plt.subplots(figsize=(10, 5))

+ res.plot_single_server_ready_queue(a1, sid)

+ f1.tight_layout()

+ p1 = out_dir / f"lb_two_servers_events_ready_queue_{sid}.png"

+ f1.savefig(p1)

+ print(f"Saved: {p1}")

+

+ # I/O queue

+ f2, a2 = plt.subplots(figsize=(10, 5))

+ res.plot_single_server_io_queue(a2, sid)

+ f2.tight_layout()

+ p2 = out_dir / f"lb_two_servers_events_io_queue_{sid}.png"

+ f2.savefig(p2)

+ print(f"Saved: {p2}")

+

+ # RAM usage

+ f3, a3 = plt.subplots(figsize=(10, 5))

+ res.plot_single_server_ram(a3, sid)

+ f3.tight_layout()

+ p3 = out_dir / f"lb_two_servers_events_ram_{sid}.png"

+ f3.savefig(p3)

+ print(f"Saved: {p3}")

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_dashboard.png b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_dashboard.png

new file mode 100644

index 0000000..2177ffb

Binary files /dev/null and b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_dashboard.png differ

diff --git a/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_io_queue_srv-1.png b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_io_queue_srv-1.png

new file mode 100644

index 0000000..9c7ffba

Binary files /dev/null and b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_io_queue_srv-1.png differ

diff --git a/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_io_queue_srv-2.png b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_io_queue_srv-2.png

new file mode 100644

index 0000000..678c839

Binary files /dev/null and b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_io_queue_srv-2.png differ

diff --git a/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ram_srv-1.png b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ram_srv-1.png

new file mode 100644

index 0000000..c8102f8

Binary files /dev/null and b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ram_srv-1.png differ

diff --git a/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ram_srv-2.png b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ram_srv-2.png

new file mode 100644

index 0000000..ddf4a20

Binary files /dev/null and b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ram_srv-2.png differ

diff --git a/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ready_queue_srv-1.png b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ready_queue_srv-1.png

new file mode 100644

index 0000000..3464e5f

Binary files /dev/null and b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ready_queue_srv-1.png differ

diff --git a/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ready_queue_srv-2.png b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ready_queue_srv-2.png

new file mode 100644

index 0000000..cfb8c0f

Binary files /dev/null and b/examples/builder_input/event_injection/lb_two_servers_events_plots/lb_two_servers_events_ready_queue_srv-2.png differ

diff --git a/examples/builder_input/event_injection/single_server.py b/examples/builder_input/event_injection/single_server.py

new file mode 100644

index 0000000..0c514b2

--- /dev/null

+++ b/examples/builder_input/event_injection/single_server.py

@@ -0,0 +1,187 @@

+#!/usr/bin/env python3

+"""

+AsyncFlow builder example — build, run, and visualize a single-server async system

+with event injections (latency spike on edge + server outage).

+

+Topology (single server)

+ generator ──edge──> client ──edge──> server ──edge──> client

+

+Load model

+ ~100 active users, 20 requests/min each (Poisson-like aggregate).

+

+Server model

+ 1 CPU core, 2 GB RAM

+ Endpoint pipeline: CPU(1 ms) → RAM(100 MB) → I/O wait (100 ms)

+ Semantics:

+ - CPU step blocks the event loop

+ - RAM step holds a working set until request completion

+ - I/O step is non-blocking (event-loop friendly)

+

+Network model

+ Each edge has exponential latency with mean 3 ms.

+

+Events

+ - ev-spike-1: deterministic latency spike (+20 ms) on client→server edge,

+ active from t=120s to t=240s

+ - ev-outage-1: server outage for srv-1 from t=300s to t=360s

+

+Outputs

+ - Prints latency statistics to stdout

+ - Saves PNGs in `single_server_plot/` next to this script:

+ * dashboard (latency + throughput)

+ * per-server plots (ready queue, I/O queue, RAM)

+"""

+

+from __future__ import annotations

+

+from pathlib import Path

+import simpy

+import matplotlib.pyplot as plt

+

+# Public AsyncFlow API (builder)

+from asyncflow import AsyncFlow

+from asyncflow.components import Client, Server, Edge, Endpoint

+from asyncflow.settings import SimulationSettings

+from asyncflow.workload import RqsGenerator

+

+# Runner + Analyzer

+from asyncflow.runtime.simulation_runner import SimulationRunner

+from asyncflow.metrics.analyzer import ResultsAnalyzer

+

+

+def build_and_run() -> ResultsAnalyzer:

+ """Build the scenario via the Pythonic builder and run the simulation."""

+ # Workload (generator)

+ generator = RqsGenerator(

+ id="rqs-1",

+ avg_active_users={"mean": 100},

+ avg_request_per_minute_per_user={"mean": 20},

+ user_sampling_window=60,

+ )

+

+ # Client

+ client = Client(id="client-1")

+

+ # Server + endpoint (CPU → RAM → I/O)

+ endpoint = Endpoint(

+ endpoint_name="ep-1",

+ probability=1.0,

+ steps=[

+ {"kind": "initial_parsing", "step_operation": {"cpu_time": 0.001}}, # 1 ms

+ {"kind": "ram", "step_operation": {"necessary_ram": 100}}, # 100 MB

+ {"kind": "io_wait", "step_operation": {"io_waiting_time": 0.100}}, # 100 ms

+ ],

+ )

+ server = Server(

+ id="srv-1",

+ server_resources={"cpu_cores": 1, "ram_mb": 2048},

+ endpoints=[endpoint],

+ )

+

+ # Network edges (3 ms mean, exponential)

+ e_gen_client = Edge(

+ id="gen-client",

+ source="rqs-1",

+ target="client-1",

+ latency={"mean": 0.003, "distribution": "exponential"},

+ )

+ e_client_srv = Edge(

+ id="client-srv",

+ source="client-1",

+ target="srv-1",

+ latency={"mean": 0.003, "distribution": "exponential"},

+ )

+ e_srv_client = Edge(

+ id="srv-client",

+ source="srv-1",

+ target="client-1",

+ latency={"mean": 0.003, "distribution": "exponential"},

+ )

+

+ # Simulation settings

+ settings = SimulationSettings(

+ total_simulation_time=500,

+ sample_period_s=0.05,

+ enabled_sample_metrics=[

+ "ready_queue_len",

+ "event_loop_io_sleep",

+ "ram_in_use",

+ "edge_concurrent_connection",

+ ],

+ enabled_event_metrics=["rqs_clock"],

+ )

+

+ # Assemble payload with events

+ payload = (

+ AsyncFlow()

+ .add_generator(generator)

+ .add_client(client)

+ .add_servers(server)

+ .add_edges(e_gen_client, e_client_srv, e_srv_client)

+ .add_simulation_settings(settings)

+ # Events

+ .add_network_spike(

+ event_id="ev-spike-1",

+ edge_id="client-srv",

+ t_start=120.0,

+ t_end=240.0,

+ spike_s=0.020, # 20 ms spike

+ )

+ ).build_payload()

+

+ # Run

+ env = simpy.Environment()

+ runner = SimulationRunner(env=env, simulation_input=payload)

+ results: ResultsAnalyzer = runner.run()

+ return results

+

+

+def main() -> None:

+ # Build & run

+ res = build_and_run()

+

+ # Print concise latency summary

+ print(res.format_latency_stats())

+

+ # Prepare output dir

+ script_dir = Path(__file__).parent

+ out_dir = script_dir / "single_server_plot"

+ out_dir.mkdir(parents=True, exist_ok=True)

+

+ # Dashboard (latency + throughput)

+ fig, axes = plt.subplots(1, 2, figsize=(14, 5))

+ res.plot_base_dashboard(axes[0], axes[1])

+ fig.tight_layout()

+ dash_path = out_dir / "event_inj_single_server_dashboard.png"

+ fig.savefig(dash_path)

+ print(f"Saved: {dash_path}")

+

+ # Per-server plots

+ for sid in res.list_server_ids():

+ # Ready queue

+ f1, a1 = plt.subplots(figsize=(10, 5))

+ res.plot_single_server_ready_queue(a1, sid)

+ f1.tight_layout()

+ p1 = out_dir / f"event_inj_single_server_ready_queue_{sid}.png"

+ f1.savefig(p1)

+ print(f"Saved: {p1}")

+

+ # I/O queue

+ f2, a2 = plt.subplots(figsize=(10, 5))

+ res.plot_single_server_io_queue(a2, sid)

+ f2.tight_layout()

+ p2 = out_dir / f"event_inj_single_server_io_queue_{sid}.png"

+ f2.savefig(p2)

+ print(f"Saved: {p2}")

+

+ # RAM usage

+ f3, a3 = plt.subplots(figsize=(10, 5))

+ res.plot_single_server_ram(a3, sid)

+ f3.tight_layout()

+ p3 = out_dir / f"event_inj_single_server_ram_{sid}.png"

+ f3.savefig(p3)

+ print(f"Saved: {p3}")

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_dashboard.png b/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_dashboard.png

new file mode 100644

index 0000000..1a81453

Binary files /dev/null and b/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_dashboard.png differ

diff --git a/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_io_queue_srv-1.png b/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_io_queue_srv-1.png

new file mode 100644

index 0000000..ed08233

Binary files /dev/null and b/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_io_queue_srv-1.png differ

diff --git a/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_ram_srv-1.png b/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_ram_srv-1.png

new file mode 100644

index 0000000..476bd79

Binary files /dev/null and b/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_ram_srv-1.png differ

diff --git a/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_ready_queue_srv-1.png b/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_ready_queue_srv-1.png

new file mode 100644

index 0000000..a6fcf29

Binary files /dev/null and b/examples/builder_input/event_injection/single_server_plot/event_inj_single_server_ready_queue_srv-1.png differ

diff --git a/examples/builder_input/load_balancer/lb_dashboard.png b/examples/builder_input/load_balancer/lb_dashboard.png

index 4d94cfe..dd6cc80 100644

Binary files a/examples/builder_input/load_balancer/lb_dashboard.png and b/examples/builder_input/load_balancer/lb_dashboard.png differ

diff --git a/examples/builder_input/load_balancer/lb_server_srv-1_metrics.png b/examples/builder_input/load_balancer/lb_server_srv-1_metrics.png

index 1665766..d7f57e6 100644

Binary files a/examples/builder_input/load_balancer/lb_server_srv-1_metrics.png and b/examples/builder_input/load_balancer/lb_server_srv-1_metrics.png differ

diff --git a/examples/builder_input/load_balancer/lb_server_srv-2_metrics.png b/examples/builder_input/load_balancer/lb_server_srv-2_metrics.png

index cdda50f..f055ff4 100644

Binary files a/examples/builder_input/load_balancer/lb_server_srv-2_metrics.png and b/examples/builder_input/load_balancer/lb_server_srv-2_metrics.png differ

diff --git a/examples/builder_input/load_balancer/two_servers.py b/examples/builder_input/load_balancer/two_servers.py

index fb2eb35..a57d090 100644

--- a/examples/builder_input/load_balancer/two_servers.py

+++ b/examples/builder_input/load_balancer/two_servers.py

@@ -1,4 +1,3 @@

-#!/usr/bin/env python3

"""

Didactic example: AsyncFlow with a Load Balancer and two **identical** servers.

diff --git a/examples/builder_input/single_server/builder_service_plots.png b/examples/builder_input/single_server/builder_service_plots.png

index 22fc27d..31c230e 100644

Binary files a/examples/builder_input/single_server/builder_service_plots.png and b/examples/builder_input/single_server/builder_service_plots.png differ

diff --git a/examples/builder_input/single_server/single_server.py b/examples/builder_input/single_server/single_server.py

index bb54344..7fb7e99 100644

--- a/examples/builder_input/single_server/single_server.py

+++ b/examples/builder_input/single_server/single_server.py

@@ -1,4 +1,3 @@

-#!/usr/bin/env python3

"""

AsyncFlow builder example — build, run, and visualize a single-server async system.

diff --git a/examples/yaml_input/data/event_inj_lb.yml b/examples/yaml_input/data/event_inj_lb.yml

new file mode 100644

index 0000000..5d97bc8

--- /dev/null

+++ b/examples/yaml_input/data/event_inj_lb.yml

@@ -0,0 +1,102 @@

+# AsyncFlow SimulationPayload — LB + 2 servers (medium load) with events

+#

+# Topology:

+# generator → client → LB → srv-1

+# └→ srv-2

+# srv-1 → client

+# srv-2 → client

+#

+# Workload targets ~40 rps (120 users × 20 req/min ÷ 60).

+

+rqs_input:

+ id: rqs-1

+ avg_active_users: { mean: 120 }

+ avg_request_per_minute_per_user: { mean: 20 }

+ user_sampling_window: 60

+

+topology_graph:

+ nodes:

+ client: { id: client-1 }

+

+ load_balancer:

+ id: lb-1

+ algorithms: round_robin

+ server_covered: [srv-1, srv-2]

+

+ servers:

+ - id: srv-1

+ server_resources: { cpu_cores: 1, ram_mb: 2048 }

+ endpoints:

+ - endpoint_name: /api

+ steps:

+ - kind: initial_parsing

+ step_operation: { cpu_time: 0.002 } # 2 ms CPU

+ - kind: ram

+ step_operation: { necessary_ram: 128 } # 128 MB

+ - kind: io_wait

+ step_operation: { io_waiting_time: 0.012 } # 12 ms I/O wait

+

+ - id: srv-2

+ server_resources: { cpu_cores: 1, ram_mb: 2048 }

+ endpoints:

+ - endpoint_name: /api

+ steps:

+ - kind: initial_parsing

+ step_operation: { cpu_time: 0.002 }

+ - kind: ram

+ step_operation: { necessary_ram: 128 }

+ - kind: io_wait

+ step_operation: { io_waiting_time: 0.012 }

+

+ edges:

+ - { id: gen-client, source: rqs-1, target: client-1, latency: { mean: 0.003, distribution: exponential } }

+ - { id: client-lb, source: client-1, target: lb-1, latency: { mean: 0.002, distribution: exponential } }

+ - { id: lb-srv1, source: lb-1, target: srv-1, latency: { mean: 0.002, distribution: exponential } }

+ - { id: lb-srv2, source: lb-1, target: srv-2, latency: { mean: 0.002, distribution: exponential } }

+ - { id: srv1-client, source: srv-1, target: client-1, latency: { mean: 0.003, distribution: exponential } }

+ - { id: srv2-client, source: srv-2, target: client-1, latency: { mean: 0.003, distribution: exponential } }

+

+sim_settings:

+ total_simulation_time: 600

+ sample_period_s: 0.05

+ enabled_sample_metrics:

+ - ready_queue_len

+ - event_loop_io_sleep

+ - ram_in_use

+ - edge_concurrent_connection

+ enabled_event_metrics:

+ - rqs_clock

+

+# Events:

+# - Edge spikes (added latency in seconds) that stress different paths at different times.

+# - Server outages that never overlap (so at least one server stays up).

+events:

+ # Edge spike: client → LB gets +15 ms from t=100s to t=160s

+ - event_id: ev-spike-1

+ target_id: client-lb

+ start: { kind: network_spike_start, t_start: 100.0, spike_s: 0.015 }

+ end: { kind: network_spike_end, t_end: 160.0 }

+

+ # Server outage: srv-1 down from t=180s to t=240s

+ - event_id: ev-srv1-down

+ target_id: srv-1

+ start: { kind: server_down, t_start: 180.0 }

+ end: { kind: server_up, t_end: 240.0 }

+

+ # Edge spike focused on srv-2 leg (LB → srv-2) from t=300s to t=360s (+20 ms)