Unable to load custom yolov5 tflite model in the camera #139


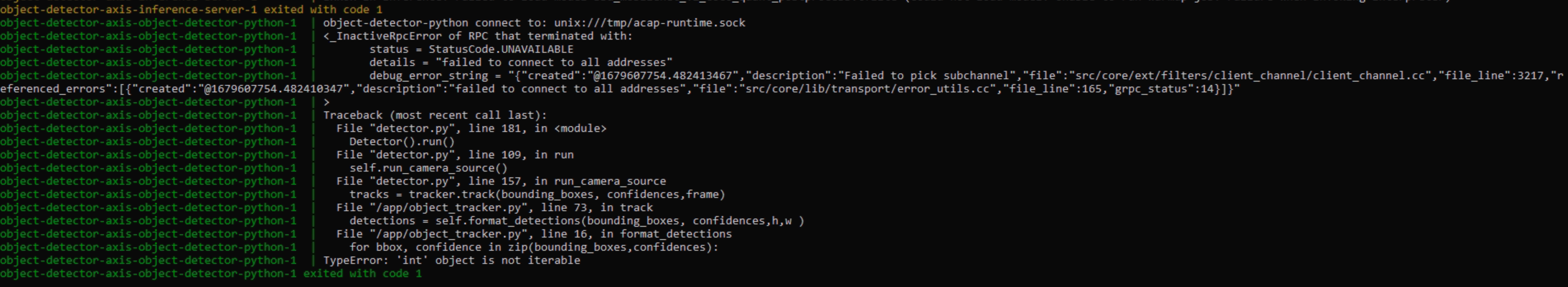

I am trying to load a custom model on the camera, and we are facing some issues. The error is:

We believe this is because the inference container is not able to load the model. The Common Known Issues page (#111) of the SDK says that if this error appears, the app should be left running for 3-5 minutes, because loading a model takes time the first time. However, we were still seeing the error after 10 minutes. We also followed the next troubleshooting steps:

- When we run `docker -H ps`, the acap-runtime container is not running, and the docker compose logs are empty.
- The `journalctl -u larod` command in the camera's SSH console gives the following output:


Hello! Alternatively, you can load the model in netron.app, search for 429, and see which layer causes the problem. In general, our devices support only TensorFlow Lite operators, so try to stick with them to avoid issues:

Edit:
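One way to follow the advice about sticking to TensorFlow Lite operators is to let the converter enforce it: if the converter is restricted to the TFLite builtin operator set, conversion fails instead of producing a model that needs other ops. The sketch below assumes you have the network available as a Keras model (YOLOv5's own export pipeline is not shown here), and `convert_builtins_only` is a hypothetical helper name. It only checks against the generic TFLite builtin set, not against any camera-specific constraints. It also prints the converted model's tensor indices and names, which can help match a number reported in an error against a layer, similar to searching in Netron.

```python
import tensorflow as tf


def convert_builtins_only(keras_model: tf.keras.Model) -> bytes:
    """Convert a Keras model to a .tflite flatbuffer using only TFLite builtin ops.

    If the model needs an operator outside the TFLite builtin set, convert()
    raises an error instead of producing a model that relies on other ops.
    """
    converter = tf.lite.TFLiteConverter.from_keras_model(keras_model)
    # TFLITE_BUILTINS is already the converter default; setting it
    # explicitly makes the restriction visible in the export code.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS]
    return converter.convert()


if __name__ == "__main__":
    # Tiny stand-in model (hypothetical); replace it with your own network.
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(2, activation="relu"),
    ])
    tflite_bytes = convert_builtins_only(model)

    # List tensor indices and names of the converted model, e.g. to look up
    # an index mentioned in an error message.
    interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
    for detail in interpreter.get_tensor_details():
        print(detail["index"], detail["name"])
```

If `convert()` raises for your model, the error message names the operators that fell outside the builtin set, which points at the layers to replace before deploying.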


Hi,

Thank you in advance.


Hi again,

It is now possible to run YOLOv5 on ARTPEC-8 devices running AXIS OS 11.7 or later.

See the guide here.