Replies: 2 comments 1 reply


Nice paper.

I think the easy way is to encode the data as Poisson spike trains; you can use the code in

You can create your own set of images as input stimulation and feed them to the network.
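As a rough illustration of the Poisson encoding suggested above, here is a minimal pure-Python sketch. It is not the BindsNET encoder and not code from the paper: the function name `poisson_encode`, the 100 Hz maximum rate, the 1 ms step, and the toy 5x5 pattern are all assumptions made for the example. Each pixel fires independently in each time step with a probability proportional to its intensity, which approximates a Poisson process when that probability is small.

```python
import random

def poisson_encode(pixels, time_steps=100, dt_ms=1.0, max_rate_hz=100.0, seed=0):
    """Return spikes[t][i] in {0, 1}: one row per time step, one column per pixel.

    pixels are intensities in 0..255; max_rate_hz is an assumed firing rate
    for a fully bright pixel.
    """
    rng = random.Random(seed)
    # Spike probability per step for a pixel at full intensity.
    p_max = max_rate_hz * dt_ms / 1000.0
    probs = [p_max * (p / 255.0) for p in pixels]
    return [[1 if rng.random() < q else 0 for q in probs] for _ in range(time_steps)]

# Toy 5x5 "X" pattern (25 inputs); the pixel values are illustrative only.
X_PATTERN = [
    255, 0, 0, 0, 255,
    0, 255, 0, 255, 0,
    0, 0, 255, 0, 0,
    0, 255, 0, 255, 0,
    255, 0, 0, 0, 255,
]

spikes = poisson_encode(X_PATTERN, time_steps=200)
# Spike count per input neuron over the whole presentation.
counts = [sum(row[i] for row in spikes) for i in range(len(X_PATTERN))]
```

Dark pixels never spike and bright pixels spike at roughly the chosen rate, so `counts` mirrors the image. For MNIST the same idea applies with 784 pixels per image.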

Sorry, I am not sure what the question was.

The parameters seem OK, but it is up to you to fine-tune the model and find the right parameters.

As you mention, the two papers work on different assumptions, architectures, and principles. The above paper seems to allow a connection to change polarity, something that Diehl&Cook2015 did not have. There is also no winner-take-all mechanism in the above paper.


I only skimmed the paper, but from what I can see, the algorithm in the paper can change synapse polarity, similar to what backpropagation does in an ANN: in that standard learning method a synapse can start with a positive value and the algorithm can change it to negative (and vice versa). At the end of training, a neuron can have both positive and negative synapses, something that does not exist in biology (as far as I know). Diehl&Cook2015 have separate inhibitory and excitatory connections, and a synapse cannot change polarity.
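To make the polarity point concrete, here is a small sketch using a generic pair-based STDP rule. It is not the update rule from either paper; the amplitudes, time constants, and the helper names `stdp_dw` and `update` are assumptions for illustration. The only difference between the two weights is the lower bound: with a negative bound the weight can cross zero, while a bound of zero keeps an excitatory synapse excitatory.

```python
import math

def stdp_dw(dt_ms, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for dt_ms = t_post - t_pre (assumed constants)."""
    if dt_ms > 0:   # pre fired before post: potentiate
        return a_plus * math.exp(-dt_ms / tau_plus)
    if dt_ms < 0:   # post fired before pre: depress
        return -a_minus * math.exp(dt_ms / tau_minus)
    return 0.0

def update(w, dt_ms, w_min, w_max):
    """Apply one STDP update and clip the weight to [w_min, w_max]."""
    return min(w_max, max(w_min, w + stdp_dw(dt_ms)))

w_free = w_fixed = 0.02
for _ in range(5):  # five post-before-pre pairings, 5 ms apart
    w_free = update(w_free, -5.0, w_min=-1.0, w_max=1.0)   # sign is allowed to flip
    w_fixed = update(w_fixed, -5.0, w_min=0.0, w_max=1.0)  # excitatory weight stays >= 0
```

After the loop `w_free` has become negative, while `w_fixed` has been clipped at zero.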

Yes, since BindsNET version 0.2.7 you can feed the input to any neuron; there is no need to use an 'Input' unit. Let me know how it goes.


Hello,

I would like to implement simple neuron models used for pattern recognition from the paper "Pattern recognition with Spiking Neural Networks: a simple training method". Brief details from the paper http://ceur-ws.org/Vol-1525/paper-21.pdf:

Task: Classify an image as either X or O

Neuron model: Izhikevich neurons

Learning rule: STDP is used as the learning rule for training (BUT here I don't know whether STDP is used between all layers or only between input -> hidden)

Network Architecture: 25 input neurons, 5 neurons for the hidden layer and 2 output neurons

If I am not mistaken, the network was trained on only 1000 circle images and then tested on 13 images in the given sequence: {O, O, X, X, O, X, X, X, X, O, O, X, O}

I wanted to implement this, but I had some questions. In the discussion section, the authors mention that such training is usually tested on the MNIST dataset. So I decided to do it for the MNIST dataset, because my idea was to use `bindsnet/examples/mnist/eth_mnist.py` as a reference and change the network model there. I took hidden layer neurons and output neurons = 100 and input layer neurons = 784, and the STDP rule was used between the input → hidden layer only. My input layer was made with `Input`.

Izhikevich Model:

Question 1: The paper does not mention input data encoders. How can I give my input data to the network? In particular, how do I feed in the MNIST dataset?

Question 2: How do I implement this part? «After initial tests on the learning ability of the network, the training period was adjusted to be 15s during which the input layer is stimulated every 100ms with a circle pattern. 10ms after stimulating the input layer, the output neuron is given an external stimulation making it to spike. This spiking, in relation to the preliminary spiking of neurons from the input layer, reinforces the paths between activated neurons of the input layer and the trained neuron of the output layer. This training can be seen in the first phase of the time diagrams (top and middle) of Figure 5 from t = 0 to 15s.» Mainly, how do I generate the external stimulation?
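One possible reading of the quoted protocol can be sketched in plain Python, independent of BindsNET. A single Izhikevich neuron stands in for the output neuron, and a short current pulse is injected 10 ms after each 100 ms stimulus onset. The regular-spiking parameters (a = 0.02, b = 0.2, c = -65, d = 8) are the standard published ones; the pulse amplitude (40), pulse width (2 ms), step size (0.5 ms), and the shortened 1 s run are assumptions chosen for the example, not values from the paper. The input layer itself is left out so that only the teacher timing is shown.

```python
def simulate_output_neuron(t_total_ms=1000.0, dt=0.5, period_ms=100.0,
                           teacher_delay_ms=10.0, pulse_ms=2.0,
                           teacher_current=40.0):
    """Return spike times (ms) of one Izhikevich neuron driven only by teacher pulses."""
    a, b, c, d = 0.02, 0.2, -65.0, 8.0   # regular-spiking Izhikevich parameters
    v, u = -70.0, -14.0                  # resting state for these parameters (u = b * v)
    period_steps = round(period_ms / dt)
    delay_steps = round(teacher_delay_ms / dt)
    pulse_steps = round(pulse_ms / dt)
    spike_times = []
    for step in range(round(t_total_ms / dt)):
        # Position inside the current 100 ms stimulation period.
        phase = step % period_steps
        in_pulse = delay_steps <= phase < delay_steps + pulse_steps
        i_ext = teacher_current if in_pulse else 0.0
        # Forward-Euler update of the Izhikevich equations.
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + i_ext)
        u += dt * a * (b * v - u)
        if v >= 30.0:                    # spike: record it and reset
            spike_times.append(step * dt)
            v, u = c, u + d
    return spike_times

# Teacher pulses start 10 ms after each stimulus onset at 0, 100, 200, ... ms.
teacher_onsets = [k * 100.0 + 10.0 for k in range(10)]
spikes_taught = simulate_output_neuron()
spikes_silent = simulate_output_neuron(teacher_current=0.0)
```

With the pulse the neuron fires shortly after each teacher onset; without it the neuron stays at rest. In a full network, STDP would then see input spikes followed about 10 ms later by the forced output spike.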

Question 3: In the above IzhikevichModel, the input_layer neurons must also be based on the Izhikevich equation, but I couldn’t make

Question 4: Could you please check the IzhikevichModel? Have the parameters been taken correctly?

Any thoughts, suggestions, examples, and feedback are highly appreciated.

Thank you

P.S:

As

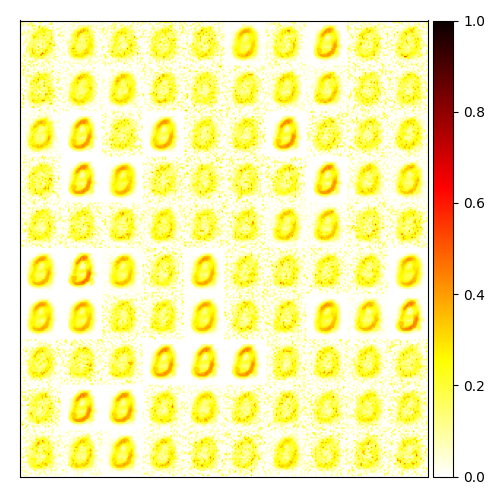

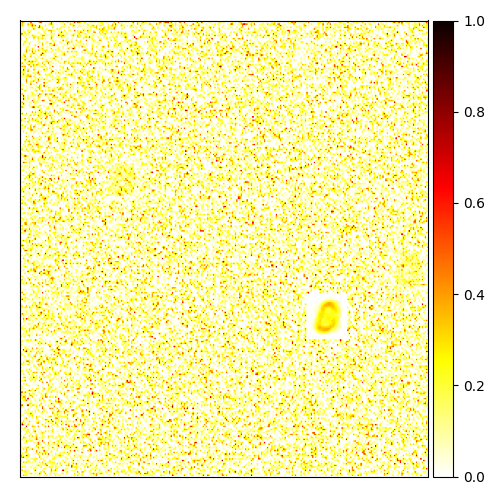

eth_mnist.pywas the implementation of the paper Diehl&Cook2015, and they mainly focused on lateral inhibition and adaptive threshold, homeostasis. From my understanding, they showed that STDP cannot do anything alone, because those lateral inhibitions and adaptive threshold leads neurons to compete with each other. BUT in this paper authors did not mention anything like competitive learning, adaptive th, lat. inh.With the above Izhikevich Model, I got this kind of outputs
