🎶 Benefits of Connecting Vensim to Stan #21

hyunjimoon

started this conversation in

people; relating

Replies: 3 comments 6 replies


I could potentially be convinced otherwise, but I have yet to encounter a gradient solver that was general purpose for all SD models. Payoff discontinuities, roughness, and weird manifolds within high-dimensional parameter spaces are pretty common.


Andrew pointed me to the paper Delivering data differently; see Section 2.5, Sound, and Section 4.4, Using musical sounds to convey the progress of iterative algorithms.


Discussion with @jandraor on benefits of Stan connection


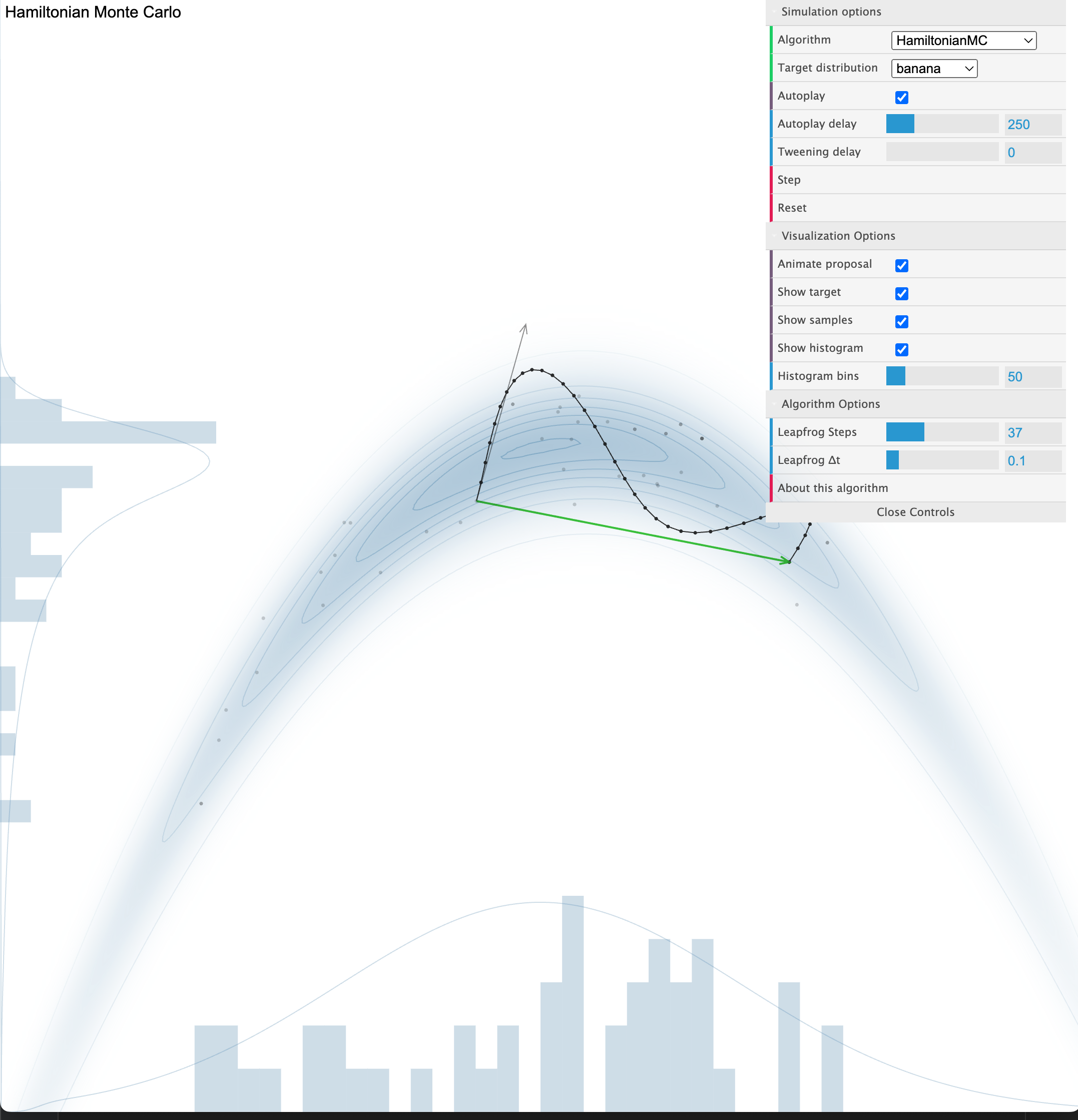

Vensim is the standard tool for system dynamics modelers. However, for Bayes + System dynamics, I am persuading my advisor Hazhir (MIT, system dynamics group) and Tom (Vensim CTO) to consider outsourcing optimization to Stan (or at least offering ways to do optimization there), e.g. by connecting the Stan math library to Vensim. Stan is not the only option: Gen, which offers custom hybrid inference algorithms, is another, and I believe there could be other alternatives. I gave the following reasons for this outsourcing.

To whom?

Benefits: increase usability

ISO defines usability = efficiency + effectiveness + satisfaction

- efficiency
  - Test: convergence diagnostics
- effectiveness
  - Approximator: one disadvantage of Stan is that we need to truncate: only `neg_binomial_2_lpmf(int | real)`
  - variance ~ mean^2 vs. variance ~ mean (Stan uses standard-deviation parameterization)
- satisfaction

Qualitative Why?

Since log-likelihood optimization is the core of Bayesian computation (one branch of probabilistic programming), wouldn't it be natural for the Stan platform to have a continuous inflow of cutting-edge techniques for both algorithms and diagnostics? Note that both algorithms and diagnostics need constant updates, given their actor-critic relation. For one, Vensim's MCMC algorithm, DREAM, as explained in this paper, cites Gelman and Rubin (1992) for its convergence diagnostics, which are outdated. khat from this paper is one of the cutting-edge diagnostics. Stan has a team of at least ten developers concentrating on developing diagnostics (and thousands of users for testing), so outsourcing this and channeling our efforts into what we excel at seemed reasonable to me. To be specific, the decision analysis of Stan in this official manual is not dynamic, which is where we can do better. The practicality of decision-based optimization is what I feel SD experts can contribute to Bayes + System dynamics.
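To make the diagnostics point concrete, here is a minimal sketch of the classic Gelman and Rubin potential scale reduction factor, plus a split-chain variant. It is written from the textbook formula, not taken from Vensim or Stan, and it does not compute khat; the function names and the toy chains are assumptions for illustration only.

```python
import random
import statistics


def gelman_rubin_rhat(chains):
    """Classic potential scale reduction factor for equal-length chains."""
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    # W: average within-chain variance.
    within = statistics.fmean([statistics.variance(c) for c in chains])
    # B: between-chain variance, scaled by the chain length.
    between = n * statistics.variance(chain_means)
    var_plus = (n - 1) / n * within + between / n
    return (var_plus / within) ** 0.5


def split_rhat(chains):
    """Split every chain in half first, so drift inside a chain also shows up."""
    half = len(chains[0]) // 2
    halves = []
    for c in chains:
        halves.append(c[:half])
        halves.append(c[half:2 * half])
    return gelman_rubin_rhat(halves)


# Toy chains (hypothetical): four chains from one distribution, and four
# chains where two are stuck around a different mode.
rng = random.Random(0)
mixed = [[rng.gauss(0.0, 1.0) for _ in range(1000)] for _ in range(4)]
stuck = [[rng.gauss(shift, 1.0) for _ in range(1000)]
         for shift in (0.0, 0.0, 3.0, 3.0)]

rhat_mixed = split_rhat(mixed)
rhat_stuck = split_rhat(stuck)
print(f"well mixed: {rhat_mixed:.3f}, stuck: {rhat_stuck:.3f}")
```

A value close to 1 is what well-mixed chains should give; the stuck chains should land well above it.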

Quantitative Why?

To get the gradient of the payoff with respect to the parameters.

This part corresponds to the functions block in Stan estimation, which offers autodiff for implicit functions. Johann from the Stanford Computational Policy Lab, who coded reverse-mode autodiff for algebraic solvers, explains this here: Stan has Powell, Newton, and fixed-point iteration algebraic solvers, and only the former two have reverse-mode autodiff implemented. Reverse mode gives a large speedup when the output dimension is much smaller than the input dimension, which is the case when log_posterior is our optimization target. As evidence, Johann's poster, Propagating Derivatives through Implicit Functions in Reverse Mode Autodiff, shows the speedup.
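The idea of propagating derivatives through an implicit function in reverse mode can be sketched in a few lines. This is not Stan's implementation: the residual, the payoff, and every function name below are made up for illustration. The state `x` is defined implicitly by `residual(x, theta) = 0` and solved with Newton's method; the gradient of a scalar payoff with respect to all parameters then needs only one extra linear (adjoint) solve.

```python
def residual(x, theta):
    """Hypothetical system f(x, theta) = 0 that defines x implicitly."""
    x0, x1 = x
    t0, t1, t2 = theta
    return [x0 + 0.5 * x1 + x0 ** 3 - t0 * t1,
            0.5 * x0 + x1 + x1 ** 3 - t2]


def jac_x(x):
    """Jacobian of the residual with respect to the state x."""
    x0, x1 = x
    return [[1.0 + 3.0 * x0 ** 2, 0.5],
            [0.5, 1.0 + 3.0 * x1 ** 2]]


def jac_theta(theta):
    """Jacobian of the residual with respect to the parameters theta."""
    t0, t1, _ = theta
    return [[-t1, -t0, 0.0],
            [0.0, 0.0, -1.0]]


def solve2(a, b):
    """Solve the 2x2 linear system a z = b with Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [(b[0] * a[1][1] - a[0][1] * b[1]) / det,
            (a[0][0] * b[1] - a[1][0] * b[0]) / det]


def newton_solve(theta, iters=50):
    """Find x with residual(x, theta) = 0 by plain Newton iteration."""
    x = [0.0, 0.0]
    for _ in range(iters):
        step = solve2(jac_x(x), residual(x, theta))
        x = [x[0] - step[0], x[1] - step[1]]
    return x


def payoff(theta):
    """Scalar payoff evaluated at the implicit solution."""
    x = newton_solve(theta)
    return x[0] ** 2 + x[1] ** 2


def payoff_gradient(theta):
    """Gradient via the implicit function theorem with one adjoint solve."""
    x = newton_solve(theta)
    dpayoff_dx = [2.0 * x[0], 2.0 * x[1]]
    jx = jac_x(x)
    jx_t = [[jx[0][0], jx[1][0]], [jx[0][1], jx[1][1]]]
    lam = solve2(jx_t, dpayoff_dx)  # adjoint: jac_x^T lam = dpayoff/dx
    jt = jac_theta(theta)
    # d payoff / d theta_k = -sum_i lam_i * d f_i / d theta_k
    return [-(jt[0][k] * lam[0] + jt[1][k] * lam[1]) for k in range(3)]


theta = [1.0, 2.0, 0.5]
print(payoff_gradient(theta))
```

The cost of the adjoint solve does not grow with the number of parameters, which is where the reverse-mode advantage comes from when the output is a single scalar.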

That being said, Juho's paper, An importance sampling approach for reliable and efficient inference in Bayesian ordinary differential equation models, suggests a workflow that is cutting-edge in the Stan community for ODE models, and I wish to follow it. Since importance sampling is the core of sequential Monte Carlo, exploring particle MCMC (pMCMC) also interests me.
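As a reminder of the basic re-weighting step that importance sampling relies on, here is a toy sketch. It is not the workflow from the paper: the two normal densities stand in for an approximate and a more accurate posterior, and all names are assumptions. Draws from the approximate density are re-weighted toward the target, and the effective sample size gives a rough sense of how much the weights degrade the sample. khat is not computed here.

```python
import math
import random


def log_normal_pdf(x, mean, sd):
    """Log density of a normal distribution."""
    return (-0.5 * ((x - mean) / sd) ** 2
            - math.log(sd) - 0.5 * math.log(2.0 * math.pi))


def normalized_weights(log_weights):
    """Self-normalize importance weights, subtracting the max for stability."""
    top = max(log_weights)
    unnormalized = [math.exp(lw - top) for lw in log_weights]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


rng = random.Random(1)

# Stand-in for draws from an approximate posterior: N(0, 1.5^2).
draws = [rng.gauss(0.0, 1.5) for _ in range(5000)]

# Log importance ratio: log target density minus log approximate density,
# with N(0, 1) playing the role of the accurate posterior.
log_w = [log_normal_pdf(x, 0.0, 1.0) - log_normal_pdf(x, 0.0, 1.5)
         for x in draws]
weights = normalized_weights(log_w)

# Effective sample size of the weighted draws.
ess = 1.0 / sum(w * w for w in weights)

# Weighted estimate of E[x^2] under the target, which equals 1 for N(0, 1).
second_moment = sum(w * x * x for w, x in zip(weights, draws))
print(f"ESS: {ess:.0f} of {len(draws)}, E[x^2] estimate: {second_moment:.3f}")
```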

Mathematical details of the above are in hyunjimoon/SBC#52 (comment).

Ryan helped me connect importance sampling with MCMC by pointing to the paper he coauthored with Tamara Broderick, Covariances, Robustness, and Variational Bayes: "sampling with MCMC samples to calculate the local sensitivity is precisely equivalent to using the same MCMC samples to estimate the covariance, importance sampling approach is equivalent to using MCMC samples to estimate" (appendix B).

At this point (09/22), Stan seems to be the best choice for a gradient-based optimizer (Vensim's optimizer is not gradient-based).

What is done

Code-wise, I finished auto-translating Vensim dynamic models into a Stan functions block with @Dashadower last week (commit 684dfb1 and issue #17). A draft that estimates the four parameters of a Lotka-Volterra model defined in Vensim, with inference in Stan, is complete, but the fit is poor ($\hat{k} > .7$). The notebook file includes prior and posterior predictive checks.
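For readers who have not seen it, the four-parameter Lotka-Volterra model can be sketched as a forward simulation. This is a plain Python illustration, not the auto-translated Stan functions block; the parameter values, initial state, step size, and function names are all assumptions. The conserved quantity is used only as a sanity check on the integrator.

```python
import math


def lotka_volterra(state, alpha, beta, gamma, delta):
    """Right-hand side: prey grows at alpha, is eaten at beta;
    predators die at gamma and grow at delta."""
    prey, predator = state
    return (alpha * prey - beta * prey * predator,
            delta * prey * predator - gamma * predator)


def rk4_step(state, dt, params):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(k, scale):
        return (state[0] + scale * k[0], state[1] + scale * k[1])

    k1 = lotka_volterra(state, *params)
    k2 = lotka_volterra(shifted(k1, dt / 2), *params)
    k3 = lotka_volterra(shifted(k2, dt / 2), *params)
    k4 = lotka_volterra(shifted(k3, dt), *params)
    return tuple(state[i] + dt / 6 * (k1[i] + 2 * k2[i] + 2 * k3[i] + k4[i])
                 for i in range(2))


def simulate(initial, params, dt=0.01, steps=2000):
    """Integrate forward and return the full trajectory."""
    trajectory = [initial]
    for _ in range(steps):
        trajectory.append(rk4_step(trajectory[-1], dt, params))
    return trajectory


def invariant(state, alpha, beta, gamma, delta):
    """Quantity that the exact Lotka-Volterra dynamics keep constant."""
    prey, predator = state
    return (delta * prey - gamma * math.log(prey)
            + beta * predator - alpha * math.log(predator))


params = (1.1, 0.4, 0.4, 0.1)  # alpha, beta, gamma, delta (hypothetical)
trajectory = simulate((10.0, 5.0), params)
drift = abs(invariant(trajectory[-1], *params)
            - invariant(trajectory[0], *params))
print(f"final state: {trajectory[-1]}, invariant drift: {drift:.2e}")
```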

What is needed

What can be done
