
fix(profiling): accounting of time for gevent tasks [backport #5338 to 1.11] (#5549)

Backport of #5338 to 1.11

This change fixes how wall and CPU time are accounted to gevent tasks. Tasks are now assigned only to the main Python thread, where they are expected to run under normal circumstances. The change also ensures that task information does not leak onto any other running threads, most notably the profiler threads themselves.
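The attribution rule can be sketched as follows. This is a hypothetical illustration, not ddtrace's profiler code. The `Sample` class, the `attach_task_info` helper and the `(task_id, task_name)` tuple used for the current task are all assumptions made for the example. The sketch shows only the rule itself: task information is attached to a sample when it comes from the main thread, and never otherwise.

```python
import threading
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Sample:
    """Hypothetical profiler sample: the thread it came from, plus optional task info."""
    thread_id: int
    thread_name: str
    task_id: Optional[int] = None
    task_name: Optional[str] = None


def attach_task_info(
    sample: Sample,
    main_thread_id: int,
    current_task: Optional[Tuple[int, str]],
) -> Sample:
    """Hypothetical helper: attach gevent task info only to main-thread samples."""
    if current_task is None or sample.thread_id != main_thread_id:
        # Samples from any other thread (e.g. the profiler's own threads)
        # are left without task information, so tasks cannot leak onto them.
        return sample
    sample.task_id, sample.task_name = current_task
    return sample


main_id = threading.main_thread().ident
on_main = attach_task_info(Sample(main_id, "MainThread"), main_id, (1, "worker"))
on_other = attach_task_info(Sample(main_id + 1, "profiler"), main_id, (1, "worker"))
```

Under this sketch, `on_main` carries the task `worker`, while `on_other` (any thread id other than the main one) keeps no task information at all.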

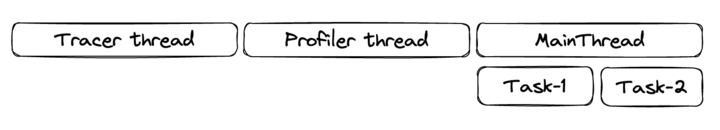

The rough picture of the gevent task modelling on top of Python threads

that comes with this PR is the following

This means that the `MainThread` accounts for the wall time of all the tasks running within it, as well as for the native thread stacks. Consider a gevent-based application that runs a `sleep(2)` in the main thread and a `sleep(1)` in a secondary thread. The `MainThread` _thread_ would report a total of about 5 seconds of wall time: the 3 seconds from both sleeps, plus the 2 seconds spent in the gevent hub as part of the native thread stack. The `MainThread` _task_, by contrast, would only account for the 2 seconds of the `sleep(2)`.
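The arithmetic of this example can be checked with a toy model. It illustrates only the accounting rule. It is not how the profiler collects or aggregates samples. The sample format, the task name `secondary` and the `account` function are hypothetical. The durations are taken from the example: 2 s in `sleep(2)`, 1 s in `sleep(1)` and 2 s in the gevent hub.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical wall-time samples taken on the main Python thread for the
# example above, as (task_name, seconds). None marks time spent in the
# gevent hub (the native thread stack), which belongs to no task.
SAMPLES: List[Tuple[Optional[str], float]] = [
    ("MainThread", 2.0),  # sleep(2) in the main task
    ("secondary", 1.0),   # sleep(1) in the secondary task
    (None, 2.0),          # time spent in the gevent hub
]


def account(samples):
    """Return (thread wall time, per-task wall time) under the model above."""
    thread_total = 0.0
    per_task: Dict[str, float] = defaultdict(float)
    for task, seconds in samples:
        thread_total += seconds  # the MainThread thread is charged for everything
        if task is not None:
            per_task[task] += seconds  # each task is charged only for its own time
    return thread_total, dict(per_task)


thread_total, per_task = account(SAMPLES)
print(thread_total)  # 5.0
print(per_task)      # {'MainThread': 2.0, 'secondary': 1.0}
```

The thread total includes the hub time, but no task does. This is the distinction between the `MainThread` thread and the `MainThread` task described above.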

<img width="1050" alt="Screenshot 2023-03-20 at 15 35 13" src="https://user-images.githubusercontent.com/20231758/226390338-bc47941f-3536-4b95-a50a-da0f37a3acff.png">

<img width="1050" alt="Screenshot 2023-03-20 at 15 35 34" src="https://user-images.githubusercontent.com/20231758/226390409-5091beda-7815-4c1d-a437-0a2a3d9e727e.png">

Some of the existing tests have been adapted to check for the accounting proposed by this PR. Dedicated scenarios will be added to the internal correctness checks to catch future regressions.

## Checklist

- [x] Change(s) are motivated and described in the PR description.
- [x] Testing strategy is described if automated tests are not included in the PR.
- [x] Risk is outlined (performance impact, potential for breakage, maintainability, etc.).
- [x] Change is maintainable (easy to change, telemetry, documentation).
- [x] [Library release note guidelines](https://ddtrace.readthedocs.io/en/stable/contributing.html#Release-Note-Guidelines) are followed.
- [x] Documentation is included (in-code, generated user docs, [public corp docs](https://github.com/DataDog/documentation/)).
- [x] Author is aware of the performance implications of this PR as reported in the benchmarks PR comment.

## Reviewer Checklist

- [x] Title is accurate.
- [x] No unnecessary changes are introduced.
- [x] Description motivates each change.
- [x] Avoids breaking [API](https://ddtrace.readthedocs.io/en/stable/versioning.html#interfaces) changes unless absolutely necessary.
- [x] Testing strategy adequately addresses listed risk(s).
- [x] Change is maintainable (easy to change, telemetry, documentation).
- [x] Release note makes sense to a user of the library.
- [x] Reviewer is aware of, and has discussed, the performance implications of this PR as reported in the benchmarks PR comment.
