"npm run deploy" fails at uploading #4887

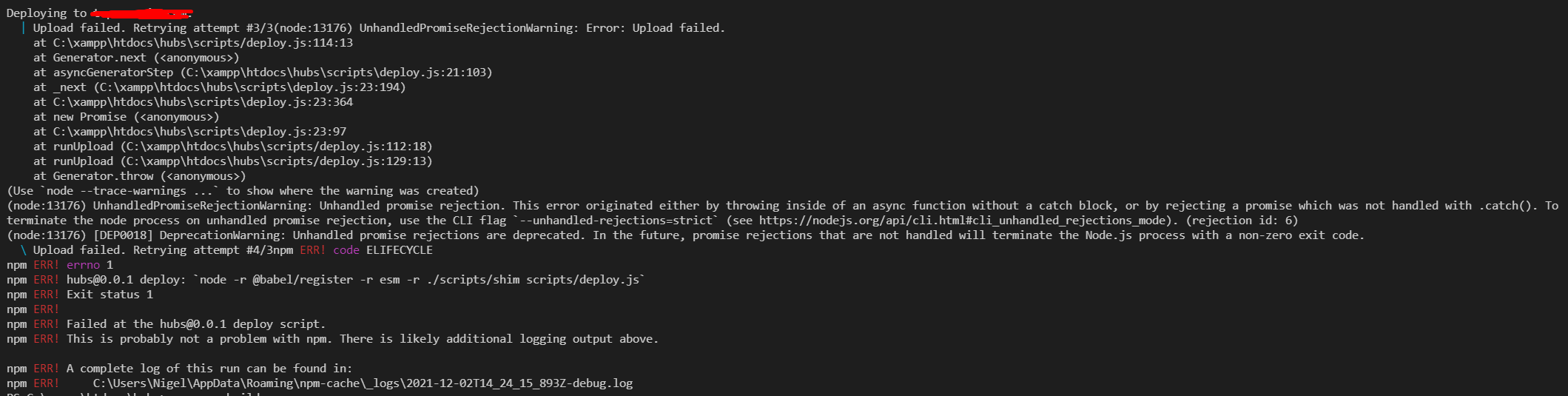

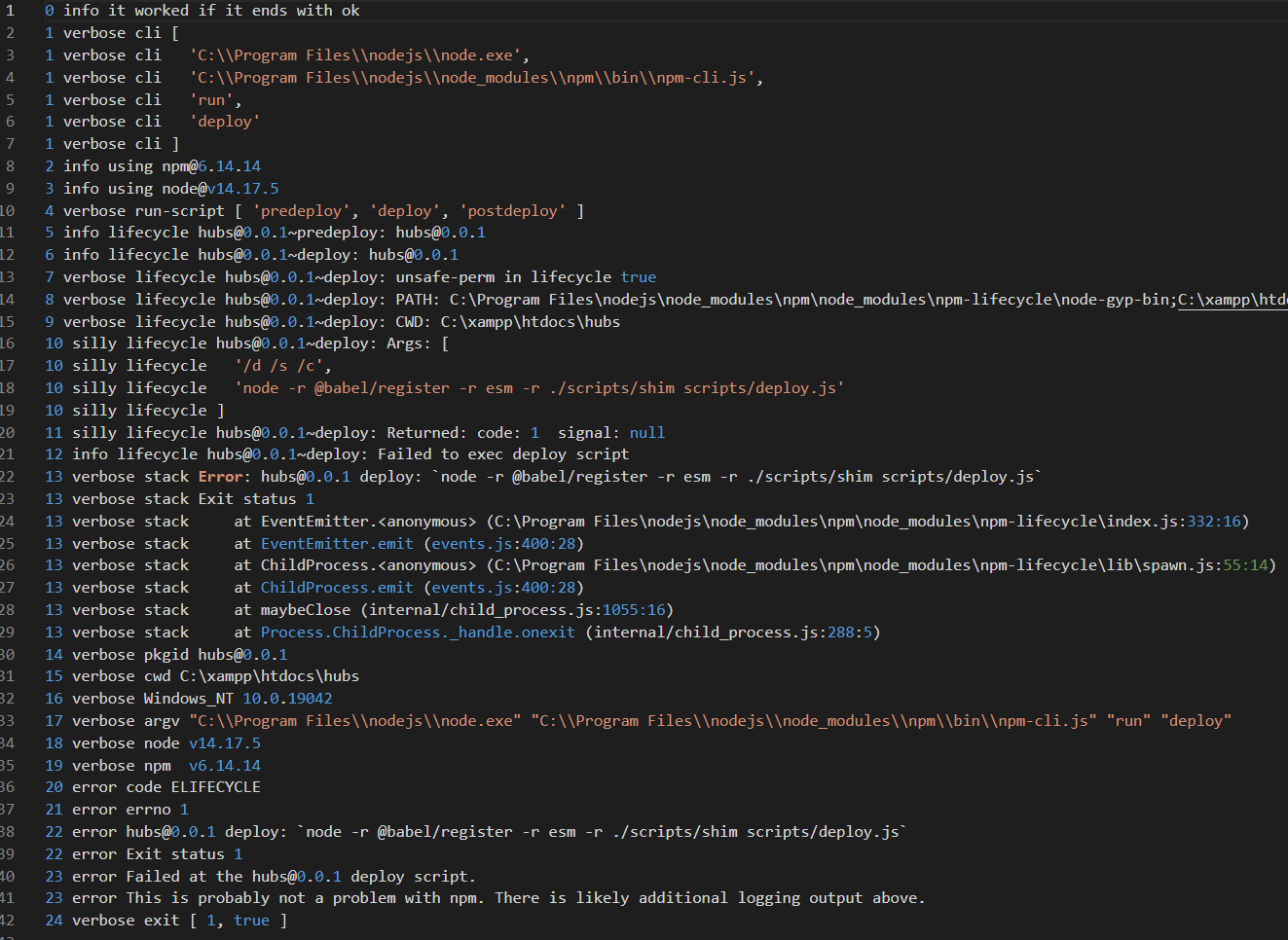

Hi, for two days now I have not been able to build and upload to my custom client. I hadn't built in about a month. I made a new branch within my custom code and made some very small changes, including adding a simple button to the homepage. Locally it worked fine.

When I ran "npm run deploy" 2 days ago, it did go through, but I didn't see the new button in the custom client. So I decided to immediately revert some of the changes in order to redo them step by step, but this time the deploy failed at the uploading part, and it hasn't worked since. It hangs for a few seconds on "Building admins console" (I think), then it tries to upload but fails and ends up stuck at "Upload failed. Retrying attempt #4/3" until I cancel the command. The screenshots above show my command terminal output and the log file that the error refers to.

The weird (and worst) thing is that a build did get uploaded before it started failing, but in that build joining rooms no longer works. So I have a broken build online and am not able to overwrite it.

Could this have anything to do with the fact that I haven't performed the cloud template update yet? Or with the fact that I haven't updated my custom code with the live hubs-cloud code in a while? I feel like I didn't change anything that would cause this problem.

Hope someone can help me! 🙂

Replies: 2 comments

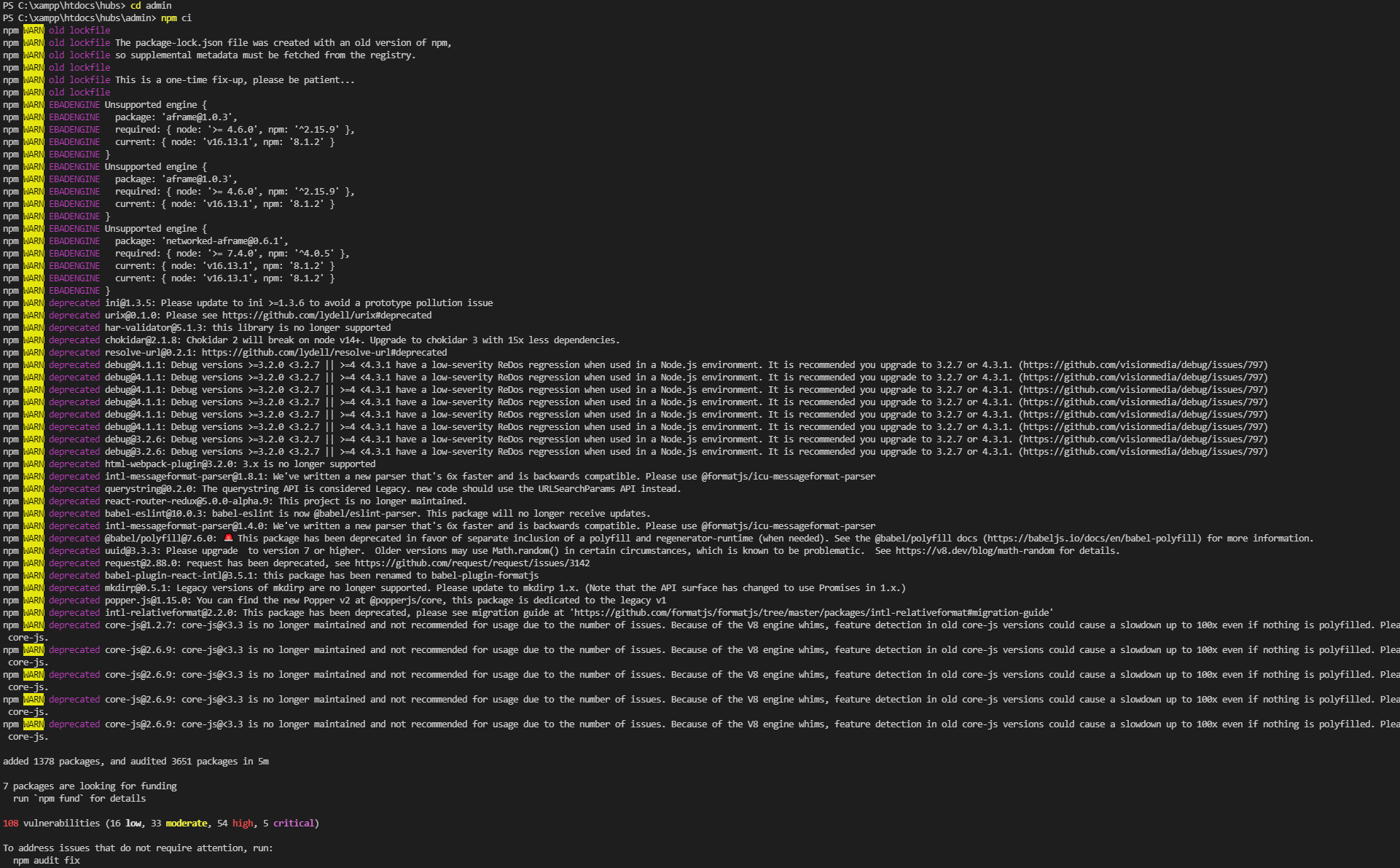

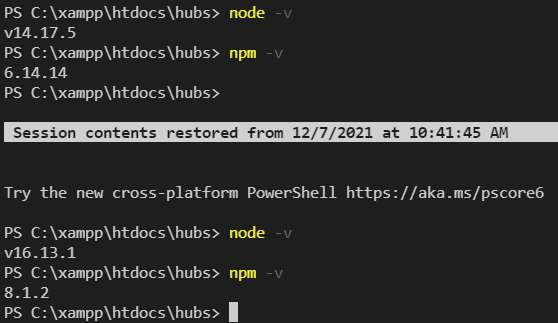

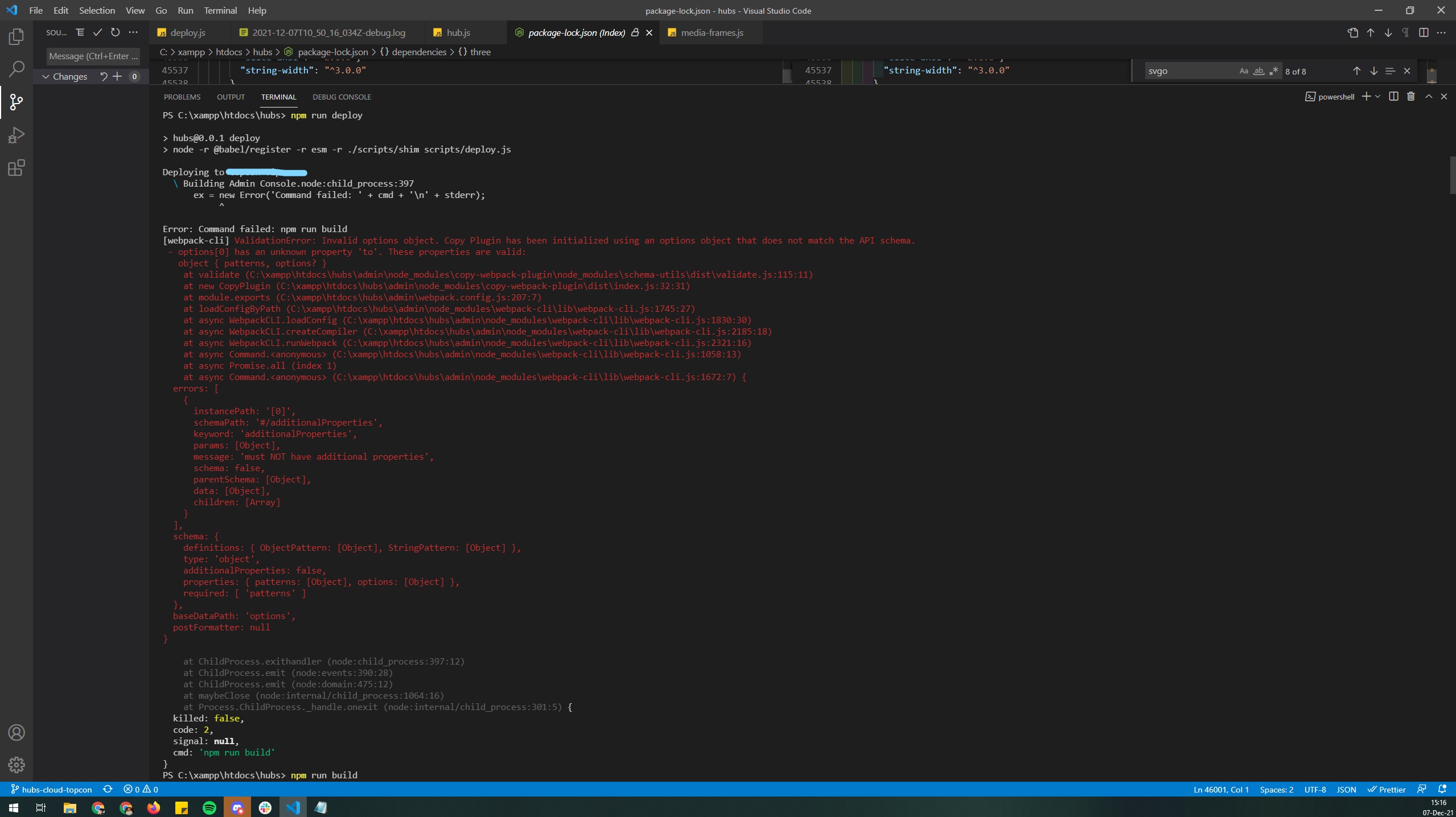

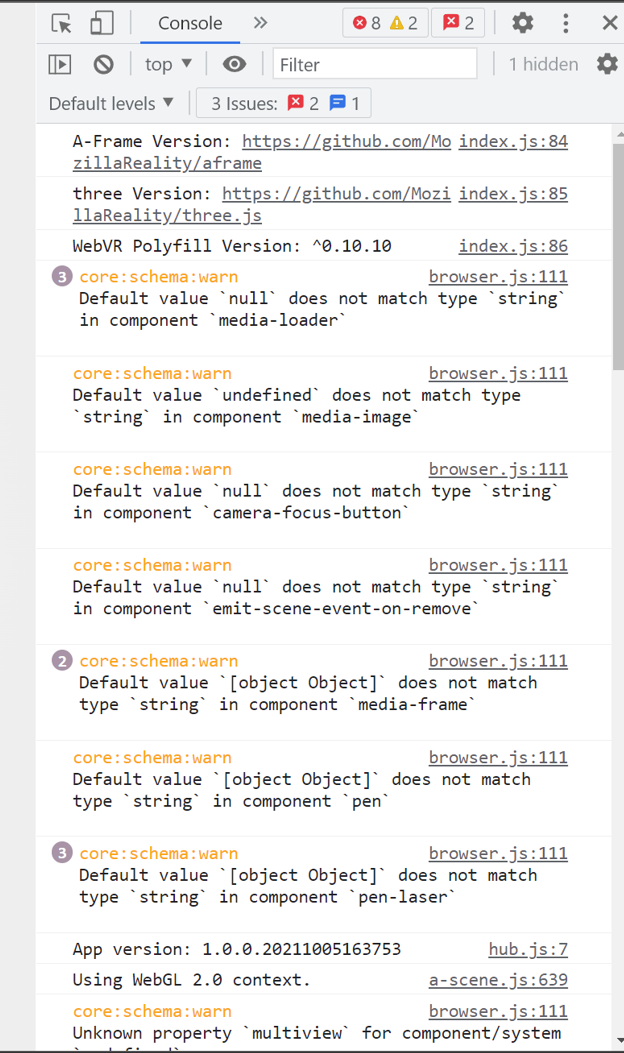

Update: I have not been able to build over my broken build for over a week now, and my client and I are getting quite anxious.

Here is what I've tried in the meantime:

I've tried the part about testing your admin panel on this page: https://github.com/MozillaReality/hubs-docs/blob/master/docs/hubs-cloud-custom-clients.md

I've also deleted the node_modules folder multiple times, reinstalled with npm ci, and updated Node (including NPM) to newer versions. With later attempts to build, a new error message appeared (see the screenshots above).

If I then run "npm run build", not much seems to be wrong. There are only some warnings like "WARNING in asset size limit The following asset(s) exceed the recommended size limit (244 KiB). This can impact web performance." and "WARNING in entrypoint size limit The following entrypoint(s) combined asset size exceeds the recommended limit (244 KiB). This can impact web performance. Entrypoints", followed by a list of mostly assets that Hubs includes by default.

We've been thinking that part of the reason we can't deploy might be the broken build that is already live, so we've considered running "npm run undeploy" and even restoring AWS from a backup.

Lastly, the screenshots above include the first two error messages I get in the currently broken live build when I try to join a room.

I've seen and tried so much in the meantime that I must say I'm lost and no longer sure which direction to look in to solve this problem. Thanks for your time if you're reading this!

SOLVED

In the meantime it has been fixed by doing a stack update! It's a shame it was so difficult to find out where the problem was coming from, since we spent a lot of time looking in different directions, but oh well, we've become much wiser in the process.

The weird thing is that the broken stack(s) were possibly caused by some features we activated within AWS to get a view of the costs per instance. For example, we activated the Cost Explorer and some cost allocation tags, most of which still have not gone through. I don't really see why that should have broken anything within AWS or the stacks, but the timing of the activations and the breakage does suggest it.
