-

Notifications

You must be signed in to change notification settings - Fork 21

Description

Describe the bug

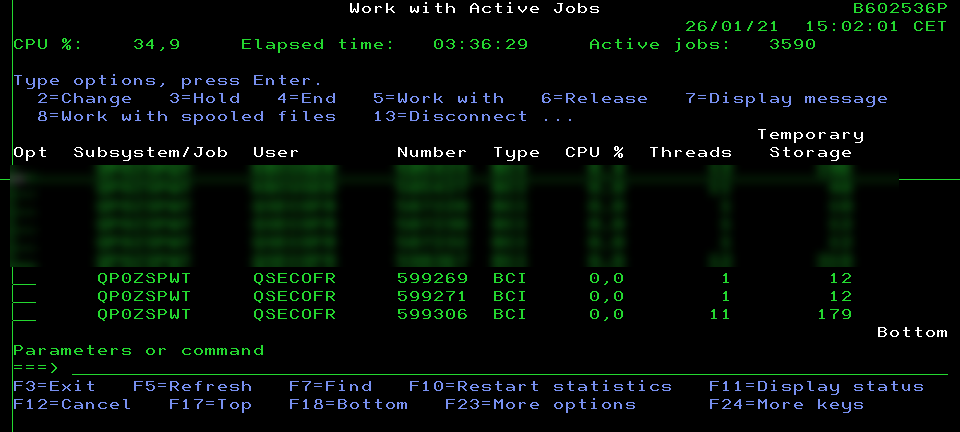

When a bunch (north of 5000) queries have run inside the job, the job appears to run out of memory and refuses to create new connections until restarted. Temporary storage of the job also keeps increasing throughout the day. I can only suspect a memory leak.

To Reproduce

Steps to reproduce the behavior:

I have a back-end server implemented with Express.js that runs the entire day and is restarted at night. Normally it works fine, but a couple of days a month, everybody in the company uses the tool it powers at the same time, and it can receive 10 hits per second. During those busy days, it looks like the job eventually runs out of temporary storage. This appears to be caused by the statements (or the connections?) allocating memory and never deallocating? If this is caused by poor coding from my side, I would like to apologize for wasting your time, I usually stick to RPGLE :-).

I have also included a function that uses idb-pconnector, as there the problem seems to be much worse. This could provide useful clues when figuring out what happens I guess.

const { dbconn, dbstmt, NUMERIC, CHAR, IN, NULL, SQL_ATTR_DBC_SYS_NAMING, SQL_TRUE } = require('idb-connector');

const { DBPool, Statement } = require('idb-pconnector');

const config = require('./config.json');

const pool = new DBPool({

url: '*LOCAL',

});

async function executeStatement(sqlStatement, bindParams, skipFetch = false, skipSetLibList = false) {

const connection = new dbconn();

connection.conn('*LOCAL');

if (!skipSetLibList) await setLibraryList(connection, config.db2LibList);

const statement = new dbstmt(connection);

return new Promise((resolve, reject) => {

try {

statement.exec('SET PATH = *LIBL', (out, error) => {

if (error) {

reject(error);

return;

}

if (bindParams === null) {

statement.exec(sqlStatement, (out, error) => {

if (error) {

reject(error);

return;

}

resolve(out);

statement.close();

connection.disconn();

connection.close();

});

} else {

let bindings = [];

for (var i in bindParams) {

switch (typeof bindParams[i]) {

case 'number':

bindings.push([bindParams[i], IN, NUMERIC]);

break;

case 'string':

bindings.push([bindParams[i], IN, CHAR]);

break;

case 'object':

if (bindParams[i] === null) bindings.push([null, IN, NULL]);

else bindings.push([bindParams[i], IN, CHAR]);

break;

}

}

statement.prepare(sqlStatement, (error) => {

if (error) {

reject(error);

return;

}

statement.bindParameters(bindings, (error) => {

if (error) {

reject(error);

return;

}

statement.execute((out, error) => {

if (error) {

reject(error);

return;

}

if (!skipFetch) {

statement.fetchAll((out, error) => {

if (error) {

reject(error);

return;

}

resolve(out);

statement.close();

connection.disconn();

connection.close();

});

} else {

resolve(out);

statement.close();

connection.disconn();

connection.close();

}

});

});

});

}

});

} catch (error) {

reject(error);

}

});

}

async function setLibraryList(conn, list) {

await setConnAttr(conn, SQL_ATTR_DBC_SYS_NAMING, SQL_TRUE);

return new Promise((resolve, reject) => {

try {

let changeLibStmt = new dbstmt(conn),

qcmdexc = 'CALL QSYS2.QCMDEXC(?)',

// you can set multiple libs

changeLibParam = `CHGLIBL LIBL(${list.join(' ')})`;

changeLibStmt.prepare(qcmdexc, (error) => {

if (error) {

reject(error);

return;

}

changeLibStmt.bindParam([[changeLibParam, IN, CHAR]], (error) => {

if (error) {

reject(error);

return;

}

changeLibStmt.execute((out, error) => {

if (error) {

reject(error);

return;

}

changeLibStmt.close();

resolve(out);

});

});

});

} catch (error) {

reject(error);

}

});

}

async function setConnAttr(conn, attribute, value) {

return new Promise((resolve, reject) => {

try {

resolve(conn.setConnAttr(attribute, value));

} catch (error) {

reject(error);

}

});

}

async function executeStatementConnectorP(sqlStatement, bindParams, skipFetch = false, skipSetLibList = false) {

const conn = pool.attach();

if (!skipSetLibList) await conn.connection.setLibraryList(config.db2LibList);

var results;

let statement = new Statement(conn.connection);

statement.stmt.asNumber(true);

await statement.exec('SET PATH = *LIBL'); //TIMESTAMP_ISO8601 UDF in SYSACCPGM

if (bindParams === null) {

results = await statement.exec(sqlStatement);

} else {

await statement.prepare(sqlStatement);

let bindings = [];

for (var i in bindParams) {

switch (typeof bindParams[i]) {

case 'number':

bindings.push([bindParams[i], IN, NUMERIC]);

break;

case 'string':

bindings.push([bindParams[i], IN, CHAR]);

break;

case 'object':

if (bindParams[i] === null) bindings.push([null, IN, NULL]);

else bindings.push([bindParams[i], IN, CHAR]);

break;

}

}

await statement.bindParam(bindings);

await statement.execute();

if (!skipFetch) results = await statement.fetchAll();

}

await statement.close();

pool.detach(conn);

return results;

}

async function getStories() {

const sSql = `

SELECT

rTrim(JIRAISSUE) "jiraIssue",

rTrim(JIRAISSUEID) "jiraIssueId",

rTrim(JIRAASSIGNEEUSER) "jiraAssigneeUser",

JIRAISSUEDESCRIPTION "jiraIssueDescription",

JIRAISSUESYSTEM "jiraIssueSystem",

rTrim(JIRAISSUEVERSION) "jiraIssueVersion",

DEPLOYINSTRUCTIONS "deployInstructions",

rTrim(DEPLOYINSTRUCTIONSRESPONSIBLE) "deployInstructionsResponsible",

rTrim(DEPLOYINSTRUCTIONSRESPONSIBLEBACKUP) "deployInstructionsResponsibleBackup",

DEPLOYINSTRUCTIONSDONE "deployInstructionsDone",

STATUS "status",

TIMESTAMP_ISO8601(timestamp(UPDATEDATE, UPDATETIME)) "updateTimestamp",

rTrim(UPDATEUSER) "updateUser"

FROM sysjse

order by JIRAISSUEID`;

const resultSet = await executeStatement(sSql);

//const resultSet = await executeStatementConnectorP(sSql);

return resultSet;

}

for (let step = 0; step < 1000; step++) {

getStories()

.then((a) => {

console.log(step + ' OK ');

})

.catch((e) => {

console.error(step + ' ERROR ' + e);

});

}

//enter to exit

process.stdin.setRawMode(true);

process.stdin.resume();

process.stdin.on('data', process.exit.bind(process, 0));When ran using idb-connector [await executeStatement(sSql)]:

When ran using idb-pconnector [await executeStatementConnectorP(sSql)]:

My implementation with pconnector uses about 30% more temp storage after completing 1000 statements and runs slightly slower.

When a node job reaches 1200 - 1400 MB of temp storage, it tends to crash on our machine.

Is this caused by poor coding on my side, or is this some sort of memory leak?

Expected behavior

All memory cleaned up after completing a query.

Screenshots

See above.

- Node.js version: v12.18.4

- idb-connector version: +-- [email protected]

+-- [email protected]

|-- [email protected] deduped-- [email protected]

`-- [email protected] deduped - IBM i version: 4 7

Thank you for your time 👍