How to improve performance of parallel queries #3098

Replies: 2 comments 9 replies


Are you sure the profile() output for every such query shows only a few milliseconds? Also note that the …


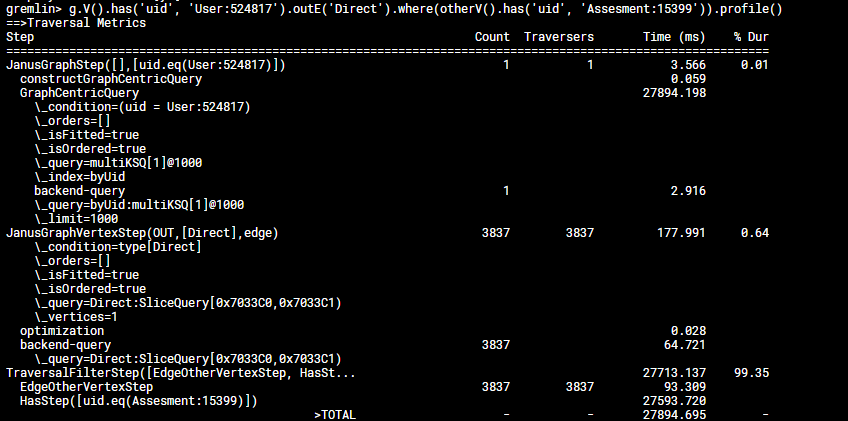

@li-boxuan We ran it in the pod console of the JanusGraph server. However, it looks like we were mistaken: when we ran it again under high load, it took 28 s. Do you have any idea why it takes so long? Similar queries generated by our app were running in parallel while we performed this test.


We are using JanusGraph with Cassandra (CQL storage backend).

We have a Gremlin query that creates an edge between two vertices.

The query works well and is very fast (a few milliseconds). However, when we start running this query in parallel, performance starts to degrade.

The query creates the edge only if it doesn't already exist.

For our test scenario, we created one Content vertex and tried to create edges between it and multiple User vertices.

So we run the following query in parallel:

```csharp
this.G
    .V()
    .Has("uid", userVertexId)
    .Coalesce<Edge>(
        __.OutE(Direct).Where(__.OtherV().Has("uid", contentVertexId)),
        __.AddE(Direct)
            .Property(Priority, relation.Priority)
            .To(__.V().Has("uid", assetVertexId)))
    .Iterate();
```

When we run it 100 times in parallel, performance degrades from milliseconds to 2-3 seconds or more.
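To reproduce the degradation outside the application, a small harness can start the same operation at several concurrency levels and record each call's end-to-end (client-side) latency, which is what the 2-3 s figure above reflects. This is a minimal sketch assuming .NET Core 3.0 or later; `ConcurrencyProbe` is a hypothetical helper, and the `operation` delegate is a placeholder where the Gremlin.Net traversal would go (for example wrapped with Gremlin.Net's `Promise(t => t.Iterate())`, using a different `userVertexId` per call).

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

public static class ConcurrencyProbe
{
    // Starts `concurrency` copies of `operation` at once and returns each call's
    // end-to-end (client-side) latency in milliseconds, sorted ascending.
    public static async Task<double[]> MeasureAsync(Func<Task> operation, int concurrency)
    {
        var calls = Enumerable.Range(0, concurrency).Select(async _ =>
        {
            var stopwatch = Stopwatch.StartNew();
            await operation();
            return stopwatch.Elapsed.TotalMilliseconds;
        });
        double[] latencies = await Task.WhenAll(calls);
        Array.Sort(latencies);
        return latencies;
    }

    // Nearest-rank percentile of an ascending-sorted, non-empty array; p in (0, 100].
    public static double Percentile(double[] sorted, double p)
    {
        int rank = (int)Math.Ceiling(p / 100.0 * sorted.Length);
        return sorted[Math.Clamp(rank, 1, sorted.Length) - 1];
    }

    // Prints p50 / p99 / max latency for a few concurrency levels.
    public static async Task ReportAsync(Func<Task> operation)
    {
        foreach (int concurrency in new[] { 1, 10, 100 })
        {
            double[] lat = await MeasureAsync(operation, concurrency);
            Console.WriteLine(
                $"concurrency={concurrency,3}  p50={Percentile(lat, 50):F1} ms  " +
                $"p99={Percentile(lat, 99):F1} ms  max={lat[lat.Length - 1]:F1} ms");
        }
    }
}
```

For example, `await ConcurrencyProbe.ReportAsync(() => Task.Delay(5));` exercises the harness with a dummy 5 ms operation before the real call is swapped in. Comparing these client-side numbers with the server-side `.profile()` figure shows how much of each call is spent outside the traversal steps themselves.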

Query batching (adding multiple edges in one query) also works for us. However, there can be thousands of User vertices, so we will still need some parallelism.
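One way to combine batching with parallelism is to split the user IDs into fixed-size chunks and cap how many chunks are in flight at once, so concurrency stays bounded no matter how many User vertices there are. This is a sketch under stated assumptions: `BatchedEdgeWriter`, `batchSize`, and `maxParallel` are illustrative names (not JanusGraph or Gremlin.Net settings), and `submitBatch` is a hypothetical delegate that would send one batched traversal adding the edges for a chunk.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class BatchedEdgeWriter
{
    // Splits `userIds` into chunks of at most `batchSize` and passes each chunk to
    // `submitBatch` (hypothetical: e.g. one traversal that adds all edges for the
    // chunk), never running more than `maxParallel` chunks at the same time.
    public static async Task RunAsync(
        IReadOnlyList<string> userIds,
        int batchSize,
        int maxParallel,
        Func<IReadOnlyList<string>, Task> submitBatch)
    {
        if (batchSize < 1) throw new ArgumentOutOfRangeException(nameof(batchSize));
        if (maxParallel < 1) throw new ArgumentOutOfRangeException(nameof(maxParallel));

        using var gate = new SemaphoreSlim(maxParallel, maxParallel);
        var running = new List<Task>();

        for (int start = 0; start < userIds.Count; start += batchSize)
        {
            List<string> chunk = userIds.Skip(start).Take(batchSize).ToList();
            await gate.WaitAsync(); // wait for a free slot before starting the next chunk
            running.Add(SubmitAndReleaseAsync(chunk));
        }

        await Task.WhenAll(running);

        // Runs one chunk and always frees its slot, even if the submission fails.
        async Task SubmitAndReleaseAsync(List<string> chunk)
        {
            try { await submitBatch(chunk); }
            finally { gate.Release(); }
        }
    }
}
```

Both `batchSize` and `maxParallel` are workload-dependent and worth measuring rather than guessing; the point of the gate is that raising the number of users raises the number of batches, not the number of concurrent requests.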

We have 3 Cassandra instances and 1 JanusGraph Server instance. CPU and memory usage on both barely changes during the test; about 90% of CPU and memory remains available.

Our consistency configuration is QUORUM/QUORUM with replication factor 3. We also tested ANY/ONE and ALL/ONE. The results were the same: performance was perhaps slightly better, but it still degraded as parallelism increased.
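For reference, under Cassandra's standard definitions the consistency levels above correspond to the following replica counts with replication factor 3, i.e. how many replicas the coordinator waits for per request. $R$ and $W$ are the read and write replica counts, and $R + W > RF$ is the usual condition for a read to be guaranteed to see the latest acknowledged write. ANY is left out because it is write-only and can be satisfied by a stored hint rather than a replica acknowledgement.

```math
\begin{aligned}
\text{QUORUM} &= \left\lfloor \frac{RF}{2} \right\rfloor + 1 = \left\lfloor \frac{3}{2} \right\rfloor + 1 = 2,
\qquad \text{ONE} = 1, \qquad \text{ALL} = RF = 3 \\
R + W &= 2 + 2 = 4 > 3 \quad (\text{QUORUM/QUORUM}),
\qquad R + W = 3 + 1 = 4 > 3 \quad (\text{ALL/ONE})
\end{aligned}
```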

Maybe JanusGraph holds some internal lock on the Content vertex, since it is shared by all queries.
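If such a lock existed and every query had to hold it exclusively, the queries would effectively run one after another, so the last of N concurrent queries would finish after roughly N times the per-query hold time. The sketch below simulates only that effect: a single `SemaphoreSlim` stands in for the hypothetical lock, `Task.Delay` for the work done while holding it, and `SerializationDemo` is an illustrative name. It does not model JanusGraph itself.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class SerializationDemo
{
    // Starts `queries` concurrent tasks that each need the same exclusive lock
    // (a stand-in for a hypothetical lock on the shared Content vertex) and hold
    // it for `holdMs` milliseconds. Returns the time until the last one finishes.
    public static async Task<TimeSpan> RunAsync(int queries, int holdMs)
    {
        using var sharedLock = new SemaphoreSlim(1, 1);
        var stopwatch = Stopwatch.StartNew();
        await Task.WhenAll(Enumerable.Range(0, queries).Select(async _ =>
        {
            await sharedLock.WaitAsync();
            try { await Task.Delay(holdMs); }   // simulated work done under the lock
            finally { sharedLock.Release(); }
        }));
        return stopwatch.Elapsed;
    }

    // Prints the total time for a few concurrency levels; it grows roughly as queries * holdMs.
    public static async Task ReportAsync()
    {
        foreach (int queries in new[] { 1, 10, 100 })
        {
            TimeSpan total = await RunAsync(queries, holdMs: 20);
            Console.WriteLine($"{queries,3} concurrent queries -> last finished after {total.TotalMilliseconds:F0} ms");
        }
    }
}
```

If latency measured in the real system also grows roughly linearly with the number of concurrent queries while CPU stays idle, that pattern is consistent with (though not proof of) serialization on some shared resource.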

We tried different configurations, but nothing helped.

We tried running .profile() on the query during the test. It reports 2 ms in total, yet the query took a few seconds to complete.

Any ideas what we can try, or how we can investigate this further?

Our latest configuration is as follows:

```yaml
  value: '2000'
- name: >-
    janusgraph.storage.buffer-sizestorage.cql.executor-service.core-pool-size
  value: '10240'
- name: janusgraph.storage.cql.executor-service.core-pool-size
  value: '50'
- name: janusgraph.storage.cql.batch-statement-size
  value: '100'
- name: janusgraph.storage.cql.local-max-connections-per-host
  value: '2'
- name: janusgraph.storage.cql.max-requests-per-connection
  value: '5000'
- name: gremlinserver.maxAccumulationBufferComponents
  value: '2048'
- name: gremlinserver.maxChunkSize
  value: '28192'
- name: gremlinserver.maxContentLength
  value: '128192'
- name: gremlinserver.maxWorkQueueSize
  value: '28192'
- name: gremlinserver.threadPoolWorker
  value: '8'
- name: gremlinserver.gremlinPool
  value: '16'
- name: janusgraph.storage.cql.local-datacenter
  value: dc1
- name: JANUS_PROPS_TEMPLATE
  value: cql-es
- name: janusgraph.storage.hostname
  value: jabyss-cassandra-dc1-service
- name: janusgraph.index.search.backend
  value: es
- name: janusgraph.index.search.elasticsearch.http.auth.type
  value: basic
- name: janusgraph.index.search.elasticsearch.http.auth.basic.username
  valueFrom:
    secretKeyRef:
      name: elsearch-credentials
      key: username
- name: janusgraph.index.search.elasticsearch.http.auth.basic.password
  valueFrom:
    secretKeyRef:
      name: elsearch-credentials
      key: password
- name: janusgraph.index.search.hostname
  value: jabyss-master
- name: janusgraph.storage.password
  valueFrom:
    secretKeyRef:
      name: jabyss-cassandra-superuser
      key: password
- name: janusgraph.storage.username
  valueFrom:
    secretKeyRef:
      name: jabyss-cassandra-superuser
      key: username
- name: janusgraph.ids.block-size
  value: '100000'
- name: janusgraph.storage.cql.read-consistency-level
  value: QUORUM
- name: janusgraph.storage.cql.write-consistency-level
  value: QUORUM
- name: janusgraph.storage.cql.replication-factor
  value: '3'
- name: janusgraph.cache.db-cache
  value: 'false'
- name: janusgraph.query.batch
  value: 'true'
- name: janusgraph.query.batch-property-prefetch
  value: 'true'
- name: janusgraph.query.smart-limit
  value: 'true'
- name: janusgraph.storage.batch-loading
  value: 'true'
- name: gremlinserver.writeBufferHighWaterMark
  value: '6553600'
- name: gremlinserver.writeBufferLowWaterMark
  value: '65536'
```
