diff --git a/docs/kusion/1-what-is-kusion/1-overview.md b/docs/kusion/1-what-is-kusion/1-overview.md

index bbbc5fbb..7141aeee 100644

--- a/docs/kusion/1-what-is-kusion/1-overview.md

+++ b/docs/kusion/1-what-is-kusion/1-overview.md

@@ -6,7 +6,7 @@ slug: /

# Overview

-Welcome to Kusion! This introduction section covers what Kusion is, the Kusion workflow, and how Kusion compares to other software. If you just want to dive into using Kusion, feel free to skip ahead to the [Getting Started](getting-started/install-kusion) section.

+Welcome to Kusion! This introduction section covers what Kusion is, the Kusion workflow, and how Kusion compares to other software. If you just want to dive into using Kusion, feel free to skip ahead to the [Getting Started](../2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md) section.

## What is Kusion?

diff --git a/docs/kusion/2-getting-started/1-install-kusion.md b/docs/kusion/2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md

similarity index 99%

rename from docs/kusion/2-getting-started/1-install-kusion.md

rename to docs/kusion/2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md

index 540881d6..556228b4 100644

--- a/docs/kusion/2-getting-started/1-install-kusion.md

+++ b/docs/kusion/2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md

@@ -1,7 +1,7 @@

import Tabs from '@theme/Tabs';

import TabItem from '@theme/TabItem';

-# Install Kusion

+# Install Kusion CLI

You can install the latest Kusion CLI on MacOS, Linux and Windows.

diff --git a/docs/kusion/2-getting-started/2-getting-started-with-kusion-cli/1-deliver-quickstart.md b/docs/kusion/2-getting-started/2-getting-started-with-kusion-cli/1-deliver-quickstart.md

index 744ebd20..ff1c3e9e 100644

--- a/docs/kusion/2-getting-started/2-getting-started-with-kusion-cli/1-deliver-quickstart.md

+++ b/docs/kusion/2-getting-started/2-getting-started-with-kusion-cli/1-deliver-quickstart.md

@@ -10,7 +10,7 @@ In this tutorial, we will walk through how to deploy a quickstart application on

Before we start to play with this example, we need to have the Kusion CLI installed and run an accessible Kubernetes cluster. Here are some helpful documents:

-- Install [Kusion CLI](../1-install-kusion.md).

+- Install [Kusion CLI](../2-getting-started-with-kusion-cli/0-install-kusion.md).

- Run a [Kubernetes](https://kubernetes.io) cluster. Some light and convenient options for Kubernetes local deployment include [k3s](https://docs.k3s.io/quick-start), [k3d](https://k3d.io/v5.4.4/#installation), and [MiniKube](https://minikube.sigs.k8s.io/docs/tutorials/multi_node).

## Initialize Project

diff --git a/docs/kusion/2-getting-started/3-getting-started-with-kusion-server/1-deliver-quickstart.md b/docs/kusion/2-getting-started/3-getting-started-with-kusion-server/1-deliver-quickstart.md

index e9d5c5d8..d2488116 100644

--- a/docs/kusion/2-getting-started/3-getting-started-with-kusion-server/1-deliver-quickstart.md

+++ b/docs/kusion/2-getting-started/3-getting-started-with-kusion-server/1-deliver-quickstart.md

@@ -10,7 +10,7 @@ In this tutorial, we will walk through how to deploy a quickstart application on

Before we start to play with this example, we need to have the Kusion Server installed and run an accessible Kubernetes cluster. Here are some helpful documents:

-- Install [Kusion Server](../1-install-kusion.md).

+- Install [Kusion Server](./0-installation.md).

- Run a [Kubernetes](https://kubernetes.io) cluster. Some light and convenient options for Kubernetes local deployment include [k3s](https://docs.k3s.io/quick-start), [k3d](https://k3d.io/v5.4.4/#installation), and [MiniKube](https://minikube.sigs.k8s.io/docs/tutorials/multi_node).

## Initialize Backend, Source, and Workspace

diff --git a/docs/kusion/2-getting-started/3-getting-started-with-kusion-server/2-deliver-quickstart-with-db.md b/docs/kusion/2-getting-started/3-getting-started-with-kusion-server/2-deliver-quickstart-with-db.md

index d0993aec..d5632ab9 100644

--- a/docs/kusion/2-getting-started/3-getting-started-with-kusion-server/2-deliver-quickstart-with-db.md

+++ b/docs/kusion/2-getting-started/3-getting-started-with-kusion-server/2-deliver-quickstart-with-db.md

@@ -10,7 +10,7 @@ In this tutorial, we will learn how to create and manage our own application wit

Before we start to play with this example, we need to have the Kusion Server installed and run an accessible Kubernetes cluster. Besides, we need to have a GitHub account to initiate our own config code repository as `Source` in Kusion.

-- Install [Kusion Server](../1-install-kusion.md).

+- Install [Kusion Server](./0-installation.md).

- Run a [Kubernetes](https://kubernetes.io) cluster. Some light and convenient options for Kubernetes local deployment include [k3s](https://docs.k3s.io/quick-start), [k3d](https://k3d.io/v5.4.4/#installation), and [MiniKube](https://minikube.sigs.k8s.io/docs/tutorials/multi_node).

diff --git a/docs/kusion/5-user-guides/1-using-kusion-cli/1-cloud-resources/1-database.md b/docs/kusion/5-user-guides/1-using-kusion-cli/1-cloud-resources/1-database.md

index 613b01bf..ecd99d4b 100644

--- a/docs/kusion/5-user-guides/1-using-kusion-cli/1-cloud-resources/1-database.md

+++ b/docs/kusion/5-user-guides/1-using-kusion-cli/1-cloud-resources/1-database.md

@@ -11,7 +11,7 @@ This tutorial will demonstrate how to deploy a WordPress application with Kusion

## Prerequisites

-- Install [Kusion](../../../2-getting-started/1-install-kusion.md).

+- Install [Kusion](../../../2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md).

- Install [kubectl CLI](https://kubernetes.io/docs/tasks/tools/#kubectl) and run a [Kubernetes](https://kubernetes.io/) or [k3s](https://docs.k3s.io/quick-start) or [k3d](https://k3d.io/v5.4.4/#installation) or [MiniKube](https://minikube.sigs.k8s.io/docs/tutorials/multi_node) cluster.

- Prepare a cloud service account and create a user with at least **VPCFullAccess** and **RDSFullAccess** related permissions to use the Relational Database Service (RDS). This kind of user can be created and managed in the Identity and Access Management (IAM) console of the cloud vendor.

- The environment that executes `kusion` needs to have connectivity to terraform registry to download the terraform providers.

diff --git a/docs/kusion/5-user-guides/1-using-kusion-cli/2-working-with-k8s/1-deploy-application.md b/docs/kusion/5-user-guides/1-using-kusion-cli/2-working-with-k8s/1-deploy-application.md

index df283a11..7ab07df3 100644

--- a/docs/kusion/5-user-guides/1-using-kusion-cli/2-working-with-k8s/1-deploy-application.md

+++ b/docs/kusion/5-user-guides/1-using-kusion-cli/2-working-with-k8s/1-deploy-application.md

@@ -31,7 +31,7 @@ Before we start, we need to complete the following steps:

1、Install Kusion

We recommend using HomeBrew(Mac), Scoop(Windows), or an installation shell script to download and install Kusion.

-See [Download and Install](../../../2-getting-started/1-install-kusion.md) for more details.

+See [Download and Install](../../../2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md) for more details.

2、Running Kubernetes cluster

diff --git a/docs_versioned_docs/version-v0.14/1-what-is-kusion/1-overview.md b/docs_versioned_docs/version-v0.14/1-what-is-kusion/1-overview.md

new file mode 100644

index 00000000..7141aeee

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/1-what-is-kusion/1-overview.md

@@ -0,0 +1,62 @@

+---

+id: overview

+title: Overview

+slug: /

+---

+

+# Overview

+

+Welcome to Kusion! This introduction section covers what Kusion is, the Kusion workflow, and how Kusion compares to other software. If you just want to dive into using Kusion, feel free to skip ahead to the [Getting Started](../2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md) section.

+

+## What is Kusion?

+

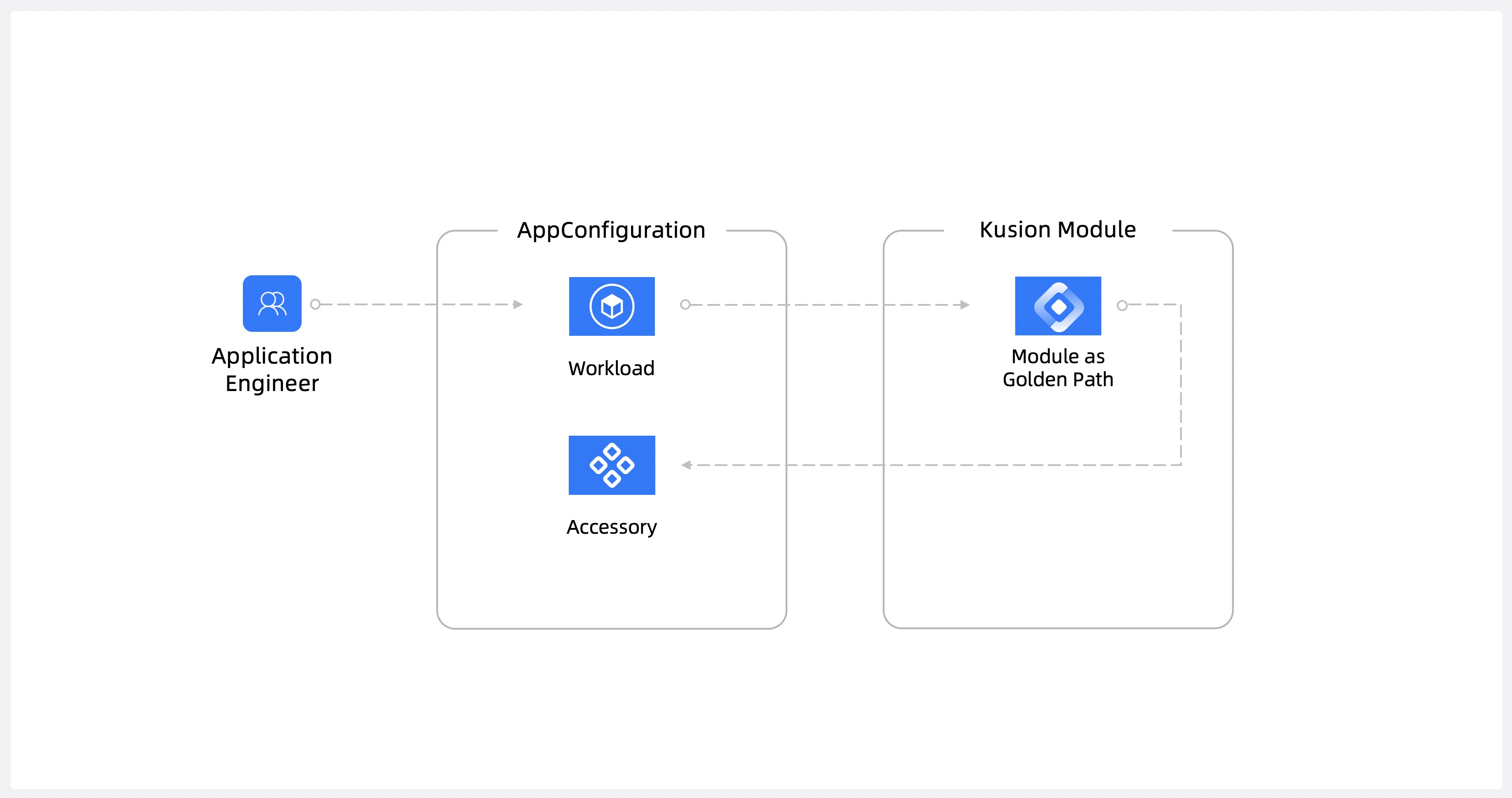

+Kusion is an intent-driven [Platform Orchestrator](https://internaldeveloperplatform.org/platform-orchestrators/), which sits at the core of an [Internal Developer Platform (IDP)](https://internaldeveloperplatform.org/what-is-an-internal-developer-platform/). With Kusion, you can enable app-centric development: your developers only need to write a single application specification, [AppConfiguration](https://www.kusionstack.io/docs/concepts/app-configuration). [AppConfiguration](https://www.kusionstack.io/docs/concepts/app-configuration) defines the workload and all resource dependencies without requiring environment-specific values, and Kusion ensures that everything the application needs to run is provided.

+

+Kusion helps app developers who are responsible for creating applications and the platform engineers responsible for maintaining the infrastructure the applications run on. These roles may overlap or align differently in your organization, but Kusion is intended to ease the workload for any practitioner responsible for either set of tasks.

+

+

+

+

+## How does Kusion work?

+

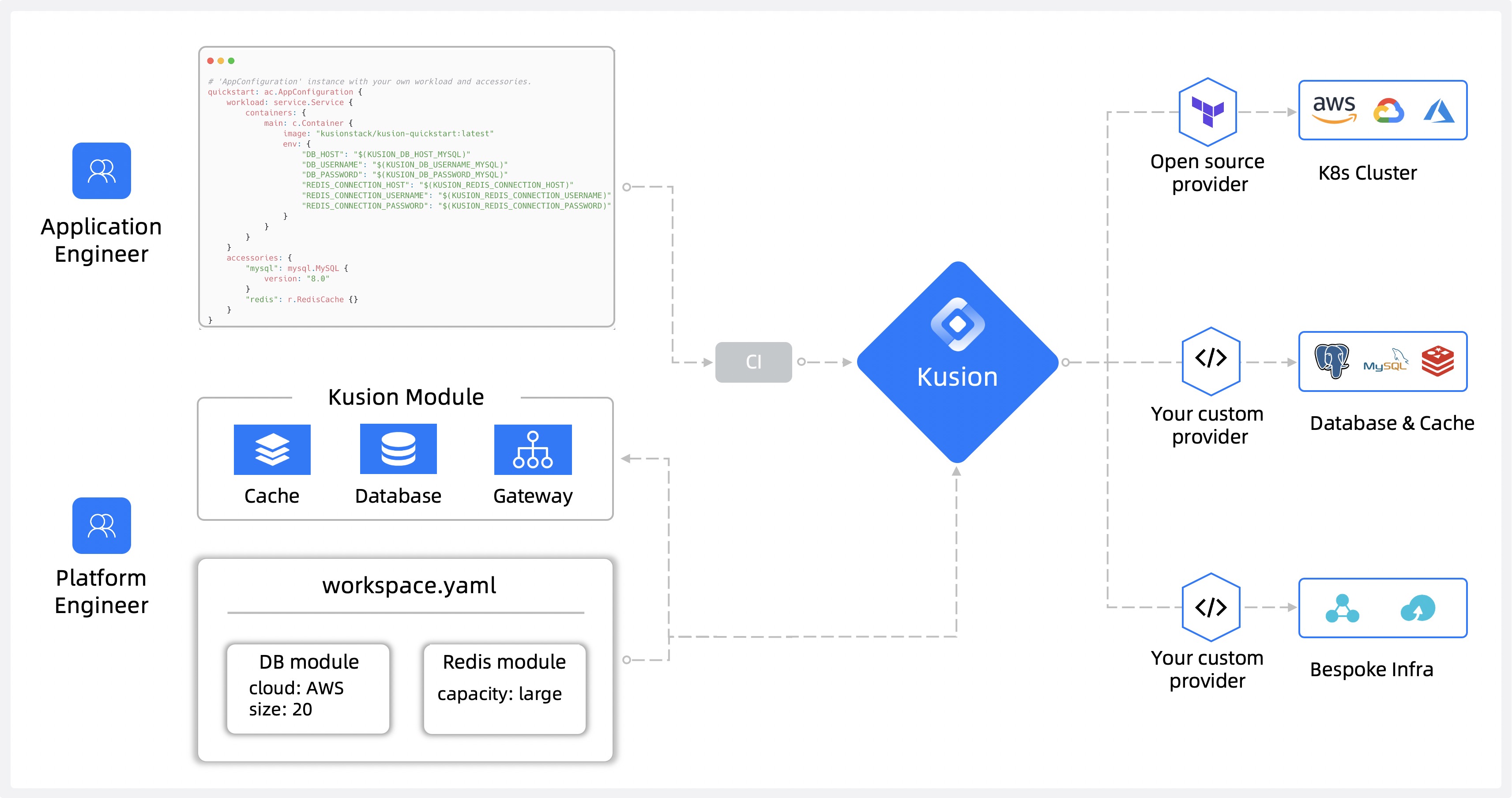

+As a Platform Orchestrator, Kusion enables you to address challenges often associated with Day 0 and Day 1. Both platform engineers and application engineers can benefit from Kusion.

+

+There are two key workflows for Kusion:

+

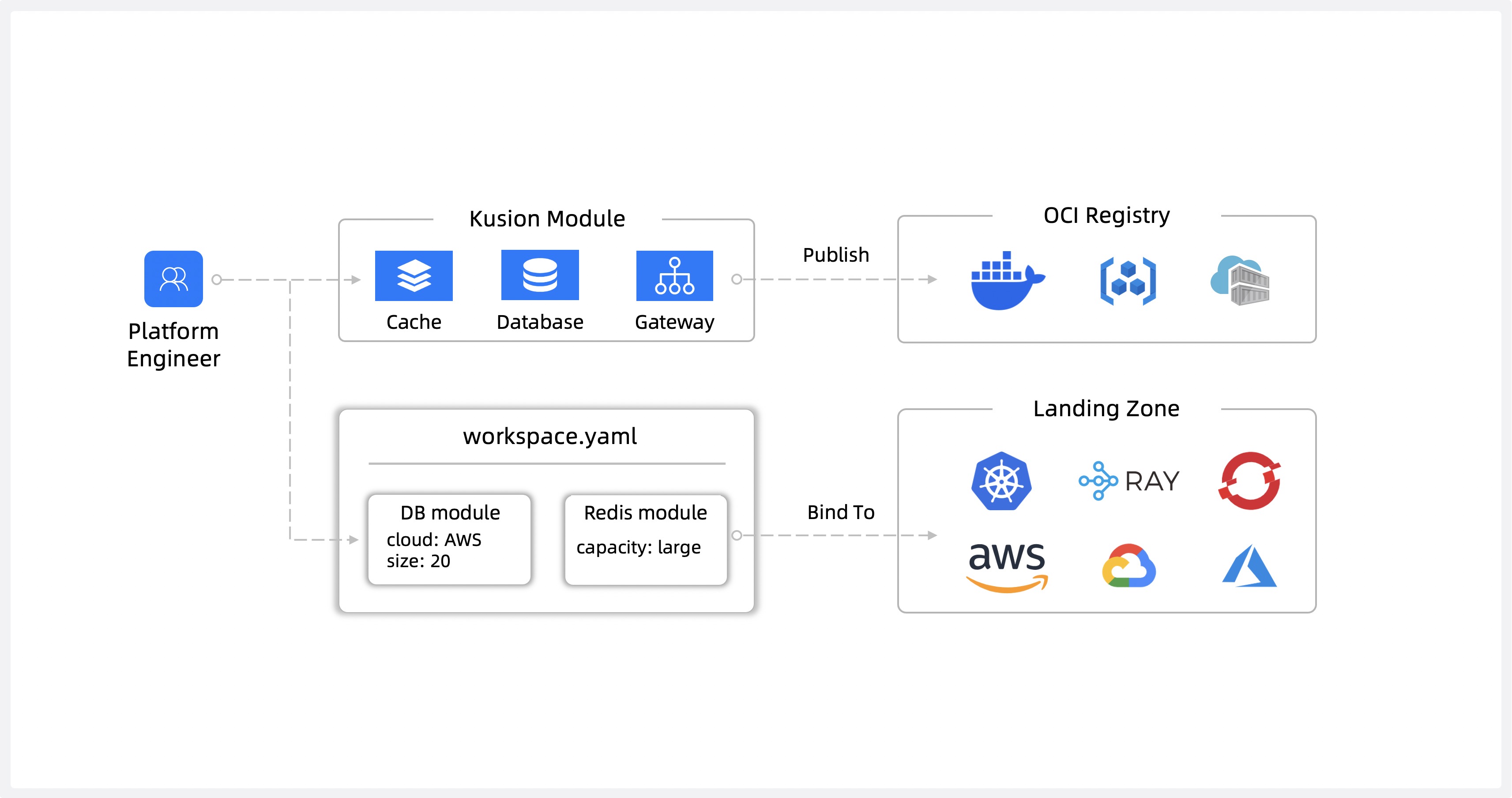

+1. **Day 0 - Set up the modules and workspaces:** Platform engineers create shared modules for deploying applications and their underlying infrastructure, and workspace definitions for the target landing zones. These standardized, shared modules codify the requirements from stakeholders across the organization, including security, compliance, and finance.

+

+ Kusion modules abstract the complexity of underlying infrastructure tooling, enabling app developers to deploy their applications using a self-service model.

+

+

+

+

+

+

+2. **Day 1 - Set up the application:** Application developers leverage the workspaces and modules created by the platform engineers to deploy applications and their supporting infrastructure. The platform team maintains the workspaces and modules, which allows application developers to focus on building applications using a repeatable process on standardized infrastructure.

+

+

+

+

+

+

+## Kusion Highlights

+

+* **Platform as Code**

+

+ Specify the desired application intent through declarative configuration code, and drive continuous deployment with any CI/CD system or GitOps workflow to match that intent. No ad-hoc scripts, no hard-to-maintain custom workflows, just declarative configuration code.

+

+* **Dynamic Configuration Management**

+

+ Enable platform teams to set baseline templates and control how and where application workloads are deployed and accessory resources are provisioned, while still giving application developers freedom via workload-centric specification and deployment.

+

+* **Security & Compliance Built In**

+

+ Enforce security and infrastructure best practices with out-of-the-box [base models](https://github.com/KusionStack/catalog), and create security and compliance guardrails for any Kusion deployment with third-party Policy as Code tools. All accessory resource secrets are automatically injected into Workloads.

+

+* **Lightweight and Open Model Ecosystem**

+

+ A pure client-side solution ensures good portability, and the rich APIs make it easier to integrate and automate. A large and growing model ecosystem covers all stages of the application lifecycle, with extensive connections to various infrastructure capabilities.

+

+:::tip

+

+**Kusion is an early project.** The end goal of Kusion is to boost the [Internal Developer Platform](https://internaldeveloperplatform.org/) revolution, and we are iterating on Kusion quickly to work toward this goal. For any help or feedback, please contact us in [Slack](https://github.com/KusionStack/community/discussions/categories/meeting) or [issues](https://github.com/KusionStack/kusion/issues).

diff --git a/docs_versioned_docs/version-v0.14/1-what-is-kusion/2-kusion-vs-x.md b/docs_versioned_docs/version-v0.14/1-what-is-kusion/2-kusion-vs-x.md

new file mode 100644

index 00000000..ca077620

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/1-what-is-kusion/2-kusion-vs-x.md

@@ -0,0 +1,37 @@

+---

+id: kusion-vs-x

+---

+

+# Kusion vs Other Software

+

+It can be difficult to understand how different tools compare to each other. Is one a replacement for the other? Are they complementary? In this section, we compare Kusion to other software.

+

+**vs. GitOps (ArgoCD, FluxCD, etc.)**

+

+According to the [open GitOps principles](https://opengitops.dev/), GitOps systems typically have their desired state expressed declaratively, continuously observe the actual system state, and attempt to apply the desired state. In designing the Kusion toolchain, we referred to those principles but have no intention of reinventing the GitOps wheel.

+

+Kusion fits into your existing GitOps process and enriches it with additional features. The declarative [AppConfiguration](../concepts/appconfigurations) model can be used to express the desired intent; once the intent is declared, the [Kusion CLI](../reference/commands) takes on the role of making production match that intent as safely as possible.

+

+**vs. PaaS (Heroku, Vercel, etc.)**

+

+Kusion shares the same goal as traditional PaaS platforms: providing application delivery and management capabilities. The most obvious difference from full-featured PaaS platforms is that Kusion is a client-side toolchain, not a complete PaaS platform.

+

+Also, traditional PaaS platforms typically constrain the types of applications they can run, whereas Kusion has no such constraint, which gives it greater flexibility.

+

+Kusion allows you to have platform-like features without the constraints of a traditional PaaS. However, Kusion is not attempting to replace any PaaS platform; instead, Kusion can be used to deploy to a platform such as Heroku.

+

+**vs. KubeVela**

+

+KubeVela is a modern software delivery and management control plane which makes it easier to deploy and operate applications on top of Kubernetes.

+

+Although some might initially perceive an overlap between Kusion and KubeVela, they are in fact complementary and can be integrated to work together. As a lightweight, purely client-side tool, coupled with a corresponding [Generator](https://github.com/KusionStack/kusion-module-framework) implementation, Kusion can render the [AppConfiguration](../concepts/appconfigurations) schema to generate CRD resources for KubeVela and leverage KubeVela's control plane to implement application delivery.

+

+**vs. Helm**

+

+The concept of Helm originates from the [package management](https://en.wikipedia.org/wiki/Package_manager) mechanism of the operating system. It is a package management tool based on templated YAML files and supports the execution and management of resources in the package.

+

+Kusion is not a package manager. Kusion naturally provides a superset of Helm capabilities with the modeled key-value pairs, so developers can use Kusion directly as a programmable alternative and avoid the pain of writing text templates. For users who have adopted Helm, the stack compilation results in Kusion can be packaged and used in Helm format.

+

+**vs. Kubernetes**

+

+Kubernetes (K8s) is a container scheduling and management runtime widely used around the world, an "operating system" core for containers, and a platform for building platforms. Above the Kubernetes API layer, Kusion aims to provide app-centric **abstraction** and a unified **workspace**, a better **user experience** and an automation **workflow**, and helps developers build the app delivery model easily and collaboratively.

diff --git a/docs_versioned_docs/version-v0.14/1-what-is-kusion/_category_.json b/docs_versioned_docs/version-v0.14/1-what-is-kusion/_category_.json

new file mode 100644

index 00000000..0817eb90

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/1-what-is-kusion/_category_.json

@@ -0,0 +1,3 @@

+{

+ "label": "What is Kusion?"

+}

diff --git a/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md b/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md

new file mode 100644

index 00000000..556228b4

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/0-install-kusion.md

@@ -0,0 +1,144 @@

+import Tabs from '@theme/Tabs';

+import TabItem from '@theme/TabItem';

+

+# Install Kusion CLI

+

+You can install the latest Kusion CLI on macOS, Linux, and Windows.

+

+## macOS/Linux

+

+On macOS and Linux, Homebrew and a shell script are supported. Choose the method you prefer below.

+

+

+

+

+The recommended method for installing on macOS and Linux is to use the Homebrew package manager.

+

+**Install Kusion**

+

+```bash

+# tap formula repository Kusionstack/tap

+brew tap KusionStack/tap

+

+# install Kusion

+brew install KusionStack/tap/kusion

+```

+

+**Update Kusion**

+

+```bash

+# update formulae from remote

+brew update

+

+# update Kusion

+brew upgrade KusionStack/tap/kusion

+```

+

+**Uninstall Kusion**

+

+```bash

+# uninstall Kusion

+brew uninstall KusionStack/tap/kusion

+```

+

+```mdx-code-block

+

+

+```

+

+**Install Kusion**

+

+```bash

+# install Kusion, default latest version

+curl https://www.kusionstack.io/scripts/install.sh | sh

+```

+

+**Install the Specified Version of Kusion**

+

+You can also install a specific version of Kusion by passing the version as a parameter to the shell script. The version is an [available tag](https://github.com/KusionStack/kusion/tags) without the "v" prefix, such as 0.11.0 or 0.10.0. In general, you don't need to specify a version; the command above installs the latest one.

+

+```bash

+# install Kusion of specified version 0.11.0

+curl https://www.kusionstack.io/scripts/install.sh | sh -s 0.11.0

+```
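The version argument is the tag name with its leading "v" removed. If you copy a tag such as `v0.11.0` from the tags page, the following sketch strips the prefix with POSIX parameter expansion before handing it to the install script; the `TAG` value is only an example.

```shell
# Example tag copied from https://github.com/KusionStack/kusion/tags
TAG="v0.11.0"

# Remove a single leading "v" (POSIX parameter expansion)
VERSION="${TAG#v}"
echo "$VERSION"

# Pass the trimmed version to the install script:
# curl https://www.kusionstack.io/scripts/install.sh | sh -s "$VERSION"
```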

+

+**Uninstall Kusion**

+

+```bash

+# uninstall Kusion

+curl https://www.kusionstack.io/scripts/uninstall.sh | sh

+```

+

+```mdx-code-block

+

+

+```

+

+## Windows

+

+On Windows, Scoop and a PowerShell script are supported. Choose the method you prefer below.

+

+

+

+

+The recommended method for installing on Windows is to use the Scoop package manager.

+

+**Install Kusion**

+

+```bash

+# add scoop bucket KusionStack

+scoop bucket add KusionStack https://github.com/KusionStack/scoop-bucket.git

+

+# install kusion

+scoop install KusionStack/kusion

+```

+

+**Update Kusion**

+

+```bash

+# update manifest from remote

+scoop update

+

+# update Kusion

+scoop update kusion

+```

+

+**Uninstall Kusion**

+

+```bash

+# uninstall Kusion

+scoop uninstall kusion

+```

+

+```mdx-code-block

+

+

+```

+

+**Install Kusion**

+

+```bash

+# install Kusion, default latest version

+powershell -Command "iwr -useb https://www.kusionstack.io/scripts/install.ps1 | iex"

+```

+

+**Install the Specified Version of Kusion**

+

+You can also install a specific version of Kusion by passing the version as a parameter to the PowerShell script. The version is an [available tag](https://github.com/KusionStack/kusion/tags) without the "v" prefix, such as 0.11.0. In general, you don't need to specify a version; the command above installs the latest one.

+

+```bash

+# install Kusion of specified version 0.11.0

+powershell {"& { $(irm https://www.kusionstack.io/scripts/install.ps1) } -Version 0.11.0" | iex}

+```

+

+**Uninstall Kusion**

+

+```bash

+# uninstall Kusion

+powershell -Command "iwr -useb https://www.kusionstack.io/scripts/uninstall.ps1 | iex"

+```

+

+```mdx-code-block

+

+

+```

diff --git a/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/1-deliver-quickstart.md b/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/1-deliver-quickstart.md

new file mode 100644

index 00000000..ff1c3e9e

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/1-deliver-quickstart.md

@@ -0,0 +1,221 @@

+---

+id: deliver-quickstart

+---

+

+# Run Your First App on Kubernetes with Kusion CLI

+

+In this tutorial, we will walk through how to deploy a quickstart application on Kubernetes with Kusion. The demo application can interact with a locally deployed MySQL database, which is declared as an accessory in the config codes and will be automatically created and managed by Kusion.

+

+## Prerequisites

+

+Before we start to play with this example, we need to have the Kusion CLI installed and run an accessible Kubernetes cluster. Here are some helpful documents:

+

+- Install [Kusion CLI](../2-getting-started-with-kusion-cli/0-install-kusion.md).

+- Run a [Kubernetes](https://kubernetes.io) cluster. Some light and convenient options for Kubernetes local deployment include [k3s](https://docs.k3s.io/quick-start), [k3d](https://k3d.io/v5.4.4/#installation), and [MiniKube](https://minikube.sigs.k8s.io/docs/tutorials/multi_node).

+

+## Initialize Project

+

+We can start by initializing this tutorial project with the `kusion init` command.

+

+```shell

+# Create a new directory and navigate into it.

+mkdir quickstart && cd quickstart

+

+# Initialize the demo project with the name of the current directory.

+kusion init

+```

+

+The created project structure looks like below:

+

+```shell

+tree

+.

+├── default

+│ ├── kcl.mod

+│ ├── main.k

+│ └── stack.yaml

+└── project.yaml

+

+2 directories, 4 files

+```

+

+:::info

+More details about the project and stack structure can be found in [Project](../../3-concepts/1-project/1-overview.md) and [Stack](../../3-concepts/2-stack/1-overview.md).

+:::

+

+### Review Configuration Files

+

+Now let's have a glance at the configuration codes of `default` stack:

+

+```shell

+cat default/main.k

+```

+

+```python

+import kam.v1.app_configuration as ac

+import service

+import service.container as c

+import network as n

+

+# main.k declares the customized configuration codes for default stack.

+quickstart: ac.AppConfiguration {

+ workload: service.Service {

+ containers: {

+ quickstart: c.Container {

+ image: "kusionstack/kusion-quickstart:latest"

+ }

+ }

+ }

+ accessories: {

+ "network": n.Network {

+ ports: [

+ n.Port {

+ port: 8080

+ }

+ ]

+ }

+ }

+}

+```

+

+The configuration file `main.k`, usually written by the **App Developers**, declares the customized configuration codes for `default` stack, including an `AppConfiguration` instance with the name of `quickstart`. The `quickstart` application consists of a `Workload` with the type of `service.Service`, which runs a container named `quickstart` using the image of `kusionstack/kusion-quickstart:latest`.

+

+Besides, it declares a **Kusion Module** with the type of `network.Network`, exposing port `8080` so that the long-running service can be accessed.

+

+The `AppConfiguration` model can hide the major complexity of Kubernetes resources such as `Namespace`, `Deployment`, and `Service` which will be created and managed by Kusion, providing the concepts that are **application-centric** and **infrastructure-agnostic** for a more developer-friendly experience.

+

+:::info

+More details about the `AppConfiguration` model and built-in Kusion Module can be found in [kam](https://github.com/KusionStack/kam) and [catalog](https://github.com/KusionStack/catalog).

+:::

+

+The declaration of the dependency packages can be found in `default/kcl.mod`:

+

+```shell

+cat default/kcl.mod

+```

+

+```toml

+[dependencies]

+kam = { git = "https://github.com/KusionStack/kam.git", tag = "0.2.0" }

+service = {oci = "oci://ghcr.io/kusionstack/service", tag = "0.1.0" }

+network = { oci = "oci://ghcr.io/kusionstack/network", tag = "0.2.0" }

+```

+

+:::info

+More details about the application model and module dependency declaration can be found in [Kusion Module guide for app dev](../../3-concepts/3-module/3-app-dev-guide.md).

+:::

+

+:::tip

+The specific module versions used in the above demonstration are only applicable to Kusion CLI versions after **v0.12.0**.

+:::

+

+## Application Delivery

+

+Use the following command to deliver the quickstart application in the `default` stack to your accessible Kubernetes cluster, while watching the resource creation and automatically port-forwarding the specified port (8080) from your local machine to the Kubernetes Service of the application. We can check the details of the resource preview before confirming to apply the diffs.

+

+```shell

+cd default && kusion apply --port-forward 8080

+```

+

+

+

+:::info

+During the first apply, the models and modules that the application depends on will be downloaded, so it may take some time (usually within one minute). You can take a break and have a cup of coffee.

+:::

+

+:::info

+By default, Kusion creates the Kubernetes resources of the application in a namespace with the same name as the project. If you want to customize the namespace, please refer to [Project Namespace Extension](../../3-concepts/1-project/2-configuration.md#kubernetesnamespace) and [Stack Namespace Extension](../../3-concepts/2-stack/2-configuration.md#kubernetesnamespace).

+:::

+

+Now we can visit [http://localhost:8080](http://localhost:8080) in our browser and play with the demo application!

+

+

+

+## Add MySQL Accessory

+

+As you can see, the demo application page indicates that the MySQL database is not ready yet. Hence, we will now add a MySQL database as an accessory for the workload.

+

+We can add the Kusion-provided built-in dependency in the `default/kcl.mod`, so that we can use the `MySQL` module in the configuration codes.

+

+```toml

+[dependencies]

+kam = { git = "https://github.com/KusionStack/kam.git", tag = "0.2.0" }

+service = {oci = "oci://ghcr.io/kusionstack/service", tag = "0.1.0" }

+network = { oci = "oci://ghcr.io/kusionstack/network", tag = "0.2.0" }

+mysql = { oci = "oci://ghcr.io/kusionstack/mysql", tag = "0.2.0" }

+```

+

+We can update the `default/main.k` with the following configuration codes:

+

+```python

+import kam.v1.app_configuration as ac

+import service

+import service.container as c

+import network as n

+import mysql

+

+# main.k declares the customized configuration codes for default stack.

+quickstart: ac.AppConfiguration {

+ workload: service.Service {

+ containers: {

+ quickstart: c.Container {

+ image: "kusionstack/kusion-quickstart:latest"

+ env: {

+ "DB_HOST": "$(KUSION_DB_HOST_QUICKSTART_DEFAULT_QUICKSTART_MYSQL)"

+ "DB_USERNAME": "$(KUSION_DB_USERNAME_QUICKSTART_DEFAULT_QUICKSTART_MYSQL)"

+ "DB_PASSWORD": "$(KUSION_DB_PASSWORD_QUICKSTART_DEFAULT_QUICKSTART_MYSQL)"

+ }

+ }

+ }

+ }

+ accessories: {

+ "network": n.Network {

+ ports: [

+ n.Port {

+ port: 8080

+ }

+ ]

+ }

+ "mysql": mysql.MySQL {

+ type: "local"

+ version: "8.0"

+ }

+ }

+}

+```

+

+The configuration codes above declare a local `mysql.MySQL` with the engine version of `8.0` as an accessory for the application workload. The necessary Kubernetes resources for deploying and using the local MySQL database will be generated and users can get the `host`, `username` and `password` of the database through the [MySQL Credentials And Connectivity](../../6-reference/2-modules/1-developer-schemas/database/mysql.md#credentials-and-connectivity) of Kusion in application containers.

+

+:::info

+For more information about the naming convention of Kusion built-in MySQL module, you can refer to [Module Naming Convention](../../6-reference/2-modules/3-naming-conventions.md).

+:::

+

+After that, we can re-apply the application and set `--watch=false` to skip watching the resources being reconciled:

+

+```shell

+kusion apply --port-forward 8080 --watch=false

+```

+

+

+

+:::info

+You may need to wait another minute for the MySQL module to be downloaded.

+:::

+

+Let's visit [http://localhost:8080](http://localhost:8080) in our browser, and we can find that the application has successfully connected to the MySQL database. The connection information is also printed on the page.

+

+

+

+Now please feel free to enjoy the demo application!

+

+

+

+## Delete Application

+

+We can delete the quickstart demo workload and related accessory resources with the following command:

+

+```shell

+kusion destroy --yes

+```

+

+

diff --git a/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/_category_.json b/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/_category_.json

new file mode 100644

index 00000000..da29385b

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/2-getting-started/2-getting-started-with-kusion-cli/_category_.json

@@ -0,0 +1,3 @@

+{

+ "label": "Start with Kusion CLI"

+}

\ No newline at end of file

diff --git a/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/0-installation.md b/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/0-installation.md

new file mode 100644

index 00000000..d667f64b

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/0-installation.md

@@ -0,0 +1,222 @@

+---

+title: Installation

+---

+

+## Install with Helm

+

+If you have a Kubernetes cluster, Helm is the recommended installation method.

+

+The following tutorial guides you through installing Kusion with Helm, which installs the chart with the release name `kusion-release` in the namespace `kusion`.

+

+### Prerequisites

+

+* Helm v3+

+* A Kubernetes cluster (the simplest option is to deploy one locally using `kind` or `minikube`)

+

+### Installation Options

+

+> Note: A valid kubeconfig configuration is required for Kusion to function properly. You must either use the installation script, provide your own kubeconfig in `values.yaml`, or set it through the `--set` parameter.

+

+You have several options to install Kusion:

+

+#### 1. Using the installation script (recommended)

+

+Download the installation script from the [KusionStack charts repository](https://github.com/KusionStack/charts/blob/master/scripts/install-kusion.sh):

+

+```shell

+curl -O https://raw.githubusercontent.com/KusionStack/charts/master/scripts/install-kusion.sh

+chmod +x install-kusion.sh

+```

+

+Run the installation script with your kubeconfig files:

+

+```shell

+./install-kusion.sh ...

+```

+

+**Parameters:**

+

+- **kubeconfig_key**: The key for the kubeconfig file. It should be unique and not contain spaces.

+

+- **kubeconfig_path**: The path to the kubeconfig file.
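A hypothetical pre-flight helper (not part of `install-kusion.sh`) can check the constraints listed above before you run the script: each key must be non-empty, free of spaces, and unique, and each kubeconfig path must be readable. The function name `check_kubeconfig_entries` and its KEY PATH argument pairs are illustrative assumptions, not the script's own interface.

```shell
# Hypothetical helper, not shipped with install-kusion.sh.
# Usage: check_kubeconfig_entries KEY1 PATH1 [KEY2 PATH2 ...]
check_kubeconfig_entries() {
  kc_seen=" "
  while [ "$#" -ge 2 ]; do
    kc_key=$1
    kc_path=$2
    shift 2

    # Keys must be non-empty and must not contain spaces.
    case "$kc_key" in
      "" | *" "*)
        echo "invalid kubeconfig_key '$kc_key'" >&2
        return 1 ;;
    esac

    # Keys must be unique across all entries.
    case "$kc_seen" in
      *" $kc_key "*)
        echo "duplicate kubeconfig_key '$kc_key'" >&2
        return 1 ;;
    esac
    kc_seen="$kc_seen$kc_key "

    # The kubeconfig file must exist and be readable.
    if [ ! -r "$kc_path" ]; then
      echo "cannot read kubeconfig_path '$kc_path'" >&2
      return 1
    fi
  done

  # An odd number of arguments means a key without a path.
  if [ "$#" -ne 0 ]; then
    echo "expected KEY PATH pairs" >&2
    return 1
  fi
  return 0
}

# Example (keys and paths are placeholders):
# check_kubeconfig_entries cluster-a ~/.kube/cluster-a cluster-b ~/.kube/cluster-b
```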

+

+#### 2. Remote installation with Helm

+

+First, add the `kusionstack` chart repo to your local repository:

+

+```shell

+helm repo add kusionstack https://kusionstack.github.io/charts

+helm repo update

+```

+

+Then install with your encoded kubeconfig:

+

+```shell

+# Base64 encode your kubeconfig files

+KUBECONFIG_CONTENT1=$(base64 -w 0 /path/to/your/kubeconfig1)

+KUBECONFIG_CONTENT2=$(base64 -w 0 /path/to/your/kubeconfig2)

+

+# Install with kubeconfig and optional configurations

+helm install kusion-release kusionstack/kusion \

+--set kubeconfig.kubeConfigs.kubeconfig0="$KUBECONFIG_CONTENT1" \

+--set kubeconfig.kubeConfigs.kubeconfig1="$KUBECONFIG_CONTENT2"

+```
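`base64 -w 0` disables line wrapping, but `-w` is a GNU coreutils option that the BSD `base64` shipped with some macOS versions does not accept. A portable sketch that produces the same single-line output by deleting newlines instead; the function name `encode_kubeconfig` is illustrative.

```shell
# Encode a kubeconfig file as a single-line base64 string.
# Works with GNU and BSD base64: any wrapping newlines are removed by tr.
encode_kubeconfig() {
  base64 < "$1" | tr -d '\n'
}

# Drop-in replacement for the commands above:
# KUBECONFIG_CONTENT1=$(encode_kubeconfig /path/to/your/kubeconfig1)
# KUBECONFIG_CONTENT2=$(encode_kubeconfig /path/to/your/kubeconfig2)
```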

+

+If you are deploying to a production cluster, or want to customize the chart configuration (such as `database`, `replicas`, or `port`), you may need to set your own configuration values.

+

+```shell

+helm install kusion-release kusionstack/kusion \

+--set kubeconfig.kubeConfigs.kubeconfig0="$KUBECONFIG_CONTENT1" \

+--set kubeconfig.kubeConfigs.kubeconfig1="$KUBECONFIG_CONTENT2" \

+--set server.port=8080 \

+--set server.replicas=3 \

+--set mysql.enabled=true

+```
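Instead of a long chain of `--set` flags, Helm can also read overrides from a YAML file passed with `-f` (`--values`). The sketch below writes the optional settings from the previous command into such a file. The file name `kusion-values.yaml` is arbitrary, and the keys simply mirror the `--set` paths.

```shell
# Write the optional overrides from the command above into a values file.
# Keys mirror the --set paths: server.port, server.replicas, mysql.enabled.
cat > kusion-values.yaml <<'EOF'
server:
  port: 8080
  replicas: 3
mysql:
  enabled: true
EOF

# Then pass the file with -f; the kubeconfigs can still be supplied via --set:
# helm install kusion-release kusionstack/kusion -f kusion-values.yaml \
#   --set kubeconfig.kubeConfigs.kubeconfig0="$KUBECONFIG_CONTENT1" \
#   --set kubeconfig.kubeConfigs.kubeconfig1="$KUBECONFIG_CONTENT2"
```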

+

+> All configurable parameters of the Kusion chart are described in [Chart Parameters](#chart-parameters).

+

+### Search all available versions

+

+You can use the following command to view all installable chart versions.

+

+```shell

+helm repo update

+helm search repo kusionstack/kusion --versions

+```

+

+### Upgrade specified version

+

+You can specify the version to upgrade to with the `--version` flag.

+

+```shell

+# Upgrade to the latest version.

+helm upgrade kusion-release kusionstack/kusion

+

+# Upgrade to the specified version.

+helm upgrade kusion-release kusionstack/kusion --version 1.2.3

+```

+

+### Local Installation

+

+If you have problems connecting to [https://kusionstack.github.io/charts/](https://kusionstack.github.io/charts/) in production, you may need to manually download the chart from the [KusionStack charts repository](https://github.com/KusionStack/charts) and use it to install or upgrade locally.

+

+```shell

+git clone https://github.com/KusionStack/charts.git

+```

+

+Edit the [default template values](https://github.com/KusionStack/charts/blob/master/charts/kusion/values.yaml) file to set your own kubeconfig and other configurations.

+> For more information about the KubeConfig configuration, please refer to the [KubeConfig](#kubeconfig) section.

+

+Then install the chart:

+

+```shell

+helm install kusion-release charts/kusion

+```

+

+### Uninstall

+

+To uninstall/delete the `kusion-release` Helm release in namespace `kusion`:

+

+```shell

+helm uninstall kusion-release

+```

+

+### Image Registry Proxy for China

+

+If you are in China and have trouble pulling images from the official Docker Hub, you can use a registry proxy:

+

+```shell

+helm install kusion-release kusionstack/kusion --set registryProxy=docker.m.daocloud.io

+```

+

+**NOTE**: The above is just an example; you can replace the value of `registryProxy` as needed. You also need to provide your own kubeconfig in `values.yaml` or set it through the `--set` parameter.

+

+## Chart Parameters

+

+The following table lists the configurable parameters of the chart and their default values.

+

+### General Parameters

+

+| Key | Type | Default | Description |

+|-----|------|---------|-------------|

+| namespace | string | `"kusion"` | Namespace to deploy into |

+| namespaceEnabled | bool | `true` | Whether to generate namespace |

+| registryProxy | string | `""` | Image registry proxy used as the prefix for all component images |
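One plausible reading of the `registryProxy` row is that the proxy is prepended to each component's `repo:tag` reference. The helper below illustrates that reading. It is hypothetical: the `/` separator and the composition are assumptions, and the chart templates may format image references differently.

```shell
# Hypothetical illustration: build an image reference with an optional
# registry proxy prefix (assumed format: <proxy>/<repo>:<tag>).
image_ref() {
  proxy="$1"
  repo="$2"
  tag="$3"
  if [ -n "$proxy" ]; then
    printf '%s/%s:%s\n' "$proxy" "$repo" "$tag"
  else
    # With the default empty registryProxy, the image is pulled as-is.
    printf '%s:%s\n' "$repo" "$tag"
  fi
}

# Usage: image_ref docker.m.daocloud.io mysql 8.0
```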

+

+### Global Parameters

+

+| Key | Type | Default | Description |

+|-----|------|---------|-------------|

+

+### Kusion Server

+

+The Kusion Server Component is the main backend server that provides the core functionality and REST APIs.

+

+| Key | Type | Default | Description |

+|-----|------|---------|-------------|

+| server.args.authEnabled | bool | `false` | Whether to enable authentication |

+| server.args.authKeyType | string | `"RSA"` | Authentication key type |

+| server.args.authWhitelist | list | `[]` | Authentication whitelist |

+| server.args.autoMigrate | bool | `true` | Whether to enable automatic migration |

+| server.args.dbHost | string | `""` | Database host |

+| server.args.dbName | string | `""` | Database name |

+| server.args.dbPassword | string | `""` | Database password |

+| server.args.dbPort | int | `3306` | Database port |

+| server.args.dbUser | string | `""` | Database user |

+| server.args.defaultSourceRemote | string | `""` | Default source URL |

+| server.args.logFilePath | string | `"/logs/kusion.log"` | Path of the server log file |

+| server.args.maxAsyncBuffer | int | `100` | Maximum buffer size for concurrent async executions, including generate, preview, apply and destroy |

+| server.args.maxAsyncConcurrent | int | `1` | Maximum number of concurrent async executions including generate, preview, apply and destroy |

+| server.args.maxConcurrent | int | `10` | Maximum number of concurrent executions including preview, apply and destroy |

+| server.args.migrateFile | string | `""` | Migration file path |

+| server.env | list | `[]` | Additional environment variables for the server |

+| server.image.imagePullPolicy | string | `"IfNotPresent"` | Image pull policy |

+| server.image.repo | string | `"kusionstack/kusion"` | Repository for Kusion server image |

+| server.image.tag | string | `""` | Tag for Kusion server image. Defaults to the chart's appVersion if not specified |

+| server.name | string | `"kusion-server"` | Component name for kusion server |

+| server.port | int | `80` | Port for kusion server |

+| server.replicas | int | `1` | The number of kusion server pods to run |

+| server.resources | object | `{"limits":{"cpu":"500m","memory":"1Gi"},"requests":{"cpu":"250m","memory":"256Mi"}}` | Resource limits and requests for the kusion server pods |

+| server.serviceType | string | `"ClusterIP"` | Service type for the kusion server. Available values: `ClusterIP`, `NodePort`, `LoadBalancer`. |
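Object-typed parameters such as `server.resources` are easier to override from a values file than with `--set`. The sketch below uses illustrative numbers, not recommendations. Helm's `--reuse-values` keeps the existing release's values (including the kubeconfigs) and merges these overrides on top. The file name is arbitrary.

```shell
# Override the service type and the nested resources object of the server.
cat > kusion-server-values.yaml <<'EOF'
server:
  serviceType: NodePort
  resources:
    requests:
      cpu: 500m
      memory: 512Mi
    limits:
      cpu: "1"
      memory: 2Gi
EOF

# Apply on top of the existing release's values:
# helm upgrade kusion-release kusionstack/kusion --reuse-values -f kusion-server-values.yaml
```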

+

+### MySQL Database

+

+The MySQL database is used to store Kusion's persistent data.

+

+| Key | Type | Default | Description |

+|-----|------|---------|-------------|

+| mysql.database | string | `"kusion"` | MySQL database name |

+| mysql.enabled | bool | `true` | Whether to enable MySQL deployment |

+| mysql.image.imagePullPolicy | string | `"IfNotPresent"` | Image pull policy |

+| mysql.image.repo | string | `"mysql"` | Repository for MySQL image |

+| mysql.image.tag | string | `"8.0"` | Specific tag for MySQL image |

+| mysql.name | string | `"mysql"` | Component name for MySQL |

+| mysql.password | string | `""` | MySQL password |

+| mysql.persistence.accessModes | list | `["ReadWriteOnce"]` | Access modes for MySQL PVC |

+| mysql.persistence.size | string | `"10Gi"` | Size of MySQL persistent volume |

+| mysql.persistence.storageClass | string | `""` | Storage class for MySQL PVC |

+| mysql.port | int | `3306` | Port for MySQL |

+| mysql.replicas | int | `1` | The number of MySQL pods to run |

+| mysql.resources | object | `{"limits":{"cpu":"1000m","memory":"1Gi"},"requests":{"cpu":"250m","memory":"512Mi"}}` | Resource limits and requests for MySQL pods |

+| mysql.rootPassword | string | `""` | MySQL root password |

+| mysql.user | string | `"kusion"` | MySQL user |
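If you already run a MySQL instance, the two tables above suggest disabling the bundled database and pointing the server at your own. The sketch below assumes that the server connects using the `server.args.db*` values when `mysql.enabled` is `false`; verify this against the chart templates before relying on it. The host and credentials are placeholders, and committing real passwords to a file is best avoided.

```shell
# Hypothetical values file: use an external MySQL instead of the bundled one.
# Assumption: server.args.db* are used when mysql.enabled=false.
cat > kusion-external-db.yaml <<'EOF'
mysql:
  enabled: false
server:
  args:
    dbHost: mysql.example.internal
    dbPort: 3306
    dbName: kusion
    dbUser: kusion
    dbPassword: change-me
EOF

# helm install kusion-release kusionstack/kusion -f kusion-external-db.yaml \
#   --set kubeconfig.kubeConfigs.kubeconfig0="$KUBECONFIG_CONTENT1"
```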

+

+### KubeConfig

+

+The KubeConfig parameters store the KubeConfig files used by the Kusion Server.

+

+| Key | Type | Default | Description |

+|-----|------|---------|-------------|

+| kubeconfig.kubeConfigVolumeMountPath | string | `"/var/run/secrets/kubernetes.io/kubeconfigs/"` | Volume mount path for KubeConfig files |

+| kubeconfig.kubeConfigs | object | `{}` | KubeConfig contents map |

+

+**NOTE**: The KubeConfig contents map is a key-value pair, where the key is the key of the KubeConfig file and the value is the contents of the KubeConfig file.

+

+```yaml

+# Example structure:

+kubeconfig:

+  kubeConfigs:

+    kubeconfig0: |

+      Please fill in your KubeConfig contents here.

+    kubeconfig1: |

+      Please fill in your KubeConfig contents here.

+```
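A workspace later refers to a kubeconfig by its path inside the server Pod (see `KUBECONFIG_PATH` in the quickstart's `workspace.yaml`). The helper below sketches how such a path could be derived. It assumes that each key under `kubeconfig.kubeConfigs` is mounted as a file of the same name inside `kubeconfig.kubeConfigVolumeMountPath`, which is not confirmed here. The function name is hypothetical.

```shell
# Hypothetical helper: path of a kubeconfig entry inside the Kusion Server Pod,
# assuming each key becomes a file of the same name under the mount path.
kubeconfig_mount_path() {
  mount_dir="${2:-/var/run/secrets/kubernetes.io/kubeconfigs/}"
  # Strip a trailing slash so the join produces exactly one separator.
  printf '%s/%s\n' "${mount_dir%/}" "$1"
}

# Usage: kubeconfig_mount_path kubeconfig0
```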

diff --git a/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/1-deliver-quickstart.md b/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/1-deliver-quickstart.md

new file mode 100644

index 00000000..d2488116

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/1-deliver-quickstart.md

@@ -0,0 +1,195 @@

+---

+id: deliver-quickstart

+---

+

+# Run Your First App on Kubernetes with Kusion Server

+

+In this tutorial, we will walk through how to deploy a quickstart application on Kubernetes with Kusion. The demo application only contains the `Namespace`, `Deployment`, and `Service` resources necessary for a long-running service workload.

+

+## Prerequisites

+

+Before we start to play with this example, we need to have Kusion Server installed and an accessible Kubernetes cluster running. Here are some helpful documents:

+

+- Install [Kusion Server](../2-getting-started-with-kusion-cli/0-install-kusion.md).

+- Run a [Kubernetes](https://kubernetes.io) cluster. Some light and convenient options for Kubernetes local deployment include [k3s](https://docs.k3s.io/quick-start), [k3d](https://k3d.io/v5.4.4/#installation), and [MiniKube](https://minikube.sigs.k8s.io/docs/tutorials/multi_node).

+

+## Initialize Backend, Source, and Workspace

+

+We can start this tutorial with the initialization of `Backend`, `Source`, and `Workspace` on Kusion.

+

+First, create a `Backend` that uses the local file system as Kusion's storage 👇

+

+

+

+Second, set the sample repository `konfig` we provided as the `Source` of application configuration codes 👇

+

+

+

+Then, create a `Workspace` named `dev` which can correspond to the development environment of the application. After it is created, we can copy the following example configurations into the `workspace.yaml`. This configuration declares the `Kusion Modules` that can be used in the application config codes, and specifies the Kubernetes cluster associated with this `Workspace`.

+

+

+

+```yaml

+# This is a sample of a `workspace.yaml` configuration, in which three Kusion Modules (kam, service, and network) and

+# their specified versions are declared, along with the Kubernetes cluster bound to this workspace.

+# Usually, applications deployed to this workspace can only use the Kusion Modules declared in the `workspace.yaml`.

+modules:

+  kam:

+    path: git://github.com/KusionStack/kam

+    version: 0.2.2

+    configs:

+      default: {}

+  service:

+    path: oci://ghcr.io/kusionstack/service

+    version: 0.2.1

+    configs:

+      default: {}

+  network:

+    path: oci://ghcr.io/kusionstack/network

+    version: 0.3.0

+    configs:

+      default: {}

+context:

+  KUBECONFIG_PATH: /var/run/secrets/kubernetes.io/kubeconfigs/kubeconfig-0

+```

+

+

+

+We can check the available Kusion Modules declared in the workspace. The `kam`, `service`, and `network` modules declared in the example have been pre-registered when we installed Kusion.

+

+

+

+

+

+:::info

+More info about the concepts of `Backend`, `Source`, `Workspace`, and `Kusion Module` can be found [here](../../3-concepts/0-overview.md)

+:::

+

+## Initialize Project and Stack

+

+Next, we can create our first `Project` and `Stack` with the `Source` of `konfig` repo.

+

+

+

+When creating a `Project`, the `path` field should be filled with the path of the project relative to the root directory of the `Source` repo. After the creation, click the project name to initiate a `Stack`.

+

+

+

+Similarly, the `path` field of `Stack` should also be filled with the path of the stack relative to the root directory of the `Source` repo.

+

+:::info

+More info about the concepts of `Project` and `Stack` can be found [here](../../3-concepts/0-overview.md)

+:::

+

+### Review Configuration Files

+

+Now let's take a look at the configuration code of the `default` stack in the `quickstart` project, which can be found at https://github.com/KusionStack/konfig/tree/main/example/quickstart/default.

+

+

+

+The codes in the configuration file `main.k` are shown below:

+

+```python

+# The configuration codes in perspective of developers.

+import kam.v1.app_configuration as ac

+import service

+import service.container as c

+import network as n

+

+# `main.k` declares the customized configuration codes for default stack.

+#

+# Please replace the ${APPLICATION_NAME} with the name of your application, and complete the

+# 'AppConfiguration' instance with your own workload and accessories.

+quickstart: ac.AppConfiguration {

+    workload: service.Service {

+        containers: {

+            quickstart: c.Container {

+                image: "kusionstack/kusion-quickstart:latest"

+            }

+        }

+    }

+    accessories: {

+        "network": n.Network {

+            ports: [

+                n.Port {

+                    port: 8080

+                }

+            ]

+        }

+    }

+}

+

+```

+

+The configuration file `main.k`, usually written by the **App Developers**, declares the customized configuration codes for `default` stack, including an `AppConfiguration` instance with the name of `quickstart`. The `quickstart` application consists of a `Workload` with the type of `service.Service`, which runs a container named `quickstart` using the image of `kusionstack/kusion-quickstart:latest`.

+

+In addition, it declares an accessory of the type `network.Network` (a **Kusion Module**) that exposes port `8080` so that the long-running service can be accessed.

+

+The `AppConfiguration` model can hide the major complexity of Kubernetes resources such as `Namespace`, `Deployment`, and `Service` which will be created and managed by Kusion, providing the concepts that are **application-centric** and **infrastructure-agnostic** for a more developer-friendly experience.

+

+:::info

+More details about the `AppConfiguration` model and built-in Kusion Module can be found in [kam](https://github.com/KusionStack/kam) and [catalog](https://github.com/KusionStack/catalog).

+:::

+

+The declaration of the dependency packages can be found in `default/kcl.mod`:

+

+```toml

+[dependencies]

+kam = { git = "git://github.com/KusionStack/kam", tag = "0.2.2" }

+network = { oci = "oci://ghcr.io/kusionstack/network", tag = "0.3.0" }

+service = { oci = "oci://ghcr.io/kusionstack/service", tag = "0.2.1" }

+```

+

+:::info

+More details about the application model and module dependency declaration can be found in [Kusion Module guide for app dev](../../3-concepts/3-module/3-app-dev-guide.md).

+:::

+

+:::tip

+The specific module versions used in the above demonstration are only applicable for Kusion **v0.14.0** and later.

+:::

+

+## Application Delivery

+

+After the initialization, we can start to run the application delivery.

+

+### Preview Changes

+

+We can first preview the changes to the application resources that are going to be deployed to the `dev` workspace.

+

+

+

+We can click the `Detail` button to view the `Preview` results.

+

+

+

+:::info

+During the first preview, the models and modules that the application depends on will be downloaded, so it may take some time (usually within one minute). You can take a break and have a cup of coffee.

+:::

+

+### Apply Resources

+

+Then we can create a `Run` operation of the type of `Apply` to conveniently deploy the previewed application resources to the Kubernetes cluster corresponding to the `dev` workspace.

+

+

+

+After successfully completing the `Apply`, we can check the application resource graph, which will display the topology of the application resources.

+

+

+

+Next, we can expose the service of the application we just applied by port-forwarding the Kubernetes Pod, and verify it in the browser.
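The port-forwarding step can also be done from the command line with a small shell helper like the one sketched below. It assumes that `kubectl` points at the cluster bound to the `dev` workspace and that the resources live in the `quickstart` namespace (by default Kusion uses the project name as the namespace). It also assumes that the quickstart Pod is the only Pod there and listens on port 8080. The function name is hypothetical.

```shell
# Hypothetical helper: forward local port 8080 to the quickstart Pod.
# Assumes the current kubectl context targets the dev workspace's cluster and
# that the namespace (default: quickstart, the project name) holds one Pod.
forward_quickstart() {
  ns="${1:-quickstart}"
  # Take the name of the first Pod in the namespace.
  pod=$(kubectl get pods -n "$ns" -o jsonpath='{.items[0].metadata.name}') || return 1
  # 8080 matches the port declared in the network accessory of main.k.
  kubectl port-forward -n "$ns" "pod/$pod" 8080:8080
}

# Usage: run `forward_quickstart`, then open http://localhost:8080 in a browser.
```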

+

+

+

+

+

+Oops, it seems that the page indicates we are missing a database. But no worries, we will cover how to add a database configuration for our application in the [next post](2-deliver-quickstart-with-db.md).

+

+:::info

+By default, Kusion creates the Kubernetes resources of the application in a namespace with the same name as the project. If you want to customize the namespace, please refer to [Project Namespace Extension](../../3-concepts/1-project/2-configuration.md#kubernetesnamespace) and [Stack Namespace Extension](../../3-concepts/2-stack/2-configuration.md#kubernetesnamespace).

+:::

+

+## Delete Application

+

+We can delete the quickstart demo workload and related accessory resources with the `Destroy` run:

+

+

diff --git a/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/2-deliver-quickstart-with-db.md b/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/2-deliver-quickstart-with-db.md

new file mode 100644

index 00000000..d5632ab9

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/2-deliver-quickstart-with-db.md

@@ -0,0 +1,159 @@

+---

+id: deliver-quickstart-with-db

+---

+

+# Run Your Own App with MySQL on Kubernetes with Kusion Server

+

+In this tutorial, we will learn how to create and manage our own application with MySQL database on Kubernetes with Kusion. The locally deployed MySQL database is declared as an accessory in the config codes and will be automatically created and managed by Kusion.

+

+## Prerequisites

+

+Before we start to play with this example, we need to have Kusion Server installed and an accessible Kubernetes cluster running. We also need a GitHub account to create our own config code repository as a `Source` in Kusion.

+

+- Install [Kusion Server](../2-getting-started-with-kusion-cli/0-install-kusion.md).

+- Run a [Kubernetes](https://kubernetes.io) cluster. Some light and convenient options for Kubernetes local deployment include [k3s](https://docs.k3s.io/quick-start), [k3d](https://k3d.io/v5.4.4/#installation), and [MiniKube](https://minikube.sigs.k8s.io/docs/tutorials/multi_node).

+

+

+:::info

+Please walk through [this documentation](1-deliver-quickstart.md) before proceeding with the upcoming instructions.

+:::

+

+## Initialize Source

+

+First, we need to create our own application configuration code repository as `Source` in Kusion.

+

+

+

+We can simply copy the `quickstart` example in [KusionStack/konfig](https://github.com/KusionStack/konfig/tree/main/example/quickstart) into our new repo.

+

+

+

+Then, we need to create a new `Source` with the created repository url.

+

+

+

+## Register Module And Update Workspace

+

+Next, we can register the `mysql` module provided by KusionStack community in Kusion.

+

+

+

+After the registration, we should add the `mysql` module to the `dev` workspace and re-generate the `kcl.mod`.

+

+

+

+

+

+Copy the generated `kcl.mod` content and save it, since we will use it to update the config code later.

+

+## Create Project and Stack

+

+Next, we can create a new `Project` with our own config code repo.

+

+

+

+And similarly, create a new `Stack`.

+

+

+

+## Add MySQL Accessory

+

+As you can see, the demo application page in [this doc](1-deliver-quickstart.md#apply-resources) indicates that the MySQL database is not ready yet. Hence, we will now add a MySQL database as an `accessory` for the workload.

+

+We should first update the module dependencies in the `default/kcl.mod` with the ones we previously stored, so that we can use the `MySQL` module in the configuration codes.

+

+```toml

+[dependencies]

+kam = { git = "git://github.com/KusionStack/kam", tag = "0.2.2" }

+mysql = { oci = "oci://ghcr.io/kusionstack/mysql", tag = "0.2.0" }

+network = { oci = "oci://ghcr.io/kusionstack/network", tag = "0.3.0" }

+service = { oci = "oci://ghcr.io/kusionstack/service", tag = "0.2.1" }

+```

+

+We can update the `default/main.k` with the following configuration codes:

+

+```python

+import kam.v1.app_configuration as ac

+import service

+import service.container as c

+import network as n

+import mysql

+

+# main.k declares the customized configuration codes for default-with-db stack.

+quickstart: ac.AppConfiguration {

+    workload: service.Service {

+        containers: {

+            quickstart: c.Container {

+                image: "kusionstack/kusion-quickstart:latest"

+                env: {

+                    "DB_HOST": "$(KUSION_DB_HOST_QUICKSTARTWITHDBDEFAULTWITHDBQU)"

+                    "DB_USERNAME": "$(KUSION_DB_USERNAME_QUICKSTARTWITHDBDEFAULTWITHDBQU)"

+                    "DB_PASSWORD": "$(KUSION_DB_PASSWORD_QUICKSTARTWITHDBDEFAULTWITHDBQU)"

+                }

+            }

+        }

+    }

+    accessories: {

+        "network": n.Network {

+            ports: [

+                n.Port {

+                    port: 8080

+                }

+            ]

+        }

+        "mysql": mysql.MySQL {

+            type: "local"

+            version: "8.0"

+        }

+    }

+}

+```

+

+The configuration code above declares a local `mysql.MySQL` accessory with engine version `8.0` for the application workload. Kusion generates the Kubernetes resources needed to deploy and use the local MySQL database. Application containers can obtain the `host`, `username` and `password` of the database as described in [MySQL Credentials And Connectivity](../../6-reference/2-modules/1-developer-schemas/database/mysql.md#credentials-and-connectivity).

+

+:::info

+For more information about the naming convention of Kusion built-in MySQL module, you can refer to [Module Naming Convention](../../6-reference/2-modules/3-naming-conventions.md).

+:::

+

+After that, we need to update the remote repository with the modified config code files.

+

+

+

+## Application Delivery

+

+Now we can start to run the application delivery!

+

+### Preview Changes

+

+We can first preview the changes to the application resources that are going to be deployed to the `dev` workspace.

+

+

+

+We can click the `Detail` button to view the `Preview` results, and we can find that compared to the results in [this doc](1-deliver-quickstart.md#preview-changes), the `mysql` accessory has brought us database related Kubernetes resources.

+

+

+

+### Apply Resources

+

+Then we can create a `Run` operation of the type of `Apply` to deploy the previewed application resources to the Kubernetes cluster corresponding to the `dev` workspace.

+

+

+

+After successfully completing the `Apply`, we can check the application resource graph, which will display the topology of the application resources related to mysql database, including Kubernetes `Deployment`, `Service`, `Secret`, and `PVC`.

+

+

+

+Next, we can expose the service of the application we just applied by port-forwarding the Kubernetes Pod, and verify it in the browser.

+

+

+

+We can find that the application has successfully connected to the MySQL database, and the connection information is also printed on the page. Now please feel free to enjoy the demo application!

+

+

+

+## Delete Application

+

+We can delete the quickstart demo workload and related accessory resources with the `Destroy` run:

+

+

+

diff --git a/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/_category_.json b/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/_category_.json

new file mode 100644

index 00000000..4a3ae631

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/2-getting-started/3-getting-started-with-kusion-server/_category_.json

@@ -0,0 +1,3 @@

+{

+ "label": "Start with Kusion Server"

+}

\ No newline at end of file

diff --git a/docs_versioned_docs/version-v0.14/2-getting-started/_category_.json b/docs_versioned_docs/version-v0.14/2-getting-started/_category_.json

new file mode 100644

index 00000000..41f4c00e

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/2-getting-started/_category_.json

@@ -0,0 +1,3 @@

+{

+ "label": "Getting Started"

+}

diff --git a/docs_versioned_docs/version-v0.14/3-concepts/0-overview.md b/docs_versioned_docs/version-v0.14/3-concepts/0-overview.md

new file mode 100644

index 00000000..b0db99dc

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/3-concepts/0-overview.md

@@ -0,0 +1,29 @@

+---

+id: overview

+---

+

+# Overview

+

+## Platform Orchestrator

+

+

+

+A Platform Orchestrator is a system designed to capture and "orchestrate" intents from configurations written by different roles, and to connect them with different infrastructures. It serves as the glue for different intents throughout the software development lifecycle, from deployment to monitoring and operations, ensuring that users' intentions can seamlessly integrate and flow across different environments and infrastructures.

+

+## Kusion Workflow

+

+In this section, we will provide an overview of the core concepts of Kusion from the perspective of the Kusion workflow.

+

+

+

+The workflow of Kusion is illustrated in the diagram above, which consists of three steps.

+

+The first step is **Write**, where the platform engineers build the [Kusion Modules](./3-module/1-overview.md) and initialize a [Workspace](./4-workspace/1-overview.md), and the application developers declare their operational intent in [AppConfiguration](./5-appconfigurations.md) under a specific [Project](./1-project/1-overview.md) and [Stack](./2-stack/1-overview.md) path.

+

+The second step is the **Generate** process, which results in the creation of the **SSoT** (Single Source of Truth), also known as the [Spec](./6-specs.md) of the current operation. Kusion stores and version controls the Spec as part of a [Release](./8-release.md).

+

+The third step is **Apply**, which makes the `Spec` effective. Kusion parses the operational intent based on the `Spec` produced in the previous step. Before applying the `Spec`, Kusion executes the `Preview` command (you can also run this command manually). `Preview` uses a three-way diff algorithm to preview changes and prompts users to confirm that all changes meet their expectations. The `Apply` command then actualizes the operational intent onto various infrastructure platforms, currently supporting **Kubernetes**, **Terraform**, and **On-Prem** infrastructures. A [Release](./8-release.md) file is created in the [Storage Backend](./7-backend/1-overview.md) to record each operation. The `Destroy` command deletes the resources recorded in the `Release` file of a project in a specific workspace.

+

+A more detailed demonstration of the Kusion engine can be seen below.

+

+

\ No newline at end of file

diff --git a/docs_versioned_docs/version-v0.14/3-concepts/1-project/1-overview.md b/docs_versioned_docs/version-v0.14/3-concepts/1-project/1-overview.md

new file mode 100644

index 00000000..152d0ed1

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/3-concepts/1-project/1-overview.md

@@ -0,0 +1,14 @@

+---

+sidebar_label: Overview

+id: overview

+---

+

+# Overview

+

+Projects, [Stacks](../2-stack/1-overview.md), [Modules](../3-module/1-overview.md) and [Workspaces](../4-workspace/1-overview.md) are the most pivotal concepts when using Kusion.

+

+A project in Kusion is defined as a folder that contains a `project.yaml` file, and it is generally recommended to store it in a Git repository. There are generally no constraints on where this folder may reside. In some cases it can be stored in a large monorepo that manages configurations for multiple applications. It can also be stored in a separate repo or alongside the application code. A project can logically consist of one or more applications.

+

+The purpose of the project is to bundle related application configurations. Specifically, it contains configurations for the internal components that make up the application and orchestrates these configurations to suit different roles, such as developers and SREs, thereby covering the entire lifecycle of application deployment.

+

+From the perspective of the application development lifecycle, the configurations delineated by the project are decoupled from the application code. The project takes an immutable application image as input, allowing users to operate and maintain the application within an independent configuration codebase.

\ No newline at end of file

diff --git a/docs_versioned_docs/version-v0.14/3-concepts/1-project/2-configuration.md b/docs_versioned_docs/version-v0.14/3-concepts/1-project/2-configuration.md

new file mode 100644

index 00000000..b5823df8

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/3-concepts/1-project/2-configuration.md

@@ -0,0 +1,38 @@

+---

+id: configuration

+sidebar_label: Project file reference

+---

+

+# Kusion project file reference

+

+Every Kusion project has a project file, `project.yaml`, which specifies metadata about your project, such as the project name and project description. The project file must begin with lowercase `project` and have an extension of either `.yaml` or `.yml`.

+

+## Attributes

+

+| Name | Required | Description | Options |

+|:------------- |:--------------- |:------------- |:------------- |

+| `name` | required | Name of the project containing alphanumeric characters, hyphens, underscores. | None |

+| `description` | optional | A brief description of the project. | None |

+| `extensions` | optional | List of extensions on the project. | [See below](#extensions) |
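The `name` rule in the table above can be pre-checked locally before creating a project. The sketch below only encodes the stated rule: alphanumeric characters, hyphens and underscores. Kusion's own validation may add further constraints such as length limits. The function name is hypothetical, and the character set is spelled out to avoid locale-dependent ranges.

```shell
# Hypothetical pre-check for a project name: non-empty and made only of
# alphanumeric characters, hyphens and underscores.
is_valid_project_name() {
  case "$1" in
    "" | *[!ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789_-]*)
      return 1 ;;
    *)
      return 0 ;;
  esac
}

# Usage: is_valid_project_name quickstart && echo "ok"
```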

+

+### Extensions

+

+Extensions allow you to customize how resources are generated as part of a release.

+

+#### kubernetesNamespace

+

+The Kubernetes namespace extension allows you to customize the namespace in which your application's Kubernetes resources are generated.

+

+| Key | Required | Description | Example |

+|:------|:--------:|:-------------|:---------|

+| kind | y | The kind of extension being used. Must be 'kubernetesNamespace' | `kubernetesNamespace` |

+| namespace | y | The namespace in which all application-scoped Kubernetes objects are generated. | `default` |

+

+```yaml

+# Example `project.yaml` file with customized namespace of `test`.

+name: example

+extensions:

+  - kind: kubernetesNamespace

+    kubernetesNamespace:

+      namespace: test

+```
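Kubernetes requires namespace names to be valid RFC 1123 labels. A valid label has at most 63 characters, contains only lowercase letters, digits and `-`, and starts and ends with an alphanumeric character. The sketch below checks a candidate value for the `namespace` field against that rule before it reaches the cluster. The function name is hypothetical, and Kusion itself may or may not perform this check.

```shell
# Hypothetical pre-check: is the value a valid Kubernetes namespace name
# (RFC 1123 label)?
is_valid_k8s_namespace() {
  ns="$1"
  # Length must be between 1 and 63 characters.
  [ "${#ns}" -ge 1 ] && [ "${#ns}" -le 63 ] || return 1
  case "$ns" in
    -* | *-) return 1 ;;                                     # must start/end alphanumeric
    *[!abcdefghijklmnopqrstuvwxyz0123456789-]*) return 1 ;;  # lowercase, digits, '-'
  esac
  return 0
}

# Usage: is_valid_k8s_namespace test && echo "ok"
```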

diff --git a/docs_versioned_docs/version-v0.14/3-concepts/1-project/_category_.json b/docs_versioned_docs/version-v0.14/3-concepts/1-project/_category_.json

new file mode 100644

index 00000000..b62ac774

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/3-concepts/1-project/_category_.json

@@ -0,0 +1,3 @@

+{

+ "label": "Projects"

+}

diff --git a/docs_versioned_docs/version-v0.14/3-concepts/10-resources.md b/docs_versioned_docs/version-v0.14/3-concepts/10-resources.md

new file mode 100644

index 00000000..582f17bf

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/3-concepts/10-resources.md

@@ -0,0 +1,43 @@

+# Resources

+

+Kusion uses [Spec](./6-specs.md) to manage resource specifications. A Kusion resource is a logical concept that encapsulates physical resources on different resource planes, including but not limited to Kubernetes, AWS, AliCloud, Azure and Google Cloud.

+

+Kusion Resources are produced by [Kusion Module Generators](./3-module/1-overview.md) and usually map to a physical resource that can be applied via a Kusion Runtime.

+

+## Runtimes

+

+A runtime is the consumer of the resources in a Spec: it turns them into actual physical resources in infrastructure providers.

+

+Currently there are two in-tree runtimes defined in the Kusion source code but we are planning to make them out-of-tree in the future:

+

+- Kubernetes, used to manage resources inside a Kubernetes cluster. This can be any native Kubernetes resource or CRD (if you wish to manage infrastructure via [Crossplane](https://www.crossplane.io/) or [KubeVela](https://kubevela.io/), for example, this is completely doable by creating a Kusion Module with a generator that produces the resource YAML for the relevant CRDs).

+- Terraform, used to manage infrastructure resources outside a Kubernetes cluster. Kusion uses Terraform as an executor that can manage basically any infrastructure for which a [Terraform provider](https://developer.hashicorp.com/terraform/language/providers) exists for the infrastructure API. This is generally used to manage the lifecycle of cloud infrastructure resources, whether public or on-prem.

+

+## Resource Planes

+

+Resource Plane is a property of a Kusion resource. It represents the actual plane on which the resource exists. Current resource planes include `kubernetes`, `aws`, `azure`, `google`, `alicloud`, `ant` and `custom`.

+

+## Resource ID, Resource URN and Cloud Resource ID

+

+Kusion Resource ID is a unique identifier for a Kusion Resource within a Spec. It must be unique across a Spec. The resource ID is generated by module generators, so there are no definite rules for producing a Kusion Resource ID. The best practice is to use the `KubernetesResourceID()` and `TerraformResourceID()` methods from [kusion-module-framework](https://github.com/KusionStack/kusion-module-framework) to manage Kusion Resource IDs. You can use the [official module generators](https://github.com/KusionStack/catalog/blob/main/modules/mysql/src/alicloud_rds.go#L164) as a reference.

+

+:::tip

+Kusion does validate resource IDs.

+For Kubernetes resources, the resource ID must include the API version, kind, namespace (if applicable) and name.

+For Terraform resources, the resource ID must include the provider namespace, provider name, resource type and resource name.

+It's always recommended to use the `KubernetesResourceID()` and `TerraformResourceID()` methods from [kusion-module-framework](https://github.com/KusionStack/kusion-module-framework) to produce Resource IDs.

+:::
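+
+To make the required components concrete, the Go sketch below assembles resource IDs from them. It assumes colon-separated layouts (`apiVersion:kind[:namespace]:name` for Kubernetes and `providerNamespace:providerName:resourceType:resourceName` for Terraform). The helpers `kubernetesID` and `terraformID` are hypothetical and are not the kusion-module-framework API; in a real generator, prefer `KubernetesResourceID()` and `TerraformResourceID()`.
+
+```go
+package main
+
+import (
+	"fmt"
+	"strings"
+)
+
+// kubernetesID is a hypothetical helper joining the parts a Kubernetes
+// resource ID must include (assumed colon-separated layout). Cluster-scoped
+// resources have no namespace, so that segment is omitted when empty.
+func kubernetesID(apiVersion, kind, namespace, name string) string {
+	parts := []string{apiVersion, kind}
+	if namespace != "" {
+		parts = append(parts, namespace)
+	}
+	parts = append(parts, name)
+	return strings.Join(parts, ":")
+}
+
+// terraformID is a hypothetical helper joining the parts a Terraform
+// resource ID must include (assumed colon-separated layout).
+func terraformID(providerNamespace, providerName, resourceType, resourceName string) string {
+	return strings.Join([]string{providerNamespace, providerName, resourceType, resourceName}, ":")
+}
+
+func main() {
+	fmt.Println(kubernetesID("apps/v1", "Deployment", "quickstart", "web")) // apps/v1:Deployment:quickstart:web
+	fmt.Println(kubernetesID("v1", "Namespace", "", "quickstart"))          // v1:Namespace:quickstart
+	fmt.Println(terraformID("aliyun", "alicloud", "alicloud_db_instance", "mysql"))
+}
+```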

+

+A Kusion Resource URN uniquely identifies a Kusion Resource across a Kusion server instance. It has the form `${project-name}:${stack-name}:${workspace-name}:${kusion-resource-id}`, which makes it globally unique.
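+
+The Go sketch below composes a URN in the documented form. The function name `resourceURN` and the sample project, stack, workspace and resource ID values are hypothetical. Because a resource ID can itself contain colons, the sketch recovers the ID by splitting into at most four fields rather than on every colon.
+
+```go
+package main
+
+import (
+	"fmt"
+	"strings"
+)
+
+// resourceURN builds a URN of the form
+// ${project-name}:${stack-name}:${workspace-name}:${kusion-resource-id}.
+// Only the layout comes from the docs; the helper itself is hypothetical.
+func resourceURN(project, stack, workspace, resourceID string) string {
+	return strings.Join([]string{project, stack, workspace, resourceID}, ":")
+}
+
+func main() {
+	urn := resourceURN("quickstart", "default", "dev", "v1:Namespace:quickstart")
+	fmt.Println(urn) // quickstart:default:dev:v1:Namespace:quickstart
+
+	// The first three fields are project, stack and workspace; everything
+	// after the third colon is the resource ID, colons included.
+	parts := strings.SplitN(urn, ":", 4)
+	fmt.Println(parts[3]) // v1:Namespace:quickstart
+}
+```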

+

+The Cloud Resource ID maps to an actual resource on the cloud. For AWS and Alicloud, this is usually the resource's `ARN`; for Azure and Google Cloud, it is the Resource ID. It can be empty in some cases; for example, a Kubernetes resource has no cloud resource ID.

+

+## Resource Graphs

+

+A Resource Graph visualizes the relationships between all resources in a given stack. In the Kusion developer portal, you can inspect the resource graph by clicking the `Resource Graph` tab on the stack page.

+

+

+

+You can inspect a resource's details by hovering over its node in the graph.

+

+

\ No newline at end of file

diff --git a/docs_versioned_docs/version-v0.14/3-concepts/11-cli-configuration.md b/docs_versioned_docs/version-v0.14/3-concepts/11-cli-configuration.md

new file mode 100644

index 00000000..fc681634

--- /dev/null

+++ b/docs_versioned_docs/version-v0.14/3-concepts/11-cli-configuration.md

@@ -0,0 +1,118 @@

+---

+id: configuration

+sidebar_label: CLI Configurations

+---

+

+# CLI Configurations

+

+:::tip

+If you are using Kusion server, this article does NOT apply.

+:::

+

+The Kusion CLI can be configured with global settings that are separate from the AppConfiguration written by application developers and the workspace configurations written by platform engineers.

+

+These configurations are relevant only to Kusion itself and can be managed with the `kusion config` command. Configuration items are predefined and use a hierarchical key format with a period (`.`) as the separator, such as `backends.current`. Currently, only backend configurations are supported.

+

+The configuration is stored in the file `${KUSION_HOME}/config.yaml`. Storing sensitive data, such as passwords, access key IDs and secrets, in the configuration file is not recommended; using the corresponding environment variables is safer.
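+
+To illustrate the hierarchical key format, the Go sketch below resolves a dotted key such as `backends.current` against a nested map. It is not Kusion's implementation: the `lookup` helper is hypothetical, and the map literal stands in for a decoded `config.yaml` whose exact layout is assumed here.
+
+```go
+package main
+
+import (
+	"fmt"
+	"strings"
+)
+
+// lookup walks a nested map one segment at a time, splitting the key on ".".
+// It reports false if a segment is missing or an intermediate value is not a map.
+func lookup(cfg map[string]any, key string) (any, bool) {
+	var cur any = cfg
+	for _, seg := range strings.Split(key, ".") {
+		m, ok := cur.(map[string]any)
+		if !ok {
+			return nil, false
+		}
+		cur, ok = m[seg]
+		if !ok {
+			return nil, false
+		}
+	}
+	return cur, true
+}
+
+func main() {
+	// Stand-in for a decoded ${KUSION_HOME}/config.yaml (assumed layout).
+	cfg := map[string]any{
+		"backends": map[string]any{
+			"current": "dev",
+			"dev": map[string]any{
+				"type":    "local",
+				"configs": map[string]any{"path": "/tmp/kusion"},
+			},
+		},
+	}
+	v, ok := lookup(cfg, "backends.current")
+	fmt.Println(v, ok) // dev true
+	v, ok = lookup(cfg, "backends.dev.type")
+	fmt.Println(v, ok) // local true
+}
+```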

+

+## Configuration Management

+

+Kusion provides the `kusion config` command and its sub-commands `get`, `list`, `set` and `unset` to manage the configuration. Their usage is shown below.

+

+### Get a Specified Configuration Item

+

+Use `kusion config get` to get the value of a specified configuration item. Only registered items can be retrieved. For example:

+

+```shell

+# get a configuration item

+kusion config get backends.current

+```

+

+### List the Configuration Items

+

+Use `kusion config list` to list all Kusion configurations in YAML format. For example:

+

+```shell

+# list all the Kusion configurations

+kusion config list

+```

+

+### Set a Specified Configuration Item

+

+Use `kusion config set` to set the value of a specified configuration item. Each item has a fixed value type. Kusion supports the `string`, `int`, `bool`, `array` and `map` value types, which should be passed on the command line in the following formats:

+

+- `string`: the original format, such as `local-dev`, `oss-pre`;

+- `int`: convert to string, such as `3306`, `80`;

+- `bool`: convert to string, only support `true` and `false`;

+- `array`: convert to string with JSON marshal, such as `'["s3","oss"]'`. Enclose the string in single quotes to preserve the format; otherwise, unexpected errors may occur;

+- `map`: convert to string with JSON marshal, such as `'{"path":"/etc"}'`.
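+
+The conversion rules above can be sketched in Go: scalars are formatted as plain strings, while arrays and maps are JSON-marshalled. The `toCLIValue` helper is hypothetical and only illustrates how to produce the value argument for `kusion config set`; when pasting an array or map result into a shell, wrap it in single quotes.
+
+```go
+package main
+
+import (
+	"encoding/json"
+	"fmt"
+	"strconv"
+)
+
+// toCLIValue converts a typed value into the string form expected on the CLI.
+func toCLIValue(v any) (string, error) {
+	switch val := v.(type) {
+	case string:
+		return val, nil // string: passed as-is
+	case int:
+		return strconv.Itoa(val), nil // int: decimal string, e.g. "3306"
+	case bool:
+		return strconv.FormatBool(val), nil // bool: "true" or "false"
+	case []string, map[string]any:
+		b, err := json.Marshal(val) // array/map: JSON-marshalled
+		if err != nil {
+			return "", err
+		}
+		return string(b), nil
+	default:
+		return "", fmt.Errorf("unsupported value type %T", v)
+	}
+}
+
+func main() {
+	for _, v := range []any{"local-dev", 3306, true, []string{"s3", "oss"}, map[string]any{"path": "/etc"}} {
+		s, err := toCLIValue(v)
+		if err != nil {
+			panic(err)
+		}
+		fmt.Println(s)
+	}
+}
+```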

+

+Besides the type, some configuration items have additional requirements. An item may depend on another item, meaning it must be set after that item. The values themselves may also be restricted, for example, the valid keys of a map value or the allowed range of an int value. For details on each configuration item, refer to the rest of this article.

+

+Examples of setting configuration items are shown below.

+

+```shell

+# set a configuration item of type string

+kusion config set backends.pre.type s3

+

+# set a configuration item of type map

+kusion config set backends.prod '{"configs":{"bucket":"kusion"},"type":"s3"}'

+```

+

+### Unset a Specified Configuration Item

+

+Use `kusion config unset` to unset a specified configuration item. Note that some items have dependencies and must be unset in the correct order. For example:

+

+```shell

+# unset a specified configuration item

+kusion config unset backends.pre

+```

+

+## Backend Configurations

+

+The backend configurations define where Workspace, Spec and State files are stored. You can configure multiple backends and choose which one is current.

+

+### Available Configuration Items

+

+- **backends.current**: type `string`, the name of the backend currently in use. It can be set to the name of any configured backend. If not set, the default local backend is used.

+- **backends.${name}**: type `map`, the complete configuration of a backend, containing its type and config items, in the format below. It can be unset when the backend is not the current one.

+```yaml

+{

 "type": "${backend_type}", # type string, required, one of local, oss, s3.

 "configs": ${backend_configs} # type map, optional for type local, required for the others; the specific keys depend on the type, refer to the description of backends.${name}.configs.

+}

+```

+- **backends.${name}.type**: type `string`, the backend type; `local`, `s3` and `oss` are supported. It can be unset when the backend is not the current one and the corresponding `backends.${name}.configs` is empty.

+- **backends.${name}.configs**: type `map`, the backend config items, whose format depends on the backend type as shown below. It must be set after `backends.${name}.type`.

+```yaml

+# type local

+{

+ "path": "${local_path}" # type string, optional, the directory to store the files. If not set, use the default path ${KUSION_HOME}.

+}

+

+# type oss

+{

 "endpoint": "${oss_endpoint}", # type string, required, the oss endpoint.

 "accessKeyID": "${oss_access_key_id}", # type string, optional, the oss access key id, which can also be obtained from the environment variable OSS_ACCESS_KEY_ID.

 "accessKeySecret": "${oss_access_key_secret}", # type string, optional, the oss access key secret, which can also be obtained from the environment variable OSS_ACCESS_KEY_SECRET.

+ "bucket": "${oss_bucket}", # type string, required, the oss bucket.

+ "prefix": "${oss_prefix}" # type string, optional, the prefix to store the files.

+}

+

+# type s3

+{

 "region": "${s3_region}", # type string, optional, the aws region, which can also be obtained from the environment variables AWS_REGION and AWS_DEFAULT_REGION.

 "endpoint": "${s3_endpoint}", # type string, optional, the aws endpoint.

 "accessKeyID": "${s3_access_key_id}", # type string, optional, the aws access key id, which can also be obtained from the environment variable AWS_ACCESS_KEY_ID.

 "accessKeySecret": "${s3_access_key_secret}", # type string, optional, the aws access key secret, which can also be obtained from the environment variable AWS_SECRET_ACCESS_KEY.

+ "bucket": "${s3_bucket}", # type string, required, the s3 bucket.

+ "prefix": "${s3_prefix}" # type string, optional, the prefix to store the files.