confusion about self.log() in test_step() #10801


It looks like there's confusion between "steps", "epochs", and "batches". My hypothesis is that your code is running `test_step()` 10000 times, but that 10000 iterations is only 1500 epochs, and you have the flag

Can you run some diagnostics? First, set the number of test batches to 1000 or even 100 instead of 10000. This will make things much faster while we iterate. Then:

Once you've run some tests and have a little more information, hopefully we can find the solution to your problem.
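To make the three terms concrete, here is a minimal plain-Python sketch of a generic evaluation loop. It is not Lightning's internal loop; the function name `count_steps` and the dataset size and batch size are hypothetical, and it assumes the last partial batch is kept rather than dropped.

```python
import math


def count_steps(num_samples: int, batch_size: int, num_epochs: int = 1) -> dict:
    """Count how many steps a generic loop takes (illustrative only)."""
    # One batch is one chunk of samples; a partial final batch still counts.
    batches_per_epoch = math.ceil(num_samples / batch_size)
    steps = 0
    for _ in range(num_epochs):              # one epoch = one full pass over the data
        for _ in range(batches_per_epoch):   # one step = one batch processed
            steps += 1
    return {
        "batches_per_epoch": batches_per_epoch,
        "epochs": num_epochs,
        "steps": steps,
    }


# Hypothetical numbers: 3200 samples in batches of 32, one pass over the data.
counts = count_steps(num_samples=3200, batch_size=32, num_epochs=1)
print(counts)
```

In this sketch a single pass over the data takes as many steps as there are batches, so shrinking the number of test batches shrinks the step count by the same factor.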


Hi, I wrote following code in

test_step()method:According to the official document, Pytorch Lightning will cache every

test_lossandtest_accvalue produced intest_step()and then do a mean reduction at the end of the test epoch.Now, I set my

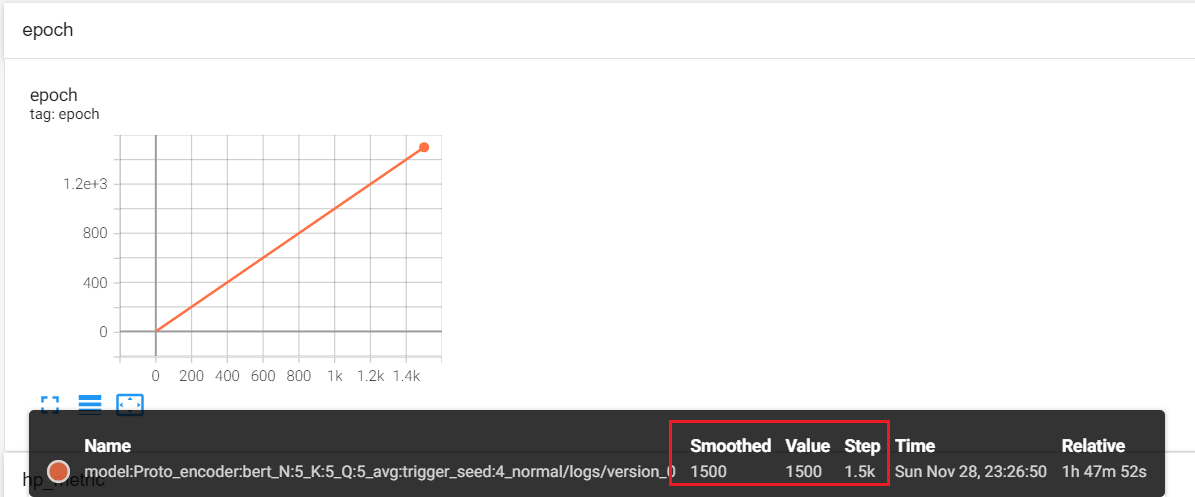

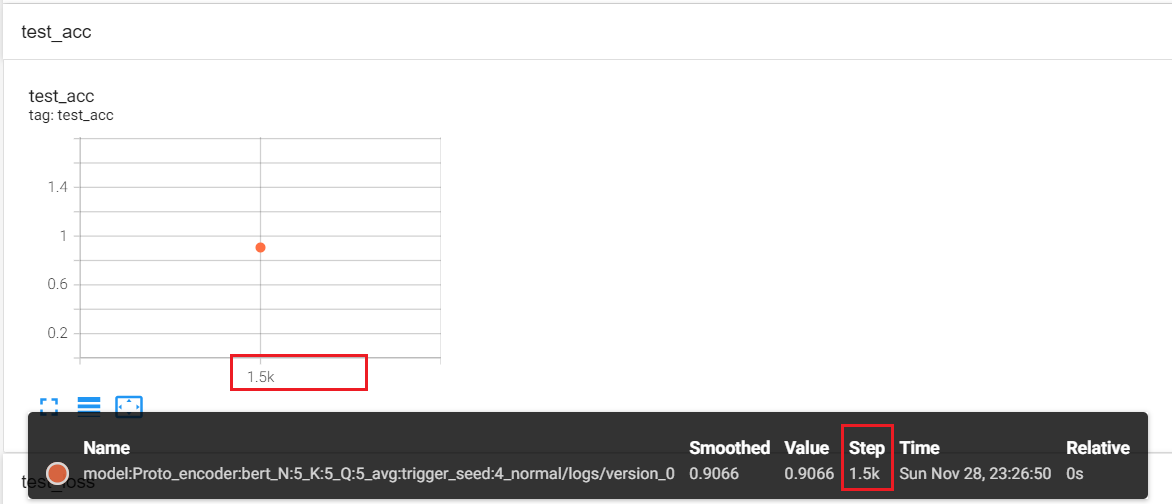

number_of_test_batch=10000, this means the code will runtest_step()10000 times, and there will be atest_acc_lstwhose len is 10000, andtest_loss_lstwhose len is 10000, and there will besum(test_acc_lst)/10000operation, is that right?finally, when I check the tensorboard, I got following two pics:

I am very confused why there is a

1500(1.5K) step appeared in the picture, from the point of mine, it should be 10000 !!!Can anyone solve this problem, thank you 😥
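The accumulate-then-average behaviour described in the question can be sketched in plain Python, without Lightning. This only illustrates the arithmetic and is not the library's implementation: the helper `epoch_mean` and the per-batch values are made up, and it assumes every batch has the same size so that a plain unweighted mean is appropriate.

```python
def epoch_mean(values):
    """Plain arithmetic mean over all per-step values (illustrative helper)."""
    return sum(values) / len(values)


number_of_test_batch = 10000
test_acc_lst = []
test_loss_lst = []

for batch_idx in range(number_of_test_batch):
    # Stand-in metrics; a real test_step() would compute these from the batch.
    test_acc_lst.append(0.0 if batch_idx % 4 == 0 else 1.0)
    test_loss_lst.append(0.5)

# One value per test step is cached, then reduced once at the end of the epoch.
test_acc = epoch_mean(test_acc_lst)
test_loss = epoch_mean(test_loss_lst)
print(len(test_acc_lst), test_acc, test_loss)
```

Under these assumptions the reduction yields a single scalar per metric for the whole test epoch, regardless of how many steps were run.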
