Shuffled batch datapoints with multi-GPU #13161

Unanswered

fmorenopino asked this question in DDP / multi-GPU / multi-node

Replies: 0 comments


Hello,

I'm training a time-dependent model where each datapoint in a batch represents one day of data. With a single GPU, my model predicts each datapoint in the batch individually without problems, but when I use 2 GPUs (with DDP, because I want a specific batch size), the datapoints appear shuffled within the batch.
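One plausible cause, assuming the DDP setup wraps the dataset in a distributed sampler such as PyTorch's `torch.utils.data.DistributedSampler` (this thread does not confirm it): each process receives only every `num_replicas`-th index, and if shuffling is enabled the indices are permuted before they are split. The pure-Python function below is a simplified model of that assignment with `drop_last=False`; it uses `random.Random` instead of `torch.randperm`, so its shuffled order will not match PyTorch's, and `distributed_indices` is a hypothetical name, not a library API.

```python
import math
import random


def distributed_indices(dataset_len, num_replicas, rank, shuffle, seed=0, epoch=0):
    """Simplified model of how a DistributedSampler-style sampler assigns
    dataset indices to one rank (drop_last=False behaviour)."""
    indices = list(range(dataset_len))
    if shuffle:
        # All ranks use the same seed + epoch, so they agree on one global
        # permutation before splitting it.
        rng = random.Random(seed + epoch)
        rng.shuffle(indices)
    # Pad by repeating indices so every rank gets the same number of samples.
    num_samples = math.ceil(dataset_len / num_replicas)
    total_size = num_samples * num_replicas
    while len(indices) < total_size:
        indices += indices[: total_size - len(indices)]
    # Interleaved split: rank r takes positions r, r + W, r + 2W, ...
    return indices[rank:total_size:num_replicas]


if __name__ == "__main__":
    for rank in range(2):
        print(rank, distributed_indices(10, 2, rank, shuffle=False))
    # 0 [0, 2, 4, 6, 8]
    # 1 [1, 3, 5, 7, 9]
    for rank in range(2):
        print(rank, distributed_indices(10, 2, rank, shuffle=True, seed=42))
```

Under this model, even with `shuffle=False` each GPU's batches contain only every other day, so one rank's predictions cannot form a contiguous sequence on their own.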

I need them in order because, once my model has made predictions for the whole batch, I want to plot them (with batch_size=100, I have 100 predictions that I want to plot in the correct order).
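One approach that does not depend on how the sampler splits the data is to keep each datapoint's position next to its prediction and sort afterwards. This sketch assumes, hypothetically, that the dataset returns the day index alongside each sample and that the per-rank `(day_index, prediction)` lists have already been collected on one process (for example with `torch.distributed.all_gather_object`). Only the merge step is shown, and `merge_in_order` is a hypothetical helper.

```python
from typing import Dict, List, Sequence, Tuple

Pair = Tuple[int, float]  # (day_index, prediction)


def merge_in_order(per_rank_outputs: Sequence[Sequence[Pair]]) -> List[Pair]:
    """Combine (day_index, prediction) pairs collected from every rank and
    return them sorted by day_index, ready to plot chronologically."""
    merged: Dict[int, float] = {}
    for outputs in per_rank_outputs:
        for day_index, prediction in outputs:
            # A padded sampler may give the same day to two ranks; keep the first.
            merged.setdefault(day_index, prediction)
    return [(day, merged[day]) for day in sorted(merged)]


if __name__ == "__main__":
    rank0 = [(0, 0.1), (2, 0.3), (4, 0.5)]
    rank1 = [(1, 0.2), (3, 0.4), (0, 0.1)]  # day 0 repeated as padding
    print(merge_in_order([rank0, rank1]))
    # [(0, 0.1), (1, 0.2), (2, 0.3), (3, 0.4), (4, 0.5)]
```

This only makes sense because, as described above, each datapoint is predicted independently, so reordering after the fact does not change any prediction.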

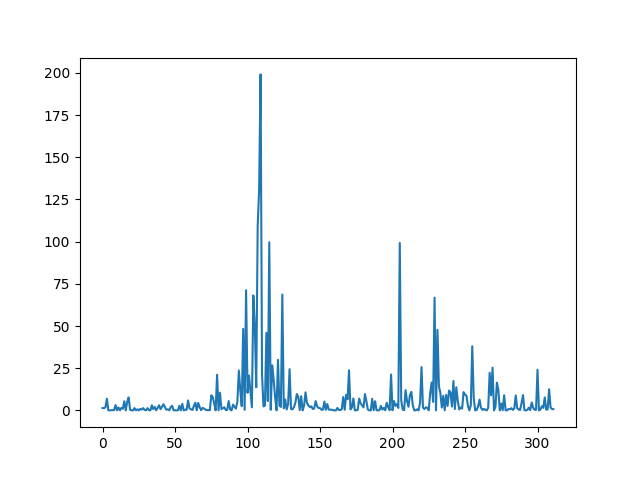

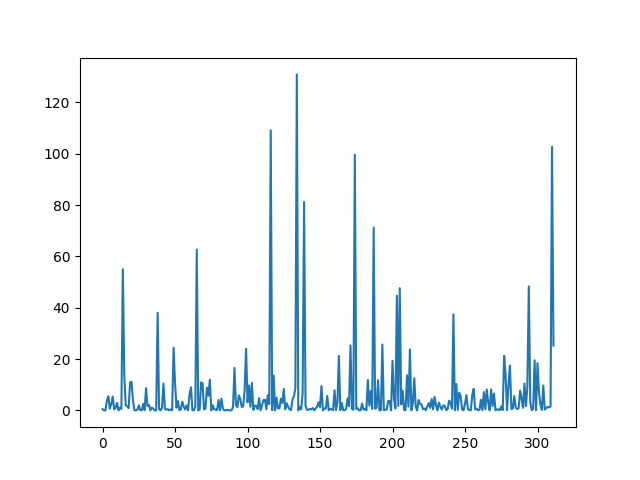

I've attached two examples to illustrate what I mean: the first image shows the true labels with batch_size=312, and the second shows the true labels on one of the 2 GPUs when training with multiple GPUs, with the datapoints of the batch completely shuffled.

Could someone tell me the proper way to do this?

Thanks
