TensorBoard logger step is inconsistent with trainer.global_step under manual optimization #14504

Unanswered

jim4399266 asked this question in Lightning Trainer API: Trainer, LightningModule, LightningDataModule

Replies: 0 comments


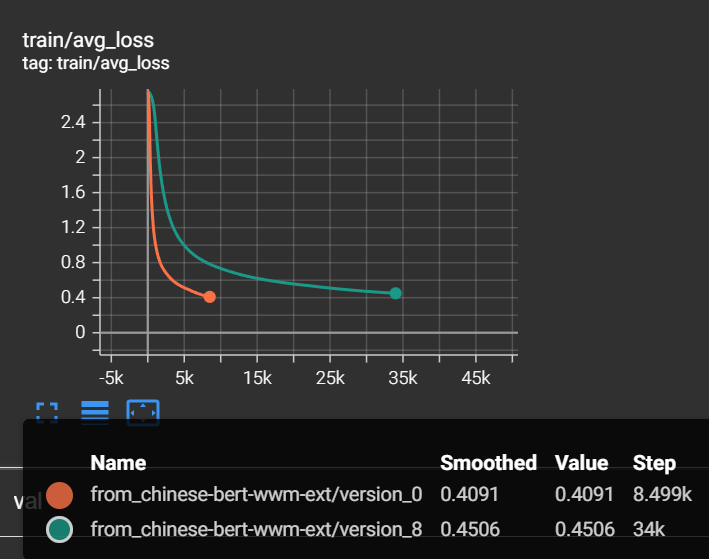

I recently wanted to combine adversarial training (FGM and AWP) with the Lightning framework, so I followed a tutorial and switched my module to manual optimization. When I checked the logs in TensorBoard, I found that the logged steps are 4 times as many as without manual optimization (my accumulate_grad_batches = 4).

As the picture shows, the orange line is the regular method (without manual optimization) and the green line is manual optimization. I checked trainer.global_step and it was the same in both cases. How can I fix this problem? Thanks a lot!
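The factor of 4 matches accumulate_grad_batches, which suggests (an assumption, not something confirmed in this thread) that the x-axis advances once per batch under manual optimization but only once per 4 batches otherwise. A minimal plain-Python sketch of that arithmetic follows; `count_steps` is a hypothetical helper and does not use or reproduce Lightning's internals:

```python
def count_steps(num_batches: int, accumulate: int, step_every_batch: bool) -> int:
    """Count simulated optimizer steps over `num_batches` batches.

    step_every_batch=False: step once every `accumulate` batches
                            (what gradient accumulation is meant to do).
    step_every_batch=True:  step on every batch (a manual loop that
                            never skips the optimizer step).
    """
    steps = 0
    for batch_idx in range(num_batches):
        if step_every_batch or (batch_idx + 1) % accumulate == 0:
            steps += 1
    return steps


# Assumed values for illustration: 100 batches, accumulation factor 4.
accumulated_steps = count_steps(100, 4, step_every_batch=False)
per_batch_steps = count_steps(100, 4, step_every_batch=True)

print(accumulated_steps, per_batch_steps)
print(per_batch_steps // accumulated_steps)  # ratio between the two step counts
```

If this is indeed the cause, stepping the optimizer only every accumulate_grad_batches batches inside training_step might bring the two curves back onto the same axis, though that is untested here.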

Here are my TensorBoardLogger settings:

Here are my Trainer settings:

Here is my training_step function:
