Training is slow on GPU #14917

Unanswered

mtomic123

asked this question in

Lightning Trainer API: Trainer, LightningModule, LightningDataModule

Replies: 0 comments


I built a Temporal Fusion Transformer model with PyTorch Forecasting, following the guide here:

https://pytorch-forecasting.readthedocs.io/en/stable/tutorials/stallion.html

I used my own data, which is a time series with 62k samples. I set training to run on the GPU by specifying `accelerator="gpu"` in `pl.Trainer`. The issue is that training is quite slow considering this dataset is not that large. I first ran the training on my laptop GPU (GTX 1650 Ti), then on an A100 40GB, and I got only a 2x speedup. The A100 is many times faster than my laptop GPU, so the speedup should be much bigger than 2x. I have the NVIDIA drivers, cuDNN, and the other required components installed (the A100 is on Google Cloud, which comes with all of that preinstalled). GPU utilisation is low (10-15%), but I can see that the data has been loaded into GPU memory.
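One way to reason about the "only 2x" result is Amdahl's law: if just part of each training step runs on the GPU, a faster GPU shortens only that part. The sketch below is plain arithmetic, not a measurement; the 10x GPU speedup and the GPU-bound fractions are hypothetical numbers chosen for illustration.

```python
def overall_speedup(gpu_fraction: float, gpu_speedup: float) -> float:
    """Amdahl's law: speedup of the whole step when only `gpu_fraction`
    of the original step time becomes `gpu_speedup` times faster."""
    if not 0.0 <= gpu_fraction <= 1.0:
        raise ValueError("gpu_fraction must be between 0 and 1")
    if gpu_speedup <= 0:
        raise ValueError("gpu_speedup must be positive")
    return 1.0 / ((1.0 - gpu_fraction) + gpu_fraction / gpu_speedup)


# Hypothetical: the faster GPU runs the GPU-bound part 10x faster.
for fraction in (0.5, 0.6, 0.9):
    speedup = overall_speedup(fraction, gpu_speedup=10.0)
    print(f"GPU-bound fraction {fraction:.1f} -> overall speedup {speedup:.2f}x")
```

Under these assumptions, an overall speedup near 2x would be consistent with roughly half of the original step time being spent outside the GPU (for example in data loading or Python overhead).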

Things I tried:

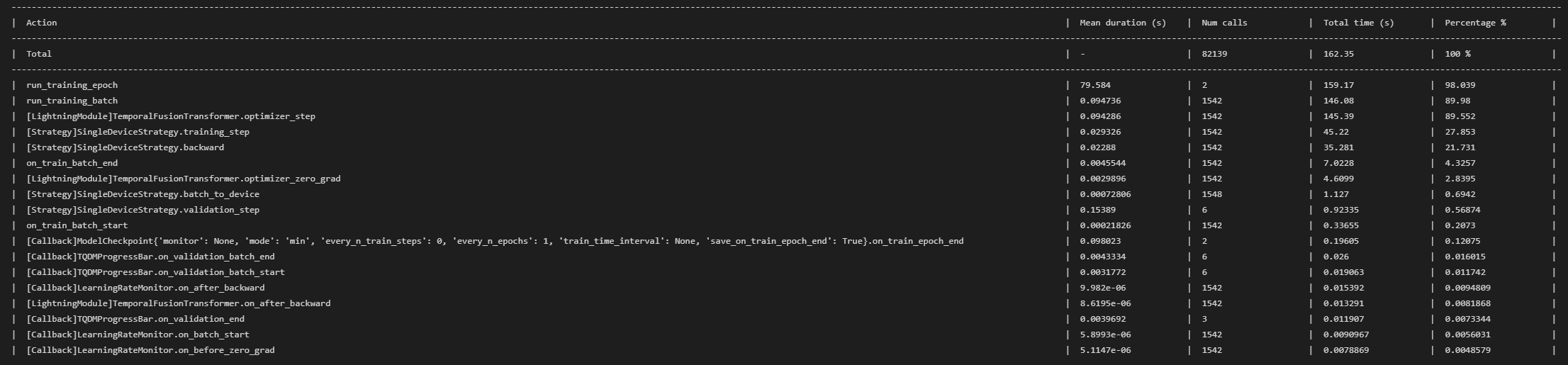

- Setting `num_workers` to 8 in the dataloader

Is there some other bottleneck in my model? Below are the results from the profiler and snippets of my model configuration.

Dataloaders
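As a hedged sketch of the dataloader settings that are commonly checked when GPU utilisation is low (this is not the configuration used in this post), the helper below builds keyword arguments for a PyTorch `DataLoader`. The helper name `dataloader_kwargs` and the batch size in the usage comment are hypothetical; `num_workers`, `pin_memory`, and `persistent_workers` are standard `DataLoader` arguments.

```python
import os


def dataloader_kwargs(num_workers=None):
    """Build DataLoader keyword arguments (hypothetical helper)."""
    if num_workers is None:
        # Cap at 8 workers, or fewer if the machine has fewer CPU cores.
        num_workers = min(8, os.cpu_count() or 1)
    kwargs = {
        "num_workers": num_workers,
        # Pinned host memory can speed up host-to-GPU copies.
        "pin_memory": True,
    }
    if num_workers > 0:
        # DataLoader rejects persistent_workers=True when num_workers == 0,
        # so only set it when worker processes are actually used.
        kwargs["persistent_workers"] = True
    return kwargs


print(dataloader_kwargs(8))

# Usage sketch, assuming `training` is a pytorch_forecasting TimeSeriesDataSet
# and that a batch size of 128 fits in memory (both are assumptions):
# train_dataloader = training.to_dataloader(
#     train=True, batch_size=128, **dataloader_kwargs(8)
# )
```

Whether more workers or a larger batch size helps depends on where the time actually goes, so it is worth changing one setting at a time and re-checking GPU utilisation.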

Model Configuration

Profiler (Only the most intensive processes)
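Alongside a profiler, a rough manual check can show whether the training loop is mostly waiting for batches or mostly computing. The sketch below uses only the standard library and works with any iterable of batches; `split_step_time`, `slow_batches`, and `fast_step` are hypothetical names, and the sleeps only simulate a slow loader. With a real GPU model, the step function would need to synchronise the device (for example with `torch.cuda.synchronize()`) before returning, because CUDA kernels run asynchronously and the timings would otherwise be misleading.

```python
import time


def split_step_time(batches, train_step, max_steps=50):
    """Return (seconds waiting for batches, seconds spent in train_step)."""
    wait = 0.0
    compute = 0.0
    iterator = iter(batches)
    for _ in range(max_steps):
        t0 = time.perf_counter()
        try:
            batch = next(iterator)  # time spent here is data-loading wait
        except StopIteration:
            break
        t1 = time.perf_counter()
        train_step(batch)  # time spent here is the training step itself
        t2 = time.perf_counter()
        wait += t1 - t0
        compute += t2 - t1
    return wait, compute


def slow_batches(n, delay=0.01):
    """Simulated loader: each batch takes `delay` seconds to produce."""
    for i in range(n):
        time.sleep(delay)
        yield i


def fast_step(batch):
    """Simulated training step that does almost no work."""
    return batch * 2


wait, compute = split_step_time(slow_batches(5), fast_step)
print(f"waiting on data: {wait:.3f}s, computing: {compute:.3f}s")
```

If the waiting time dominates in a real run, the input pipeline rather than the GPU is the more likely bottleneck.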
