Training with dual (optimizer+scheduler) only one learning rate is updated over training steps #14970

Asked by celsofranssa in Lightning Trainer API: Trainer, LightningModule, LightningDataModule. Answered by celsofranssa.


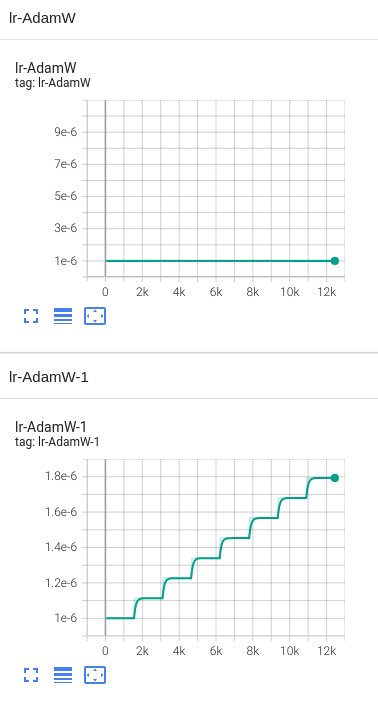

I am using two optimizers and two schedulers in my PL model:

```python
def configure_optimizers(self):
    # optimizers
    opt1 = torch.optim.AdamW(
        self.encoder_1.parameters(),
        lr=self.hparams.lr_1,
        weight_decay=self.hparams.weight_decay
    )
    opt2 = torch.optim.AdamW(
        self.encoder_2.parameters(),
        lr=self.hparams.lr_2,
        weight_decay=self.hparams.weight_decay
    )

    # schedulers
    step_size_up = round(0.07 * self.trainer.estimated_stepping_batches)
    schdlr_1 = torch.optim.lr_scheduler.CyclicLR(
        opt1, mode='triangular2',
        base_lr=self.hparams.base_lr,
        max_lr=self.hparams.max_lr, step_size_up=step_size_up
    )
    schdlr_2 = torch.optim.lr_scheduler.CyclicLR(
        opt2, mode='triangular2',
        base_lr=self.hparams.base_lr,
        max_lr=self.hparams.max_lr, step_size_up=step_size_up
    )

    return (
        {"optimizer": opt1, "lr_scheduler": schdlr_1, "frequency": self.hparams.frequency_1},
        {"optimizer": opt2, "lr_scheduler": schdlr_2, "frequency": self.hparams.frequency_2},
    )
```

However, inspecting the loss over the training steps revealed that only one learning rate was updated.


Answered by celsofranssa on Oct 3, 2022


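It happened because I forgot to specify the scheduler's interval. Lightning steps a scheduler once per epoch by default (`"interval": "epoch"`), whereas `CyclicLR` is meant to be stepped after every batch. The correct config is something like:

```python
return (
    {"optimizer": opt1, "lr_scheduler": {"scheduler": schdlr_1, "interval": "step", "name": "LRS-1"}, "frequency": 1},
    {"optimizer": opt2, "lr_scheduler": {"scheduler": schdlr_2, "interval": "step", "name": "LRS-2"}, "frequency": 1},
)
```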
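To see why the interval matters for a cyclic schedule, here is a rough, pure-Python sketch. It is not Lightning's or PyTorch's actual code: `triangular2_lr` uses the textbook triangular2 formula and assumes the downward half of the cycle is as long as `step_size_up`, and `simulate` is a hypothetical, simplified model of stepping the scheduler per batch versus per epoch. All numeric values are made up for illustration.

```python
import math


def triangular2_lr(step, base_lr, max_lr, step_size_up):
    """Learning rate of a symmetric 'triangular2' cycle after `step` scheduler steps."""
    cycle = math.floor(1 + step / (2 * step_size_up))
    x = abs(step / step_size_up - 2 * cycle + 1)
    amplitude = (max_lr - base_lr) * max(0.0, 1.0 - x)
    # triangular2 halves the amplitude on every new cycle
    return base_lr + amplitude / (2 ** (cycle - 1))


def simulate(total_batches, batches_per_epoch, interval, base_lr, max_lr, step_size_up):
    """Return the learning rate used for every training batch."""
    lrs = []
    scheduler_steps = 0
    for batch_idx in range(total_batches):
        lrs.append(triangular2_lr(scheduler_steps, base_lr, max_lr, step_size_up))
        end_of_epoch = (batch_idx + 1) % batches_per_epoch == 0
        # "step": advance after every batch; "epoch": only when an epoch ends
        if interval == "step" or end_of_epoch:
            scheduler_steps += 1
    return lrs


if __name__ == "__main__":
    total = 1000  # hypothetical stand-in for estimated_stepping_batches
    kwargs = dict(base_lr=1e-5, max_lr=1e-3, step_size_up=round(0.07 * total))
    per_step = simulate(total, 100, "step", **kwargs)
    per_epoch = simulate(total, 100, "epoch", **kwargs)
    print("distinct LRs with interval='step': ", len(set(per_step)))
    print("distinct LRs with interval='epoch':", len(set(per_epoch)))
    print("peak LR with interval='epoch':     ", max(per_epoch))
```

With per-epoch stepping the schedule advances only a handful of times over the whole run, so the learning rate never gets near `max_lr` and looks almost flat on a per-step plot.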


Answer selected by celsofranssa


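One way to avoid forgetting the interval for either scheduler is to build each entry with a small helper. This is only a sketch: `optimizer_config` is a hypothetical name and not part of Lightning, and the dictionary keys simply mirror the ones used in the answer above.

```python
from typing import Any, Dict


def optimizer_config(optimizer: Any, scheduler: Any, name: str, frequency: int = 1) -> Dict[str, Any]:
    """Build one entry of the tuple returned by configure_optimizers."""
    return {
        "optimizer": optimizer,
        "lr_scheduler": {
            "scheduler": scheduler,
            "interval": "step",  # step the scheduler per batch, not per epoch
            "name": name,        # label under which the learning rate is logged
        },
        "frequency": frequency,
    }


# Inside configure_optimizers, the return statement would then read:
#     return (
#         optimizer_config(opt1, schdlr_1, "LRS-1"),
#         optimizer_config(opt2, schdlr_2, "LRS-2"),
#     )
```

Because each call takes the optimizer and its scheduler side by side, it is also harder to pair an optimizer with the wrong scheduler by accident.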