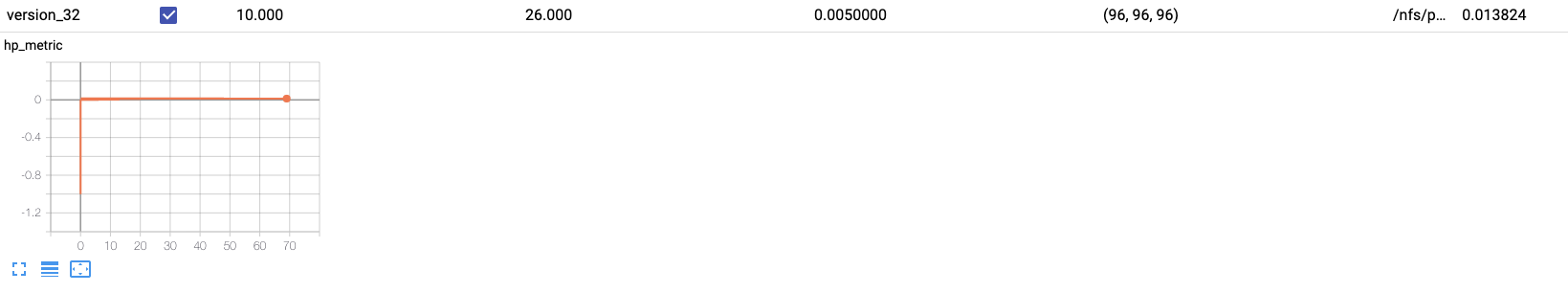

How to remove hp_metric initial -1 point and x=0 points? #5890


Replies: 5 comments 2 replies


That's a great question! Why do you want to see the metric under the hparams tab?


As @bartmch, I use …


You can set …


Yes, but then the …


I already use your first way, but setting `default_hp_metric` to `False` removes `hp_metric` from the "hparams" tab (the tab isn't there at all, even if I have set some hyperparameters). Adding the final `log_hyperparams` creates the hparams tab, but the graph of `hp_metric` gets its final value at iteration 0 instead of the final iteration, and this step will also be skipped if the job is killed.

Here is what I've tried so far:

- `default_hp_metric=True`: hparams tab visible in TensorBoard with `hp_metric` updated during training, but `hp_metric` has a wrong initial value that makes the corresponding graph unsuitable with log scale and smoothing activated.
- `default_hp_metric=False`: no hparams tab in TensorBoard, hp_…

I will try to calculate the metric before training starts to populate it appropriately, or to delay …
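For readers following along, the second configuration described above could look roughly like the sketch below. It assumes PyTorch Lightning's `TensorBoardLogger` and its `log_hyperparams(params, metrics=...)` method; the helper names `make_logger` and `log_final_hp_metric` are hypothetical and only there for illustration.

```python
def make_logger(save_dir: str):
    """Build a TensorBoardLogger without the placeholder hp_metric point."""
    # Imported lazily so the helper below can be reused without
    # pytorch_lightning being importable at module load time.
    from pytorch_lightning.loggers import TensorBoardLogger

    # default_hp_metric=False stops the logger from writing the initial
    # hp_metric = -1 placeholder when hyperparameters are logged.
    return TensorBoardLogger(save_dir=save_dir, default_hp_metric=False)


def log_final_hp_metric(logger, hparams: dict, final_value: float) -> None:
    """Write the hyperparameters together with a real hp_metric value."""
    # Hypothetical helper: call it once the real metric is known,
    # for example after trainer.fit() has returned.
    logger.log_hyperparams(hparams, metrics={"hp_metric": final_value})
```

As noted in the comment above, this is not a full fix: the value written by the final `log_hyperparams` call shows up at iteration 0 on the `hp_metric` graph, and the call never runs if the job is killed before training finishes.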