AMP: Native AMP doesn't work in my Gigabyte Aorus Master RTX 3070 environment #7098

roma-glushko

started this conversation in General

Replies: 2 comments 1 reply


Hey @Borda @williamFalcon 👋 Have you seen anything similar to this issue?


I discussed this issue with the PyTorch community to rule out a misconfigured environment on my side (https://discuss.pytorch.org/t/rtx-3070-amp-doesnt-seem-to-be-working/118835/7). We came to the conclusion that AMP generally works in this environment, but the project I originally mentioned may have a combination of model and dataset that triggers an issue on the Lightning side.
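Since the PyTorch discussion suggests AMP itself works here, one quick sanity check is whether autocast is actually active where the forward pass runs. The sketch below is plain PyTorch, not Lightning's internals; the toy `nn.Linear` layer and the CPU/bfloat16 fallback (used only when no GPU is present) are assumptions for illustration. Under CUDA autocast, a linear layer should produce float16 output; with autocast disabled, it produces float32.

```python
import torch
import torch.nn as nn


def autocast_output_dtype(enabled: bool) -> torch.dtype:
    """Run one (hypothetical, toy) Linear layer under autocast and report its output dtype."""
    if torch.cuda.is_available():
        # Native CUDA AMP casts eligible ops such as linear to float16.
        device, amp_dtype = "cuda", torch.float16
    else:
        # Assumed fallback for machines without a GPU: CPU autocast uses bfloat16.
        device, amp_dtype = "cpu", torch.bfloat16
    layer = nn.Linear(8, 8).to(device)
    x = torch.randn(2, 8, device=device)
    with torch.autocast(device_type=device, dtype=amp_dtype, enabled=enabled):
        out = layer(x)
    return out.dtype


print("autocast off:", autocast_output_dtype(False))
print("autocast on: ", autocast_output_dtype(True))
```

Logging the dtype of an intermediate activation in the same way inside the project's own forward pass, while training with `precision=16`, would show whether autocast actually reaches that model.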


I'm trying to use AMP in my new Gigabyte Aorus Master RTX 3070 environment.

I switched the `precision` setting from 32 to 16 and back to compare global GPU memory consumption. Surprisingly, both runs show the same memory consumption (with the same `batch_size`).

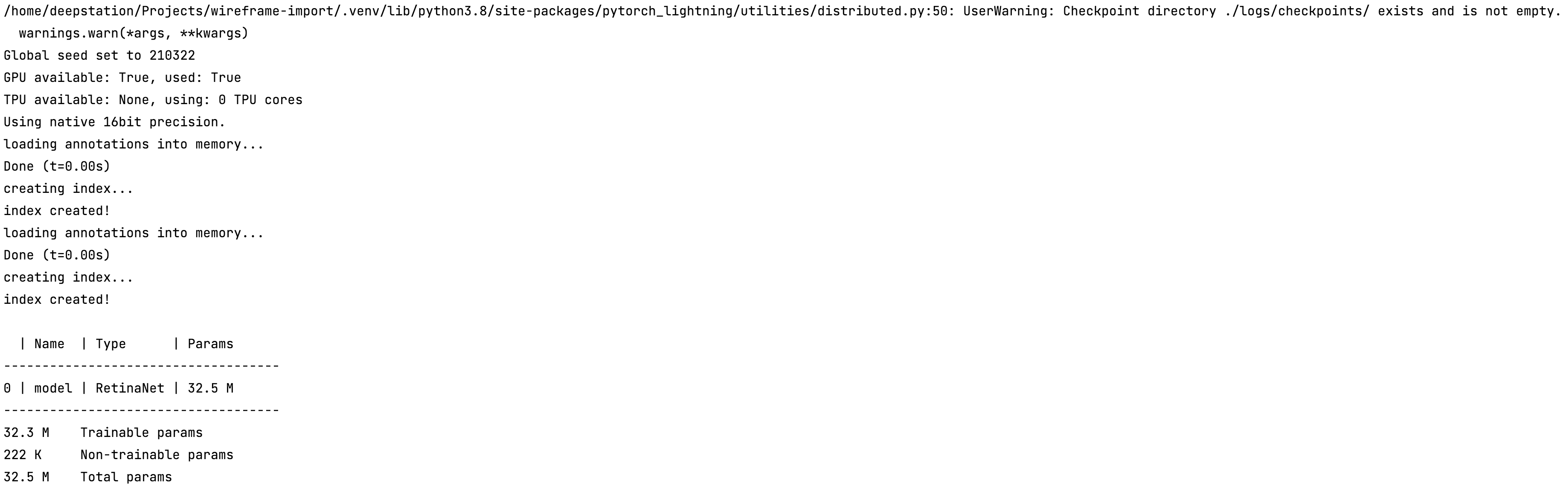

During training initialization, PyTorch Lightning does seem to recognise that AMP should be used for training:

Despite that, memory consumption is the same:

I expect AMP to reduce my memory consumption.
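To compare the two settings more directly, it can help to measure PyTorch's own peak allocation rather than the total shown by tools such as `nvidia-smi`, which includes memory reserved by PyTorch's caching allocator and can hide differences. The sketch below assumes plain PyTorch native AMP outside Lightning, with a hypothetical toy model and random batch; it returns `None` when no CUDA device is available. Keep in mind that AMP keeps fp32 weights and gradients, so savings come mainly from activations, and a model whose memory is dominated by parameters and optimizer state may show little difference.

```python
import torch
import torch.nn as nn


def peak_train_memory_mb(use_amp: bool, batch_size: int = 64, steps: int = 3):
    """Peak CUDA memory (MiB) allocated during a few training steps, or None without a GPU."""
    if not torch.cuda.is_available():
        return None
    device = torch.device("cuda")
    # Hypothetical toy network; substitute the real model to get meaningful numbers.
    model = nn.Sequential(nn.Linear(4096, 4096), nn.ReLU(), nn.Linear(4096, 10)).to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    # GradScaler and autocast become no-ops when use_amp is False (plain fp32 training).
    scaler = torch.cuda.amp.GradScaler(enabled=use_amp)
    x = torch.randn(batch_size, 4096, device=device)
    y = torch.randint(0, 10, (batch_size,), device=device)

    torch.cuda.empty_cache()
    torch.cuda.reset_peak_memory_stats(device)
    for _ in range(steps):
        optimizer.zero_grad(set_to_none=True)
        with torch.autocast(device_type="cuda", enabled=use_amp):
            loss = nn.functional.cross_entropy(model(x), y)
        scaler.scale(loss).backward()
        scaler.step(optimizer)
        scaler.update()
    return torch.cuda.max_memory_allocated(device) / 2**20


for amp in (False, True):
    mb = peak_train_memory_mb(use_amp=amp)
    print(f"use_amp={amp}: " + ("no CUDA device" if mb is None else f"{mb:.1f} MiB peak"))
```

Increasing `batch_size` makes activations a larger share of memory, so any AMP saving should become easier to see.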

My dependencies:

Any clues why it doesn't work for me?
