MLFlow logger step vs epoch #7463


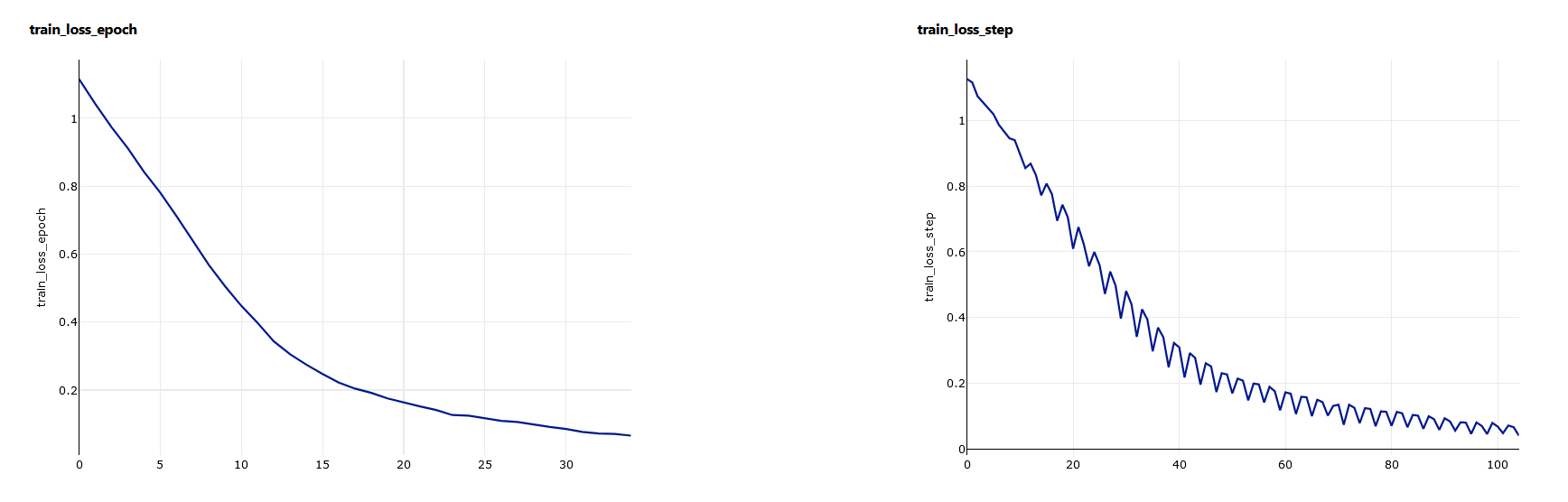

I am using MLFlowLogger to log my experiment to Azure ML. Everything works fine, but I noticed that when I ask the logger to store a metric every step (instead of every epoch), the logger does not increase the step number. Instead, it keeps overwriting the current step within each epoch. For example, if I have 5 minibatches per epoch and 10 epochs, the logger continuously overwrites steps 1-5 instead of logging steps 1-50. I also noticed that MLflow/Azure ML log metrics by step, regardless of whether that step is an epoch or not; perhaps this is causing some issues. Would you be able to help? An example here (7 steps only for
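For reference, the numbering the question expects (steps 1 to 50 rather than 1 to 5 repeated) is a global step that keeps counting across epochs. The pure-Python sketch below only illustrates that numbering. `global_steps` is a hypothetical helper, not part of Lightning or MLflow; Lightning keeps its own counter (`trainer.global_step`), and this is not the library's implementation.

```python
from typing import Iterator, Tuple


def global_steps(num_epochs: int, batches_per_epoch: int) -> Iterator[Tuple[int, int, int]]:
    """Hypothetical helper: yield (epoch, batch_idx, step), where step keeps
    increasing across epochs instead of restarting at every epoch."""
    for epoch in range(num_epochs):
        for batch_idx in range(batches_per_epoch):
            # 1-indexed global step, matching "steps 1-50" in the question.
            yield epoch, batch_idx, epoch * batches_per_epoch + batch_idx + 1


# 10 epochs x 5 minibatches, as in the question.
steps = [step for _, _, step in global_steps(10, 5)]
print(steps[0], steps[-1])  # 1 50
```

A step-indexed backend such as MLflow needs this kind of monotonically increasing value; reusing the per-epoch `batch_idx` as the step would give the "overwrite 1-5" pattern described above.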


Replies: 1 comment 1 reply


I got it. By default, the logger logs every 50 steps (https://pytorch-lightning.readthedocs.io/en/1.3.0/extensions/logging.html#control-logging-frequency). My dataset happened to be very small, so each epoch contained only a few training steps. If I increase the logging frequency with `trainer = Trainer(log_every_n_steps=1)`, I do get a log for every step of the training process.
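To see why the default interval hides most steps on a tiny dataset, the sketch below counts which global steps would be logged for a given `log_every_n_steps`. It assumes a simplified rule where logging fires when `(global_step + 1)` is a multiple of the interval. The exact condition differs between Lightning versions, and `logged_steps` is a hypothetical helper, not a Lightning API.

```python
from typing import List


def logged_steps(num_epochs: int, batches_per_epoch: int, log_every_n_steps: int) -> List[int]:
    """Hypothetical helper: return the 0-indexed global steps at which a
    metric would be written, assuming logging fires when
    (global_step + 1) is a multiple of log_every_n_steps."""
    total_steps = num_epochs * batches_per_epoch
    return [step for step in range(total_steps) if (step + 1) % log_every_n_steps == 0]


# 10 epochs x 5 minibatches = 50 training steps in total.
default = logged_steps(10, 5, 50)    # default interval of 50 steps
every_step = logged_steps(10, 5, 1)  # as with Trainer(log_every_n_steps=1)
print(len(default), len(every_step))  # 1 50
```

Under this assumption, the default interval yields a single logged point over the whole run, while an interval of 1 logs all 50 steps.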