How to use a one-cycle learning rate? #9601

Unanswered

talhaanwarch asked this question in Lightning Trainer API: Trainer, LightningModule, LightningDataModule

Replies: 3 comments 6 replies


Take a look at issue #9630.


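Dear @talhaanwarch, your scheduler is currently stepped only at the end of each epoch. Return it with `"interval": "step"` so that it is stepped after every batch:

```python
import math

import torch
from torch.optim.lr_scheduler import OneCycleLR

def configure_optimizers(self):
    opt = torch.optim.AdamW(params=self.parameters(), lr=self.lr)
    # ceil matches the number of batches when the DataLoader keeps the last partial batch
    steps_per_epoch = math.ceil(len(self.train_split) / self.batch_size)
    scheduler = OneCycleLR(opt, max_lr=1e-2, epochs=50, steps_per_epoch=steps_per_epoch)
    # "interval": "step" makes Lightning call scheduler.step() after every batch
    return [opt], [{"scheduler": scheduler, "interval": "step"}]
```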
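To see why stepping once per epoch leaves the curve stuck on the way up, here is a minimal pure-PyTorch sketch (no Lightning) using the same OneCycleLR settings as above plus a hypothetical `steps_per_epoch=100`. OneCycleLR plans `epochs * steps_per_epoch = 5000` steps and, with the default `pct_start=0.3`, spends roughly the first 1500 of them increasing the LR. If the scheduler is only stepped once per epoch it gets 50 steps and never leaves the warm-up phase; stepping once per batch runs the whole cycle. The helper `lr_trace` and the dummy parameter are illustrative assumptions.

```python
import torch
from torch.optim.lr_scheduler import OneCycleLR

def lr_trace(n_calls, epochs=50, steps_per_epoch=100, max_lr=1e-2):
    """Return the LR after each of the first `n_calls` scheduler.step() calls."""
    param = torch.nn.Parameter(torch.zeros(1))  # dummy parameter, no real model
    opt = torch.optim.AdamW([param], lr=1e-4)
    sched = OneCycleLR(opt, max_lr=max_lr, epochs=epochs, steps_per_epoch=steps_per_epoch)
    lrs = []
    for _ in range(n_calls):
        opt.step()    # optimizer first, then scheduler (PyTorch's expected order)
        sched.step()
        lrs.append(sched.get_last_lr()[0])
    return lrs

# Stepping once per epoch: only 50 of the 5000 planned steps
per_epoch = lr_trace(50)
# Stepping once per batch: all 5000 planned steps
per_batch = lr_trace(50 * 100)

print(f"per-epoch: max {max(per_epoch):.2e}, last {per_epoch[-1]:.2e}")
print(f"per-batch: max {max(per_batch):.2e}, last {per_batch[-1]:.2e}")
```

The per-epoch trace only ever increases, whereas the per-batch trace peaks at `max_lr` and finishes close to zero.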


@rohitgr7 @tchaton I am still unable to fix it. I have created a complete gist; I hope it helps.


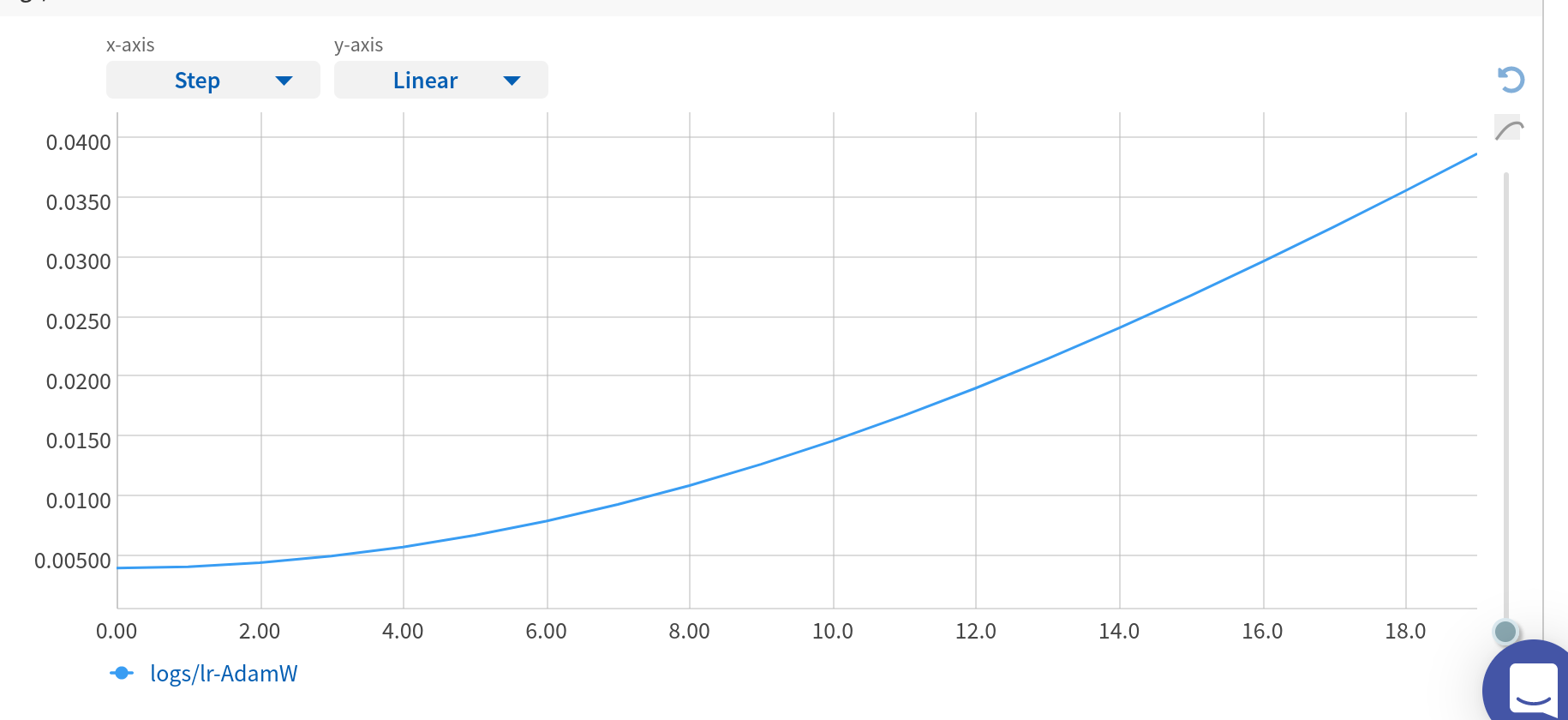

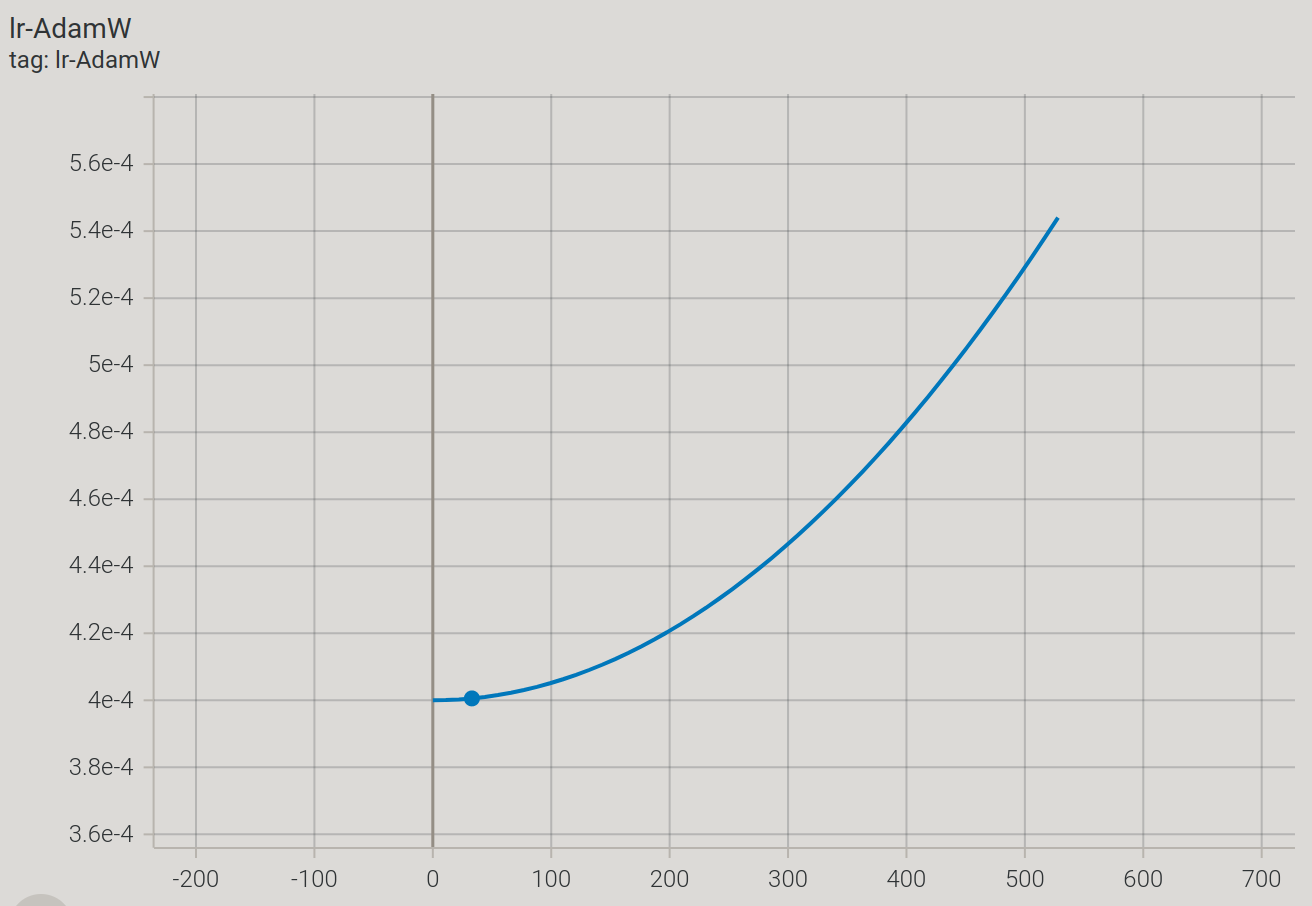

My one-cycle learning rate curve goes up but never comes back down, i.e. the cycle is not completed.

lr=1e-4
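Note that the optimizer's `lr=1e-4` is not where OneCycleLR starts: it overwrites the optimizer's LR with `max_lr / div_factor` (`div_factor` defaults to 25) and anneals down to that value divided by `final_div_factor`. A small sketch checking this, assuming `max_lr=1e-2` as in the reply above and a dummy parameter; `starting_lr` is a hypothetical helper:

```python
import torch
from torch.optim.lr_scheduler import OneCycleLR

def starting_lr(max_lr, div_factor=25.0, optimizer_lr=1e-4):
    """LR that OneCycleLR writes into the optimizer before the first batch."""
    param = torch.nn.Parameter(torch.zeros(1))  # dummy parameter
    opt = torch.optim.AdamW([param], lr=optimizer_lr)
    OneCycleLR(opt, max_lr=max_lr, total_steps=100, div_factor=div_factor)
    return opt.param_groups[0]["lr"]

# Default div_factor=25: the schedule starts at 1e-2 / 25, ignoring optimizer_lr
print(starting_lr(1e-2))
# To start from 1e-4 instead, choose div_factor = max_lr / 1e-4 = 100
print(starting_lr(1e-2, div_factor=100.0))
```

With the defaults the schedule starts at 4e-4; passing `div_factor=100` makes it start at 1e-4.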

Here is the learning rate monitor:

```python
from pytorch_lightning.callbacks import LearningRateMonitor

lr_monitor = LearningRateMonitor(logging_interval='epoch')
```

Code for the trainer:
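`logging_interval` only controls how often `LearningRateMonitor` records the LR; it does not change how often the scheduler is stepped. The sketch below is pure PyTorch with hypothetical sizes (50 epochs of 100 batches): it steps OneCycleLR every batch and records the LR once at the start of each epoch, roughly mimicking an epoch-level log. Even with only 50 samples, the recorded values rise to `max_lr` and fall again, so an epoch-level monitor is enough to see a complete cycle once the scheduler itself is stepped per batch.

```python
import torch
from torch.optim.lr_scheduler import OneCycleLR

EPOCHS, STEPS_PER_EPOCH = 50, 100  # hypothetical sizes

param = torch.nn.Parameter(torch.zeros(1))  # dummy parameter
opt = torch.optim.AdamW([param], lr=1e-4)
sched = OneCycleLR(opt, max_lr=1e-2, epochs=EPOCHS, steps_per_epoch=STEPS_PER_EPOCH)

epoch_log = []  # roughly what an epoch-level LR log would contain
for epoch in range(EPOCHS):
    epoch_log.append(opt.param_groups[0]["lr"])  # one sample per epoch
    for _ in range(STEPS_PER_EPOCH):
        opt.step()
        sched.step()  # the scheduler still steps after every batch

print(f"first {epoch_log[0]:.2e}, peak {max(epoch_log):.2e}, last {epoch_log[-1]:.2e}")
```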

Here is the curve in the logger:
