|

66 | 66 | "\n", |

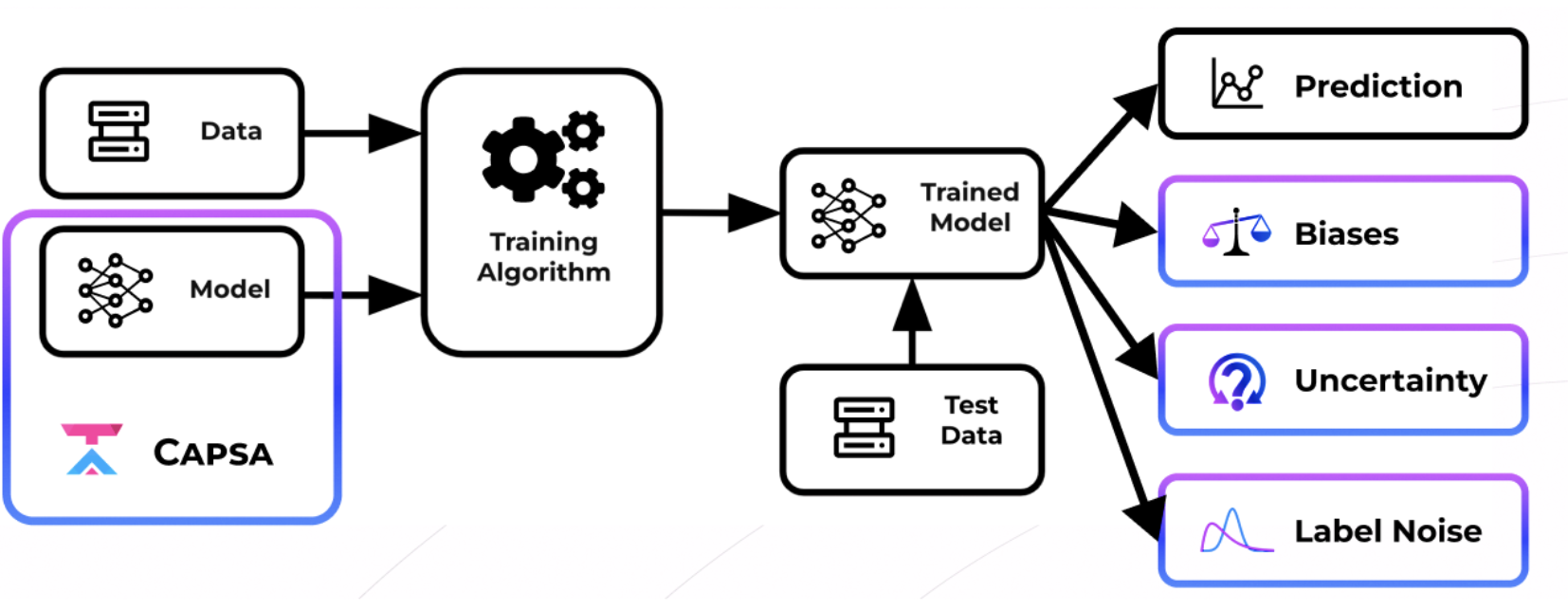

67 | 67 | "This lab introduces Capsa and its functionalities, to next build automated tools that use Capsa to mitigate the underlying issues of bias and uncertainty.\n", |

68 | 68 | "\n", |

69 | | - "The core idea behind Capsa is that any deep learning model of interest can be ***wrapped*** -- just like wrapping a gift -- to be made ***aware of its own risks***. Risk is captured in representation bias, data uncertainty, and model uncertainty.\n", |

| 69 | + "The core idea behind [Capsa](https://themisai.io/capsa/) is that any deep learning model of interest can be ***wrapped*** -- just like wrapping a gift -- to be made ***aware of its own risks***. Risk is captured in representation bias, data uncertainty, and model uncertainty.\n", |

70 | 70 | "\n", |

71 | 71 | "\n", |

72 | 72 | "\n", |

73 | | - "This means that Capsa takes the user's original model as input, and modifies it minimally to create a risk-aware variant while preserving the model's underlying structure and training pipeline. Capsa is a one-line addition to any training workflow in TensorFlow. In this part of the lab, we'll apply Capsa's risk estimation methods to a simple regression problem to further explore the notions of bias and uncertainty. " |

| 73 | + "This means that Capsa takes the user's original model as input, and modifies it minimally to create a risk-aware variant while preserving the model's underlying structure and training pipeline. Capsa is a one-line addition to any training workflow in TensorFlow. In this part of the lab, we'll apply Capsa's risk estimation methods to a simple regression problem to further explore the notions of bias and uncertainty. \n", |

| 74 | + "\n", |

| 75 | + "Please refer to [Capsa's documentation](https://themisai.io/capsa/) for additional details." |

74 | 76 | ] |

75 | 77 | }, |

76 | 78 | { |

|

270 | 272 | "\n", |

271 | 273 | "Now that we've seen what the predictions from this model look like, we will identify and quantify bias and uncertainty in this problem. We first consider bias.\n", |

272 | 274 | "\n", |

273 | | - "Recall that *representation bias* reflects how likely combinations of features are to appear in a given dataset. Capsa calculates how likely combinations of features are by using a histogram estimation approach: the `capsa.HistogramWrapper`. For low-dimensional data, the `capsa.HistogramWrapper` bins the input directly into discrete categories and measures the density. \n", |

| 275 | + "Recall that *representation bias* reflects how likely combinations of features are to appear in a given dataset. Capsa calculates how likely combinations of features are by using a histogram estimation approach: the `capsa.HistogramWrapper`. For low-dimensional data, the `capsa.HistogramWrapper` bins the input directly into discrete categories and measures the density. More details of the `HistogramWrapper` and how it can be used are [available here](https://themisai.io/capsa/api_documentation/HistogramWrapper.html).\n", |

274 | 276 | "\n", |

275 | 277 | "We start by taking our `dense_NN` and wrapping it with the `capsa.HistogramWrapper`:" |

276 | 278 | ] |
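
For intuition about the histogram approach described in this hunk, here is a minimal pure-Python sketch of one-dimensional histogram density estimation. It does not use Capsa and is not a reproduction of `capsa.HistogramWrapper`; the helper name `histogram_density`, the bin count, and the triangular sample distribution are all assumptions chosen for illustration.

```python
import random


def histogram_density(samples, low, high, num_bins):
    """Build a fixed-width histogram over [low, high] and return a density lookup.

    Hypothetical helper for illustration only; not part of Capsa.
    """
    width = (high - low) / num_bins
    counts = [0] * num_bins

    def bin_index(x):
        # Clamp so that x == high falls into the last bin.
        return min(max(int((x - low) / width), 0), num_bins - 1)

    for x in samples:
        counts[bin_index(x)] += 1

    def density(x):
        if x < low or x > high:
            return 0.0  # outside the histogram range: never observed
        # Fraction of samples in the bin, divided by the bin width.
        return counts[bin_index(x)] / (len(samples) * width)

    return density


# Synthetic inputs that are common near 0 and rare near the edges.
rng = random.Random(0)
samples = [rng.triangular(-4.0, 4.0, 0.0) for _ in range(10_000)]
density = histogram_density(samples, low=-4.0, high=4.0, num_bins=16)

print(f"density near 0.0: {density(0.0):.3f}")
print(f"density near 3.9: {density(3.9):.3f}")
```

Inputs that land in sparsely populated bins get a low density score, which is the sense in which they are under-represented in the dataset.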

|

389 | 391 | "\n", |

390 | 392 | "As introduced in Lecture 5 on Robust & Trustworthy Deep Learning, in regression we can estimate aleatoric uncertainty by training the model to predict both a target value and a variance for every input. Because we estimate both a mean and variance for every input, this method is called Mean Variance Estimation (MVE). MVE involves modifying the output layer to predict both the mean and variance, and changing the loss to reflect the prediction likelihood.\n", |

391 | 393 | "\n", |

392 | | - "Capsa automatically implements these changes for us: we can wrap a given model using `capsa.MVEWrapper` to use MVE to estimate aleatoric uncertainty. All we have to do is define the model and the loss function to evaluate its predictions!\n", |

| 394 | + "Capsa automatically implements these changes for us: we can wrap a given model using `capsa.MVEWrapper` to use MVE to estimate aleatoric uncertainty. All we have to do is define the model and the loss function to evaluate its predictions! More details of the `MVEWrapper` and how it can be used are [available here](https://themisai.io/capsa/api_documentation/MVEWrapper.html).\n", |

393 | 395 | "\n", |

394 | 396 | "Let's take our standard network, wrap it with `capsa.MVEWrapper`, build the wrapped model, and then train it for the regression task. Finally, we evaluate performance of the resulting model by quantifying the aleatoric uncertainty across the data space: " |

395 | 397 | ] |
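
The "loss that reflects the prediction likelihood" mentioned in this hunk can be sketched as a Gaussian negative log-likelihood. The snippet below is a standalone illustration in plain Python, not Capsa's implementation of `capsa.MVEWrapper`; the function name `gaussian_nll` and the choice to parameterize the variance by its logarithm are assumptions.

```python
import math


def gaussian_nll(target, mean, log_variance):
    """Negative log-likelihood of `target` under N(mean, exp(log_variance)).

    Hypothetical helper for illustration only; not part of Capsa.
    Using the log-variance keeps the variance positive for any real input.
    """
    variance = math.exp(log_variance)
    return 0.5 * (
        math.log(2.0 * math.pi) + log_variance + (target - mean) ** 2 / variance
    )


# For a fixed residual of 2.0, compare three candidate predicted variances.
target, mean = 2.0, 0.0
for variance in (1.0, 4.0, 16.0):
    loss = gaussian_nll(target, mean, math.log(variance))
    print(f"variance={variance:5.1f}  nll={loss:.3f}")
```

For a fixed residual, the loss is lowest when the predicted variance matches the squared residual (4.0 here): an overconfident variance is penalized by the squared-error term, and an overly large one by the log-variance term.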

|

467 | 469 | "\n", |

468 | 470 | "Finally, we use Capsa for estimating the uncertainty underlying the model predictions -- the epistemic uncertainty. In this example, we'll use ensembles, which essentially copy the model `N` times and average predictions across all runs for a more robust prediction, and also calculate the variance of the `N` runs to estimate the uncertainty.\n", |

469 | 471 | "\n", |

470 | | - "Capsa provides a neat wrapper, `capsa.EnsembleWrapper`, to make an ensemble from an input model. Just like with aleatoric estimation, we can take our standard dense network model, wrap it with `capsa.EnsembleWrapper`, build the wrapped model, and then train it for the regression task. Finally, we evaluate the resulting model by quantifying the epistemic uncertainty on the test data:" |

| 472 | + "Capsa provides a neat wrapper, `capsa.EnsembleWrapper`, to make an ensemble from an input model. Just like with aleatoric estimation, we can take our standard dense network model, wrap it with `capsa.EnsembleWrapper`, build the wrapped model, and then train it for the regression task. More details of the `EnsembleWrapper` and how it can be used are [available here](https://themisai.io/capsa/api_documentation/EnsembleWrapper.html).\n", |

| 473 | + "\n", |

| 474 | + "Finally, we evaluate the resulting model by quantifying the epistemic uncertainty on the test data:" |

471 | 475 | ] |

472 | 476 | }, |

473 | 477 | { |
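
The ensemble idea in this hunk (fit `N` copies, average their predictions, and use the variance across members as the uncertainty) can be sketched without any deep learning library. This is not `capsa.EnsembleWrapper`: the members here are closed-form linear fits, and because such fits have no random initialization, diversity comes from bootstrap resampling instead, which is a swapped-in technique. All function names and the synthetic data are assumptions for illustration.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def train_ensemble(xs, ys, num_members, rng):
    """Fit each member on a bootstrap resample of the training data."""
    members = []
    for _ in range(num_members):
        idx = [rng.randrange(len(xs)) for _ in xs]
        members.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return members


def predict_with_uncertainty(members, x):
    """Return the ensemble mean and the variance across member predictions."""
    preds = [slope * x + intercept for slope, intercept in members]
    return statistics.fmean(preds), statistics.pvariance(preds)


# Synthetic training data: x in [0, 1], y = 2x + 1 plus small noise.
rng = random.Random(0)
xs = [rng.uniform(0.0, 1.0) for _ in range(50)]
ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.1) for x in xs]
members = train_ensemble(xs, ys, num_members=20, rng=rng)

for x in (0.5, 10.0):
    mean, var = predict_with_uncertainty(members, x)
    print(f"x={x:5.1f}  mean={mean:.3f}  variance={var:.6f}")
```

Members agree closely inside the training range and diverge far outside it, so the variance across members grows where the training data offers less support.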

|
