|

15 | 15 | # Download possion_64 data from https://drive.google.com/drive/folders/1crIsTZGxZULWhrXkwGDiWF33W6RHxJkf |

16 | 16 | # Download helmholtz_64 data from https://drive.google.com/drive/folders/1UjIaF6FsjmN_xlGGSUX-1K2V3EF2Zalw |

17 | 17 |

|

18 | | - # Update the file paths in `cexamples/data_efficient_nopt/config/data_efficient_nopt.yaml`, specify to mode in `train` |

19 | | - # UPdate the file paths in config/operators_poisson.yaml or config/operators_helmholtz.yaml, specify to `train_path`, `val_path`, `test_path`, `scales_path` and `train_rand_idx_path` |

20 | | - |

21 | | - # possion_64 or helmholtz_64 pretrain, for example, specify as following: |

22 | | - # run_name: r0 |

23 | | - # config: helm-64-pretrain-o1_20_m1 |

24 | | - # yaml_config: config/operators_helmholtz.yaml |

25 | | - python data_efficient_nopt.py |

26 | | - |

27 | | - # possion_64 or helmholtz_64 finetune, for example, specify as following: |

28 | | - # run_name: r0 |

29 | | - # config: helm-64-o5_15_ft5_r2 |

30 | | - # yaml_config: config/operators_helmholtz.yaml |

31 | | - python data_efficient_nopt.py |

| 18 | + # Update the file paths in `cexamples/data_efficient_nopt/config/data_efficient_nopt.yaml`, specify to mode in `train`, and then specify to `train_path`, `val_path`, `test_path`, `scales_path` and `train_rand_idx_path` |

| 19 | + |

| 20 | + # pretrain or finetune, for possion_64 or helmholtz_64. |

| 21 | + # specify config_name to fno_possion using `data_efficient_nopt_fno_poisson`, or to fno_helmholtz using `data_efficient_nopt_fno_helmholtz` |

| 22 | + python data_efficient_nopt.py --config-name=<config_name> |

32 | 23 | ``` |

33 | 24 |

|

34 | 25 | === "模型评估命令" |

|

43 | 34 |

|

44 | 35 | ``` sh |

45 | 36 | cd examples/data_efficient_nopt |

46 | | - # Update the file paths in `cexamples/data_efficient_nopt/config/data_efficient_nopt.yaml`, specify to mode in `infer` |

| 37 | + # Update the file paths in `cexamples/data_efficient_nopt/config/data_efficient_nopt.yaml`, specify to mode in `infer`, and then specify to `ckpt_path`, `train_path`, `test_path` and `scales_path` |

47 | 38 | # Use a fine-tuned model as the checkpoint in 'exp' or utilize `model_convert.py` to convert the official checkpoint. |

48 | | - # UPdate the file paths in config/inference_poisson.yaml or config/inference_poisson.yaml, specify to `train_path`, `test_path` and `scales_path` |

49 | 39 |

|

50 | 40 | # use your onw finetune checkpoints, or download checkpoint from [FNO-Poisson](https://drive.google.com/drive/folders/1ekmXqqvpaY6pNStTciw1SCAzF0gjFP_V) or [FNO-Helmholtz](https://drive.google.com/drive/folders/1k7US8ZAgB14Wj9bfdgO_Cjw6hOrG6UaZ) and then convert to paddlepaddle weights. |

51 | 41 | python model_convert.py --pt-model <pt_checkpiont> --pd-model <pd_checkpiont> |

52 | 42 |

|

53 | | - # possion_64 inference, specify as following: |

54 | | - # evaluation: config/inference_poisson.yaml |

| 43 | + # inference for possion_64 or helmholtz_64, specify in config as following: |

55 | 44 | # ckpt_path: <ckpt_path> |

56 | | - python data_efficient_nopt.py |

| 45 | + # specify config_name to fno_possion using `data_efficient_nopt_fno_poisson`, or to fno_helmholtz using `data_efficient_nopt_fno_helmholtz` |

| 46 | + python data_efficient_nopt.py --config-name=<config_name> |

57 | 47 | ``` |

58 | 48 |

|

59 | 49 | ## 1. 背景简介 |

|

89 | 79 |

|

90 | 80 | 为了解决上述问题,论文提出了一种创新性的数据高效神经算子学习框架,该框架主要由两个阶段组成:*无监督预训练*和*情境学习推理*。 |

91 | 81 |

|

| 82 | + |

| 83 | + |

92 | 84 | #### 3.1 无监督预训练 (Unsupervised Pretraining) |

93 | 85 |

|

94 | 86 | 无监督预训练的目标是在不使用昂贵PDE解数据的情况下,预先训练神经算子以学习物理相关的特征表示,为后续的监督微调提供一个良好的初始化。 |

@@ -161,6 +153,14 @@ examples/data_efficient_nopt/data_efficient_nopt.py |

161 | 153 | - 无需训练开销:情境学习在推理阶段灵活地引入相似示例,且不增加任何训练成本。 |

162 | 154 | - 持续性能提升:实验证明,通过增加情境示例的数量,神经算子在各种PDE上的OOD泛化能力能够持续得到提升。这为在模型部署后动态提升其在复杂未知情况下的性能提供了可能。尤其是在解决OOD问题时,情境示例能够帮助模型校准输出的量级和模式,使其更接近真实解。 |

163 | 155 |

|

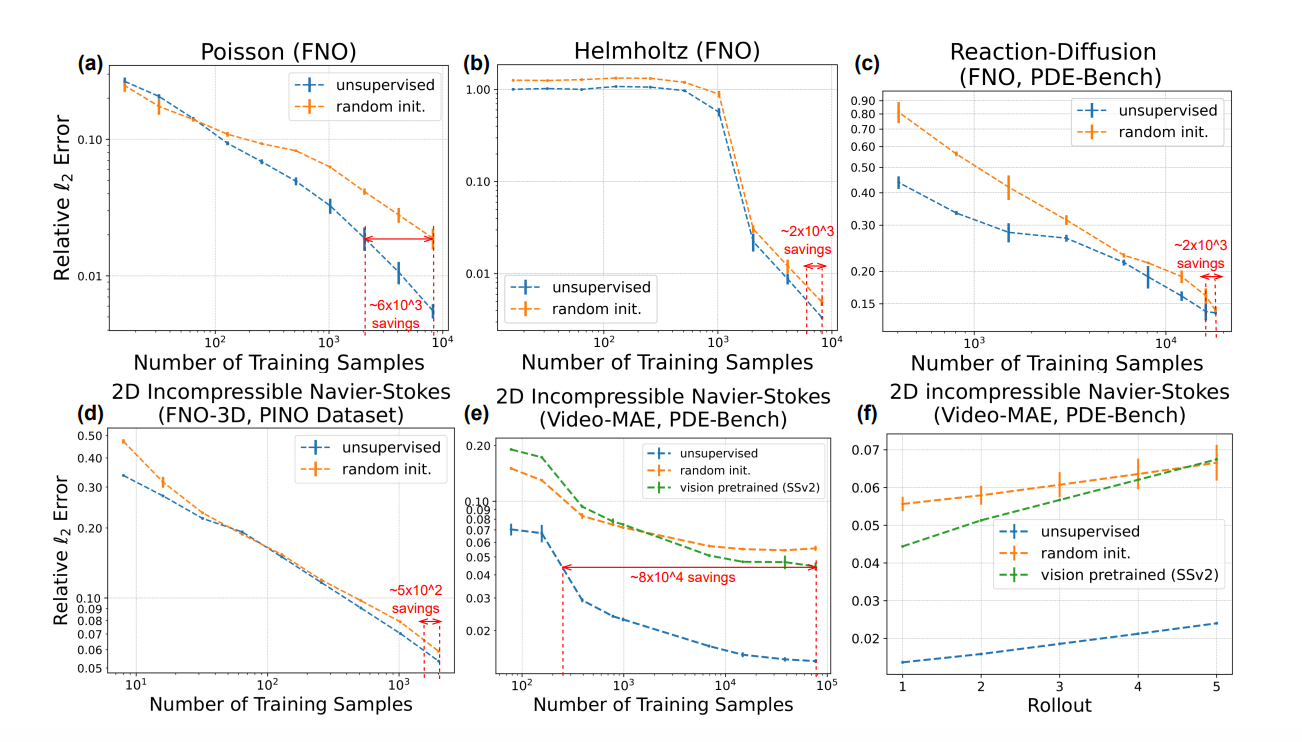

| 156 | +图2体现了在该方法下,在多种泛化场景下,数据效率使用的提升,有更好的收敛速度。 |

| 157 | + |

| 158 | + |

| 159 | + |

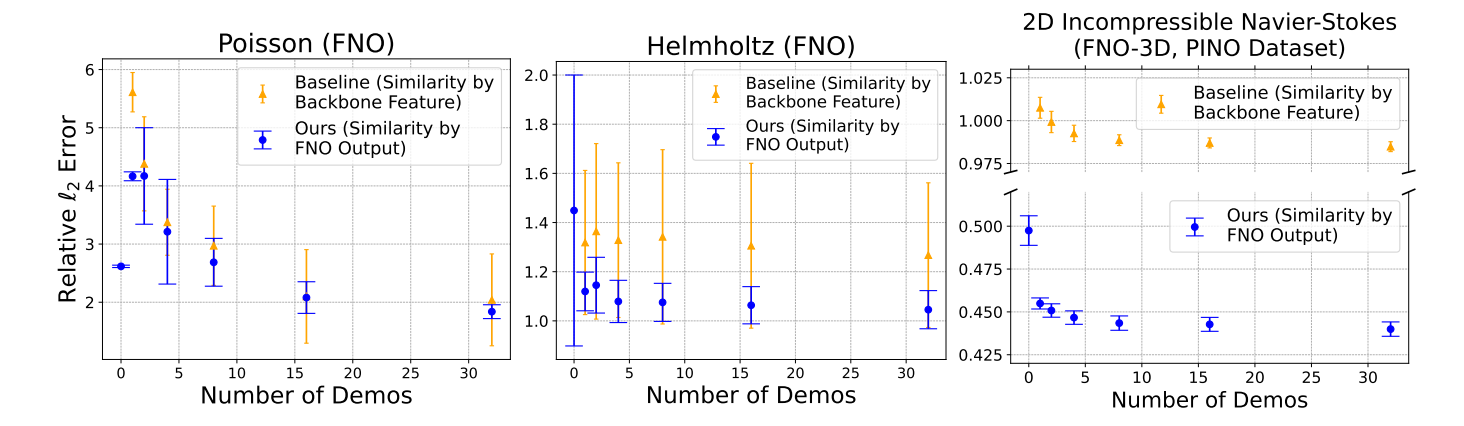

| 160 | +图3体现该种方法在情景推理场景中,超越了现有的通用预训练模型,具有额外泛化优势。 |

| 161 | + |

| 162 | + |

| 163 | + |

164 | 164 | 总而言之,这篇论文提出了一种创新且高效的神经算子学习框架,通过无监督预训练在大量廉价的无标签物理数据上学习通用表示,并通过情境学习在推理阶段利用少量相似案例来提升OOD泛化能力。这一框架显著降低了对昂贵模拟数据的需求,并提高了模型在复杂物理问题中的适应性和泛化性,为科学机器学习的数据高效发展开辟了新途径。 |

165 | 165 |

|

166 | 166 | 下方展示了部分实验结果: |

|

0 commit comments