diff --git a/README.md b/README.md

index 5b6ecba58d..4529c22442 100644

--- a/README.md

+++ b/README.md

@@ -124,6 +124,7 @@ PaddleScience 是一个基于深度学习框架 PaddlePaddle 开发的科学计

| 天气预报 | [FengWu 气象预报](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/fengwu) | 数据驱动 | Transformer | 监督学习 | - | [Paper](https://arxiv.org/pdf/2304.02948) |

| 天气预报 | [Pangu-Weather 气象预报](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/pangu_weather) | 数据驱动 | Transformer | 监督学习 | - | [Paper](https://arxiv.org/pdf/2211.02556) |

| 大气污染物 | [UNet 污染物扩散](https://aistudio.baidu.com/projectdetail/5663515?channel=0&channelType=0&sUid=438690&shared=1&ts=1698221963752) | 数据驱动 | UNet | 监督学习 | [Data](https://aistudio.baidu.com/datasetdetail/198102) | - |

+| 大气污染物 | [STAFNet 污染物浓度预测](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/stafnet) | 数据驱动 | STAFNet | 监督学习 | [Data](https://quotsoft.net/air) | [Paper](https://link.springer.com/chapter/10.1007/978-3-031-78186-5_22) |

| 天气预报 | [DGMR 气象预报](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/dgmr.md) | 数据驱动 | GAN | 监督学习 | [UK dataset](https://huggingface.co/datasets/openclimatefix/nimrod-uk-1km) | [Paper](https://arxiv.org/pdf/2104.00954.pdf) |

| 地震波形反演 | [VelocityGAN 地震波形反演](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/velocity_gan.md) | 数据驱动 | VelocityGAN | 监督学习 | [OpenFWI](https://openfwi-lanl.github.io/docs/data.html#vel) | [Paper](https://arxiv.org/abs/1809.10262v6) |

| 交通预测 | [TGCN 交通流量预测](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/tgcn.md) | 数据驱动 | GCN & CNN | 监督学习 | [PEMSD4 & PEMSD8](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tgcn/tgcn_data.zip) | - |

diff --git a/docs/index.md b/docs/index.md

index 95ec7b42c5..5268a23fae 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -156,6 +156,7 @@

| 天气预报 | [FengWu 气象预报](./zh/examples/fengwu.md) | 数据驱动 | Transformer | 监督学习 | - | [Paper](https://arxiv.org/pdf/2304.02948) |

| 天气预报 | [Pangu-Weather 气象预报](./zh/examples/pangu_weather.md) | 数据驱动 | Transformer | 监督学习 | - | [Paper](https://arxiv.org/pdf/2211.02556) |

| 大气污染物 | [UNet 污染物扩散](https://aistudio.baidu.com/projectdetail/5663515?channel=0&channelType=0&sUid=438690&shared=1&ts=1698221963752) | 数据驱动 | UNet | 监督学习 | [Data](https://aistudio.baidu.com/datasetdetail/198102) | - |

+| 大气污染物 | [STAFNet 污染物浓度预测](./zh/examples/stafnet.md) | 数据驱动 | STAFNet | 监督学习 | [Data](https://quotsoft.net/air) | [Paper](https://link.springer.com/chapter/10.1007/978-3-031-78186-5_22) |

| 天气预报 | [DGMR 气象预报](./zh/examples/dgmr.md) | 数据驱动 | GAN | 监督学习 | [UK dataset](https://huggingface.co/datasets/openclimatefix/nimrod-uk-1km) | [Paper](https://arxiv.org/pdf/2104.00954.pdf) |

| 地震波形反演 | [VelocityGAN 地震波形反演](./zh/examples/velocity_gan.md) | 数据驱动 | VelocityGAN | 监督学习 | [OpenFWI](https://openfwi-lanl.github.io/docs/data.html#vel) | [Paper](https://arxiv.org/abs/1809.10262v6) |

| 交通预测 | [TGCN 交通流量预测](./zh/examples/tgcn.md) | 数据驱动 | GCN & CNN | 监督学习 | [PEMSD4 & PEMSD8](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tgcn/tgcn_data.zip) | - |

diff --git a/docs/zh/api/arch.md b/docs/zh/api/arch.md

index e69bf9e752..456e7df97c 100644

--- a/docs/zh/api/arch.md

+++ b/docs/zh/api/arch.md

@@ -29,6 +29,7 @@

- NowcastNet

- SFNONet

- SPINN

+ - STAFNet

- TFNO1dNet

- TFNO2dNet

- TFNO3dNet

diff --git a/docs/zh/api/data/dataset.md b/docs/zh/api/data/dataset.md

index 60718dd0a3..e5a4c3d159 100644

--- a/docs/zh/api/data/dataset.md

+++ b/docs/zh/api/data/dataset.md

@@ -35,4 +35,5 @@

- DrivAerNetDataset

- DrivAerNetPlusPlusDataset

- IFMMoeDataset

+ - STAFNetDataset

show_root_heading: true

diff --git a/docs/zh/examples/stafnet.md b/docs/zh/examples/stafnet.md

new file mode 100644

index 0000000000..0ec8d7bf18

--- /dev/null

+++ b/docs/zh/examples/stafnet.md

@@ -0,0 +1,166 @@

+# STAFNet: Spatiotemporal-Aware Fusion Network for Air Quality Prediction

+

+| 预训练模型 | 指标 |

+| ------------------------------------------------------------ | -------------------- |

+| [stafnet.pdparams](https://paddle-org.bj.bcebos.com/paddlescience/models/stafnet/stafnet.pdparams) | MAE(1-48h) : 8.70933 |

+

+=== "模型训练命令"

+

+``` sh

+python stafnet.py DATASET.data_dir="Your train dataset path" EVAL.eval_data_path="Your evaluate dataset path"

+```

+

+=== "模型评估命令"

+

+``` sh

+wget -nc https://paddle-org.bj.bcebos.com/paddlescience/datasets/stafnet/val_data.pkl -P ./dataset/

+python stafnet.py mode=eval EVAL.pretrained_model_path="https://paddle-org.bj.bcebos.com/paddlescience/models/stafnet/stafnet.pdparams"

+```

+

+## 1. 背景介绍

+

+近些年,全球城市化和工业化不可避免地导致了严重的空气污染问题。心脏病、哮喘和肺癌等非传染性疾病的高发与暴露于空气污染直接相关。因此,空气质量预测已成为公共卫生、国民经济和城市管理的研究热点。目前已经建立了大量监测站来监测空气质量,并将其地理位置和历史观测数据合并为时空数据。然而,由于空气污染形成和扩散的高度复杂性,空气质量预测仍然面临着一些挑战。

+

+首先,空气中污染物的排放和扩散会导致邻近地区的空气质量迅速恶化,这一现象在托布勒地理第一定律中被描述为空间依赖关系,建立空间关系模型对于预测空气质量至关重要。然而,由于空气监测站的地理分布稀疏,要捕捉数据中内在的空间关联具有挑战性。其次,空气质量受到多源复杂因素的影响,尤其是气象条件。例如,长时间的小风或静风会抑制空气污染物的扩散,而自然降雨则在清除和冲刷空气污染物方面发挥作用。然而,空气质量站和气象站位于不同区域,导致多模态特征不对齐。融合不对齐的多模态特征并获取互补信息以准确预测空气质量是另一个挑战。最后但并非最不重要的一点是,空气质量的变化具有明显的多周期性特征。利用这一特点对提高空气质量预测的准确性非常重要,但也具有挑战性。

+

+针对空气质量预测提出了许多研究。早期的方法侧重于学习单个观测站观测数据的时间模式,而放弃了观测站之间的空间关系。最近,由于图神经网络(GNN)在处理非欧几里得图结构方面的有效性,越来越多的方法采用 GNN 来模拟空间依赖关系。这些方法将车站位置作为上下文特征,隐含地建立空间依赖关系模型,没有充分利用车站位置和车站之间关系所包含的宝贵空间信息。此外,现有的时空 GNN 缺乏在错位图中融合多个特征的能力。因此,大多数方法都需要额外的插值算法,以便在早期阶段将气象特征与 AQ 特征进行对齐和连接。这种方法消除了空气质量站和气象站之间的空间和结构信息,还可能引入噪声导致误差累积。此外,在空气质量预测中利用多周期性的问题仍未得到探索。

+

+该案例研究时空图网络在空气质量预测方向上的应用。

+

+## 2. 模型原理

+

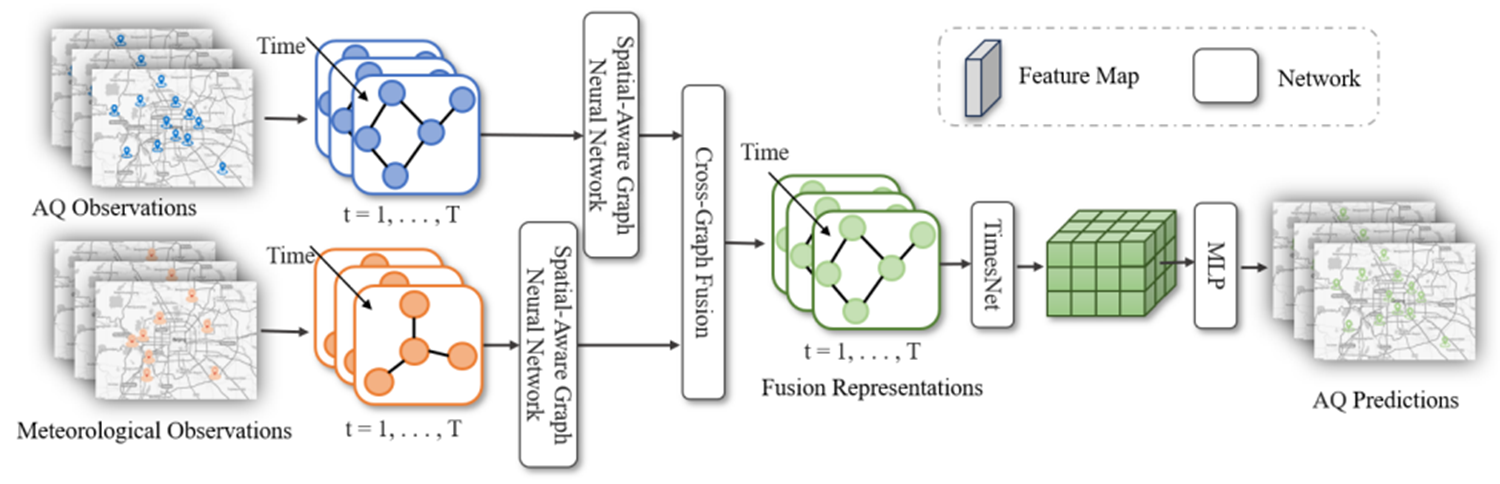

+STAFNet是一个新颖的多模式预报框架--时空感知融合网络来预测空气质量。STAFNet 由三个主要部分组成:空间感知 GNN、跨图融合关注机制和 TimesNet 。具体来说,为了捕捉各站点之间的空间关系,我们首先引入了空间感知 GNN,将空间信息明确纳入信息传递和节点表示中。为了全面表示气象影响,我们随后提出了一种基于交叉图融合关注机制的多模态融合策略,在不同类型站点的数量和位置不一致的情况下,将气象数据整合到 AQ 数据中。受多周期分析的启发,我们采用 TimesNet 将时间序列数据分解为不同频率的周期信号,并分别提取时间特征。

+

+本章节仅对 STAFNet的模型原理进行简单地介绍,详细的理论推导请阅读 STAFNet: Spatiotemporal-Aware Fusion Network for Air Quality Prediction

+

+模型的总体结构如图所示:

+

+

+

+STAFNet网络模型

+

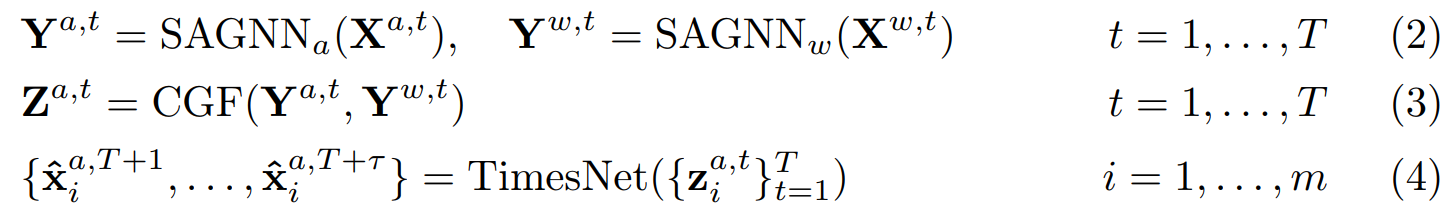

+STAFNet 包含三个模块,分别将空间信息、气象信息和历史信息融合到空气质量特征表征中。首先模型的输入:过去T个时刻的**空气质量**数据和**气象**数据,使用两个空间感知 GNN(SAGNN),利用监测站之间的空间关系分别提取空气质量和气象信息。然后,跨图融合注意(CGF)将气象信息融合到空气质量表征中。最后,我们采用 TimesNet 模型来描述空气质量序列的时间动态,并生成多步骤预测。这一推理过程可表述如下,

+

+

+

+## 3. 模型构建

+

+### 3.1 数据集介绍

+

+数据集采用了STAFNet处理好的北京空气质量数据集。数据集都包含:

+

+(1)空气质量观测值(即 PM2.5、PM10、O3、NO2、SO2 和 CO);

+

+(2)气象观测值(即温度、气压、湿度、风速和风向);

+

+(3)站点位置(即经度和纬度)。

+

+所有空气质量和气象观测数据每小时记录一次。数据集的收集时间为 2021 年 1 月 24 日至 2023 年 1 月 19 日,按 9:1的比例将数据分为训练集和测试集。空气质量观测数据来自国家城市空气质量实时发布平台,气象观测数据来自中国气象局。数据集的具体细节如下表所示:

+

+

北京空气质量数据集

+

+具体的数据集可从https://quotsoft.net/air/下载。

+

+运行本问题代码前请下载[数据集](https://paddle-org.bj.bcebos.com/paddlescience/datasets/stafnet/val_data.pkl), 下载后分别存放在路径:

+

+```

+./dataset

+```

+

+### 3.2 模型搭建

+

+在STAFNet模型中,输入过去72小时35个站点的空气质量数据,预测这35个站点未来48小时的空气质量。在本问题中,我们使用神经网络 `stafnet` 作为模型,其接收图结构数据,输出预测结果。

+

+```py linenums="10" title="examples/stafnet/stafnet.py"

+--8<--

+examples/stafnet/stafnet.py:10:10

+--8<--

+```

+

+### 3.4 参数和超参数设定

+

+其中超参数`cfg.MODEL.gat_hidden_dim`、`cfg.MODEL.e_layers`、`cfg.MODEL.d_model`、`cfg.MODEL.top_k`等默认设定如下:

+

+``` yaml linenums="35" title="examples/stafnet/conf/stafnet.yaml"

+--8<--

+examples/stafnet/conf/stafnet.yaml:35:59

+--8<--

+```

+

+### 3.5 优化器构建

+

+训练过程会调用优化器来更新模型参数,此处选择较为常用的 `Adam` 优化器。

+

+``` py linenums="62" title="examples/stafnet/stafnet.py"

+--8<--

+examples/stafnet/stafnet.py:62:62

+--8<--

+```

+

+其中学习率相关的设定如下:

+

+``` yaml linenums="70" title="examples/stafnet/conf/stafnet.yaml"

+--8<--

+examples/stafnet/conf/stafnet.yaml:70:75

+--8<--

+```

+

+### 3.6 约束构建

+

+在本案例中,我们使用监督数据集对模型进行训练,因此需要构建监督约束。

+

+在定义约束之前,我们需要指定数据集的路径等相关配置,将这些信息存放到对应的 YAML 文件中,如下所示。

+

+``` yaml linenums="31" title="examples/stafnet/conf/stafnet.yaml"

+--8<--

+examples/stafnet/conf/stafnet.yaml:31:34

+--8<--

+```

+

+最后构建监督约束,如下所示。

+

+``` py linenums="46" title="examples/stafnet/stafnet.py"

+--8<--

+examples/stafnet/stafnet.py:46:51

+--8<--

+```

+

+### 3.7 评估器构建

+

+在训练过程中通常会按一定轮数间隔,用验证集(测试集)评估当前模型的训练情况,因此使用 `ppsci.validate.SupervisedValidator` 构建评估器,构建过程与 [约束构建 3.6](https://github.com/PaddlePaddle/PaddleScience/blob/develop/docs/zh/examples/stafnet.md#36) 类似,只需把数据目录改为测试集的目录,并在配置文件中设置 `EVAL.batch_size=1` 即可。

+

+``` py linenums="52" title="examples/stafnet/stafnet.py"

+--8<--

+examples/stafnet/stafnet.py:52:58

+--8<--

+```

+

+评估指标为预测结果和真实结果的MAE 值,因此使用PaddleScience内置的`ppsci.metric.MAE()`,如下所示。

+

+``` py linenums="55" title="examples/stafnet/stafnet.py"

+--8<--

+examples/stafnet/stafnet.py:55:55

+--8<--

+```

+

+### 3.8 模型训练

+

+由于本问题为时序预测问题,因此可以使用PaddleScience内置的`psci.loss.MAELoss('mean')`作为训练过程的损失函数。同时选择使用随机梯度下降法对网络进行优化。完成述设置之后,只需要将上述实例化的对象按顺序传递给 `ppsci.solver.Solver`,然后启动训练。具体代码如下:

+

+``` py linenums="66" title="examples/stafnet/stafnet.py"

+--8<--

+examples/stafnet/stafnet.py:66:82

+--8<--

+```

+

+## 4. 完整代码

+

+```py linenums="1" title="examples/stafnet/stafnet.py"

+--8<--

+examples/stafnet/stafnet.py

+--8<--

+```

+

+## 5. 参考资料

+

+- [STAFNet: Spatiotemporal-Aware Fusion Network for Air Quality Prediction](https://link.springer.com/chapter/10.1007/978-3-031-78186-5_22)

diff --git a/examples/quick_start/case3.ipynb b/examples/quick_start/case3.ipynb

index 452502ceea..322e3832a9 100644

--- a/examples/quick_start/case3.ipynb

+++ b/examples/quick_start/case3.ipynb

@@ -81,7 +81,6 @@

"\n",

"import ppsci\n",

"import sympy as sp\n",

- "import numpy as np\n",

"\n",

"# 设置薄板计算域长、宽参数\n",

"Lx = 2.0 # 薄板x方向长度(m)\n",

diff --git a/examples/stafnet/conf/stafnet.yaml b/examples/stafnet/conf/stafnet.yaml

new file mode 100644

index 0000000000..87345cad66

--- /dev/null

+++ b/examples/stafnet/conf/stafnet.yaml

@@ -0,0 +1,84 @@

+defaults:

+ - ppsci_default

+ - TRAIN: train_default

+ - TRAIN/ema: ema_default

+ - TRAIN/swa: swa_default

+ - EVAL: eval_default

+ - INFER: infer_default

+ - hydra/job/config/override_dirname/exclude_keys: exclude_keys_default

+ - _self_

+hydra:

+ run:

+ # dynamic output directory according to running time and override name

+ dir: outputs_stafnet/${now:%Y-%m-%d}/${now:%H-%M-%S}/${hydra.job.override_dirname}

+ job:

+ name: ${mode} # name of logfile

+ chdir: false # keep current working directory unchanged

+ callbacks:

+ init_callback:

+ _target_: ppsci.utils.callbacks.InitCallback

+ sweep:

+ # output directory for multirun

+ dir: ${hydra.run.dir}

+ subdir: ./

+

+# general settings

+mode: train # running mode: train/eval

+seed: 42

+output_dir: ${hydra:run.dir}

+log_freq: 20

+# dataset setting

+DATASET:

+ label_keys: [label]

+ data_dir: ./dataset/train_data.pkl

+

+MODEL:

+ input_keys: [aq_train_data, mete_train_data]

+ output_keys: [label]

+ output_attention: true

+ seq_len: 72

+ pred_len: 48

+ aq_gat_node_features: 7

+ aq_gat_node_num: 35

+ mete_gat_node_features: 7

+ mete_gat_node_num: 18

+ gat_hidden_dim: 32

+ gat_edge_dim: 3

+ e_layers: 1

+ enc_in: 7

+ dec_in: 7

+ c_out: 7

+ d_model: 16

+ embed: fixed

+ freq: t

+ dropout: 0.05

+ factor: 3

+ n_heads: 4

+ d_ff: 32

+ num_kernels: 6

+ top_k: 4

+

+# training settings

+TRAIN:

+ epochs: 100

+ iters_per_epoch: 400

+ save_freq: 10

+ eval_during_train: true

+ eval_freq: 10

+ batch_size: 32

+ learning_rate: 0.0001

+ lr_scheduler:

+ epochs: ${TRAIN.epochs}

+ iters_per_epoch: ${TRAIN.iters_per_epoch}

+ learning_rate: 0.0005

+ step_size: 20

+ gamma: 0.95

+ pretrained_model_path: null

+ checkpoint_path: null

+

+EVAL:

+ eval_data_path: ./dataset/val_data.pkl

+ pretrained_model_path: null

+ compute_metric_by_batch: false

+ eval_with_no_grad: true

+ batch_size: 32

diff --git a/examples/stafnet/stafnet.py b/examples/stafnet/stafnet.py

new file mode 100644

index 0000000000..77f3c0160b

--- /dev/null

+++ b/examples/stafnet/stafnet.py

@@ -0,0 +1,139 @@

+import multiprocessing

+

+import hydra

+from omegaconf import DictConfig

+

+import ppsci

+

+

+def train(cfg: DictConfig):

+ # set model

+ model = ppsci.arch.STAFNet(**cfg.MODEL)

+ train_dataloader_cfg = {

+ "dataset": {

+ "name": "STAFNetDataset",

+ "file_path": cfg.DATASET.data_dir,

+ "input_keys": cfg.MODEL.input_keys,

+ "label_keys": cfg.MODEL.output_keys,

+ "seq_len": cfg.MODEL.seq_len,

+ "pred_len": cfg.MODEL.pred_len,

+ },

+ "batch_size": cfg.TRAIN.batch_size,

+ "sampler": {

+ "name": "BatchSampler",

+ "drop_last": False,

+ "shuffle": True,

+ },

+ "num_workers": 0,

+ }

+ eval_dataloader_cfg = {

+ "dataset": {

+ "name": "STAFNetDataset",

+ "file_path": cfg.EVAL.eval_data_path,

+ "input_keys": cfg.MODEL.input_keys,

+ "label_keys": cfg.MODEL.output_keys,

+ "seq_len": cfg.MODEL.seq_len,

+ "pred_len": cfg.MODEL.pred_len,

+ },

+ "batch_size": cfg.TRAIN.batch_size,

+ "sampler": {

+ "name": "BatchSampler",

+ "drop_last": False,

+ "shuffle": False,

+ },

+ "num_workers": 0,

+ }

+

+ sup_constraint = ppsci.constraint.SupervisedConstraint(

+ train_dataloader_cfg,

+ loss=ppsci.loss.MSELoss("mean"),

+ name="STAFNet_Sup",

+ )

+ constraint = {sup_constraint.name: sup_constraint}

+ sup_validator = ppsci.validate.SupervisedValidator(

+ eval_dataloader_cfg,

+ loss=ppsci.loss.MSELoss("mean"),

+ metric={"MAE": ppsci.metric.MAE()},

+ name="Sup_Validator",

+ )

+ validator = {sup_validator.name: sup_validator}

+

+ # set optimizer

+ lr_scheduler = ppsci.optimizer.lr_scheduler.Step(**cfg.TRAIN.lr_scheduler)()

+ optimizer = ppsci.optimizer.Adam(lr_scheduler)(model)

+ ITERS_PER_EPOCH = len(sup_constraint.data_loader)

+

+ # initialize solver

+ solver = ppsci.solver.Solver(

+ model,

+ constraint,

+ cfg.output_dir,

+ optimizer,

+ lr_scheduler,

+ cfg.TRAIN.epochs,

+ ITERS_PER_EPOCH,

+ eval_during_train=cfg.TRAIN.eval_during_train,

+ seed=cfg.seed,

+ validator=validator,

+ compute_metric_by_batch=cfg.EVAL.compute_metric_by_batch,

+ eval_with_no_grad=cfg.EVAL.eval_with_no_grad,

+ )

+

+ # train model

+ solver.train()

+

+

+def evaluate(cfg: DictConfig):

+ model = ppsci.arch.STAFNet(**cfg.MODEL)

+ eval_dataloader_cfg = {

+ "dataset": {

+ "name": "STAFNetDataset",

+ "file_path": cfg.EVAL.eval_data_path,

+ "input_keys": cfg.MODEL.input_keys,

+ "label_keys": cfg.MODEL.output_keys,

+ "seq_len": cfg.MODEL.seq_len,

+ "pred_len": cfg.MODEL.pred_len,

+ },

+ "batch_size": cfg.TRAIN.batch_size,

+ "sampler": {

+ "name": "BatchSampler",

+ "drop_last": False,

+ "shuffle": False,

+ },

+ "num_workers": 0,

+ }

+ sup_validator = ppsci.validate.SupervisedValidator(

+ eval_dataloader_cfg,

+ loss=ppsci.loss.MSELoss("mean"),

+ metric={"MAE": ppsci.metric.MAE()},

+ name="Sup_Validator",

+ )

+ validator = {sup_validator.name: sup_validator}

+

+ # initialize solver

+ solver = ppsci.solver.Solver(

+ model,

+ validator=validator,

+ cfg=cfg,

+ pretrained_model_path=cfg.EVAL.pretrained_model_path,

+ compute_metric_by_batch=cfg.EVAL.compute_metric_by_batch,

+ eval_with_no_grad=cfg.EVAL.eval_with_no_grad,

+ )

+

+ # evaluate model

+ solver.eval()

+

+

+@hydra.main(version_base=None, config_path="./conf", config_name="stafnet.yaml")

+def main(cfg: DictConfig):

+ if cfg.mode == "train":

+ train(cfg)

+ elif cfg.mode == "eval":

+ evaluate(cfg)

+ else:

+ raise ValueError(f"cfg.mode should in ['train', 'eval'], but got '{cfg.mode}'")

+

+

+if __name__ == "__main__":

+ multiprocessing.set_start_method("spawn")

+ main()

diff --git a/jointContribution/DU_CNN/requirements.txt b/jointContribution/DU_CNN/requirements.txt

index df2b9a3181..5a07c6511b 100644

--- a/jointContribution/DU_CNN/requirements.txt

+++ b/jointContribution/DU_CNN/requirements.txt

@@ -1,7 +1,7 @@

+mat73

+matplotlib

numpy

+paddlepaddle-gpu

pyaml

-tqdm

-matplotlib

sklearn

-mat73

-paddlepaddle-gpu

+tqdm

diff --git a/jointContribution/IJCAI_2024/aminos/download_dataset.ipynb b/jointContribution/IJCAI_2024/aminos/download_dataset.ipynb

index 48aa6357f8..8e60d995ff 100644

--- a/jointContribution/IJCAI_2024/aminos/download_dataset.ipynb

+++ b/jointContribution/IJCAI_2024/aminos/download_dataset.ipynb

@@ -1,139 +1,138 @@

{

- "cells": [

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "kBRw5QHhBkax"

- },

- "source": [

- "# 数据集导入(预制链接)"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {},

- "outputs": [],

- "source": [

- "\n",

- "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1JwR0Q1ArTg6c47EF2ZuIBpQwCPgXKrO2&export=download&authuser=0&confirm=t&uuid=8ce890e0-0019-4e1e-ac63-14718948f612&at=APZUnTW-e7sn7C7k5UVU2BaxZPGT%3A1721020888524' -O dataset_1.zip\n",

- "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1izP72pHtoXpQvOV8WFCnh_LekzLunyG5&export=download&authuser=0&confirm=t&uuid=8e453e3d-84ac-4f51-9cbf-45d47cbdcc65&at=APZUnTVfJYZBQwnHawB72aq5MPvv%3A1721020973099' -O dataset_2.zip\n",

- "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1djT0tlmLBi15LYZG0dxci1RSjPI94sM8&export=download&authuser=0&confirm=t&uuid=4687dd5d-a001-47f2-bacd-e72d5c7361e4&at=APZUnTWWEM2OCtpaZNuS4UQjMzxc%3A1721021154071' -O dataset_3.zip\n"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "gaD7ugivEL2R"

- },

- "source": [

- "## 官方版本数据导入"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {},

- "outputs": [],

- "source": [

- "# Unzip dataset file\n",

- "import os\n",

- "import shutil\n",

- "\n",

- "# 指定新文件夹的名称\n",

- "new_folder_A = 'unziped/dataset_1'\n",

- "new_folder_B = 'unziped/dataset_2'\n",

- "new_folder_C = 'unziped/dataset_3'\n",

- "\n",

- "# 在当前目录下创建新文件夹\n",

- "if not os.path.exists(new_folder_A):\n",

- " os.makedirs(new_folder_A)\n",

- "if not os.path.exists(new_folder_B):\n",

- " os.makedirs(new_folder_B)\n",

- "if not os.path.exists(new_folder_B):\n",

- " os.makedirs(new_folder_B)\n",

- "\n",

- "# 使用!unzip命令将文件解压到新文件夹中\n",

- "!unzip dataset_1.zip -d {new_folder_A}\n",

- "!unzip dataset_2.zip -d {new_folder_B}\n",

- "!unzip dataset_3.zip -d {new_folder_C}\n"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "z0Sek0wtEs5n"

- },

- "source": [

- "## 百度Baseline版本数据导入"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "kY81z-fCgPfK"

- },

- "source": [

- "## 自定义导入(在下面代码块导入并解压您的数据集)"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "ITzT8s2wgZG0"

- },

- "source": [

- "下载我们自己的数据集"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {},

- "outputs": [],

- "source": [

- "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1lUV7mJaoWPfRks4DMhJgDCxTZwMr8LQI&export=download&authuser=0&confirm=t&uuid=fbd3c896-23e8-42e0-b09d-410cf3c91487&at=APZUnTXNoF-geyh6R_qbWipUdgeP%3A1721020120231' -O trackCtrain.zip\n",

- "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1imPyFjFqAj5WTT_K-lHe7_6lFJ4azJ1L&export=download&authuser=0&confirm=t&uuid=ca9d62d3-394a-4798-b2f3-03455a381cf0&at=APZUnTVrYJw4okYQJSrdDXLPqdSX%3A1721020169107' -O trackCtest.zip\n",

- "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1y23xCHepK-NamTm3OFCAy41RnYolCjzq&export=download&authuser=0' -O trackCsrc.zip"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {},

- "outputs": [],

- "source": [

- "!mkdir ./Datasets\n",

- "!unzip trackCtrain.zip -d ./Datasets\n",

- "!unzip trackCtest.zip -d ./Datasets\n",

- "!unzip trackCsrc.zip"

- ]

- }

- ],

- "metadata": {

- "accelerator": "GPU",

- "colab": {

- "gpuType": "T4",

- "machine_shape": "hm",

- "provenance": []

- },

- "kernelspec": {

- "display_name": "Python 3",

- "name": "python3"

- },

- "language_info": {

- "codemirror_mode": {

- "name": "ipython",

- "version": 3

- },

- "file_extension": ".py",

- "mimetype": "text/x-python",

- "name": "python",

- "nbconvert_exporter": "python",

- "pygments_lexer": "ipython3",

- "version": "3.10.14"

- }

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "kBRw5QHhBkax"

+ },

+ "source": [

+ "# 数据集导入(预制链接)"

+ ]

},

- "nbformat": 4,

- "nbformat_minor": 0

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "\n",

+ "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1JwR0Q1ArTg6c47EF2ZuIBpQwCPgXKrO2&export=download&authuser=0&confirm=t&uuid=8ce890e0-0019-4e1e-ac63-14718948f612&at=APZUnTW-e7sn7C7k5UVU2BaxZPGT%3A1721020888524' -O dataset_1.zip\n",

+ "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1izP72pHtoXpQvOV8WFCnh_LekzLunyG5&export=download&authuser=0&confirm=t&uuid=8e453e3d-84ac-4f51-9cbf-45d47cbdcc65&at=APZUnTVfJYZBQwnHawB72aq5MPvv%3A1721020973099' -O dataset_2.zip\n",

+ "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1djT0tlmLBi15LYZG0dxci1RSjPI94sM8&export=download&authuser=0&confirm=t&uuid=4687dd5d-a001-47f2-bacd-e72d5c7361e4&at=APZUnTWWEM2OCtpaZNuS4UQjMzxc%3A1721021154071' -O dataset_3.zip\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "gaD7ugivEL2R"

+ },

+ "source": [

+ "## 官方版本数据导入"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "# Unzip dataset file\n",

+ "import os\n",

+ "\n",

+ "# 指定新文件夹的名称\n",

+ "new_folder_A = 'unziped/dataset_1'\n",

+ "new_folder_B = 'unziped/dataset_2'\n",

+ "new_folder_C = 'unziped/dataset_3'\n",

+ "\n",

+ "# 在当前目录下创建新文件夹\n",

+ "if not os.path.exists(new_folder_A):\n",

+ " os.makedirs(new_folder_A)\n",

+ "if not os.path.exists(new_folder_B):\n",

+ " os.makedirs(new_folder_B)\n",

+ "if not os.path.exists(new_folder_B):\n",

+ " os.makedirs(new_folder_B)\n",

+ "\n",

+ "# 使用!unzip命令将文件解压到新文件夹中\n",

+ "!unzip dataset_1.zip -d {new_folder_A}\n",

+ "!unzip dataset_2.zip -d {new_folder_B}\n",

+ "!unzip dataset_3.zip -d {new_folder_C}\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "z0Sek0wtEs5n"

+ },

+ "source": [

+ "## 百度Baseline版本数据导入"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "kY81z-fCgPfK"

+ },

+ "source": [

+ "## 自定义导入(在下面代码块导入并解压您的数据集)"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "ITzT8s2wgZG0"

+ },

+ "source": [

+ "下载我们自己的数据集"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1lUV7mJaoWPfRks4DMhJgDCxTZwMr8LQI&export=download&authuser=0&confirm=t&uuid=fbd3c896-23e8-42e0-b09d-410cf3c91487&at=APZUnTXNoF-geyh6R_qbWipUdgeP%3A1721020120231' -O trackCtrain.zip\n",

+ "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1imPyFjFqAj5WTT_K-lHe7_6lFJ4azJ1L&export=download&authuser=0&confirm=t&uuid=ca9d62d3-394a-4798-b2f3-03455a381cf0&at=APZUnTVrYJw4okYQJSrdDXLPqdSX%3A1721020169107' -O trackCtest.zip\n",

+ "!wget --no-check-certificate 'https://drive.usercontent.google.com/download?id=1y23xCHepK-NamTm3OFCAy41RnYolCjzq&export=download&authuser=0' -O trackCsrc.zip"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {},

+ "outputs": [],

+ "source": [

+ "!mkdir ./Datasets\n",

+ "!unzip trackCtrain.zip -d ./Datasets\n",

+ "!unzip trackCtest.zip -d ./Datasets\n",

+ "!unzip trackCsrc.zip"

+ ]

+ }

+ ],

+ "metadata": {

+ "accelerator": "GPU",

+ "colab": {

+ "gpuType": "T4",

+ "machine_shape": "hm",

+ "provenance": []

+ },

+ "kernelspec": {

+ "display_name": "Python 3",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.10.14"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 0

}

diff --git a/jointContribution/PINO/PINO_paddle/configs/pretrain/Darcy-pretrain.yaml b/jointContribution/PINO/PINO_paddle/configs/pretrain/Darcy-pretrain.yaml

index 7ebe16071f..6c5ce1935e 100644

--- a/jointContribution/PINO/PINO_paddle/configs/pretrain/Darcy-pretrain.yaml

+++ b/jointContribution/PINO/PINO_paddle/configs/pretrain/Darcy-pretrain.yaml

@@ -30,5 +30,3 @@ log:

project: 'PINO-Darcy-pretrain'

group: 'gelu-pino'

entity: hzzheng-pino

-

-

diff --git a/jointContribution/PINO/PINO_paddle/configs/pretrain/burgers-pretrain.yaml b/jointContribution/PINO/PINO_paddle/configs/pretrain/burgers-pretrain.yaml

index 7870982f5f..1b7ae25d4c 100644

--- a/jointContribution/PINO/PINO_paddle/configs/pretrain/burgers-pretrain.yaml

+++ b/jointContribution/PINO/PINO_paddle/configs/pretrain/burgers-pretrain.yaml

@@ -33,4 +33,3 @@ log:

project: PINO-burgers-pretrain

group: gelu-eqn

entity: hzzheng-pino

-

diff --git a/jointContribution/PINO/PINO_paddle/configs/test/burgers.yaml b/jointContribution/PINO/PINO_paddle/configs/test/burgers.yaml

index 716c8847fe..3314ea9e28 100644

--- a/jointContribution/PINO/PINO_paddle/configs/test/burgers.yaml

+++ b/jointContribution/PINO/PINO_paddle/configs/test/burgers.yaml

@@ -23,5 +23,3 @@ test:

log:

project: 'PINO-burgers-test'

group: 'gelu-test'

-

-

diff --git a/jointContribution/PINO/PINO_paddle/configs/test/darcy.yaml b/jointContribution/PINO/PINO_paddle/configs/test/darcy.yaml

index 9822de6122..1aa19178fb 100644

--- a/jointContribution/PINO/PINO_paddle/configs/test/darcy.yaml

+++ b/jointContribution/PINO/PINO_paddle/configs/test/darcy.yaml

@@ -22,5 +22,3 @@ test:

log:

project: 'PINO-Darcy'

group: 'default'

-

-

diff --git a/jointContribution/PINO/PINO_paddle/download_data.py b/jointContribution/PINO/PINO_paddle/download_data.py

index 5651617381..32f41d9ad4 100644

--- a/jointContribution/PINO/PINO_paddle/download_data.py

+++ b/jointContribution/PINO/PINO_paddle/download_data.py

@@ -1,40 +1,44 @@

import os

from argparse import ArgumentParser

+

import requests

from tqdm import tqdm

_url_dict = {

- 'NS-T4000': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fft_Re500_T4000.npy',

- 'NS-Re500Part0': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fine_Re500_T128_part0.npy',

- 'NS-Re500Part1': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fine_Re500_T128_part1.npy',

- 'NS-Re500Part2': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fine_Re500_T128_part2.npy',

- 'NS-Re100Part0': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fine_Re100_T128_part0.npy',

- 'burgers': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/burgers_pino.mat',

- 'NS-Re500_T300_id0': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/NS-Re500_T300_id0.npy',

- 'NS-Re500_T300_id0-shuffle': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS-Re500_T300_id0-shuffle.npy',

- 'darcy-train': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/piececonst_r421_N1024_smooth1.mat',

- 'Re500-1_8s-800-pino-140k': 'https://hkzdata.s3.us-west-2.amazonaws.com/PINO/checkpoints/Re500-1_8s-800-PINO-140000.pt',

+ "NS-T4000": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fft_Re500_T4000.npy",

+ "NS-Re500Part0": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fine_Re500_T128_part0.npy",

+ "NS-Re500Part1": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fine_Re500_T128_part1.npy",

+ "NS-Re500Part2": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fine_Re500_T128_part2.npy",

+ "NS-Re100Part0": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS_fine_Re100_T128_part0.npy",

+ "burgers": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/burgers_pino.mat",

+ "NS-Re500_T300_id0": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/NS-Re500_T300_id0.npy",

+ "NS-Re500_T300_id0-shuffle": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/NS-Re500_T300_id0-shuffle.npy",

+ "darcy-train": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/data/piececonst_r421_N1024_smooth1.mat",

+ "Re500-1_8s-800-pino-140k": "https://hkzdata.s3.us-west-2.amazonaws.com/PINO/checkpoints/Re500-1_8s-800-PINO-140000.pt",

}

+

def download_file(url, file_path):

- print('Start downloading...')

+ print("Start downloading...")

with requests.get(url, stream=True) as r:

r.raise_for_status()

- with open(file_path, 'wb') as f:

+ with open(file_path, "wb") as f:

for chunk in tqdm(r.iter_content(chunk_size=256 * 1024 * 1024)):

f.write(chunk)

- print('Complete')

+ print("Complete")

+

def main(args):

url = _url_dict[args.name]

- file_name = url.split('/')[-1]

+ file_name = url.split("/")[-1]

os.makedirs(args.outdir, exist_ok=True)

file_path = os.path.join(args.outdir, file_name)

download_file(url, file_path)

-if __name__ == '__main__':

- parser = ArgumentParser(description='Parser for downloading assets')

- parser.add_argument('--name', type=str, default='NS-T4000')

- parser.add_argument('--outdir', type=str, default='../data')

+

+if __name__ == "__main__":

+ parser = ArgumentParser(description="Parser for downloading assets")

+ parser.add_argument("--name", type=str, default="NS-T4000")

+ parser.add_argument("--outdir", type=str, default="../data")

args = parser.parse_args()

- main(args)

\ No newline at end of file

+ main(args)

diff --git a/jointContribution/PINO/PINO_paddle/eval_operator.py b/jointContribution/PINO/PINO_paddle/eval_operator.py

index 40991f86c9..6996381302 100644

--- a/jointContribution/PINO/PINO_paddle/eval_operator.py

+++ b/jointContribution/PINO/PINO_paddle/eval_operator.py

@@ -1,78 +1,94 @@

-import yaml

+from argparse import ArgumentParser

import paddle

+import yaml

+from models import FNO2d

+from models import FNO3d

from paddle.io import DataLoader

-from models import FNO3d, FNO2d

-from train_utils import NSLoader, get_forcing, DarcyFlow

-

-from train_utils.eval_3d import eval_ns

+from train_utils import DarcyFlow

+from train_utils import NSLoader

+from train_utils import get_forcing

from train_utils.eval_2d import eval_darcy

+from train_utils.eval_3d import eval_ns

-from argparse import ArgumentParser

def test_3d(config):

- device = 0 if paddle.cuda.is_available() else 'cpu'

- data_config = config['data']

- loader = NSLoader(datapath1=data_config['datapath'],

- nx=data_config['nx'], nt=data_config['nt'],

- sub=data_config['sub'], sub_t=data_config['sub_t'],

- N=data_config['total_num'],

- t_interval=data_config['time_interval'])

+ device = 0 if paddle.cuda.is_available() else "cpu"

+ data_config = config["data"]

+ loader = NSLoader(

+ datapath1=data_config["datapath"],

+ nx=data_config["nx"],

+ nt=data_config["nt"],

+ sub=data_config["sub"],

+ sub_t=data_config["sub_t"],

+ N=data_config["total_num"],

+ t_interval=data_config["time_interval"],

+ )

- eval_loader = loader.make_loader(n_sample=data_config['n_sample'],

- batch_size=config['test']['batchsize'],

- start=data_config['offset'],

- train=data_config['shuffle'])

- model = FNO3d(modes1=config['model']['modes1'],

- modes2=config['model']['modes2'],

- modes3=config['model']['modes3'],

- fc_dim=config['model']['fc_dim'],

- layers=config['model']['layers']).to(device)

+ eval_loader = loader.make_loader(

+ n_sample=data_config["n_sample"],

+ batch_size=config["test"]["batchsize"],

+ start=data_config["offset"],

+ train=data_config["shuffle"],

+ )

+ model = FNO3d(

+ modes1=config["model"]["modes1"],

+ modes2=config["model"]["modes2"],

+ modes3=config["model"]["modes3"],

+ fc_dim=config["model"]["fc_dim"],

+ layers=config["model"]["layers"],

+ ).to(device)

- if 'ckpt' in config['test']:

- ckpt_path = config['test']['ckpt']

+ if "ckpt" in config["test"]:

+ ckpt_path = config["test"]["ckpt"]

ckpt = paddle.load(ckpt_path)

- model.load_state_dict(ckpt['model'])

- print('Weights loaded from %s' % ckpt_path)

- print(f'Resolution : {loader.S}x{loader.S}x{loader.T}')

+ model.load_state_dict(ckpt["model"])

+ print("Weights loaded from %s" % ckpt_path)

+ print(f"Resolution : {loader.S}x{loader.S}x{loader.T}")

forcing = get_forcing(loader.S).to(device)

- eval_ns(model,

- loader,

- eval_loader,

- forcing,

- config,

- device=device)

+ eval_ns(model, loader, eval_loader, forcing, config, device=device)

+

def test_2d(config):

- data_config = config['data']

- dataset = DarcyFlow(data_config['datapath'],

- nx=data_config['nx'], sub=data_config['sub'],

- offset=data_config['offset'], num=data_config['n_sample'])

- dataloader = DataLoader(dataset, batch_size=config['test']['batchsize'], shuffle=False)

- model = FNO2d(modes1=config['model']['modes1'],

- modes2=config['model']['modes2'],

- fc_dim=config['model']['fc_dim'],

- layers=config['model']['layers'],

- act=config['model']['act'])

+ data_config = config["data"]

+ dataset = DarcyFlow(

+ data_config["datapath"],

+ nx=data_config["nx"],

+ sub=data_config["sub"],

+ offset=data_config["offset"],

+ num=data_config["n_sample"],

+ )

+ dataloader = DataLoader(

+ dataset, batch_size=config["test"]["batchsize"], shuffle=False

+ )

+ model = FNO2d(

+ modes1=config["model"]["modes1"],

+ modes2=config["model"]["modes2"],

+ fc_dim=config["model"]["fc_dim"],

+ layers=config["model"]["layers"],

+ act=config["model"]["act"],

+ )

# Load from checkpoint

- if 'ckpt' in config['test']:

- ckpt_path = config['test']['ckpt']

+ if "ckpt" in config["test"]:

+ ckpt_path = config["test"]["ckpt"]

ckpt = paddle.load(ckpt_path)

- model.set_dict(ckpt['model'])

- print('Weights loaded from %s' % ckpt_path)

+ model.set_dict(ckpt["model"])

+ print("Weights loaded from %s" % ckpt_path)

eval_darcy(model, dataloader, config)

-if __name__ == '__main__':

- parser = ArgumentParser(description='Basic paser')

- parser.add_argument('--config_path', type=str, help='Path to the configuration file')

- parser.add_argument('--log', action='store_true', help='Turn on the wandb')

+

+if __name__ == "__main__":

+ parser = ArgumentParser(description="Basic paser")

+ parser.add_argument(

+ "--config_path", type=str, help="Path to the configuration file"

+ )

+ parser.add_argument("--log", action="store_true", help="Turn on the wandb")

options = parser.parse_args()

config_file = options.config_path

- with open(config_file, 'r') as stream:

+ with open(config_file, "r") as stream:

config = yaml.load(stream, yaml.FullLoader)

- if 'name' in config['data'] and config['data']['name'] == 'Darcy':

+ if "name" in config["data"] and config["data"]["name"] == "Darcy":

test_2d(config)

else:

test_3d(config)

-

diff --git a/jointContribution/PINO/PINO_paddle/models/FCN.py b/jointContribution/PINO/PINO_paddle/models/FCN.py

index d429d0f43f..7435c76eca 100644

--- a/jointContribution/PINO/PINO_paddle/models/FCN.py

+++ b/jointContribution/PINO/PINO_paddle/models/FCN.py

@@ -1,29 +1,31 @@

import paddle.nn as nn

+

def linear_block(in_channel, out_channel):

- block = nn.Sequential(

- nn.Linear(in_channel, out_channel),

- nn.Tanh()

- )

+ block = nn.Sequential(nn.Linear(in_channel, out_channel), nn.Tanh())

return block

+

class FCNet(nn.Layer):

- '''

+ """

Fully connected layers with Tanh as nonlinearity

Reproduced from PINNs Burger equation

- '''

+ """

def __init__(self, layers=[2, 10, 1]):

super(FCNet, self).__init__()

- fc_list = [linear_block(in_size, out_size)

- for in_size, out_size in zip(layers, layers[1:-1])]

+ fc_list = [

+ linear_block(in_size, out_size)

+ for in_size, out_size in zip(layers, layers[1:-1])

+ ]

fc_list.append(nn.Linear(layers[-2], layers[-1]))

self.fc = nn.Sequential(*fc_list)

def forward(self, x):

return self.fc(x)

+

class DenseNet(nn.Layer):

def __init__(self, layers, nonlinearity, out_nonlinearity=None, normalize=False):

super(DenseNet, self).__init__()

@@ -31,20 +33,20 @@ def __init__(self, layers, nonlinearity, out_nonlinearity=None, normalize=False)

self.n_layers = len(layers) - 1

assert self.n_layers >= 1

if isinstance(nonlinearity, str):

- if nonlinearity == 'tanh':

+ if nonlinearity == "tanh":

nonlinearity = nn.Tanh

- elif nonlinearity == 'relu':

+ elif nonlinearity == "relu":

nonlinearity == nn.ReLU

else:

- raise ValueError(f'{nonlinearity} is not supported')

+ raise ValueError(f"{nonlinearity} is not supported")

self.layers = nn.ModuleList()

for j in range(self.n_layers):

- self.layers.append(nn.Linear(layers[j], layers[j+1]))

+ self.layers.append(nn.Linear(layers[j], layers[j + 1]))

if j != self.n_layers - 1:

if normalize:

- self.layers.append(nn.BatchNorm1d(layers[j+1]))

+ self.layers.append(nn.BatchNorm1d(layers[j + 1]))

self.layers.append(nonlinearity())

@@ -56,4 +58,3 @@ def forward(self, x):

x = l(x)

return x

-

diff --git a/jointContribution/PINO/PINO_paddle/models/FNO_blocks.py b/jointContribution/PINO/PINO_paddle/models/FNO_blocks.py

index c158f4f217..86ff9a8241 100644

--- a/jointContribution/PINO/PINO_paddle/models/FNO_blocks.py

+++ b/jointContribution/PINO/PINO_paddle/models/FNO_blocks.py

@@ -1,9 +1,11 @@

+import itertools

+

import paddle

import paddle.nn as nn

-import itertools

einsum_symbols = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ"

+

def _contract_dense(x, weight, separable=False):

# order = tl.ndim(x)

order = len(x.shape)

@@ -28,6 +30,7 @@ def _contract_dense(x, weight, separable=False):

return paddle.einsum(eq, x, weight)

+

def _contract_dense_trick(x, weight, separable=False):

# the same as above function, but do the complex multiplication manually to avoid the einsum bug in paddle

weight_real = weight.data.real()

@@ -59,11 +62,13 @@ def _contract_dense_trick(x, weight, separable=False):

x = paddle.complex(o1_real, o1_imag)

return x

+

def _contract_dense_separable(x, weight, separable=True):

- if separable == False:

+ if not separable:

raise ValueError("This function is only for separable=True")

return x * weight

+

def _contract_cp(x, cp_weight, separable=False):

# order = tl.ndim(x)

order = len(x.shape)

@@ -84,6 +89,7 @@ def _contract_cp(x, cp_weight, separable=False):

return paddle.einsum(eq, x, cp_weight.weights, *cp_weight.factors)

+

def _contract_tucker(x, tucker_weight, separable=False):

# order = tl.ndim(x)

order = len(x.shape)

@@ -118,6 +124,7 @@ def _contract_tucker(x, tucker_weight, separable=False):

print(eq) # 'abcd,fghi,bf,eg,ch,di->aecd'

return paddle.einsum(eq, x, tucker_weight.core, *tucker_weight.factors)

+

def _contract_tt(x, tt_weight, separable=False):

# order = tl.ndim(x)

order = len(x.shape)

@@ -144,16 +151,17 @@ def _contract_tt(x, tt_weight, separable=False):

return paddle.einsum(eq, x, *tt_weight.factors)

+

def get_contract_fun(weight, implementation="reconstructed", separable=False):

"""Generic ND implementation of Fourier Spectral Conv contraction

-

+

Parameters

----------

weight : tensorl-paddle's FactorizedTensor

implementation : {'reconstructed', 'factorized'}, default is 'reconstructed'

whether to reconstruct the weight and do a forward pass (reconstructed)

or contract directly the factors of the factorized weight with the input (factorized)

-

+

Returns

-------

function : (x, weight) -> x * weight in Fourier space

@@ -176,6 +184,7 @@ def get_contract_fun(weight, implementation="reconstructed", separable=False):

f'Got implementation = {implementation}, expected "reconstructed" or "factorized"'

)

+

class FactorizedTensor(nn.Layer):

def __init__(self, shape, init_scale):

super().__init__()

@@ -195,6 +204,7 @@ def __repr__(self):

def data(self):

return paddle.complex(self.real, self.imag)

+

class FactorizedSpectralConv(nn.Layer):

"""Generic N-Dimensional Fourier Neural Operator

@@ -258,7 +268,7 @@ def __init__(

if isinstance(n_modes, int):

n_modes = [n_modes]

self.n_modes = n_modes

- half_modes = [m // 2 for m in n_modes]

+ [m // 2 for m in n_modes]

self.half_modes = n_modes

self.rank = rank

@@ -324,14 +334,14 @@ def __init__(

def forward(self, x, indices=0):

"""Generic forward pass for the Factorized Spectral Conv

-

- Parameters

+

+ Parameters

----------

x : paddle.Tensor

input activation of size (batch_size, channels, d1, ..., dN)

indices : int, default is 0

if joint_factorization, index of the layers for n_layers > 1

-

+

Returns

-------

tensorized_spectral_conv(x)

@@ -348,7 +358,8 @@ def forward(self, x, indices=0):

x = paddle.fft.rfftn(x_float, norm=self.fft_norm, axes=fft_dims)

out_fft = paddle.zeros(

- [batchsize, self.out_channels, *fft_size], dtype=paddle.complex64,

+ [batchsize, self.out_channels, *fft_size],

+ dtype=paddle.complex64,

) # [1,32,16,9], all zeros, complex

# We contract all corners of the Fourier coefs

@@ -402,13 +413,14 @@ def get_conv(self, indices):

def __getitem__(self, indices):

return self.get_conv(indices)

+

class SubConv2d(nn.Layer):

"""Class representing one of the convolutions from the mother joint factorized convolution

Notes

-----

This relies on the fact that nn.Parameters are not duplicated:

- if the same nn.Parameter is assigned to multiple modules, they all point to the same data,

+ if the same nn.Parameter is assigned to multiple modules, they all point to the same data,

which is shared.

"""

@@ -420,6 +432,7 @@ def __init__(self, main_conv, indices):

def forward(self, x):

return self.main_conv.forward(x, self.indices)

+

class FactorizedSpectralConv1d(FactorizedSpectralConv):

def __init__(

self,

@@ -462,7 +475,8 @@ def forward(self, x, indices=0):

x = paddle.fft.rfft(x, norm=self.fft_norm)

out_fft = paddle.zeros(

- [batchsize, self.out_channels, width // 2 + 1], dtype=paddle.complex64,

+ [batchsize, self.out_channels, width // 2 + 1],

+ dtype=paddle.complex64,

)

out_fft[:, :, : self.half_modes_height] = self._contract(

x[:, :, : self.half_modes_height],

@@ -477,6 +491,7 @@ def forward(self, x, indices=0):

return x

+

class FactorizedSpectralConv2d(FactorizedSpectralConv):

def __init__(

self,

@@ -522,7 +537,8 @@ def forward(self, x, indices=0):

# The output will be of size (batch_size, self.out_channels, x.size(-2), x.size(-1)//2 + 1)

out_fft = paddle.zeros(

- [batchsize, self.out_channels, height, width // 2 + 1], dtype=x.dtype,

+ [batchsize, self.out_channels, height, width // 2 + 1],

+ dtype=x.dtype,

)

# upper block (truncate high freq)

@@ -551,6 +567,7 @@ def forward(self, x, indices=0):

return x

+

class FactorizedSpectralConv3d(FactorizedSpectralConv):

def __init__(

self,

@@ -679,6 +696,7 @@ def forward(self, x, indices=0):

return x

+

if __name__ == "__main__":

# let x be a complex tensor of size (32, 32, 8, 8)

x = paddle.randn([32, 32, 8, 8]).astype("complex64")

diff --git a/jointContribution/PINO/PINO_paddle/models/basics.py b/jointContribution/PINO/PINO_paddle/models/basics.py

index 8a8fea46f3..73bfdd57b1 100644

--- a/jointContribution/PINO/PINO_paddle/models/basics.py

+++ b/jointContribution/PINO/PINO_paddle/models/basics.py

@@ -1,33 +1,36 @@

-import numpy as np

-

import paddle

import paddle.nn as nn

import paddle.nn.initializer as Initializer

+

def compl_mul1d(a: paddle.Tensor, b: paddle.Tensor) -> paddle.Tensor:

# (batch, in_channel, x ), (in_channel, out_channel, x) -> (batch, out_channel, x)

res = paddle.einsum("bix,iox->box", a, b)

return res

+

def compl_mul2d(a: paddle.Tensor, b: paddle.Tensor) -> paddle.Tensor:

# (batch, in_channel, x,y,t ), (in_channel, out_channel, x,y,t) -> (batch, out_channel, x,y,t)

- res = paddle.einsum("bixy,ioxy->boxy", a, b)

+ res = paddle.einsum("bixy,ioxy->boxy", a, b)

return res

+

def compl_mul3d(a: paddle.Tensor, b: paddle.Tensor) -> paddle.Tensor:

res = paddle.einsum("bixyz,ioxyz->boxyz", a, b)

return res

+

################################################################

# 1d fourier layer

################################################################

+

class SpectralConv1d(nn.Layer):

def __init__(self, in_channels, out_channels, modes1):

super(SpectralConv1d, self).__init__()

"""

- 1D Fourier layer. It does FFT, linear transform, and Inverse FFT.

+ 1D Fourier layer. It does FFT, linear transform, and Inverse FFT.

"""

self.in_channels = in_channels

@@ -35,8 +38,14 @@ def __init__(self, in_channels, out_channels, modes1):

# Number of Fourier modes to multiply, at most floor(N/2) + 1

self.modes1 = modes1

- self.scale = (1 / (in_channels*out_channels))

- self.weights1 = paddle.create_parameter(shape=(in_channels, out_channels), dtype=paddle.complex64, attr=Initializer.Assign(self.scale * paddle.rand(in_channels, out_channels, self.modes1)))

+ self.scale = 1 / (in_channels * out_channels)

+ self.weights1 = paddle.create_parameter(

+ shape=(in_channels, out_channels),

+ dtype=paddle.complex64,

+ attr=Initializer.Assign(

+ self.scale * paddle.rand(in_channels, out_channels, self.modes1)

+ ),

+ )

def forward(self, x):

batchsize = x.shape[0]

@@ -44,17 +53,23 @@ def forward(self, x):

x_ft = paddle.fft.rfftn(x, dim=[2])

# Multiply relevant Fourier modes

- out_ft = paddle.zeros(batchsize, self.in_channels, x.shape[-1]//2 + 1, dtype=paddle.complex64)

- out_ft[:, :, :self.modes1] = compl_mul1d(x_ft[:, :, :self.modes1], self.weights1)

+ out_ft = paddle.zeros(

+ batchsize, self.in_channels, x.shape[-1] // 2 + 1, dtype=paddle.complex64

+ )

+ out_ft[:, :, : self.modes1] = compl_mul1d(

+ x_ft[:, :, : self.modes1], self.weights1

+ )

# Return to physical space

x = paddle.fft.irfftn(out_ft, s=[x.shape[-1]], axes=[2])

return x

+

################################################################

# 2d fourier layer

################################################################

+

class SpectralConv2d(nn.Layer):

def __init__(self, in_channels, out_channels, modes1, modes2):

super(SpectralConv2d, self).__init__()

@@ -64,102 +79,212 @@ def __init__(self, in_channels, out_channels, modes1, modes2):

self.modes1 = modes1

self.modes2 = modes2

- self.scale = (1 / (in_channels * out_channels))

- weights1_attr = Initializer.Assign(self.scale * paddle.rand([in_channels, out_channels, self.modes1, self.modes2], dtype=paddle.float32))

- self.weights1_real = paddle.create_parameter(shape=(in_channels, out_channels), dtype=paddle.float32, attr=weights1_attr)

- self.weights1_imag = paddle.create_parameter(shape=(in_channels, out_channels), dtype=paddle.float32, attr=weights1_attr)

+ self.scale = 1 / (in_channels * out_channels)

+ weights1_attr = Initializer.Assign(

+ self.scale

+ * paddle.rand(

+ [in_channels, out_channels, self.modes1, self.modes2],

+ dtype=paddle.float32,

+ )

+ )

+ self.weights1_real = paddle.create_parameter(

+ shape=(in_channels, out_channels), dtype=paddle.float32, attr=weights1_attr

+ )

+ self.weights1_imag = paddle.create_parameter(

+ shape=(in_channels, out_channels), dtype=paddle.float32, attr=weights1_attr

+ )

self.weights1 = paddle.concat([self.weights1_real, self.weights1_imag], axis=0)

- self.weights2 = paddle.create_parameter(shape=(in_channels, out_channels), dtype=paddle.float32, attr=Initializer.Assign(self.scale * paddle.rand([in_channels, out_channels, self.modes1, self.modes2], dtype=paddle.float32)))

+ self.weights2 = paddle.create_parameter(

+ shape=(in_channels, out_channels),

+ dtype=paddle.float32,

+ attr=Initializer.Assign(

+ self.scale

+ * paddle.rand(

+ [in_channels, out_channels, self.modes1, self.modes2],

+ dtype=paddle.float32,

+ )

+ ),

+ )

def forward(self, x):

batchsize = x.shape[0]

- size1 = x.shape[-2]

- size2 = x.shape[-1]

+ x.shape[-2]

+ x.shape[-1]

# Compute Fourier coeffcients up to factor of e^(- something constant)

x_ft = paddle.fft.rfftn(x, axes=[2, 3])

# Multiply relevant Fourier modes

- out_ft = paddle.zeros([batchsize, self.out_channels, x.shape[-2], x.shape[-1] // 2 + 1],

- dtype=paddle.float32)

- out_ft[:, :, :self.modes1, :self.modes2] = \

- compl_mul2d(x_ft[:, :, :self.modes1, :self.modes2], self.weights1)

- out_ft[:, :, -self.modes1:, :self.modes2] = \

- compl_mul2d(x_ft[:, :, -self.modes1:, :self.modes2], self.weights2)

+ out_ft = paddle.zeros(

+ [batchsize, self.out_channels, x.shape[-2], x.shape[-1] // 2 + 1],

+ dtype=paddle.float32,

+ )

+ out_ft[:, :, : self.modes1, : self.modes2] = compl_mul2d(

+ x_ft[:, :, : self.modes1, : self.modes2], self.weights1

+ )

+ out_ft[:, :, -self.modes1 :, : self.modes2] = compl_mul2d(

+ x_ft[:, :, -self.modes1 :, : self.modes2], self.weights2

+ )

# Return to physical space

x = paddle.fft.irfftn(out_ft, s=(x.shape[-2], x.shape[-1]), axes=[2, 3])

return x

+

class SpectralConv3d(nn.Layer):

def __init__(self, in_channels, out_channels, modes1, modes2, modes3):

super(SpectralConv3d, self).__init__()

self.in_channels = in_channels

self.out_channels = out_channels

- self.modes1 = modes1 #Number of Fourier modes to multiply, at most floor(N/2) + 1

+ self.modes1 = (

+ modes1 # Number of Fourier modes to multiply, at most floor(N/2) + 1

+ )

self.modes2 = modes2

self.modes3 = modes3

- self.scale = (1 / (in_channels * out_channels))

- self.weights1 = paddle.create_parameter(shape=(in_channels, out_channels), dtype=paddle.complex64, attr=Initializer.Assign(self.scale * paddle.rand(in_channels, out_channels, self.modes1, self.modes2, self.modes3)))

- self.weights2 = paddle.create_parameter(shape=(in_channels, out_channels), dtype=paddle.complex64, attr=Initializer.Assign(self.scale * paddle.rand(in_channels, out_channels, self.modes1, self.modes2, self.modes3)))

- self.weights3 = paddle.create_parameter(shape=(in_channels, out_channels), dtype=paddle.complex64, attr=Initializer.Assign(self.scale * paddle.rand(in_channels, out_channels, self.modes1, self.modes2, self.modes3)))

- self.weights4 = paddle.create_parameter(shape=(in_channels, out_channels), dtype=paddle.complex64, attr=Initializer.Assign(self.scale * paddle.rand(in_channels, out_channels, self.modes1, self.modes2, self.modes3)))

+ self.scale = 1 / (in_channels * out_channels)

+ self.weights1 = paddle.create_parameter(

+ shape=(in_channels, out_channels),

+ dtype=paddle.complex64,

+ attr=Initializer.Assign(

+ self.scale

+ * paddle.rand(

+ in_channels, out_channels, self.modes1, self.modes2, self.modes3

+ )

+ ),

+ )

+ self.weights2 = paddle.create_parameter(

+ shape=(in_channels, out_channels),

+ dtype=paddle.complex64,

+ attr=Initializer.Assign(

+ self.scale

+ * paddle.rand(

+ in_channels, out_channels, self.modes1, self.modes2, self.modes3

+ )

+ ),

+ )

+ self.weights3 = paddle.create_parameter(

+ shape=(in_channels, out_channels),

+ dtype=paddle.complex64,

+ attr=Initializer.Assign(

+ self.scale

+ * paddle.rand(

+ in_channels, out_channels, self.modes1, self.modes2, self.modes3

+ )

+ ),

+ )

+ self.weights4 = paddle.create_parameter(

+ shape=(in_channels, out_channels),

+ dtype=paddle.complex64,

+ attr=Initializer.Assign(

+ self.scale

+ * paddle.rand(

+ in_channels, out_channels, self.modes1, self.modes2, self.modes3

+ )

+ ),

+ )

def forward(self, x):

batchsize = x.shape[0]

# Compute Fourier coeffcients up to factor of e^(- something constant)

- x_ft = paddle.fft.rfftn(x, dim=[2,3,4])

-

+ x_ft = paddle.fft.rfftn(x, dim=[2, 3, 4])

+

z_dim = min(x_ft.shape[4], self.modes3)

-

+

# Multiply relevant Fourier modes

- out_ft = paddle.zeros(batchsize, self.out_channels, x_ft.shape[2], x_ft.shape[3], self.modes3, dtype=paddle.complex64)

-

- # if x_ft.shape[4] > self.modes3, truncate; if x_ft.shape[4] < self.modes3, add zero padding

- coeff = paddle.zeros(batchsize, self.in_channels, self.modes1, self.modes2, self.modes3, dtype=paddle.complex64)

- coeff[..., :z_dim] = x_ft[:, :, :self.modes1, :self.modes2, :z_dim]

- out_ft[:, :, :self.modes1, :self.modes2, :] = compl_mul3d(coeff, self.weights1)

-

- coeff = paddle.zeros(batchsize, self.in_channels, self.modes1, self.modes2, self.modes3, dtype=paddle.complex64)

- coeff[..., :z_dim] = x_ft[:, :, -self.modes1:, :self.modes2, :z_dim]

- out_ft[:, :, -self.modes1:, :self.modes2, :] = compl_mul3d(coeff, self.weights2)

-

- coeff = paddle.zeros(batchsize, self.in_channels, self.modes1, self.modes2, self.modes3, dtype=paddle.complex64)

- coeff[..., :z_dim] = x_ft[:, :, :self.modes1, -self.modes2:, :z_dim]

- out_ft[:, :, :self.modes1, -self.modes2:, :] = compl_mul3d(coeff, self.weights3)

-

- coeff = paddle.zeros(batchsize, self.in_channels, self.modes1, self.modes2, self.modes3, dtype=paddle.complex64)

- coeff[..., :z_dim] = x_ft[:, :, -self.modes1:, -self.modes2:, :z_dim]

- out_ft[:, :, -self.modes1:, -self.modes2:, :] = compl_mul3d(coeff, self.weights4)

-

- #Return to physical space

- x = paddle.fft.irfftn(out_ft, s=(x.shape[2], x.shape[3], x.shape[4]), axes=[2,3,4])

+ out_ft = paddle.zeros(

+ batchsize,

+ self.out_channels,

+ x_ft.shape[2],

+ x_ft.shape[3],

+ self.modes3,

+ dtype=paddle.complex64,

+ )

+

+ # if x_ft.shape[4] > self.modes3, truncate; if x_ft.shape[4] < self.modes3, add zero padding

+ coeff = paddle.zeros(

+ batchsize,

+ self.in_channels,

+ self.modes1,

+ self.modes2,

+ self.modes3,

+ dtype=paddle.complex64,

+ )

+ coeff[..., :z_dim] = x_ft[:, :, : self.modes1, : self.modes2, :z_dim]

+ out_ft[:, :, : self.modes1, : self.modes2, :] = compl_mul3d(

+ coeff, self.weights1

+ )

+

+ coeff = paddle.zeros(

+ batchsize,

+ self.in_channels,

+ self.modes1,

+ self.modes2,

+ self.modes3,

+ dtype=paddle.complex64,

+ )

+ coeff[..., :z_dim] = x_ft[:, :, -self.modes1 :, : self.modes2, :z_dim]

+ out_ft[:, :, -self.modes1 :, : self.modes2, :] = compl_mul3d(

+ coeff, self.weights2

+ )

+

+ coeff = paddle.zeros(

+ batchsize,

+ self.in_channels,

+ self.modes1,

+ self.modes2,

+ self.modes3,

+ dtype=paddle.complex64,

+ )

+ coeff[..., :z_dim] = x_ft[:, :, : self.modes1, -self.modes2 :, :z_dim]

+ out_ft[:, :, : self.modes1, -self.modes2 :, :] = compl_mul3d(

+ coeff, self.weights3

+ )

+

+ coeff = paddle.zeros(

+ batchsize,

+ self.in_channels,

+ self.modes1,

+ self.modes2,

+ self.modes3,

+ dtype=paddle.complex64,

+ )

+ coeff[..., :z_dim] = x_ft[:, :, -self.modes1 :, -self.modes2 :, :z_dim]

+ out_ft[:, :, -self.modes1 :, -self.modes2 :, :] = compl_mul3d(

+ coeff, self.weights4

+ )

+

+ # Return to physical space

+ x = paddle.fft.irfftn(

+ out_ft, s=(x.shape[2], x.shape[3], x.shape[4]), axes=[2, 3, 4]

+ )

return x

+

class FourierBlock(nn.Layer):

- def __init__(self, in_channels, out_channels, modes1, modes2, modes3, act='tanh'):

+ def __init__(self, in_channels, out_channels, modes1, modes2, modes3, act="tanh"):

super(FourierBlock, self).__init__()

self.in_channel = in_channels

self.out_channel = out_channels

self.speconv = SpectralConv3d(in_channels, out_channels, modes1, modes2, modes3)

self.linear = nn.Conv1D(in_channels, out_channels, 1)

- if act == 'tanh':

+ if act == "tanh":

self.act = paddle.tanh_

- elif act == 'gelu':

+ elif act == "gelu":

self.act = nn.GELU

- elif act == 'none':

+ elif act == "none":

self.act = None

else:

- raise ValueError(f'{act} is not supported')

+ raise ValueError(f"{act} is not supported")

def forward(self, x):

- '''

+ """

input x: (batchsize, channel width, x_grid, y_grid, t_grid)

- '''

+ """

x1 = self.speconv(x)

x2 = self.linear(x.reshape(x.shape[0], self.in_channel, -1))

- out = x1 + x2.reshape(x.shape[0], self.out_channel, x.shape[2], x.shape[3], x.shape[4])

+ out = x1 + x2.reshape(

+ x.shape[0], self.out_channel, x.shape[2], x.shape[3], x.shape[4]

+ )

if self.act is not None:

out = self.act(out)

return out

-

diff --git a/jointContribution/PINO/PINO_paddle/models/fourier2d.py b/jointContribution/PINO/PINO_paddle/models/fourier2d.py

index 327369aed1..33126970a9 100644

--- a/jointContribution/PINO/PINO_paddle/models/fourier2d.py

+++ b/jointContribution/PINO/PINO_paddle/models/fourier2d.py

@@ -1,34 +1,43 @@

import paddle.nn as nn

-from .basics import SpectralConv2d

+

from .FNO_blocks import FactorizedSpectralConv2d

-from .utils import _get_act, add_padding2, remove_padding2

+from .utils import _get_act

+from .utils import add_padding2

+from .utils import remove_padding2

+

class FNO2d(nn.Layer):

- def __init__(self, modes1, modes2,

- width=64, fc_dim=128,

- layers=None,

- in_dim=3, out_dim=1,

- act='gelu',

- pad_ratio=[0., 0.]):

+ def __init__(

+ self,

+ modes1,

+ modes2,

+ width=64,

+ fc_dim=128,

+ layers=None,

+ in_dim=3,

+ out_dim=1,

+ act="gelu",

+ pad_ratio=[0.0, 0.0],

+ ):

super(FNO2d, self).__init__()

"""

Args:

- modes1: list of int, number of modes in first dimension in each layer

- modes2: list of int, number of modes in second dimension in each layer

- - width: int, optional, if layers is None, it will be initialized as [width] * [len(modes1) + 1]

+ - width: int, optional, if layers is None, it will be initialized as [width] * [len(modes1) + 1]

- in_dim: number of input channels

- out_dim: number of output channels

- act: activation function, {tanh, gelu, relu, leaky_relu}, default: gelu

- - pad_ratio: list of float, or float; portion of domain to be extended. If float, paddings are added to the right.

- If list, paddings are added to both sides. pad_ratio[0] pads left, pad_ratio[1] pads right.

+ - pad_ratio: list of float, or float; portion of domain to be extended. If float, paddings are added to the right.

+ If list, paddings are added to both sides. pad_ratio[0] pads left, pad_ratio[1] pads right.

"""

if isinstance(pad_ratio, float):

pad_ratio = [pad_ratio, pad_ratio]

else:

- assert len(pad_ratio) == 2, 'Cannot add padding in more than 2 directions'

+ assert len(pad_ratio) == 2, "Cannot add padding in more than 2 directions"

self.modes1 = modes1

self.modes2 = modes2

-

+

self.pad_ratio = pad_ratio

# input channel is 3: (a(x, y), x, y)

if layers is None:

@@ -37,13 +46,21 @@ def __init__(self, modes1, modes2,

self.layers = layers

self.fc0 = nn.Linear(in_dim, layers[0])

- self.sp_convs = nn.LayerList([FactorizedSpectralConv2d(

- in_size, out_size, mode1_num, mode2_num)

- for in_size, out_size, mode1_num, mode2_num

- in zip(self.layers, self.layers[1:], self.modes1, self.modes2)])

+ self.sp_convs = nn.LayerList(

+ [

+ FactorizedSpectralConv2d(in_size, out_size, mode1_num, mode2_num)

+ for in_size, out_size, mode1_num, mode2_num in zip(

+ self.layers, self.layers[1:], self.modes1, self.modes2

+ )

+ ]

+ )

- self.ws = nn.LayerList([nn.Conv1D(in_size, out_size, 1)

- for in_size, out_size in zip(self.layers, self.layers[1:])])

+ self.ws = nn.LayerList(

+ [

+ nn.Conv1D(in_size, out_size, 1)

+ for in_size, out_size in zip(self.layers, self.layers[1:])

+ ]

+ )

self.fc1 = nn.Linear(layers[-1], fc_dim)

self.fc2 = nn.Linear(fc_dim, layers[-1])

@@ -51,28 +68,30 @@ def __init__(self, modes1, modes2,

self.act = _get_act(act)

def forward(self, x):

- '''

+ """

Args:

- x : (batch size, x_grid, y_grid, 2)

Returns:

- x: (batch size, x_grid, y_grid, 1)

- '''

+ """

size_1, size_2 = x.shape[1], x.shape[2]

if max(self.pad_ratio) > 0:

num_pad1 = [round(i * size_1) for i in self.pad_ratio]

num_pad2 = [round(i * size_2) for i in self.pad_ratio]

else:

- num_pad1 = num_pad2 = [0.]

+ num_pad1 = num_pad2 = [0.0]

length = len(self.ws)

batchsize = x.shape[0]

x = self.fc0(x)

- x = x.transpose([0, 3, 1, 2]) # B, C, X, Y

+ x = x.transpose([0, 3, 1, 2]) # B, C, X, Y

x = add_padding2(x, num_pad1, num_pad2)

size_x, size_y = x.shape[-2], x.shape[-1]

for i, (speconv, w) in enumerate(zip(self.sp_convs, self.ws)):

x1 = speconv(x)

- x2 = w(x.reshape([batchsize, self.layers[i], -1])).reshape([batchsize, self.layers[i+1], size_x, size_y])

+ x2 = w(x.reshape([batchsize, self.layers[i], -1])).reshape(

+ [batchsize, self.layers[i + 1], size_x, size_y]

+ )

x = x1 + x2

if i != length - 1:

x = self.act(x)

diff --git a/jointContribution/PINO/PINO_paddle/models/fourier3d.py b/jointContribution/PINO/PINO_paddle/models/fourier3d.py

index 077794f645..12fb58a13b 100644

--- a/jointContribution/PINO/PINO_paddle/models/fourier3d.py

+++ b/jointContribution/PINO/PINO_paddle/models/fourier3d.py

@@ -1,18 +1,26 @@

import paddle.nn as nn

-from .basics import SpectralConv3d

+

from .FNO_blocks import FactorizedSpectralConv3d

-from .utils import add_padding, remove_padding, _get_act

+from .utils import _get_act

+from .utils import add_padding

+from .utils import remove_padding

+

class FNO3d(nn.Layer):

- def __init__(self,

- modes1, modes2, modes3,

- width=16,

- fc_dim=128,

- layers=None,

- in_dim=4, out_dim=1,

- act='gelu',

- pad_ratio=[0., 0.]):

- '''

+ def __init__(

+ self,

+ modes1,

+ modes2,

+ modes3,

+ width=16,

+ fc_dim=128,

+ layers=None,

+ in_dim=4,

+ out_dim=1,

+ act="gelu",

+ pad_ratio=[0.0, 0.0],

+ ):

+ """

Args:

modes1: list of int, first dimension maximal modes for each layer

modes2: list of int, second dimension maximal modes for each layer

@@ -23,13 +31,13 @@ def __init__(self,

out_dim: int, output dimension

act: {tanh, gelu, relu, leaky_relu}, activation function

pad_ratio: the ratio of the extended domain

- '''

+ """

super(FNO3d, self).__init__()

if isinstance(pad_ratio, float):

pad_ratio = [pad_ratio, pad_ratio]

else:

- assert len(pad_ratio) == 2, 'Cannot add padding in more than 2 directions.'

+ assert len(pad_ratio) == 2, "Cannot add padding in more than 2 directions."

self.pad_ratio = pad_ratio

self.modes1 = modes1

@@ -43,35 +51,45 @@ def __init__(self,

self.layers = layers

self.fc0 = nn.Linear(in_dim, layers[0])

- self.sp_convs = nn.LayerList([FactorizedSpectralConv3d(

- in_size, out_size, mode1_num, mode2_num, mode3_num)

- for in_size, out_size, mode1_num, mode2_num, mode3_num

- in zip(self.layers, self.layers[1:], self.modes1, self.modes2, self.modes3)])

+ self.sp_convs = nn.LayerList(

+ [

+ FactorizedSpectralConv3d(

+ in_size, out_size, mode1_num, mode2_num, mode3_num

+ )

+ for in_size, out_size, mode1_num, mode2_num, mode3_num in zip(

+ self.layers, self.layers[1:], self.modes1, self.modes2, self.modes3

+ )

+ ]

+ )

- self.ws = nn.LayerList([nn.Conv1D(in_size, out_size, 1)

- for in_size, out_size in zip(self.layers, self.layers[1:])])

+ self.ws = nn.LayerList(

+ [

+ nn.Conv1D(in_size, out_size, 1)

+ for in_size, out_size in zip(self.layers, self.layers[1:])

+ ]

+ )

self.fc1 = nn.Linear(layers[-1], fc_dim)

self.fc2 = nn.Linear(fc_dim, out_dim)

self.act = _get_act(act)

def forward(self, x):

- '''

+ """

Args:

x: (batchsize, x_grid, y_grid, t_grid, 3)

Returns:

u: (batchsize, x_grid, y_grid, t_grid, 1)

- '''

+ """

size_z = x.shape[-2]

if max(self.pad_ratio) > 0:

num_pad = [round(size_z * i) for i in self.pad_ratio]

else:

- num_pad = [0., 0.]

+ num_pad = [0.0, 0.0]

length = len(self.ws)

batchsize = x.shape[0]

-

+

x = self.fc0(x)

x = x.transpose([0, 4, 1, 2, 3])

x = add_padding(x, num_pad=num_pad)

@@ -79,7 +97,9 @@ def forward(self, x):

for i, (speconv, w) in enumerate(zip(self.sp_convs, self.ws)):

x1 = speconv(x)

- x2 = w(x.reshape([batchsize, self.layers[i], -1])).reshape([batchsize, self.layers[i+1], size_x, size_y, size_z])

+ x2 = w(x.reshape([batchsize, self.layers[i], -1])).reshape(

+ [batchsize, self.layers[i + 1], size_x, size_y, size_z]

+ )

x = x1 + x2

if i != length - 1:

x = self.act(x)

@@ -88,4 +108,4 @@ def forward(self, x):

x = self.fc1(x)

x = self.act(x)

x = self.fc2(x)

- return x

\ No newline at end of file

+ return x

diff --git a/jointContribution/PINO/PINO_paddle/models/utils.py b/jointContribution/PINO/PINO_paddle/models/utils.py

index 0302c5ab3c..2ae65ef56c 100644

--- a/jointContribution/PINO/PINO_paddle/models/utils.py

+++ b/jointContribution/PINO/PINO_paddle/models/utils.py

@@ -1,45 +1,51 @@

import paddle.nn.functional as F

+

def add_padding(x, num_pad):

if max(num_pad) > 0:

- res = F.pad(x, (num_pad[0], num_pad[1]), 'constant', 0)

+ res = F.pad(x, (num_pad[0], num_pad[1]), "constant", 0)

else:

res = x

return res

+

def add_padding2(x, num_pad1, num_pad2):

if max(num_pad1) > 0 or max(num_pad2) > 0:

- res = F.pad(x, (num_pad2[0], num_pad2[1], num_pad1[0], num_pad1[1]), 'constant', 0.)

+ res = F.pad(

+ x, (num_pad2[0], num_pad2[1], num_pad1[0], num_pad1[1]), "constant", 0.0

+ )

else:

res = x

return res

+

def remove_padding(x, num_pad):

if max(num_pad) > 0:

- res = x[..., num_pad[0]:-num_pad[1]]

+ res = x[..., num_pad[0] : -num_pad[1]]

else:

res = x

return res

+

def remove_padding2(x, num_pad1, num_pad2):

if max(num_pad1) > 0 or max(num_pad2) > 0:

- res = x[..., num_pad1[0]:-num_pad1[1], num_pad2[0]:-num_pad2[1]]

+ res = x[..., num_pad1[0] : -num_pad1[1], num_pad2[0] : -num_pad2[1]]

else:

res = x

return res

+

def _get_act(act):

- if act == 'tanh':

+ if act == "tanh":

func = F.tanh

- elif act == 'gelu':

+ elif act == "gelu":

func = F.gelu

- elif act == 'relu':

+ elif act == "relu":

func = F.relu_

- elif act == 'elu':

+ elif act == "elu":

func = F.elu_

- elif act == 'leaky_relu':

+ elif act == "leaky_relu":

func = F.leaky_relu

else:

- raise ValueError(f'{act} is not supported')

+ raise ValueError(f"{act} is not supported")

return func

-

diff --git a/jointContribution/PINO/PINO_paddle/prepare_data.py b/jointContribution/PINO/PINO_paddle/prepare_data.py

index 74a14694e1..2dc4349a81 100644

--- a/jointContribution/PINO/PINO_paddle/prepare_data.py

+++ b/jointContribution/PINO/PINO_paddle/prepare_data.py

@@ -1,33 +1,35 @@

import numpy as np

-import matplotlib.pyplot as plt

+

def shuffle_data(datapath):

data = np.load(datapath)

rng = np.random.default_rng(123)

rng.shuffle(data, axis=0)

- savepath = datapath.replace('.npy', '-shuffle.npy')

+ savepath = datapath.replace(".npy", "-shuffle.npy")

np.save(savepath, data)

+

def test_data(datapath):

- raw = np.load(datapath, mmap_mode='r')

+ raw = np.load(datapath, mmap_mode="r")

print(raw[0, 0, 0, 0:10])

- newpath = datapath.replace('.npy', '-shuffle.npy')

- new = np.load(newpath, mmap_mode='r')

+ newpath = datapath.replace(".npy", "-shuffle.npy")

+ new = np.load(newpath, mmap_mode="r")

print(new[0, 0, 0, 0:10])

+

def get_slice(datapath):

- raw = np.load(datapath, mmap_mode='r')

+ raw = np.load(datapath, mmap_mode="r")

data = raw[-10:]

print(data.shape)

- savepath = 'data/Re500-5x513x256x256.npy'

+ savepath = "data/Re500-5x513x256x256.npy"

np.save(savepath, data)

+

def plot_test(datapath):

- duration = 0.125

- raw = np.load(datapath, mmap_mode='r')

-

+ np.load(datapath, mmap_mode="r")

+

-if __name__ == '__main__':

- datapath = '/raid/hongkai/NS-Re500_T300_id0-shuffle.npy'

- get_slice(datapath)

\ No newline at end of file

+if __name__ == "__main__":

+ datapath = "/raid/hongkai/NS-Re500_T300_id0-shuffle.npy"

+ get_slice(datapath)

diff --git a/jointContribution/PINO/PINO_paddle/solver/random_fields.py b/jointContribution/PINO/PINO_paddle/solver/random_fields.py

index 448567db3e..458c1a0e72 100644

--- a/jointContribution/PINO/PINO_paddle/solver/random_fields.py

+++ b/jointContribution/PINO/PINO_paddle/solver/random_fields.py

@@ -1,58 +1,102 @@

+import math

+

import paddle

-import math

class GaussianRF(object):

- def __init__(self, dim, size, length=1.0, alpha=2.0, tau=3.0, sigma=None, boundary="periodic", constant_eig=False):

+ def __init__(

+ self,

+ dim,

+ size,

+ length=1.0,

+ alpha=2.0,

+ tau=3.0,

+ sigma=None,

+ boundary="periodic",

+ constant_eig=False,

+ ):

self.dim = dim

if sigma is None:

- sigma = tau**(0.5*(2*alpha - self.dim))

+ sigma = tau ** (0.5 * (2 * alpha - self.dim))

- k_max = size//2

+ k_max = size // 2

- const = (4*(math.pi**2))/(length**2)

+ const = (4 * (math.pi**2)) / (length**2)

if dim == 1:

- k = paddle.concat((paddle.arange(start=0, end=k_max, step=1), \

- paddle.arange(start=-k_max, end=0, step=1)), 0)

-

- self.sqrt_eig = size*math.sqrt(2.0)*sigma*((const*(k**2) + tau**2)**(-alpha/2.0))

+ k = paddle.concat(

+ (

+ paddle.arange(start=0, end=k_max, step=1),

+ paddle.arange(start=-k_max, end=0, step=1),

+ ),

+ 0,

+ )

+

+ self.sqrt_eig = (

+ size

+ * math.sqrt(2.0)

+ * sigma

+ * ((const * (k**2) + tau**2) ** (-alpha / 2.0))

+ )

if constant_eig:

- self.sqrt_eig[0] = size*sigma*(tau**(-alpha))

+ self.sqrt_eig[0] = size * sigma * (tau ** (-alpha))

else:

self.sqrt_eig[0] = 0.0

elif dim == 2:

- wavenumers = paddle.concat((paddle.arange(start=0, end=k_max, step=1), \

- paddle.arange(start=-k_max, end=0, step=1)), 0).repeat(size,1)

-

- k_x = wavenumers.transpose(0,1)

+ wavenumers = paddle.concat(

+ (

+ paddle.arange(start=0, end=k_max, step=1),

+ paddle.arange(start=-k_max, end=0, step=1),

+ ),

+ 0,

+ ).repeat(size, 1)

+

+ k_x = wavenumers.transpose(0, 1)

k_y = wavenumers

- self.sqrt_eig = (size**2)*math.sqrt(2.0)*sigma*((const*(k_x**2 + k_y**2) + tau**2)**(-alpha/2.0))

+ self.sqrt_eig = (

+ (size**2)

+ * math.sqrt(2.0)

+ * sigma

+ * ((const * (k_x**2 + k_y**2) + tau**2) ** (-alpha / 2.0))

+ )

if constant_eig:

- self.sqrt_eig[0,0] = (size**2)*sigma*(tau**(-alpha))

+ self.sqrt_eig[0, 0] = (size**2) * sigma * (tau ** (-alpha))

else:

- self.sqrt_eig[0,0] = 0.0

+ self.sqrt_eig[0, 0] = 0.0

elif dim == 3: