Model (3D UNet) is not learning and Dice score is always below 0.10 #4023



Hi, whenever I have faced this kind of issue (training loss not decreasing at all), the cause turned out to be the data. Can you post a screenshot of your original images? Also, one thing you should try is checking whether the images and labels are still aligned after the transform chain is applied.
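A quick numerical version of that check is sketched below, assuming the transformed sample is available as NumPy arrays in MONAI's channel-first layout (image `(1, H, W, D)`, label `(C, H, W, D)` with one binary channel per fluid). `check_pair` is a hypothetical helper, not a MONAI function. Matching shapes are necessary but not sufficient; overlaying a label slice on the corresponding image slice is still the most direct way to spot misalignment.

```python
import numpy as np


def check_pair(image: np.ndarray, label: np.ndarray) -> dict:
    """Basic sanity checks on one transformed, channel-first image/label pair.

    Hypothetical helper: assumes image is (1, H, W, D) and label is (C, H, W, D)
    with one binary channel per class.
    """
    # Image and label must live on the same spatial grid after the transform chain.
    if image.shape[1:] != label.shape[1:]:
        raise ValueError(f"spatial mismatch: image {image.shape} vs label {label.shape}")
    # Labels should still be binary after resampling (e.g. nearest-neighbour interpolation).
    values = np.unique(label)
    if not np.all(np.isin(values, [0, 1])):
        raise ValueError(f"label is not binary after transforms: {values[:10]}")
    # Fraction of foreground voxels per class channel; 0.0 means the class is absent
    # in this sample (or was lost during the transforms).
    fg = label.reshape(label.shape[0], -1).mean(axis=1)
    return {"foreground_fraction": fg.tolist()}


# Dummy arrays shaped like the ones reported in the question.
img = np.random.rand(1, 128, 128, 128).astype(np.float32)
lbl = np.zeros((3, 128, 128, 128), dtype=np.float32)
lbl[0, 60:70, 60:70, 60:70] = 1.0
report = check_pair(img, lbl)
print(report["foreground_fraction"])
```

Very small foreground fractions are worth noting too: tiny fluid pockets make "predict background everywhere" an easy outcome for the network.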


Hi @ankitabuntolia, you can also check whether the model can overfit the training set with all image augmentations removed (to see whether the easiest setting can reach a very low training loss). In your code, the following transforms can be removed:


What does this bit do?

```python
SpatialCropd(keys=['image_1', 'label_1'], roi_start=(0, 248, 0), roi_end=(256, 496, 49), allow_missing_keys=True),
SpatialCropd(keys=['image_2', 'label_2'], roi_start=(256, 0, 0), roi_end=(512, 248, 49), allow_missing_keys=True),
SpatialCropd(keys=['image_3', 'label_3'], roi_start=(256, 248, 0), roi_end=(512, 496, 49), allow_missing_keys=True),
ConcatItemsd(['image_1', 'image_2', 'image_3'], name='image', dim=1, allow_missing_keys=True),
DeleteItemsd(['image_1', 'image_2', 'image_3']),
```

If I understand correctly, you're taking 3 sections of your image and joining them together along the x-axis. Does this make sense?
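To see what the quoted chain produces, here is a NumPy sketch that mimics it with plain slicing. It assumes the volume is channel-first with shape `(1, 512, 496, 49)` (the size from the question plus a channel axis) and that each `SpatialCropd` keeps `[roi_start, roi_end)` on the three spatial axes. Under that layout, `dim=1` in `ConcatItemsd` is the first spatial axis (size 512), because axis 0 is the channel.

```python
import numpy as np

# Dummy channel-first volume: (channel, 512, 496, 49), matching the question's image size.
vol = np.zeros((1, 512, 496, 49), dtype=np.float32)

# (roi_start, roi_end) pairs copied from the quoted SpatialCropd calls.
rois = [
    ((0, 248, 0), (256, 496, 49)),
    ((256, 0, 0), (512, 248, 49)),
    ((256, 248, 0), (512, 496, 49)),
]


def crop(x, start, end):
    # Spatial crop by slicing each spatial axis; the channel axis is kept whole.
    return x[:, start[0]:end[0], start[1]:end[1], start[2]:end[2]]


crops = [crop(vol, s, e) for s, e in rois]
# ConcatItemsd(..., dim=1) concatenates along axis 1, the first spatial axis.
joined = np.concatenate(crops, axis=1)

print([c.shape for c in crops])  # three crops of (1, 256, 248, 49)
print(joined.shape)              # (1, 768, 248, 49)
```

The three crops cover three of the four 256x248 quadrants of the 512x496 plane (the quadrant from (0, 0) to (256, 248) is unused), and stacking them along the first axis places regions side by side that are not neighbours in the original scan. In the quoted snippet only the `image_*` keys are concatenated, not the `label_*` keys.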


Hello,

I am new to UNet and MONAI, but MONAI has so far been very useful for working with medical images, so thank you for maintaining it and answering questions.

Problem

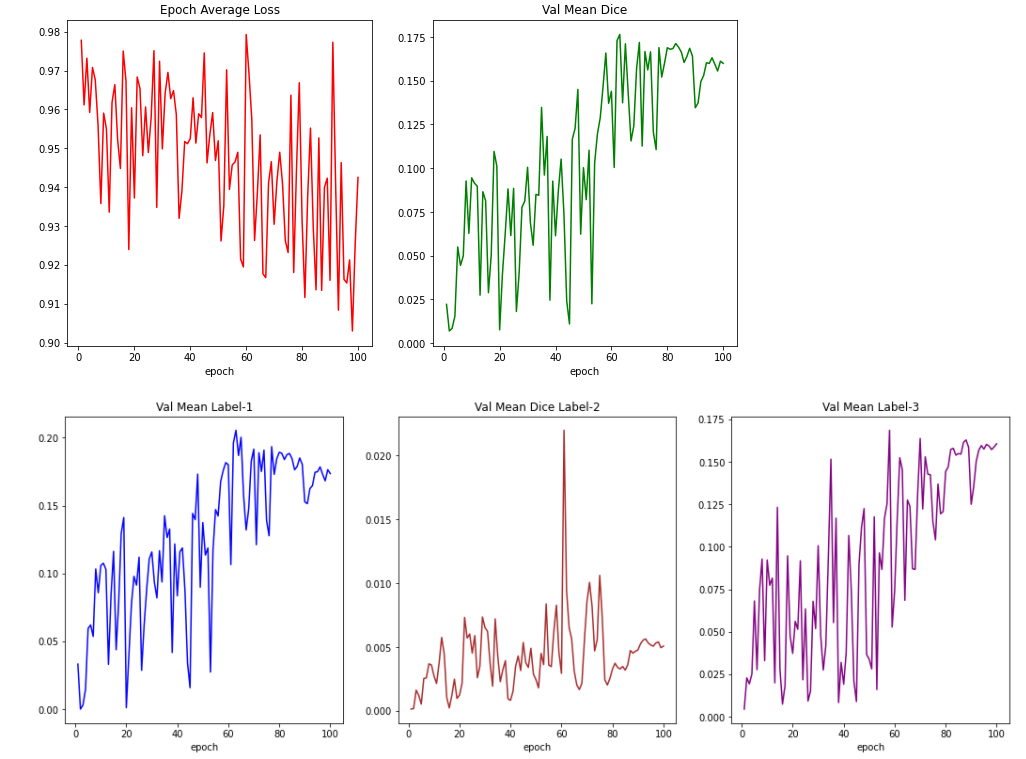

My model is not learning in 100 epochs. I also tried 200 and 400 epochs, but the loss always stays around 0.90 and the Dice score is always below 0.10.
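If the loss is a Dice loss (as in the BraTS tutorial), a loss stuck near 0.90 and a Dice score stuck below 0.10 are essentially the same observation, since that loss is roughly 1 minus a soft Dice. The sketch below computes a per-channel hard Dice in NumPy, assuming sigmoid outputs thresholded at 0.5 (the post-processing the BraTS tutorial uses). `hard_dice_per_channel` and its `eps` convention are illustrative assumptions, not MONAI's `DiceMetric`.

```python
import numpy as np


def hard_dice_per_channel(logits: np.ndarray, target: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Per-channel Dice for multi-label logits and binary targets, both shaped (C, H, W, D).

    Hypothetical helper: sigmoid + 0.5 threshold, then Dice = 2*|P & T| / (|P| + |T|).
    With this eps convention an empty prediction on an empty channel scores 1.0.
    """
    probs = 1.0 / (1.0 + np.exp(-logits))        # sigmoid
    pred = (probs > 0.5).astype(np.float64)      # binarise the prediction
    tgt = target.astype(np.float64)
    axes = tuple(range(1, pred.ndim))            # sum over spatial axes, keep channels
    inter = (pred * tgt).sum(axis=axes)
    denom = pred.sum(axis=axes) + tgt.sum(axis=axes)
    return (2.0 * inter + eps) / (denom + eps)


# Toy target: a small cube of foreground in each of the 3 channels.
target = np.zeros((3, 8, 8, 8))
target[0, 2:4, 2:4, 2:4] = 1
target[1, 4:6, 4:6, 4:6] = 1
target[2, 0:2, 0:2, 0:2] = 1

all_background = np.full(target.shape, -5.0)     # model that predicts "no fluid" everywhere
perfect = np.where(target > 0, 5.0, -5.0)        # model that matches the target exactly

print(hard_dice_per_channel(all_background, target))  # approximately [0, 0, 0]
print(hard_dice_per_channel(perfect, target))         # [1. 1. 1.]
```

A network that has collapsed to predicting background everywhere is one common way to end up with a near-zero Dice on every channel, so checking the raw predictions against this pattern can be informative.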

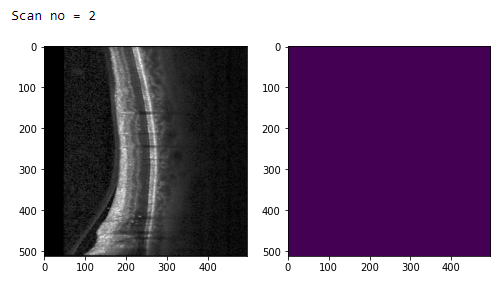

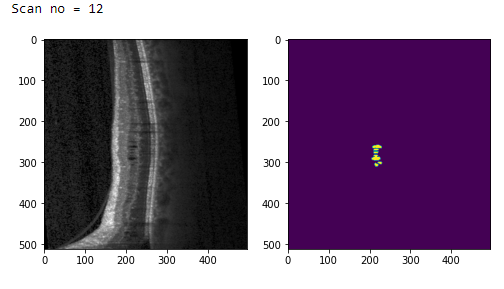

Background of the task

I am working on 3D OCT image segmentation with MONAI to identify IRF, SRF, and PED fluids. My input image size is 512x496x49, and my label is also 512x496x49. An image can contain 3 labels (IRF, SRF, and PED), so it is a multi-label segmentation task.
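If the three fluids are stored as integer values in a single label volume, they need to be split into one binary channel per class before a sigmoid-based multi-label setup can use them; the BraTS tutorial defines its own `ConvertToMultiChannelBasedOnBratsClassesd` transform for the same purpose. The sketch below assumes a hypothetical encoding 1 = IRF, 2 = SRF, 3 = PED (`CLASS_VALUES` and `to_multichannel` are illustrative names); the actual values in your dataset may differ. With a single integer map, each voxel belongs to at most one fluid, so the resulting channels never overlap.

```python
import numpy as np

# Hypothetical mapping from integer label values to class channels; verify against your data.
CLASS_VALUES = {"IRF": 1, "SRF": 2, "PED": 3}


def to_multichannel(label: np.ndarray) -> np.ndarray:
    """Turn an integer label volume (H, W, D) or (1, H, W, D) into (3, H, W, D) binary channels."""
    if label.ndim == 4 and label.shape[0] == 1:
        label = label[0]                          # drop a singleton channel axis
    channels = [label == v for v in CLASS_VALUES.values()]
    return np.stack(channels, axis=0).astype(np.float32)


lab = np.zeros((64, 64, 16), dtype=np.uint8)      # small dummy volume
lab[10:20, 20:30, 2:4] = 1                        # fake IRF region: 200 voxels
lab[40:50, 5:10, 8:13] = 3                        # fake PED region: 250 voxels
mc = to_multichannel(lab)
print(mc.shape)                     # (3, 64, 64, 16)
print(mc.sum(axis=(1, 2, 3)))       # voxels per channel (IRF, SRF, PED): 200, 0, 250
```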

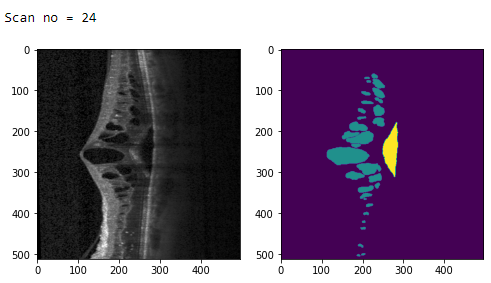

After applying the transforms, the image shape changes to `torch.Size([1, 128, 128, 128])` and the label shape to `torch.Size([3, 128, 128, 128])`.
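Going from 512x496x49 to 128x128x128 changes each axis by a very different factor. Assuming the change comes from resizing (for example a `Resized` transform) rather than cropping or padding, the arithmetic below shows the per-axis scale.

```python
original = (512, 496, 49)   # image/label size given in the question
target = (128, 128, 128)    # spatial size after the transform chain

factors = [dst / src for src, dst in zip(original, target)]
for axis, (src, dst, f) in enumerate(zip(original, target, factors)):
    kind = "downsampled" if f < 1 else "upsampled"
    print(f"axis {axis}: {src} -> {dst}, factor {f:.3f} ({kind})")
# axis 0: 512 -> 128, factor 0.250 (downsampled)
# axis 1: 496 -> 128, factor 0.258 (downsampled)
# axis 2: 49 -> 128, factor 2.612 (upsampled)
```

Under that assumption, the in-plane axes shrink by roughly 4x, so a fluid pocket only a few pixels wide may shrink to a single voxel or disappear, while the slice axis is stretched about 2.6x. Labels resized with nearest-neighbour interpolation stay binary; linear interpolation would produce fractional label values.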

I am following the BraTS tutorial, but I wanted to use UNet as the network, so I took it from the Spleen tutorial with the modifications discussed in #415.

Here is my code:

Transform

Dataloader

Model

Actual Training

Here is the output from the 98th, 99th, and 100th epochs:

Here is my plot of the loss and Dice score:

Any suggestions for reducing the loss and improving the Dice score would be really helpful!

Thank you!
