Replies: 5 comments 7 replies


Hi @bax24, thanks for starting the discussion. Could you share a GitHub link with the CNN code as well? At first glance the ViT usage does not look incorrect, but there seems to be some confusion between out_channel and num_classes: the two are not tied together, so please check and verify that. I am also curious how you are testing with a fixed input patch size. Is your CNN network also MONAI-based?


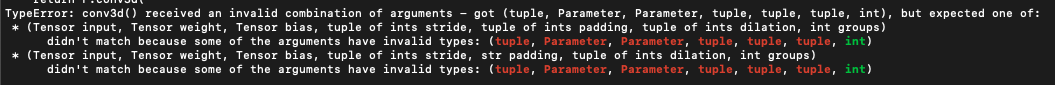

Hello,

What do you mean by 'tied together'? I'm sorry, I don't get it. Each of my MRI volumes has size (96, 96, 48) and only one channel (I believe). For the patch size, I just used (16, 16, 16) for both training and testing. Should I use something else? The CNN is not MONAI-based: its implementation uses simple 3D CNN layers and fully connected ones. Here is my GitHub repository with the relevant code: https://github.com/bax24/Alzheimer-Classification

Thanks again for your help!

PS: I am still a student and this is part of my master's thesis, so if you see something outrageous in the way I am training or testing my models, don't hesitate to tell me.
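As a quick sanity check on the sizes quoted above, here is a minimal sketch of the patch arithmetic for a (96, 96, 48) volume cut into (16, 16, 16) patches. It assumes non-overlapping patches and a single input channel; `patch_grid` is a hypothetical helper written for this illustration, not a MONAI function.

```python
from math import prod


def patch_grid(img_size, patch_size):
    """Return the number of non-overlapping patches along each axis.

    Hypothetical helper: raises if a dimension is not evenly divisible.
    """
    if len(img_size) != len(patch_size):
        raise ValueError("img_size and patch_size must have the same length")
    for dim, patch in zip(img_size, patch_size):
        if dim % patch != 0:
            raise ValueError(f"dimension {dim} is not divisible by patch size {patch}")
    return tuple(dim // patch for dim, patch in zip(img_size, patch_size))


img_size = (96, 96, 48)      # MRI volume size quoted in the thread
patch_size = (16, 16, 16)    # patch size quoted in the thread
in_channels = 1              # assumption: single-channel MRI

grid = patch_grid(img_size, patch_size)
num_patches = prod(grid)                          # sequence length seen by the transformer
voxels_per_patch = prod(patch_size) * in_channels

print(grid)              # (6, 6, 3)
print(num_patches)       # 108
print(voxels_per_patch)  # 4096
```

Under these assumptions the sizes are consistent: every dimension divides evenly, and the transformer would see a sequence of 108 patch tokens.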


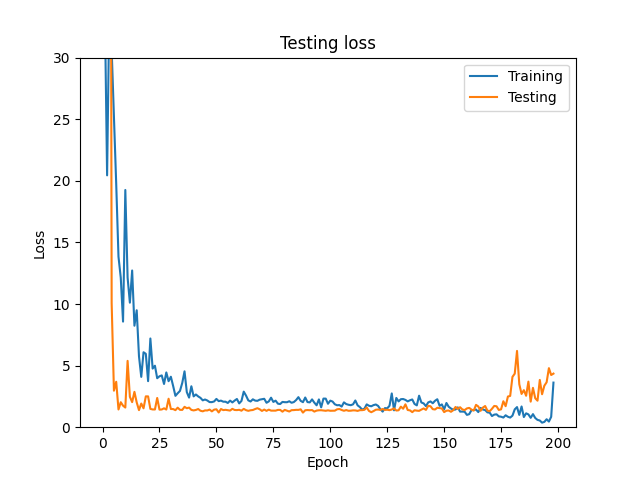

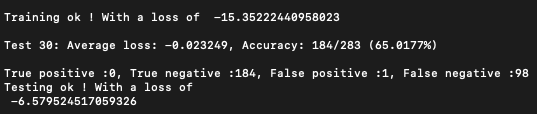

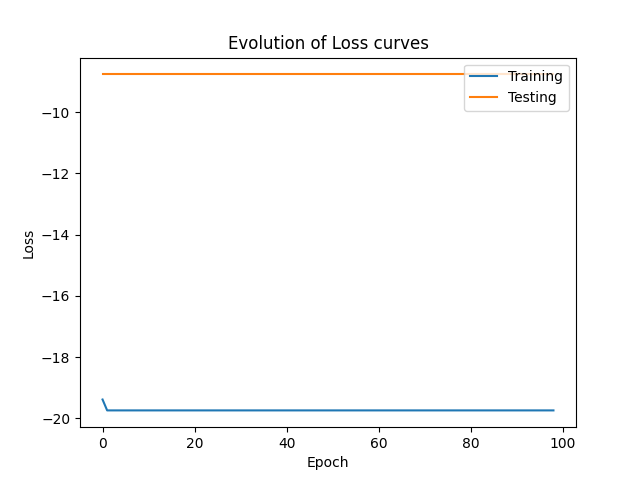

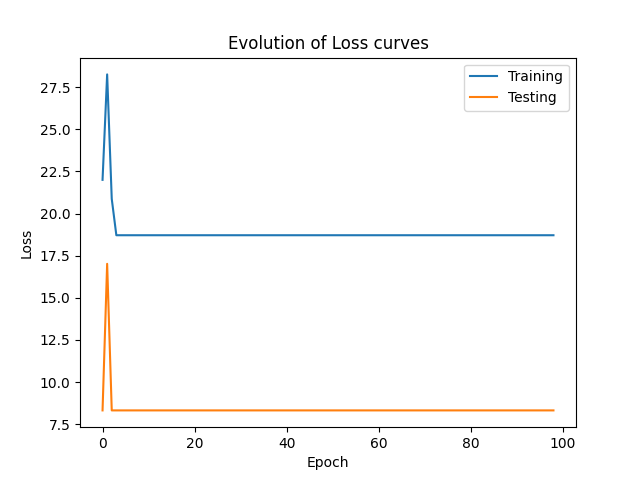

The CNN models now give very good results, but I still cannot make the ViT model learn: the loss is constant throughout training. No matter the epoch, I get the output shown in the images above. I have updated my GitHub repository (https://github.com/bax24/Alzheimer-Classification). Could you please take a look and tell me if I am doing something wrong? Maybe with the backpropagation? Thanks a lot in advance, Lucas


Here is the plot when running the ViT with a lower learning rate of 1e-4. Everything remains constant. I do not use the activation because I already apply a Sigmoid, and it did not work with log_softmax either. Do you think I should try again?


Hi, I noticed that the Vision Transformer (ViT) with

model = monai.networks.nets.ViT(...)

model.classification_head = nn.Sequential(nn.Linear(768, num_classes))

Best
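One possible reason a plain linear head could help, offered as an assumption and not as something confirmed in this thread: if the value fed into a Sigmoid is already squashed into [-1, 1] (for example by a Tanh at the end of the head), the predicted probability can never leave a narrow band, and the loss cannot go below a fixed floor. A minimal pure-Python sketch of that arithmetic:

```python
import math


def sigmoid(x):
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def bce(prob, target):
    """Binary cross-entropy for a single sample with target 0 or 1."""
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))


# Assumption: the head output is bounded to [-1, 1] before the sigmoid.
lo_prob = sigmoid(-1.0)   # smallest reachable probability, about 0.27
hi_prob = sigmoid(1.0)    # largest reachable probability, about 0.73

# Best loss a bounded head can reach on a positive sample.
best_bounded_loss = bce(hi_prob, 1)

# An unbounded linear head can push the logit further, e.g. to 6.0.
unbounded_loss = bce(sigmoid(6.0), 1)

print(round(lo_prob, 3), round(hi_prob, 3))
print(round(best_bounded_loss, 3), round(unbounded_loss, 4))
```

Whether this applies here depends on how the head and the loss are actually wired in the repository, so it is worth printing the raw model outputs before drawing conclusions.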


Hello everyone!

I didn't know whether to ask this in the discussion forum or to open a "New issue", so I hope this is the right place.

I am currently trying to implement a classification network on MRI scans (from ADNI) to detect Alzheimer's disease. I have already implemented a 3D CNN, which works quite well, and I wanted to test the backbone of UNETR, i.e. the ViT, to see what kind of results I could get.

However, the network doesn't seem to learn: the loss stays the same across epochs. I tried classification with 1 output class and with 2 output classes, and the results are the same. I suppose I am not using it correctly, and I was wondering if you could help me.

I have attached the code for my main script, my model, and my training and testing routines. I hope this is OK. I didn't know whether a GitHub repository would be easier for you or not (my code is still a bit messy).

I hope to hear from you and I thank you in advance,

Kind regards,

Lucas
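A quick diagnostic for a loss that never moves is to compare it with the chance-level value. For both setups mentioned in the post (one sigmoid output, or two softmax outputs), a model that predicts 50/50 for every scan has a loss of ln 2, about 0.693. The sketch below computes that in plain Python; `looks_like_chance` and the loss values passed to it are hypothetical and only illustrate the check.

```python
import math


def bce_with_logit(logit, target):
    """Numerically stable binary cross-entropy on a raw logit (1-output setup)."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def cross_entropy(logits, target_index):
    """Softmax cross-entropy on raw logits (2-output setup)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[target_index]


def looks_like_chance(loss_history, num_classes=2, tol=1e-2):
    """Hypothetical helper: True if every logged loss sits near ln(num_classes)."""
    chance = math.log(num_classes)
    return all(abs(loss - chance) < tol for loss in loss_history)


one_output_chance = bce_with_logit(0.0, 1)        # logit 0 -> probability 0.5
two_output_chance = cross_entropy([0.0, 0.0], 1)  # equal logits -> uniform softmax

print(one_output_chance, two_output_chance, math.log(2))

# Made-up loss values, for illustration only (not taken from the thread).
print(looks_like_chance([0.6931, 0.6932, 0.6930]))
print(looks_like_chance([0.69, 0.52, 0.31]))
```

If the logged loss sits at this value, the network is likely outputting the same prediction for every input, which points at the output head, the loss wiring, or the input normalization more than at the optimizer settings.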
