diff --git a/.gitignore b/.gitignore

index 485dee6..1c8bd59 100644

--- a/.gitignore

+++ b/.gitignore

@@ -1 +1,13 @@

-.idea

+.vscode

+

+*.o

+*.so

+*.cpp

+*.egg-info

+

+__pycache__/

+

+data/

+results/

+test/

+*log*

\ No newline at end of file

diff --git a/LICENSE b/LICENSE

deleted file mode 100644

index 6d37ae8..0000000

--- a/LICENSE

+++ /dev/null

@@ -1,176 +0,0 @@

-

-# Attribution-NonCommercial-ShareAlike 4.0 International

-

-Creative Commons Corporation (“Creative Commons”) is not a law firm and does not provide legal services or legal advice. Distribution of Creative Commons public licenses does not create a lawyer-client or other relationship. Creative Commons makes its licenses and related information available on an “as-is” basis. Creative Commons gives no warranties regarding its licenses, any material licensed under their terms and conditions, or any related information. Creative Commons disclaims all liability for damages resulting from their use to the fullest extent possible.

-

-### Using Creative Commons Public Licenses

-

-Creative Commons public licenses provide a standard set of terms and conditions that creators and other rights holders may use to share original works of authorship and other material subject to copyright and certain other rights specified in the public license below. The following considerations are for informational purposes only, are not exhaustive, and do not form part of our licenses.

-

-* __Considerations for licensors:__ Our public licenses are intended for use by those authorized to give the public permission to use material in ways otherwise restricted by copyright and certain other rights. Our licenses are irrevocable. Licensors should read and understand the terms and conditions of the license they choose before applying it. Licensors should also secure all rights necessary before applying our licenses so that the public can reuse the material as expected. Licensors should clearly mark any material not subject to the license. This includes other CC-licensed material, or material used under an exception or limitation to copyright. [More considerations for licensors](http://wiki.creativecommons.org/Considerations_for_licensors_and_licensees#Considerations_for_licensors).

-

-* __Considerations for the public:__ By using one of our public licenses, a licensor grants the public permission to use the licensed material under specified terms and conditions. If the licensor’s permission is not necessary for any reason–for example, because of any applicable exception or limitation to copyright–then that use is not regulated by the license. Our licenses grant only permissions under copyright and certain other rights that a licensor has authority to grant. Use of the licensed material may still be restricted for other reasons, including because others have copyright or other rights in the material. A licensor may make special requests, such as asking that all changes be marked or described. Although not required by our licenses, you are encouraged to respect those requests where reasonable. [More considerations for the public](http://wiki.creativecommons.org/Considerations_for_licensors_and_licensees#Considerations_for_licensees).

-

-## Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International Public License

-

-By exercising the Licensed Rights (defined below), You accept and agree to be bound by the terms and conditions of this Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International Public License ("Public License"). To the extent this Public License may be interpreted as a contract, You are granted the Licensed Rights in consideration of Your acceptance of these terms and conditions, and the Licensor grants You such rights in consideration of benefits the Licensor receives from making the Licensed Material available under these terms and conditions.

-

-### Section 1 – Definitions.

-

-a. __Adapted Material__ means material subject to Copyright and Similar Rights that is derived from or based upon the Licensed Material and in which the Licensed Material is translated, altered, arranged, transformed, or otherwise modified in a manner requiring permission under the Copyright and Similar Rights held by the Licensor. For purposes of this Public License, where the Licensed Material is a musical work, performance, or sound recording, Adapted Material is always produced where the Licensed Material is synched in timed relation with a moving image.

-

-b. __Adapter's License__ means the license You apply to Your Copyright and Similar Rights in Your contributions to Adapted Material in accordance with the terms and conditions of this Public License.

-

-c. __BY-NC-SA Compatible License__ means a license listed at [creativecommons.org/compatiblelicenses](http://creativecommons.org/compatiblelicenses), approved by Creative Commons as essentially the equivalent of this Public License.

-

-d. __Copyright and Similar Rights__ means copyright and/or similar rights closely related to copyright including, without limitation, performance, broadcast, sound recording, and Sui Generis Database Rights, without regard to how the rights are labeled or categorized. For purposes of this Public License, the rights specified in Section 2(b)(1)-(2) are not Copyright and Similar Rights.

-

-e. __Effective Technological Measures__ means those measures that, in the absence of proper authority, may not be circumvented under laws fulfilling obligations under Article 11 of the WIPO Copyright Treaty adopted on December 20, 1996, and/or similar international agreements.

-

-f. __Exceptions and Limitations__ means fair use, fair dealing, and/or any other exception or limitation to Copyright and Similar Rights that applies to Your use of the Licensed Material.

-

-g. __License Elements__ means the license attributes listed in the name of a Creative Commons Public License. The License Elements of this Public License are Attribution, NonCommercial, and ShareAlike.

-

-h. __Licensed Material__ means the artistic or literary work, database, or other material to which the Licensor applied this Public License.

-

-i. __Licensed Rights__ means the rights granted to You subject to the terms and conditions of this Public License, which are limited to all Copyright and Similar Rights that apply to Your use of the Licensed Material and that the Licensor has authority to license.

-

-j. __Licensor__ means the individual(s) or entity(ies) granting rights under this Public License.

-

-k. __NonCommercial__ means not primarily intended for or directed towards commercial advantage or monetary compensation. For purposes of this Public License, the exchange of the Licensed Material for other material subject to Copyright and Similar Rights by digital file-sharing or similar means is NonCommercial provided there is no payment of monetary compensation in connection with the exchange.

-

-l. __Share__ means to provide material to the public by any means or process that requires permission under the Licensed Rights, such as reproduction, public display, public performance, distribution, dissemination, communication, or importation, and to make material available to the public including in ways that members of the public may access the material from a place and at a time individually chosen by them.

-

-m. __Sui Generis Database Rights__ means rights other than copyright resulting from Directive 96/9/EC of the European Parliament and of the Council of 11 March 1996 on the legal protection of databases, as amended and/or succeeded, as well as other essentially equivalent rights anywhere in the world.

-

-n. __You__ means the individual or entity exercising the Licensed Rights under this Public License. Your has a corresponding meaning.

-

-### Section 2 – Scope.

-

-a. ___License grant.___

-

- 1. Subject to the terms and conditions of this Public License, the Licensor hereby grants You a worldwide, royalty-free, non-sublicensable, non-exclusive, irrevocable license to exercise the Licensed Rights in the Licensed Material to:

-

- A. reproduce and Share the Licensed Material, in whole or in part, for NonCommercial purposes only; and

-

- B. produce, reproduce, and Share Adapted Material for NonCommercial purposes only.

-

- 2. __Exceptions and Limitations.__ For the avoidance of doubt, where Exceptions and Limitations apply to Your use, this Public License does not apply, and You do not need to comply with its terms and conditions.

-

- 3. __Term.__ The term of this Public License is specified in Section 6(a).

-

- 4. __Media and formats; technical modifications allowed.__ The Licensor authorizes You to exercise the Licensed Rights in all media and formats whether now known or hereafter created, and to make technical modifications necessary to do so. The Licensor waives and/or agrees not to assert any right or authority to forbid You from making technical modifications necessary to exercise the Licensed Rights, including technical modifications necessary to circumvent Effective Technological Measures. For purposes of this Public License, simply making modifications authorized by this Section 2(a)(4) never produces Adapted Material.

-

- 5. __Downstream recipients.__

-

- A. __Offer from the Licensor – Licensed Material.__ Every recipient of the Licensed Material automatically receives an offer from the Licensor to exercise the Licensed Rights under the terms and conditions of this Public License.

-

- B. __Additional offer from the Licensor – Adapted Material.__ Every recipient of Adapted Material from You automatically receives an offer from the Licensor to exercise the Licensed Rights in the Adapted Material under the conditions of the Adapter’s License You apply.

-

- C. __No downstream restrictions.__ You may not offer or impose any additional or different terms or conditions on, or apply any Effective Technological Measures to, the Licensed Material if doing so restricts exercise of the Licensed Rights by any recipient of the Licensed Material.

-

- 6. __No endorsement.__ Nothing in this Public License constitutes or may be construed as permission to assert or imply that You are, or that Your use of the Licensed Material is, connected with, or sponsored, endorsed, or granted official status by, the Licensor or others designated to receive attribution as provided in Section 3(a)(1)(A)(i).

-

-b. ___Other rights.___

-

- 1. Moral rights, such as the right of integrity, are not licensed under this Public License, nor are publicity, privacy, and/or other similar personality rights; however, to the extent possible, the Licensor waives and/or agrees not to assert any such rights held by the Licensor to the limited extent necessary to allow You to exercise the Licensed Rights, but not otherwise.

-

- 2. Patent and trademark rights are not licensed under this Public License.

-

- 3. To the extent possible, the Licensor waives any right to collect royalties from You for the exercise of the Licensed Rights, whether directly or through a collecting society under any voluntary or waivable statutory or compulsory licensing scheme. In all other cases the Licensor expressly reserves any right to collect such royalties, including when the Licensed Material is used other than for NonCommercial purposes.

-

-### Section 3 – License Conditions.

-

-Your exercise of the Licensed Rights is expressly made subject to the following conditions.

-

-a. ___Attribution.___

-

- 1. If You Share the Licensed Material (including in modified form), You must:

-

- A. retain the following if it is supplied by the Licensor with the Licensed Material:

-

- i. identification of the creator(s) of the Licensed Material and any others designated to receive attribution, in any reasonable manner requested by the Licensor (including by pseudonym if designated);

-

- ii. a copyright notice;

-

- iii. a notice that refers to this Public License;

-

- iv. a notice that refers to the disclaimer of warranties;

-

- v. a URI or hyperlink to the Licensed Material to the extent reasonably practicable;

-

- B. indicate if You modified the Licensed Material and retain an indication of any previous modifications; and

-

- C. indicate the Licensed Material is licensed under this Public License, and include the text of, or the URI or hyperlink to, this Public License.

-

- 2. You may satisfy the conditions in Section 3(a)(1) in any reasonable manner based on the medium, means, and context in which You Share the Licensed Material. For example, it may be reasonable to satisfy the conditions by providing a URI or hyperlink to a resource that includes the required information.

-

- 3. If requested by the Licensor, You must remove any of the information required by Section 3(a)(1)(A) to the extent reasonably practicable.

-

-b. ___ShareAlike.___

-

-In addition to the conditions in Section 3(a), if You Share Adapted Material You produce, the following conditions also apply.

-

- 1. The Adapter’s License You apply must be a Creative Commons license with the same License Elements, this version or later, or a BY-NC-SA Compatible License.

-

- 2. You must include the text of, or the URI or hyperlink to, the Adapter's License You apply. You may satisfy this condition in any reasonable manner based on the medium, means, and context in which You Share Adapted Material.

-

- 3. You may not offer or impose any additional or different terms or conditions on, or apply any Effective Technological Measures to, Adapted Material that restrict exercise of the rights granted under the Adapter's License You apply.

-

-### Section 4 – Sui Generis Database Rights.

-

-Where the Licensed Rights include Sui Generis Database Rights that apply to Your use of the Licensed Material:

-

-a. for the avoidance of doubt, Section 2(a)(1) grants You the right to extract, reuse, reproduce, and Share all or a substantial portion of the contents of the database for NonCommercial purposes only;

-

-b. if You include all or a substantial portion of the database contents in a database in which You have Sui Generis Database Rights, then the database in which You have Sui Generis Database Rights (but not its individual contents) is Adapted Material, including for purposes of Section 3(b); and

-

-c. You must comply with the conditions in Section 3(a) if You Share all or a substantial portion of the contents of the database.

-

-For the avoidance of doubt, this Section 4 supplements and does not replace Your obligations under this Public License where the Licensed Rights include other Copyright and Similar Rights.

-

-### Section 5 – Disclaimer of Warranties and Limitation of Liability.

-

-a. __Unless otherwise separately undertaken by the Licensor, to the extent possible, the Licensor offers the Licensed Material as-is and as-available, and makes no representations or warranties of any kind concerning the Licensed Material, whether express, implied, statutory, or other. This includes, without limitation, warranties of title, merchantability, fitness for a particular purpose, non-infringement, absence of latent or other defects, accuracy, or the presence or absence of errors, whether or not known or discoverable. Where disclaimers of warranties are not allowed in full or in part, this disclaimer may not apply to You.__

-

-b. __To the extent possible, in no event will the Licensor be liable to You on any legal theory (including, without limitation, negligence) or otherwise for any direct, special, indirect, incidental, consequential, punitive, exemplary, or other losses, costs, expenses, or damages arising out of this Public License or use of the Licensed Material, even if the Licensor has been advised of the possibility of such losses, costs, expenses, or damages. Where a limitation of liability is not allowed in full or in part, this limitation may not apply to You.__

-

-c. The disclaimer of warranties and limitation of liability provided above shall be interpreted in a manner that, to the extent possible, most closely approximates an absolute disclaimer and waiver of all liability.

-

-### Section 6 – Term and Termination.

-

-a. This Public License applies for the term of the Copyright and Similar Rights licensed here. However, if You fail to comply with this Public License, then Your rights under this Public License terminate automatically.

-

-b. Where Your right to use the Licensed Material has terminated under Section 6(a), it reinstates:

-

- 1. automatically as of the date the violation is cured, provided it is cured within 30 days of Your discovery of the violation; or

-

- 2. automatically as of the date the violation is cured, provided it is cured within 30 days of Your discovery of the violation; or

-

- For the avoidance of doubt, this Section 6(b) does not affect any right the Licensor may have to seek remedies for Your violations of this Public License.

-

-c. For the avoidance of doubt, the Licensor may also offer the Licensed Material under separate terms or conditions or stop distributing the Licensed Material at any time; however, doing so will not terminate this Public License.

-

-d. Sections 1, 5, 6, 7, and 8 survive termination of this Public License.

-

-### Section 7 – Other Terms and Conditions.

-

-a. The Licensor shall not be bound by any additional or different terms or conditions communicated by You unless expressly agreed.

-

-b. Any arrangements, understandings, or agreements regarding the Licensed Material not stated herein are separate from and independent of the terms and conditions of this Public License.

-

-### Section 8 – Interpretation.

-

-a. For the avoidance of doubt, this Public License does not, and shall not be interpreted to, reduce, limit, restrict, or impose conditions on any use of the Licensed Material that could lawfully be made without permission under this Public License.

-

-b. To the extent possible, if any provision of this Public License is deemed unenforceable, it shall be automatically reformed to the minimum extent necessary to make it enforceable. If the provision cannot be reformed, it shall be severed from this Public License without affecting the enforceability of the remaining terms and conditions.

-

-c. No term or condition of this Public License will be waived and no failure to comply consented to unless expressly agreed to by the Licensor.

-

-d. Nothing in this Public License constitutes or may be interpreted as a limitation upon, or waiver of, any privileges and immunities that apply to the Licensor or You, including from the legal processes of any jurisdiction or authority.

-

-```

-Creative Commons is not a party to its public licenses. Notwithstanding, Creative Commons may elect to apply one of its public licenses to material it publishes and in those instances will be considered the “Licensor.” Except for the limited purpose of indicating that material is shared under a Creative Commons public license or as otherwise permitted by the Creative Commons policies published at [creativecommons.org/policies](http://creativecommons.org/policies), Creative Commons does not authorize the use of the trademark “Creative Commons” or any other trademark or logo of Creative Commons without its prior written consent including, without limitation, in connection with any unauthorized modifications to any of its public licenses or any other arrangements, understandings, or agreements concerning use of licensed material. For the avoidance of doubt, this paragraph does not form part of the public licenses.

-

-Creative Commons may be contacted at [creativecommons.org](http://creativecommons.org/).

-```

diff --git a/README.md b/README.md

index 8fbd586..302aef2 100644

--- a/README.md

+++ b/README.md

@@ -1,180 +1,27 @@

-[](https://paperswithcode.com/sota/semantic-segmentation-on-semantic3d?p=191111236)

-[](https://paperswithcode.com/sota/3d-semantic-segmentation-on-semantickitti?p=191111236)

-[](https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode)

-

-# RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds (CVPR 2020)

-

-This is the official implementation of **RandLA-Net** (CVPR2020, Oral presentation), a simple and efficient neural architecture for semantic segmentation of large-scale 3D point clouds. For technical details, please refer to:

-

-**RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds**

-[Qingyong Hu](https://www.cs.ox.ac.uk/people/qingyong.hu/), [Bo Yang*](https://yang7879.github.io/), [Linhai Xie](https://www.cs.ox.ac.uk/people/linhai.xie/), [Stefano Rosa](https://www.cs.ox.ac.uk/people/stefano.rosa/), [Yulan Guo](http://yulanguo.me/), [Zhihua Wang](https://www.cs.ox.ac.uk/people/zhihua.wang/), [Niki Trigoni](https://www.cs.ox.ac.uk/people/niki.trigoni/), [Andrew Markham](https://www.cs.ox.ac.uk/people/andrew.markham/).

-**[[Paper](https://arxiv.org/abs/1911.11236)] [[Video](https://youtu.be/Ar3eY_lwzMk)] [[Blog](https://zhuanlan.zhihu.com/p/105433460)] [[Project page](http://randla-net.cs.ox.ac.uk/)]**

-

-

-

-

-

-

-### (1) Setup

-This code has been tested with Python 3.5, Tensorflow 1.11, CUDA 9.0 and cuDNN 7.4.1 on Ubuntu 16.04.

-

-- Clone the repository

-```

-git clone --depth=1 https://github.com/QingyongHu/RandLA-Net && cd RandLA-Net

-```

-- Setup python environment

-```

-conda create -n randlanet python=3.5

-source activate randlanet

-pip install -r helper_requirements.txt

-sh compile_op.sh

-```

-

-**Update 03/21/2020, pre-trained models and results are available now.**

-You can download the pre-trained models and results [here](https://drive.google.com/open?id=1iU8yviO3TP87-IexBXsu13g6NklwEkXB).

-Note that, please specify the model path in the main function (e.g., `main_S3DIS.py`) if you want to use the pre-trained model and have a quick try of our RandLA-Net.

-

-### (2) S3DIS

-S3DIS dataset can be found

-here.

-Download the files named "Stanford3dDataset_v1.2_Aligned_Version.zip". Uncompress the folder and move it to

-`/data/S3DIS`.

-

-- Preparing the dataset:

-```

-python utils/data_prepare_s3dis.py

-```

-- Start 6-fold cross validation:

-```

-sh jobs_6_fold_cv_s3dis.sh

-```

-- Move all the generated results (*.ply) in `/test` folder to `/data/S3DIS/results`, calculate the final mean IoU results:

-```

-python utils/6_fold_cv.py

-```

-

-Quantitative results of different approaches on S3DIS dataset (6-fold cross-validation):

-

-

-

-Qualitative results of our RandLA-Net:

-

-|  |  |

-| ------------------------------ | ---------------------------- |

-

-

-

-### (3) Semantic3D

-7zip is required to uncompress the raw data in this dataset, to install p7zip:

-```

-sudo apt-get install p7zip-full

-```

-- Download and extract the dataset. First, please specify the path of the dataset by changing the `BASE_DIR` in "download_semantic3d.sh"

-```

-sh utils/download_semantic3d.sh

-```

-- Preparing the dataset:

-```

-python utils/data_prepare_semantic3d.py

-```

-- Start training:

-```

-python main_Semantic3D.py --mode train --gpu 0

-```

-- Evaluation:

-```

-python main_Semantic3D.py --mode test --gpu 0

-```

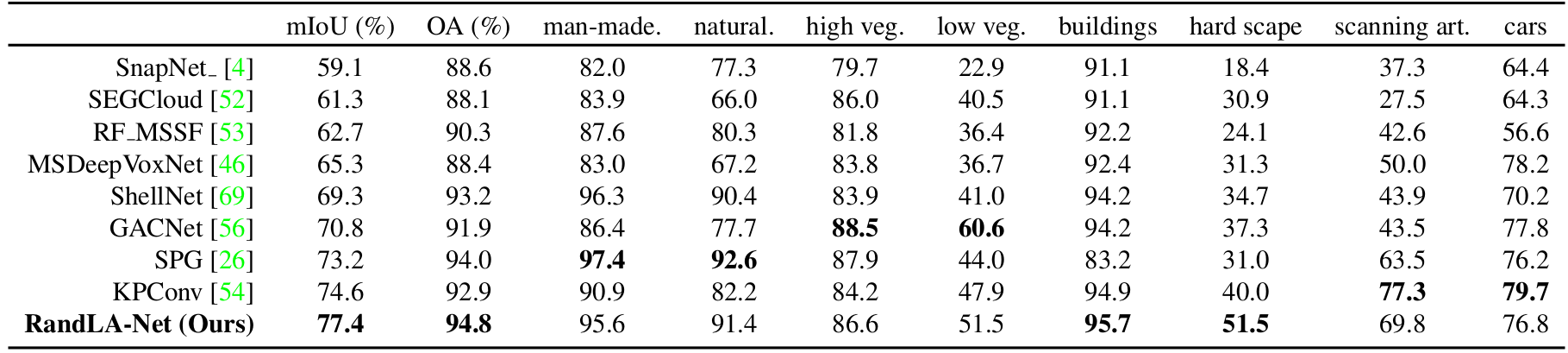

-Quantitative results of different approaches on Semantic3D (reduced-8):

-

-

-

-Qualitative results of our RandLA-Net:

-

-|  |  |

-| -------------------------------- | ------------------------------- |

-|  |  |

-

-

-

-**Note:**

-- Preferably with more than 64G RAM to process this dataset due to the large volume of point cloud

-

-

-### (4) SemanticKITTI

-

-SemanticKITTI dataset can be found here. Download the files

- related to semantic segmentation and extract everything into the same folder. Uncompress the folder and move it to

-`/data/semantic_kitti/dataset`.

-

-- Preparing the dataset:

-```

-python utils/data_prepare_semantickitti.py

-```

-

-- Start training:

-```

-python main_SemanticKITTI.py --mode train --gpu 0

-```

-

-- Evaluation:

-```

-sh jobs_test_semantickitti.sh

-```

-

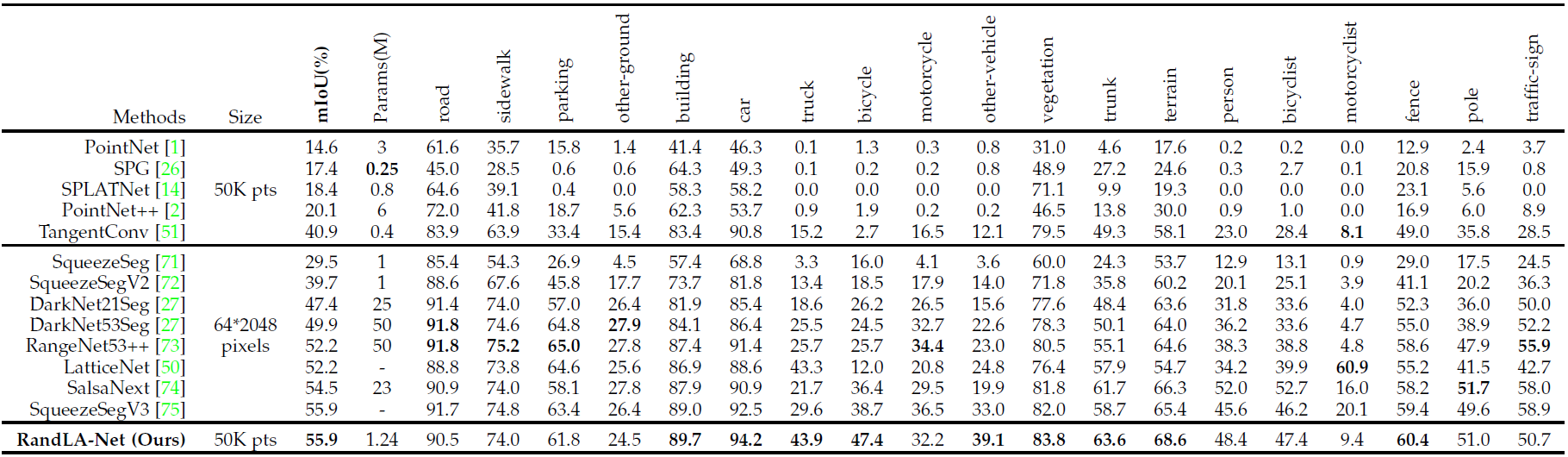

-Quantitative results of different approaches on SemanticKITTI dataset:

-

-

-

-Qualitative results of our RandLA-Net:

-

-

-

-

-### (5) Demo

-

-

-

-

-### Citation

-If you find our work useful in your research, please consider citing:

-

- @article{hu2019randla,

- title={RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds},

- author={Hu, Qingyong and Yang, Bo and Xie, Linhai and Rosa, Stefano and Guo, Yulan and Wang, Zhihua and Trigoni, Niki and Markham, Andrew},

- journal={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

- year={2020}

- }

-

- @article{hu2021learning,

- title={Learning Semantic Segmentation of Large-Scale Point Clouds with Random Sampling},

- author={Hu, Qingyong and Yang, Bo and Xie, Linhai and Rosa, Stefano and Guo, Yulan and Wang, Zhihua and Trigoni, Niki and Markham, Andrew},

- journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

- year={2021},

- publisher={IEEE}

- }

-

-

-### Acknowledgment

-- Part of our code refers to nanoflann library and the the recent work KPConv.

-- We use blender to make the video demo.

-

-

-### License

-Licensed under the CC BY-NC-SA 4.0 license, see [LICENSE](./LICENSE).

-

-

-### Updates

-* 21/03/2020: Updating all experimental results

-* 21/03/2020: Adding pretrained models and results

-* 02/03/2020: Code available!

-* 15/11/2019: Initial release!

-

-## Related Repos

-1. [SoTA-Point-Cloud: Deep Learning for 3D Point Clouds: A Survey](https://github.com/QingyongHu/SoTA-Point-Cloud)

-2. [SensatUrban: Learning Semantics from Urban-Scale Photogrammetric Point Clouds](https://github.com/QingyongHu/SpinNet)

-3. [3D-BoNet: Learning Object Bounding Boxes for 3D Instance Segmentation on Point Clouds](https://github.com/Yang7879/3D-BoNet)

-4. [SpinNet: Learning a General Surface Descriptor for 3D Point Cloud Registration](https://github.com/QingyongHu/SpinNet)

-5. [SQN: Weakly-Supervised Semantic Segmentation of Large-Scale 3D Point Clouds with 1000x Fewer Labels](https://github.com/QingyongHu/SQN)

-

+# Toronto-3D and OpenGF dataset code for RandLA-Net

+

+Code for [Toronto-3D](https://github.com/WeikaiTan/Toronto-3D.git) has been uploaded. Try it for building your own network.

+

+Will release code for OpenGF later

+

+## Train and test RandLA-Net on Toronto-3D

+1. Set up environment and compile the operations - exactly the same as the RandLA-Net environment

+1. Create a folder called `data` and move the `.ply` files into `data/Tronto_3D/original_ply/`

+1. Change parameters according to your preference in `data_prepare_toronto3d.py` and run to preprocess point clouds

+1. Change parameters according to your preference in `helper_tool.ply` to build the network

+1. Train the network by running `python main_Toronto3D.py --mode train`

+1. Test and evaluate on `L002` by running `python main_Toronto3D.py --mode test --test_eval True`

+1. Modify the code to find a good parameter set or test on your own data

+

+## Sample results of Toronto-3D

+The highest results reported are from RandLA-Net in [Hu et al. (2021)](https://doi.org/10.1109/TPAMI.2021.3083288). Here are some results I got on my code with the default parameters. The largest factor in mIoU is the accuracy of *Road Markings*, which is impossible to be classified with XYZ only.

+

+| Features | OA | mIoU | Road | Road mrk. | Natural | Bldg | Util. line | Pole | Car | Fence |

+|----------|----|------|------|-----------|---------|------|------------|------|-----|-------|

+| [Hu et al. (2021)](https://doi.org/10.1109/TPAMI.2021.3083288) | 92.95 | 77.71 | 94.61 | 42.62 | 96.89 | 93.01 | 86.51 | 78.07 | 92.85 | 37.12 |

+| [Hu et al. (2021)](https://doi.org/10.1109/TPAMI.2021.3083288) with RGB| 94.37 | 81.77 | 96.69 | 64.21 | 96.92 | 94.24 | 88.06 | 77.84 | 93.37 | 42.86 |

+|XYZRGBI | 96.57 | 81.00 | 95.61 | 58.04 | 97.22 | 93.45 | 87.58 | 82.64 | 91.06 | 42.39 |

+|XYZRGB | 96.71 | 80.89 | 95.88 | 60.75 | 97.02 | 94.04 | 86.71 | 83.30 | 87.66 | 41.80 |

+|XYZI | 95.65 | 80.03 | 94.59 | 50.14 | 95.90 | 92.76 | 87.70 | 77.77 | 91.10 | 50.30 |

+|XYZ | 94.94 | 74.13 | 93.53 | 12.52 | 96.67 | 92.34 | 86.25 | 80.10 | 88.04 | 43.57 |

diff --git a/RandLANet.py b/RandLANet.py

index bf90997..22bff36 100755

--- a/RandLANet.py

+++ b/RandLANet.py

@@ -7,6 +7,8 @@

import helper_tf_util

import time

+import warnings

+warnings.simplefilter(action="ignore", category=FutureWarning)

def log_out(out_str, f_out):

f_out.write(out_str + '\n')

diff --git a/data_prepare_toronto3d.py b/data_prepare_toronto3d.py

new file mode 100644

index 0000000..8444d0f

--- /dev/null

+++ b/data_prepare_toronto3d.py

@@ -0,0 +1,54 @@

+from sklearn.neighbors import KDTree

+from os.path import join, exists, dirname, abspath

+import numpy as np

+import os, pickle

+import sys

+

+BASE_DIR = dirname(abspath(__file__))

+ROOT_DIR = dirname(BASE_DIR)

+sys.path.append(BASE_DIR)

+sys.path.append(ROOT_DIR)

+from helper_ply import write_ply, read_ply

+from helper_tool import DataProcessing as DP

+

+grid_size = 0.06

+dataset_path = 'data/Toronto_3D'

+train_files = ['L001', 'L003', 'L004']

+val_files = ['L002']

+UTM_OFFSET = [627285, 4841948, 0]

+original_pc_folder = join(dataset_path, 'original_ply')

+sub_pc_folder = join(dataset_path, 'input_{:.3f}'.format(grid_size))

+os.mkdir(sub_pc_folder) if not exists(sub_pc_folder) else None

+

+for pc_path in [join(original_pc_folder, fname + '.ply') for fname in train_files + val_files]:

+ print(pc_path)

+ file_name = pc_path.split('/')[-1][:-4]

+

+ pc = read_ply(pc_path)

+ labels = pc['scalar_Label'].astype(np.uint8)

+ xyz = np.vstack((pc['x'] - UTM_OFFSET[0], pc['y'] - UTM_OFFSET[1], pc['z'] - UTM_OFFSET[2])).T.astype(np.float32)

+ color = np.vstack((pc['red'], pc['green'], pc['blue'])).T.astype(np.uint8)

+ intensity = pc['scalar_Intensity'].astype(np.uint8).reshape(-1,1)

+ # Subsample to save space

+ sub_xyz, sub_colors, sub_labels = DP.grid_sub_sampling(xyz, color, labels, grid_size)

+ _, sub_intensity = DP.grid_sub_sampling(xyz, features=intensity, grid_size=grid_size)

+

+ sub_colors = sub_colors / 255.0

+ sub_intensity = sub_intensity[:,0] / 255.0

+ sub_ply_file = join(sub_pc_folder, file_name + '.ply')

+ write_ply(sub_ply_file, [sub_xyz, sub_colors, sub_intensity, sub_labels], ['x', 'y', 'z', 'red', 'green', 'blue', 'intensity', 'class'])

+

+ search_tree = KDTree(sub_xyz, leaf_size=50)

+ kd_tree_file = join(sub_pc_folder, file_name + '_KDTree.pkl')

+ with open(kd_tree_file, 'wb') as f:

+ pickle.dump(search_tree, f)

+

+ if file_name not in train_files:

+ proj_idx = np.squeeze(search_tree.query(xyz, return_distance=False))

+ proj_idx = proj_idx.astype(np.int32)

+ proj_save = join(sub_pc_folder, file_name + '_proj.pkl')

+ with open(proj_save, 'wb') as f:

+ pickle.dump([proj_idx, labels], f)

+

+

+

diff --git a/helper_tool.py b/helper_tool.py

index 6354f9f..b6f95f7 100755

--- a/helper_tool.py

+++ b/helper_tool.py

@@ -1,4 +1,4 @@

-from open3d import linux as open3d

+import open3d

from os.path import join

import numpy as np

import colorsys, random, os, sys

@@ -14,7 +14,38 @@

import cpp_wrappers.cpp_subsampling.grid_subsampling as cpp_subsampling

import nearest_neighbors.lib.python.nearest_neighbors as nearest_neighbors

+class ConfigToronto3D:

+ k_n = 16 # KNN

+ num_layers = 5 # Number of layers

+ num_points = 65536 # Number of input points

+ num_classes = 8 # Number of valid classes

+ sub_grid_size = 0.06 # preprocess_parameter

+ use_rgb = False # Use RGB

+ use_intensity = False # Use intensity

+

+ batch_size = 4 # batch_size during training

+ val_batch_size = 14 # batch_size during validation and test

+ train_steps = 500 # Number of steps per epochs

+ val_steps = 25 # Number of validation steps per epoch

+

+ sub_sampling_ratio = [4, 4, 4, 4, 2] # sampling ratio of random sampling at each layer

+ d_out = [16, 64, 128, 256, 512] # feature dimension

+

+ noise_init = 3.5 # noise initial parameter

+ max_epoch = 100 # maximum epoch during training

+ learning_rate = 1e-2 # initial learning rate

+ lr_decays = {i: 0.95 for i in range(0, 500)} # decay rate of learning rate

+

+ train_sum_dir = 'train_log'

+ saving = True

+ saving_path = None

+ augment_scale_anisotropic = True

+ augment_symmetries = [True, False, False]

+ augment_rotation = 'vertical'

+ augment_scale_min = 0.8

+ augment_scale_max = 1.2

+ augment_noise = 0.001

class ConfigSemanticKITTI:

k_n = 16 # KNN

num_layers = 4 # Number of layers

@@ -255,7 +286,9 @@ def get_class_weights(dataset_name):

elif dataset_name is 'SemanticKITTI':

num_per_class = np.array([55437630, 320797, 541736, 2578735, 3274484, 552662, 184064, 78858,

240942562, 17294618, 170599734, 6369672, 230413074, 101130274, 476491114,

- 9833174, 129609852, 4506626, 1168181])

+ 9833174, 129609852, 4506626, 1168181], dtype=np.int32)

+ elif dataset_name is 'Toronto3D':

+ num_per_class = np.array([35391894, 1449308, 4650919, 18252779, 589856, 743579, 4311631, 356463], dtype=np.int32)

weight = num_per_class / float(sum(num_per_class))

ce_label_weight = 1 / (weight + 0.02)

return np.expand_dims(ce_label_weight, axis=0)

diff --git a/imgs/S3DIS_area2.gif b/imgs/S3DIS_area2.gif

deleted file mode 100644

index 1df595b..0000000

Binary files a/imgs/S3DIS_area2.gif and /dev/null differ

diff --git a/imgs/S3DIS_area3.gif b/imgs/S3DIS_area3.gif

deleted file mode 100644

index c754f9d..0000000

Binary files a/imgs/S3DIS_area3.gif and /dev/null differ

diff --git a/imgs/Semantic3D-1.gif b/imgs/Semantic3D-1.gif

deleted file mode 100644

index 02f1a95..0000000

Binary files a/imgs/Semantic3D-1.gif and /dev/null differ

diff --git a/imgs/Semantic3D-3.gif b/imgs/Semantic3D-3.gif

deleted file mode 100644

index a93c4b3..0000000

Binary files a/imgs/Semantic3D-3.gif and /dev/null differ

diff --git a/imgs/Semantic3D-4.gif b/imgs/Semantic3D-4.gif

deleted file mode 100644

index 628f718..0000000

Binary files a/imgs/Semantic3D-4.gif and /dev/null differ

diff --git a/imgs/SemanticKITTI-2.gif b/imgs/SemanticKITTI-2.gif

deleted file mode 100644

index 006fadd..0000000

Binary files a/imgs/SemanticKITTI-2.gif and /dev/null differ

diff --git a/jobs_6_fold_cv_s3dis.sh b/jobs_6_fold_cv_s3dis.sh

deleted file mode 100644

index eb14c8c..0000000

--- a/jobs_6_fold_cv_s3dis.sh

+++ /dev/null

@@ -1,14 +0,0 @@

-python -B main_S3DIS.py --gpu 0 --mode train --test_area 1

-python -B main_S3DIS.py --gpu 0 --mode test --test_area 1

-python -B main_S3DIS.py --gpu 0 --mode train --test_area 2

-python -B main_S3DIS.py --gpu 0 --mode test --test_area 2

-python -B main_S3DIS.py --gpu 0 --mode train --test_area 3

-python -B main_S3DIS.py --gpu 0 --mode test --test_area 3

-python -B main_S3DIS.py --gpu 0 --mode train --test_area 4

-python -B main_S3DIS.py --gpu 0 --mode test --test_area 4

-python -B main_S3DIS.py --gpu 0 --mode train --test_area 5

-python -B main_S3DIS.py --gpu 0 --mode test --test_area 5

-python -B main_S3DIS.py --gpu 0 --mode train --test_area 6

-python -B main_S3DIS.py --gpu 0 --mode test --test_area 6

-

-

diff --git a/jobs_test_semantickitti.sh b/jobs_test_semantickitti.sh

deleted file mode 100644

index 340c66c..0000000

--- a/jobs_test_semantickitti.sh

+++ /dev/null

@@ -1,12 +0,0 @@

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 11

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 12

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 13

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 14

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 15

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 16

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 17

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 18

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 19

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 20

-python -B main_SemanticKITTI.py --gpu 0 --mode test --test_area 21

-

diff --git a/main_S3DIS.py b/main_S3DIS.py

deleted file mode 100755

index 7e21834..0000000

--- a/main_S3DIS.py

+++ /dev/null

@@ -1,269 +0,0 @@

-from os.path import join

-from RandLANet import Network

-from tester_S3DIS import ModelTester

-from helper_ply import read_ply

-from helper_tool import ConfigS3DIS as cfg

-from helper_tool import DataProcessing as DP

-from helper_tool import Plot

-import tensorflow as tf

-import numpy as np

-import time, pickle, argparse, glob, os

-

-

-class S3DIS:

- def __init__(self, test_area_idx):

- self.name = 'S3DIS'

- self.path = '/data/S3DIS'

- self.label_to_names = {0: 'ceiling',

- 1: 'floor',

- 2: 'wall',

- 3: 'beam',

- 4: 'column',

- 5: 'window',

- 6: 'door',

- 7: 'table',

- 8: 'chair',

- 9: 'sofa',

- 10: 'bookcase',

- 11: 'board',

- 12: 'clutter'}

- self.num_classes = len(self.label_to_names)

- self.label_values = np.sort([k for k, v in self.label_to_names.items()])

- self.label_to_idx = {l: i for i, l in enumerate(self.label_values)}

- self.ignored_labels = np.array([])

-

- self.val_split = 'Area_' + str(test_area_idx)

- self.all_files = glob.glob(join(self.path, 'original_ply', '*.ply'))

-

- # Initiate containers

- self.val_proj = []

- self.val_labels = []

- self.possibility = {}

- self.min_possibility = {}

- self.input_trees = {'training': [], 'validation': []}

- self.input_colors = {'training': [], 'validation': []}

- self.input_labels = {'training': [], 'validation': []}

- self.input_names = {'training': [], 'validation': []}

- self.load_sub_sampled_clouds(cfg.sub_grid_size)

-

- def load_sub_sampled_clouds(self, sub_grid_size):

- tree_path = join(self.path, 'input_{:.3f}'.format(sub_grid_size))

- for i, file_path in enumerate(self.all_files):

- t0 = time.time()

- cloud_name = file_path.split('/')[-1][:-4]

- if self.val_split in cloud_name:

- cloud_split = 'validation'

- else:

- cloud_split = 'training'

-

- # Name of the input files

- kd_tree_file = join(tree_path, '{:s}_KDTree.pkl'.format(cloud_name))

- sub_ply_file = join(tree_path, '{:s}.ply'.format(cloud_name))

-

- data = read_ply(sub_ply_file)

- sub_colors = np.vstack((data['red'], data['green'], data['blue'])).T

- sub_labels = data['class']

-

- # Read pkl with search tree

- with open(kd_tree_file, 'rb') as f:

- search_tree = pickle.load(f)

-

- self.input_trees[cloud_split] += [search_tree]

- self.input_colors[cloud_split] += [sub_colors]

- self.input_labels[cloud_split] += [sub_labels]

- self.input_names[cloud_split] += [cloud_name]

-

- size = sub_colors.shape[0] * 4 * 7

- print('{:s} {:.1f} MB loaded in {:.1f}s'.format(kd_tree_file.split('/')[-1], size * 1e-6, time.time() - t0))

-

- print('\nPreparing reprojected indices for testing')

-

- # Get validation and test reprojected indices

- for i, file_path in enumerate(self.all_files):

- t0 = time.time()

- cloud_name = file_path.split('/')[-1][:-4]

-

- # Validation projection and labels

- if self.val_split in cloud_name:

- proj_file = join(tree_path, '{:s}_proj.pkl'.format(cloud_name))

- with open(proj_file, 'rb') as f:

- proj_idx, labels = pickle.load(f)

- self.val_proj += [proj_idx]

- self.val_labels += [labels]

- print('{:s} done in {:.1f}s'.format(cloud_name, time.time() - t0))

-

- # Generate the input data flow

- def get_batch_gen(self, split):

- if split == 'training':

- num_per_epoch = cfg.train_steps * cfg.batch_size

- elif split == 'validation':

- num_per_epoch = cfg.val_steps * cfg.val_batch_size

-

- self.possibility[split] = []

- self.min_possibility[split] = []

- # Random initialize

- for i, tree in enumerate(self.input_colors[split]):

- self.possibility[split] += [np.random.rand(tree.data.shape[0]) * 1e-3]

- self.min_possibility[split] += [float(np.min(self.possibility[split][-1]))]

-

- def spatially_regular_gen():

- # Generator loop

- for i in range(num_per_epoch):

-

- # Choose the cloud with the lowest probability

- cloud_idx = int(np.argmin(self.min_possibility[split]))

-

- # choose the point with the minimum of possibility in the cloud as query point

- point_ind = np.argmin(self.possibility[split][cloud_idx])

-

- # Get all points within the cloud from tree structure

- points = np.array(self.input_trees[split][cloud_idx].data, copy=False)

-

- # Center point of input region

- center_point = points[point_ind, :].reshape(1, -1)

-

- # Add noise to the center point

- noise = np.random.normal(scale=cfg.noise_init / 10, size=center_point.shape)

- pick_point = center_point + noise.astype(center_point.dtype)

-

- # Check if the number of points in the selected cloud is less than the predefined num_points

- if len(points) < cfg.num_points:

- # Query all points within the cloud

- queried_idx = self.input_trees[split][cloud_idx].query(pick_point, k=len(points))[1][0]

- else:

- # Query the predefined number of points

- queried_idx = self.input_trees[split][cloud_idx].query(pick_point, k=cfg.num_points)[1][0]

-

- # Shuffle index

- queried_idx = DP.shuffle_idx(queried_idx)

- # Get corresponding points and colors based on the index

- queried_pc_xyz = points[queried_idx]

- queried_pc_xyz = queried_pc_xyz - pick_point

- queried_pc_colors = self.input_colors[split][cloud_idx][queried_idx]

- queried_pc_labels = self.input_labels[split][cloud_idx][queried_idx]

-

- # Update the possibility of the selected points

- dists = np.sum(np.square((points[queried_idx] - pick_point).astype(np.float32)), axis=1)

- delta = np.square(1 - dists / np.max(dists))

- self.possibility[split][cloud_idx][queried_idx] += delta

- self.min_possibility[split][cloud_idx] = float(np.min(self.possibility[split][cloud_idx]))

-

- # up_sampled with replacement

- if len(points) < cfg.num_points:

- queried_pc_xyz, queried_pc_colors, queried_idx, queried_pc_labels = \

- DP.data_aug(queried_pc_xyz, queried_pc_colors, queried_pc_labels, queried_idx, cfg.num_points)

-

- if True:

- yield (queried_pc_xyz.astype(np.float32),

- queried_pc_colors.astype(np.float32),

- queried_pc_labels,

- queried_idx.astype(np.int32),

- np.array([cloud_idx], dtype=np.int32))

-

- gen_func = spatially_regular_gen

- gen_types = (tf.float32, tf.float32, tf.int32, tf.int32, tf.int32)

- gen_shapes = ([None, 3], [None, 3], [None], [None], [None])

- return gen_func, gen_types, gen_shapes

-

- @staticmethod

- def get_tf_mapping2():

- # Collect flat inputs

- def tf_map(batch_xyz, batch_features, batch_labels, batch_pc_idx, batch_cloud_idx):

- batch_features = tf.concat([batch_xyz, batch_features], axis=-1)

- input_points = []

- input_neighbors = []

- input_pools = []

- input_up_samples = []

-

- for i in range(cfg.num_layers):

- neighbour_idx = tf.py_func(DP.knn_search, [batch_xyz, batch_xyz, cfg.k_n], tf.int32)

- sub_points = batch_xyz[:, :tf.shape(batch_xyz)[1] // cfg.sub_sampling_ratio[i], :]

- pool_i = neighbour_idx[:, :tf.shape(batch_xyz)[1] // cfg.sub_sampling_ratio[i], :]

- up_i = tf.py_func(DP.knn_search, [sub_points, batch_xyz, 1], tf.int32)

- input_points.append(batch_xyz)

- input_neighbors.append(neighbour_idx)

- input_pools.append(pool_i)

- input_up_samples.append(up_i)

- batch_xyz = sub_points

-

- input_list = input_points + input_neighbors + input_pools + input_up_samples

- input_list += [batch_features, batch_labels, batch_pc_idx, batch_cloud_idx]

-

- return input_list

-

- return tf_map

-

- def init_input_pipeline(self):

- print('Initiating input pipelines')

- cfg.ignored_label_inds = [self.label_to_idx[ign_label] for ign_label in self.ignored_labels]

- gen_function, gen_types, gen_shapes = self.get_batch_gen('training')

- gen_function_val, _, _ = self.get_batch_gen('validation')

- self.train_data = tf.data.Dataset.from_generator(gen_function, gen_types, gen_shapes)

- self.val_data = tf.data.Dataset.from_generator(gen_function_val, gen_types, gen_shapes)

-

- self.batch_train_data = self.train_data.batch(cfg.batch_size)

- self.batch_val_data = self.val_data.batch(cfg.val_batch_size)

- map_func = self.get_tf_mapping2()

-

- self.batch_train_data = self.batch_train_data.map(map_func=map_func)

- self.batch_val_data = self.batch_val_data.map(map_func=map_func)

-

- self.batch_train_data = self.batch_train_data.prefetch(cfg.batch_size)

- self.batch_val_data = self.batch_val_data.prefetch(cfg.val_batch_size)

-

- iter = tf.data.Iterator.from_structure(self.batch_train_data.output_types, self.batch_train_data.output_shapes)

- self.flat_inputs = iter.get_next()

- self.train_init_op = iter.make_initializer(self.batch_train_data)

- self.val_init_op = iter.make_initializer(self.batch_val_data)

-

-

-if __name__ == '__main__':

- parser = argparse.ArgumentParser()

- parser.add_argument('--gpu', type=int, default=0, help='the number of GPUs to use [default: 0]')

- parser.add_argument('--test_area', type=int, default=5, help='Which area to use for test, option: 1-6 [default: 5]')

- parser.add_argument('--mode', type=str, default='train', help='options: train, test, vis')

- parser.add_argument('--model_path', type=str, default='None', help='pretrained model path')

- FLAGS = parser.parse_args()

-

- os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"

- os.environ['CUDA_VISIBLE_DEVICES'] = str(FLAGS.gpu)

- os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

- Mode = FLAGS.mode

-

- test_area = FLAGS.test_area

- dataset = S3DIS(test_area)

- dataset.init_input_pipeline()

-

- if Mode == 'train':

- model = Network(dataset, cfg)

- model.train(dataset)

- elif Mode == 'test':

- cfg.saving = False

- model = Network(dataset, cfg)

- if FLAGS.model_path is not 'None':

- chosen_snap = FLAGS.model_path

- else:

- chosen_snapshot = -1

- logs = np.sort([os.path.join('results', f) for f in os.listdir('results') if f.startswith('Log')])

- chosen_folder = logs[-1]

- snap_path = join(chosen_folder, 'snapshots')

- snap_steps = [int(f[:-5].split('-')[-1]) for f in os.listdir(snap_path) if f[-5:] == '.meta']

- chosen_step = np.sort(snap_steps)[-1]

- chosen_snap = os.path.join(snap_path, 'snap-{:d}'.format(chosen_step))

- tester = ModelTester(model, dataset, restore_snap=chosen_snap)

- tester.test(model, dataset)

- else:

- ##################

- # Visualize data #

- ##################

-

- with tf.Session() as sess:

- sess.run(tf.global_variables_initializer())

- sess.run(dataset.train_init_op)

- while True:

- flat_inputs = sess.run(dataset.flat_inputs)

- pc_xyz = flat_inputs[0]

- sub_pc_xyz = flat_inputs[1]

- labels = flat_inputs[21]

- Plot.draw_pc_sem_ins(pc_xyz[0, :, :], labels[0, :])

- Plot.draw_pc_sem_ins(sub_pc_xyz[0, :, :], labels[0, 0:np.shape(sub_pc_xyz)[1]])

diff --git a/main_SemanticKITTI.py b/main_SemanticKITTI.py

deleted file mode 100644

index 07fd6c5..0000000

--- a/main_SemanticKITTI.py

+++ /dev/null

@@ -1,240 +0,0 @@

-from helper_tool import DataProcessing as DP

-from helper_tool import ConfigSemanticKITTI as cfg

-from helper_tool import Plot

-from os.path import join

-from RandLANet import Network

-from tester_SemanticKITTI import ModelTester

-import tensorflow as tf

-import numpy as np

-import os, argparse, pickle

-

-

-class SemanticKITTI:

- def __init__(self, test_id):

- self.name = 'SemanticKITTI'

- self.dataset_path = '/data/semantic_kitti/dataset/sequences_0.06'

- self.label_to_names = {0: 'unlabeled',

- 1: 'car',

- 2: 'bicycle',

- 3: 'motorcycle',

- 4: 'truck',

- 5: 'other-vehicle',

- 6: 'person',

- 7: 'bicyclist',

- 8: 'motorcyclist',

- 9: 'road',

- 10: 'parking',

- 11: 'sidewalk',

- 12: 'other-ground',

- 13: 'building',

- 14: 'fence',

- 15: 'vegetation',

- 16: 'trunk',

- 17: 'terrain',

- 18: 'pole',

- 19: 'traffic-sign'}

- self.num_classes = len(self.label_to_names)

- self.label_values = np.sort([k for k, v in self.label_to_names.items()])

- self.label_to_idx = {l: i for i, l in enumerate(self.label_values)}

- self.ignored_labels = np.sort([0])

-

- self.val_split = '08'

-

- self.seq_list = np.sort(os.listdir(self.dataset_path))

- self.test_scan_number = str(test_id)

- self.train_list, self.val_list, self.test_list = DP.get_file_list(self.dataset_path,

- self.test_scan_number)

- self.train_list = DP.shuffle_list(self.train_list)

- self.val_list = DP.shuffle_list(self.val_list)

-

- self.possibility = []

- self.min_possibility = []

-

- # Generate the input data flow

- def get_batch_gen(self, split):

- if split == 'training':

- num_per_epoch = int(len(self.train_list) / cfg.batch_size) * cfg.batch_size

- path_list = self.train_list

- elif split == 'validation':

- num_per_epoch = int(len(self.val_list) / cfg.val_batch_size) * cfg.val_batch_size

- cfg.val_steps = int(len(self.val_list) / cfg.batch_size)

- path_list = self.val_list

- elif split == 'test':

- num_per_epoch = int(len(self.test_list) / cfg.val_batch_size) * cfg.val_batch_size * 4

- path_list = self.test_list

- for test_file_name in path_list:

- points = np.load(test_file_name)

- self.possibility += [np.random.rand(points.shape[0]) * 1e-3]

- self.min_possibility += [float(np.min(self.possibility[-1]))]

-

- def spatially_regular_gen():

- # Generator loop

- for i in range(num_per_epoch):

- if split != 'test':

- cloud_ind = i

- pc_path = path_list[cloud_ind]

- pc, tree, labels = self.get_data(pc_path)

- # crop a small point cloud

- pick_idx = np.random.choice(len(pc), 1)

- selected_pc, selected_labels, selected_idx = self.crop_pc(pc, labels, tree, pick_idx)

- else:

- cloud_ind = int(np.argmin(self.min_possibility))

- pick_idx = np.argmin(self.possibility[cloud_ind])

- pc_path = path_list[cloud_ind]

- pc, tree, labels = self.get_data(pc_path)

- selected_pc, selected_labels, selected_idx = self.crop_pc(pc, labels, tree, pick_idx)

-

- # update the possibility of the selected pc

- dists = np.sum(np.square((selected_pc - pc[pick_idx]).astype(np.float32)), axis=1)

- delta = np.square(1 - dists / np.max(dists))

- self.possibility[cloud_ind][selected_idx] += delta

- self.min_possibility[cloud_ind] = np.min(self.possibility[cloud_ind])

-

- if True:

- yield (selected_pc.astype(np.float32),

- selected_labels.astype(np.int32),

- selected_idx.astype(np.int32),

- np.array([cloud_ind], dtype=np.int32))

-

- gen_func = spatially_regular_gen

- gen_types = (tf.float32, tf.int32, tf.int32, tf.int32)

- gen_shapes = ([None, 3], [None], [None], [None])

-

- return gen_func, gen_types, gen_shapes

-

- def get_data(self, file_path):

- seq_id = file_path.split('/')[-3]

- frame_id = file_path.split('/')[-1][:-4]

- kd_tree_path = join(self.dataset_path, seq_id, 'KDTree', frame_id + '.pkl')

- # Read pkl with search tree

- with open(kd_tree_path, 'rb') as f:

- search_tree = pickle.load(f)

- points = np.array(search_tree.data, copy=False)

- # Load labels

- if int(seq_id) >= 11:

- labels = np.zeros(np.shape(points)[0], dtype=np.uint8)

- else:

- label_path = join(self.dataset_path, seq_id, 'labels', frame_id + '.npy')

- labels = np.squeeze(np.load(label_path))

- return points, search_tree, labels

-

- @staticmethod

- def crop_pc(points, labels, search_tree, pick_idx):

- # crop a fixed size point cloud for training

- center_point = points[pick_idx, :].reshape(1, -1)

- select_idx = search_tree.query(center_point, k=cfg.num_points)[1][0]

- select_idx = DP.shuffle_idx(select_idx)

- select_points = points[select_idx]

- select_labels = labels[select_idx]

- return select_points, select_labels, select_idx

-

- @staticmethod

- def get_tf_mapping2():

-

- def tf_map(batch_pc, batch_label, batch_pc_idx, batch_cloud_idx):

- features = batch_pc

- input_points = []

- input_neighbors = []

- input_pools = []

- input_up_samples = []

-

- for i in range(cfg.num_layers):

- neighbour_idx = tf.py_func(DP.knn_search, [batch_pc, batch_pc, cfg.k_n], tf.int32)

- sub_points = batch_pc[:, :tf.shape(batch_pc)[1] // cfg.sub_sampling_ratio[i], :]

- pool_i = neighbour_idx[:, :tf.shape(batch_pc)[1] // cfg.sub_sampling_ratio[i], :]

- up_i = tf.py_func(DP.knn_search, [sub_points, batch_pc, 1], tf.int32)

- input_points.append(batch_pc)

- input_neighbors.append(neighbour_idx)

- input_pools.append(pool_i)

- input_up_samples.append(up_i)

- batch_pc = sub_points

-

- input_list = input_points + input_neighbors + input_pools + input_up_samples

- input_list += [features, batch_label, batch_pc_idx, batch_cloud_idx]

-

- return input_list

-

- return tf_map

-

- def init_input_pipeline(self):

- print('Initiating input pipelines')

- cfg.ignored_label_inds = [self.label_to_idx[ign_label] for ign_label in self.ignored_labels]

- gen_function, gen_types, gen_shapes = self.get_batch_gen('training')

- gen_function_val, _, _ = self.get_batch_gen('validation')

- gen_function_test, _, _ = self.get_batch_gen('test')

-

- self.train_data = tf.data.Dataset.from_generator(gen_function, gen_types, gen_shapes)

- self.val_data = tf.data.Dataset.from_generator(gen_function_val, gen_types, gen_shapes)

- self.test_data = tf.data.Dataset.from_generator(gen_function_test, gen_types, gen_shapes)

-

- self.batch_train_data = self.train_data.batch(cfg.batch_size)

- self.batch_val_data = self.val_data.batch(cfg.val_batch_size)

- self.batch_test_data = self.test_data.batch(cfg.val_batch_size)

-

- map_func = self.get_tf_mapping2()

-

- self.batch_train_data = self.batch_train_data.map(map_func=map_func)

- self.batch_val_data = self.batch_val_data.map(map_func=map_func)

- self.batch_test_data = self.batch_test_data.map(map_func=map_func)

-

- self.batch_train_data = self.batch_train_data.prefetch(cfg.batch_size)

- self.batch_val_data = self.batch_val_data.prefetch(cfg.val_batch_size)

- self.batch_test_data = self.batch_test_data.prefetch(cfg.val_batch_size)

-

- iter = tf.data.Iterator.from_structure(self.batch_train_data.output_types, self.batch_train_data.output_shapes)

- self.flat_inputs = iter.get_next()

- self.train_init_op = iter.make_initializer(self.batch_train_data)

- self.val_init_op = iter.make_initializer(self.batch_val_data)

- self.test_init_op = iter.make_initializer(self.batch_test_data)

-

-

-if __name__ == '__main__':

- parser = argparse.ArgumentParser()

- parser.add_argument('--gpu', type=int, default=0, help='the number of GPUs to use [default: 0]')

- parser.add_argument('--mode', type=str, default='train', help='options: train, test, vis')

- parser.add_argument('--test_area', type=str, default='14', help='options: 08, 11,12,13,14,15,16,17,18,19,20,21')

- parser.add_argument('--model_path', type=str, default='None', help='pretrained model path')

- FLAGS = parser.parse_args()

-

- os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"

- os.environ['CUDA_VISIBLE_DEVICES'] = str(FLAGS.gpu)

- os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

- Mode = FLAGS.mode

-

- test_area = FLAGS.test_area

- dataset = SemanticKITTI(test_area)

- dataset.init_input_pipeline()

-

- if Mode == 'train':

- model = Network(dataset, cfg)

- model.train(dataset)

- elif Mode == 'test':

- cfg.saving = False

- model = Network(dataset, cfg)

- if FLAGS.model_path is not 'None':

- chosen_snap = FLAGS.model_path

- else:

- chosen_snapshot = -1

- logs = np.sort([os.path.join('results', f) for f in os.listdir('results') if f.startswith('Log')])

- chosen_folder = logs[-1]

- snap_path = join(chosen_folder, 'snapshots')

- snap_steps = [int(f[:-5].split('-')[-1]) for f in os.listdir(snap_path) if f[-5:] == '.meta']

- chosen_step = np.sort(snap_steps)[-1]

- chosen_snap = os.path.join(snap_path, 'snap-{:d}'.format(chosen_step))

- tester = ModelTester(model, dataset, restore_snap=chosen_snap)

- tester.test(model, dataset)

- else:

- ##################

- # Visualize data #

- ##################

-

- with tf.Session() as sess:

- sess.run(tf.global_variables_initializer())

- sess.run(dataset.train_init_op)

- while True:

- flat_inputs = sess.run(dataset.flat_inputs)

- pc_xyz = flat_inputs[0]

- sub_pc_xyz = flat_inputs[1]

- labels = flat_inputs[17]

- Plot.draw_pc_sem_ins(pc_xyz[0, :, :], labels[0, :])

- Plot.draw_pc_sem_ins(sub_pc_xyz[0, :, :], labels[0, 0:np.shape(sub_pc_xyz)[1]])

diff --git a/main_Semantic3D.py b/main_Toronto3D.py

similarity index 70%

rename from main_Semantic3D.py

rename to main_Toronto3D.py

index 6eb9c7b..3d14f7d 100644

--- a/main_Semantic3D.py

+++ b/main_Toronto3D.py

@@ -1,60 +1,45 @@

from os.path import join, exists

from RandLANet import Network

-from tester_Semantic3D import ModelTester

+from tester_Toronto3D import ModelTester

from helper_ply import read_ply

from helper_tool import Plot

from helper_tool import DataProcessing as DP

-from helper_tool import ConfigSemantic3D as cfg

+from helper_tool import ConfigToronto3D as cfg

import tensorflow as tf

import numpy as np

import pickle, argparse, os

-class Semantic3D:

- def __init__(self):

- self.name = 'Semantic3D'

- self.path = '/data/semantic3d'

- self.label_to_names = {0: 'unlabeled',

- 1: 'man-made terrain',

- 2: 'natural terrain',

- 3: 'high vegetation',

- 4: 'low vegetation',

- 5: 'buildings',

- 6: 'hard scape',

- 7: 'scanning artefacts',

- 8: 'cars'}

+class Toronto3D:

+ def __init__(self, mode='train'):

+ self.name = 'Toronto3D'

+ self.path = 'data/Toronto_3D'

+ self.label_to_names = {0: 'unclassified',

+ 1: 'Ground',

+ 2: 'Road marking',

+ 3: 'Natural',

+ 4: 'Building',

+ 5: 'Utility line',

+ 6: 'Pole',

+ 7: 'Car',

+ 8: 'Fence'}

self.num_classes = len(self.label_to_names)

self.label_values = np.sort([k for k, v in self.label_to_names.items()])

self.label_to_idx = {l: i for i, l in enumerate(self.label_values)}

self.ignored_labels = np.sort([0])

- self.original_folder = join(self.path, 'original_data')

self.full_pc_folder = join(self.path, 'original_ply')

- self.sub_pc_folder = join(self.path, 'input_{:.3f}'.format(cfg.sub_grid_size))

-

- # Following KPConv to do the train-validation split

- self.all_splits = [0, 1, 4, 5, 3, 4, 3, 0, 1, 2, 3, 4, 2, 0, 5]

- self.val_split = 1

# Initial training-validation-testing files

- self.train_files = []

- self.val_files = []

- self.test_files = []

- cloud_names = [file_name[:-4] for file_name in os.listdir(self.original_folder) if file_name[-4:] == '.txt']

- for pc_name in cloud_names:

- if exists(join(self.original_folder, pc_name + '.labels')):

- self.train_files.append(join(self.sub_pc_folder, pc_name + '.ply'))

- else:

- self.test_files.append(join(self.full_pc_folder, pc_name + '.ply'))

-

- self.train_files = np.sort(self.train_files)

- self.test_files = np.sort(self.test_files)

+ self.train_files = ['L001', 'L003', 'L004']

+ self.val_files = ['L002']

+ self.test_files = ['L002']

- for i, file_path in enumerate(self.train_files):

- if self.all_splits[i] == self.val_split:

- self.val_files.append(file_path)

+ self.val_split = 3

- self.train_files = np.sort([x for x in self.train_files if x not in self.val_files])

+ self.train_files = [os.path.join(self.full_pc_folder, files + '.ply') for files in self.train_files]

+ self.val_files = [os.path.join(self.full_pc_folder, files + '.ply') for files in self.val_files]

+ self.test_files = [os.path.join(self.full_pc_folder, files + '.ply') for files in self.test_files]

# Initiate containers

self.val_proj = []

@@ -69,44 +54,26 @@ def __init__(self):

self.input_colors = {'training': [], 'validation': [], 'test': []}

self.input_labels = {'training': [], 'validation': []}

- # Ascii files dict for testing

- self.ascii_files = {

- 'MarketplaceFeldkirch_Station4_rgb_intensity-reduced.ply': 'marketsquarefeldkirch4-reduced.labels',

- 'sg27_station10_rgb_intensity-reduced.ply': 'sg27_10-reduced.labels',

- 'sg28_Station2_rgb_intensity-reduced.ply': 'sg28_2-reduced.labels',

- 'StGallenCathedral_station6_rgb_intensity-reduced.ply': 'stgallencathedral6-reduced.labels',

- 'birdfountain_station1_xyz_intensity_rgb.ply': 'birdfountain1.labels',

- 'castleblatten_station1_intensity_rgb.ply': 'castleblatten1.labels',

- 'castleblatten_station5_xyz_intensity_rgb.ply': 'castleblatten5.labels',

- 'marketplacefeldkirch_station1_intensity_rgb.ply': 'marketsquarefeldkirch1.labels',

- 'marketplacefeldkirch_station4_intensity_rgb.ply': 'marketsquarefeldkirch4.labels',

- 'marketplacefeldkirch_station7_intensity_rgb.ply': 'marketsquarefeldkirch7.labels',

- 'sg27_station10_intensity_rgb.ply': 'sg27_10.labels',

- 'sg27_station3_intensity_rgb.ply': 'sg27_3.labels',

- 'sg27_station6_intensity_rgb.ply': 'sg27_6.labels',

- 'sg27_station8_intensity_rgb.ply': 'sg27_8.labels',

- 'sg28_station2_intensity_rgb.ply': 'sg28_2.labels',

- 'sg28_station5_xyz_intensity_rgb.ply': 'sg28_5.labels',

- 'stgallencathedral_station1_intensity_rgb.ply': 'stgallencathedral1.labels',

- 'stgallencathedral_station3_intensity_rgb.ply': 'stgallencathedral3.labels',

- 'stgallencathedral_station6_intensity_rgb.ply': 'stgallencathedral6.labels'}

-

- self.load_sub_sampled_clouds(cfg.sub_grid_size)

-

- def load_sub_sampled_clouds(self, sub_grid_size):

+ self.load_sub_sampled_clouds(cfg.sub_grid_size, mode)

+

+ def load_sub_sampled_clouds(self, sub_grid_size, mode):

tree_path = join(self.path, 'input_{:.3f}'.format(sub_grid_size))

- files = np.hstack((self.train_files, self.val_files, self.test_files))

+ if mode == 'test':

+ files = self.test_files

+ else:

+ files = np.hstack((self.train_files, self.val_files))

for i, file_path in enumerate(files):

cloud_name = file_path.split('/')[-1][:-4]

print('Load_pc_' + str(i) + ': ' + cloud_name)

- if file_path in self.val_files:

- cloud_split = 'validation'

- elif file_path in self.train_files:

- cloud_split = 'training'

- else:

+ if mode == 'test':

cloud_split = 'test'

+ else:

+ if file_path in self.val_files:

+ cloud_split = 'validation'

+ else:

+ cloud_split = 'training'

# Name of the input files

kd_tree_file = join(tree_path, '{:s}_KDTree.pkl'.format(cloud_name))

@@ -114,7 +81,15 @@ def load_sub_sampled_clouds(self, sub_grid_size):

# read ply with data

data = read_ply(sub_ply_file)

- sub_colors = np.vstack((data['red'], data['green'], data['blue'])).T

+ # read RGB / intensity accoring to configuration

+ if cfg.use_rgb and cfg.use_intensity:

+ sub_colors = np.vstack((data['red'], data['green'], data['blue'], data['intensity'])).T

+ elif cfg.use_rgb and not cfg.use_intensity:

+ sub_colors = np.vstack((data['red'], data['green'], data['blue'])).T

+ elif not cfg.use_rgb and cfg.use_intensity:

+ sub_colors = data['intensity'].reshape(-1,1)

+ else:

+ sub_colors = np.ones((data.shape[0],1))

if cloud_split == 'test':

sub_labels = None

else:

@@ -129,29 +104,15 @@ def load_sub_sampled_clouds(self, sub_grid_size):

if cloud_split in ['training', 'validation']:

self.input_labels[cloud_split] += [sub_labels]

- # Get validation and test re_projection indices

- print('\nPreparing reprojection indices for validation and test')

-

- for i, file_path in enumerate(files):

-

- # get cloud name and split

- cloud_name = file_path.split('/')[-1][:-4]

-

- # Validation projection and labels

- if file_path in self.val_files:

- proj_file = join(tree_path, '{:s}_proj.pkl'.format(cloud_name))

- with open(proj_file, 'rb') as f:

- proj_idx, labels = pickle.load(f)

- self.val_proj += [proj_idx]

- self.val_labels += [labels]

-

- # Test projection

- if file_path in self.test_files:

+ # Get test re_projection indices

+ if cloud_split == 'test':

+ print('\nPreparing reprojection indices for {}'.format(cloud_name))

proj_file = join(tree_path, '{:s}_proj.pkl'.format(cloud_name))

with open(proj_file, 'rb') as f:

proj_idx, labels = pickle.load(f)

self.test_proj += [proj_idx]

self.test_labels += [labels]

+

print('finished')

return

@@ -163,6 +124,13 @@ def get_batch_gen(self, split):

num_per_epoch = cfg.val_steps * cfg.val_batch_size

elif split == 'test':

num_per_epoch = cfg.val_steps * cfg.val_batch_size

+

+ # assign number of features according to input

+ n_features = 1 # use xyz only by default

+ if cfg.use_rgb and cfg.use_intensity:

+ n_features = 4

+ elif cfg.use_rgb and not cfg.use_intensity:

+ n_features = 3

# Reset possibility

self.possibility[split] = []

@@ -214,6 +182,7 @@ def spatially_regular_gen():

queried_pc_labels = self.input_labels[split][cloud_idx][query_idx]

queried_pc_labels = np.array([self.label_to_idx[l] for l in queried_pc_labels])

queried_pt_weight = np.array([self.class_weight[split][0][n] for n in queried_pc_labels])

+

# Update the possibility of the selected points

dists = np.sum(np.square((points[query_idx] - pick_point).astype(np.float32)), axis=1)

@@ -230,7 +199,7 @@ def spatially_regular_gen():

gen_func = spatially_regular_gen

gen_types = (tf.float32, tf.float32, tf.int32, tf.int32, tf.int32)

- gen_shapes = ([None, 3], [None, 3], [None], [None], [None])

+ gen_shapes = ([None, 3], [None, n_features], [None], [None], [None])

return gen_func, gen_types, gen_shapes

def get_tf_mapping(self):

@@ -299,45 +268,53 @@ def tf_augment_input(inputs):

noise = tf.random_normal(tf.shape(transformed_xyz), stddev=cfg.augment_noise)

transformed_xyz = transformed_xyz + noise

- rgb = features[:, :3]

- stacked_features = tf.concat([transformed_xyz, rgb], axis=-1)

+ stacked_features = tf.concat([transformed_xyz, features], axis=-1)

return stacked_features

- def init_input_pipeline(self):

- print('Initiating input pipelines')

+ def init_train_pipeline(self):

+ print('Initiating training pipelines')

cfg.ignored_label_inds = [self.label_to_idx[ign_label] for ign_label in self.ignored_labels]

gen_function, gen_types, gen_shapes = self.get_batch_gen('training')

gen_function_val, _, _ = self.get_batch_gen('validation')

- gen_function_test, _, _ = self.get_batch_gen('test')

self.train_data = tf.data.Dataset.from_generator(gen_function, gen_types, gen_shapes)

self.val_data = tf.data.Dataset.from_generator(gen_function_val, gen_types, gen_shapes)

- self.test_data = tf.data.Dataset.from_generator(gen_function_test, gen_types, gen_shapes)

self.batch_train_data = self.train_data.batch(cfg.batch_size)

self.batch_val_data = self.val_data.batch(cfg.val_batch_size)

- self.batch_test_data = self.test_data.batch(cfg.val_batch_size)

map_func = self.get_tf_mapping()

self.batch_train_data = self.batch_train_data.map(map_func=map_func)

self.batch_val_data = self.batch_val_data.map(map_func=map_func)

- self.batch_test_data = self.batch_test_data.map(map_func=map_func)

self.batch_train_data = self.batch_train_data.prefetch(cfg.batch_size)

self.batch_val_data = self.batch_val_data.prefetch(cfg.val_batch_size)

- self.batch_test_data = self.batch_test_data.prefetch(cfg.val_batch_size)

iter = tf.data.Iterator.from_structure(self.batch_train_data.output_types, self.batch_train_data.output_shapes)

self.flat_inputs = iter.get_next()

self.train_init_op = iter.make_initializer(self.batch_train_data)

self.val_init_op = iter.make_initializer(self.batch_val_data)

- self.test_init_op = iter.make_initializer(self.batch_test_data)

+ def init_test_pipeline(self):

+ print('Initiating testing pipelines')

+ cfg.ignored_label_inds = [self.label_to_idx[ign_label] for ign_label in self.ignored_labels]

+ gen_function_test,gen_types, gen_shapes = self.get_batch_gen('test')

+

+ self.test_data = tf.data.Dataset.from_generator(gen_function_test, gen_types, gen_shapes)

+ self.batch_test_data = self.test_data.batch(cfg.val_batch_size)

+ map_func = self.get_tf_mapping()

+ self.batch_test_data = self.batch_test_data.map(map_func=map_func)

+ self.batch_test_data = self.batch_test_data.prefetch(cfg.val_batch_size)

+

+ iter = tf.data.Iterator.from_structure(self.batch_test_data.output_types, self.batch_test_data.output_shapes)

+ self.flat_inputs = iter.get_next()

+ self.test_init_op = iter.make_initializer(self.batch_test_data)

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--gpu', type=int, default=0, help='the number of GPUs to use [default: 0]')

- parser.add_argument('--mode', type=str, default='train', help='options: train, test, vis')

+ parser.add_argument('--mode', type=str, default='test', help='options: train, test, vis')

parser.add_argument('--model_path', type=str, default='None', help='pretrained model path')

+ parser.add_argument('--test_eval', type=bool, default=True, help='evaluate test result on L002')

FLAGS = parser.parse_args()

GPU_ID = FLAGS.gpu

@@ -346,14 +323,15 @@ def init_input_pipeline(self):

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

Mode = FLAGS.mode

- dataset = Semantic3D()

- dataset.init_input_pipeline()

+ dataset = Toronto3D(mode=Mode)

if Mode == 'train':

+ dataset.init_train_pipeline()

model = Network(dataset, cfg)

model.train(dataset)

elif Mode == 'test':

cfg.saving = False

+ dataset.init_test_pipeline()

model = Network(dataset, cfg)

if FLAGS.model_path is not 'None':

chosen_snap = FLAGS.model_path

@@ -363,10 +341,13 @@ def init_input_pipeline(self):

chosen_folder = logs[-1]

snap_path = join(chosen_folder, 'snapshots')

snap_steps = [int(f[:-5].split('-')[-1]) for f in os.listdir(snap_path) if f[-5:] == '.meta']

- chosen_step = np.sort(snap_steps)[-1]

+ chosen_step = np.sort(snap_steps)[chosen_snapshot]

chosen_snap = os.path.join(snap_path, 'snap-{:d}'.format(chosen_step))

- tester = ModelTester(model, dataset, restore_snap=chosen_snap)

- tester.test(model, dataset)

+ tester = ModelTester(model, dataset, cfg, restore_snap=chosen_snap)

+ if FLAGS.test_eval:

+ tester.test(model, dataset, eval=True)

+ else:

+ tester.test(model, dataset)

else:

##################

diff --git a/tester_S3DIS.py b/tester_S3DIS.py

deleted file mode 100755

index 47a8183..0000000

--- a/tester_S3DIS.py

+++ /dev/null

@@ -1,178 +0,0 @@

-from os import makedirs

-from os.path import exists, join

-from helper_ply import write_ply

-from sklearn.metrics import confusion_matrix

-from helper_tool import DataProcessing as DP

-import tensorflow as tf

-import numpy as np

-import time

-

-

-def log_out(out_str, log_f_out):

- log_f_out.write(out_str + '\n')

- log_f_out.flush()

- print(out_str)

-

-

-class ModelTester:

- def __init__(self, model, dataset, restore_snap=None):

- my_vars = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES)

- self.saver = tf.train.Saver(my_vars, max_to_keep=100)

- self.Log_file = open('log_test_' + str(dataset.val_split) + '.txt', 'a')

-

- # Create a session for running Ops on the Graph.

- on_cpu = False

- if on_cpu:

- c_proto = tf.ConfigProto(device_count={'GPU': 0})

- else:

- c_proto = tf.ConfigProto()

- c_proto.gpu_options.allow_growth = True

- self.sess = tf.Session(config=c_proto)

- self.sess.run(tf.global_variables_initializer())

-

- # Load trained model

- if restore_snap is not None:

- self.saver.restore(self.sess, restore_snap)

- print("Model restored from " + restore_snap)

-

- self.prob_logits = tf.nn.softmax(model.logits)

-

- # Initiate global prediction over all test clouds

- self.test_probs = [np.zeros(shape=[l.shape[0], model.config.num_classes], dtype=np.float32)

- for l in dataset.input_labels['validation']]

-

- def test(self, model, dataset, num_votes=100):

-

- # Smoothing parameter for votes

- test_smooth = 0.95

-

- # Initialise iterator with validation/test data

- self.sess.run(dataset.val_init_op)

-

- # Number of points per class in validation set

- val_proportions = np.zeros(model.config.num_classes, dtype=np.float32)

- i = 0

- for label_val in dataset.label_values:

- if label_val not in dataset.ignored_labels:

- val_proportions[i] = np.sum([np.sum(labels == label_val) for labels in dataset.val_labels])

- i += 1

-

- # Test saving path

- saving_path = time.strftime('results/Log_%Y-%m-%d_%H-%M-%S', time.gmtime())

- test_path = join('test', saving_path.split('/')[-1])

- makedirs(test_path) if not exists(test_path) else None

- makedirs(join(test_path, 'val_preds')) if not exists(join(test_path, 'val_preds')) else None

-