
# Multiple Shooting

In Multiple Shooting, the training data is split into overlapping intervals.
The solver is then trained on the individual intervals. If the end conditions of
each interval coincide with the initial conditions of the next interval, then
the joined solution is the same as the one obtained by solving on the whole
dataset (without splitting).

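The splitting step described above can be sketched in a few lines of Julia. This is a minimal illustration and not DiffEqFlux's implementation. The helper name `overlapping_groups` is hypothetical. The sketch assumes that consecutive groups share exactly one data point, so the last point of one group is the first point of the next. To cover every data point, the final group may be shorter than `group_size`. When `group_size` equals the number of data points, there is a single group, which is ordinary single shooting.

```julia
# Hypothetical helper (not part of DiffEqFlux): split the indices 1:datasize
# into overlapping groups of `group_size` points, where consecutive groups
# share one point. The last group may be shorter so that every point is covered.
function overlapping_groups(datasize::Integer, group_size::Integer)
    2 <= group_size <= datasize ||
        throw(ArgumentError("group_size must be between 2 and datasize"))
    # Each new group starts on the last point of the previous group
    step = group_size - 1
    return [i:min(datasize, i + step) for i in 1:step:datasize-1]
end

groups = overlapping_groups(30, 3)
println(groups[1:3])                 # the first three groups
println(last(groups))                # the (shorter) final group
println(overlapping_groups(30, 30))  # a single group: single shooting
```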
To ensure that the overlapping points of two consecutive intervals coincide,
we add a penalty term to the loss. For each pair of consecutive groups `i` and
`i+1`, this term is `continuity_strength` times the absolute difference between
the prediction at the last point of group `i` and the prediction at the first
point of group `i+1`.

Note that `continuity_strength` should be a large positive value. This way the
loss is heavily penalized whenever the solver predicts discontinuous values.

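Below is a minimal sketch of the penalized loss described above. It is written in plain Julia on arrays so that it runs without an ODE solver. It makes the following assumptions:

- Each group's prediction is a matrix with one column per time point.
- Consecutive groups overlap in one point.
- The data-fit term is a plain squared error.

The function name `multiple_shoot_loss` and its arguments are hypothetical and are not the signature of DiffEqFlux's `multiple_shoot`. The example shows the effect of a large `continuity_strength`: a jump of 0.5 between two groups adds 50.0 to the loss, which swamps the 0.25 data error.

```julia
# Hypothetical helper: loss for per-group predictions with a continuity penalty.
#   preds[g]  : (n_states x length(ranges[g])) prediction for group g
#   data      : (n_states x datasize) observed data
#   ranges[g] : columns of `data` covered by group g
function multiple_shoot_loss(preds, data, ranges, continuity_strength)
    # Data-fit term: squared error of each group against its slice of the data
    fit = sum(sum(abs2, data[:, r] .- p) for (p, r) in zip(preds, ranges))
    # Continuity term: absolute mismatch between the last point of group i
    # and the first point of group i+1
    continuity = zero(fit)
    for i in 1:length(preds)-1
        continuity += sum(abs, preds[i][:, end] .- preds[i+1][:, 1])
    end
    return fit + continuity_strength * continuity
end

data   = [1.0 2.0 3.0;
          0.0 0.0 0.0]
ranges = [1:2, 2:3]

# Continuous predictions that match the data: no penalty
smooth = [data[:, 1:2], data[:, 2:3]]
println(multiple_shoot_loss(smooth, data, ranges, 100))  # prints 0.0

# Group 2 starts at 2.5 instead of 2.0: a 0.5 jump at the shared point
jumpy = [data[:, 1:2], [2.5 3.0; 0.0 0.0]]
println(multiple_shoot_loss(jumpy, data, ranges, 100))   # prints 50.25
```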
The following is a working demo using Multiple Shooting:

```julia
using DiffEqFlux, OrdinaryDiffEq, Flux, Optim, Plots

# Define initial conditions and timesteps
datasize = 30
u0 = Float32[2.0, 0.0]
tspan = (0.0f0, 5.0f0)
tsteps = range(tspan[1], tspan[2], length = datasize)


# Get the data
function trueODEfunc(du, u, p, t)
    true_A = [-0.1 2.0; -2.0 -0.1]
    du .= ((u.^3)'true_A)'
end
prob_trueode = ODEProblem(trueODEfunc, u0, tspan)
ode_data = Array(solve(prob_trueode, Tsit5(), saveat = tsteps))


# Define the Neural Network
dudt2 = FastChain((x, p) -> x.^3,
                  FastDense(2, 16, tanh),
                  FastDense(16, 2))

# Use the same (Float32) time span as the data
prob_neuralode = NeuralODE(dudt2, tspan, Tsit5(), saveat = tsteps)

# Plot the prediction of each group (shoot) on top of `plt`
function plot_function_for_multiple_shoot(plt, pred, grp_size)
    step = 1
    if grp_size != 1
        step = grp_size - 1
    end
    if grp_size == datasize
        # A single group covering all the data (single shooting)
        scatter!(plt, tsteps, pred[1][1, :], label = "pred")
    else
        for i in 1:step:datasize-grp_size
            # `trunc(Integer, (i-1)/step + 1)` is the group index; it goes 1, 2, ..., N,
            # where N is the total number of groups that can be formed from `ode_data`
            # (for grp_size > 1, N = trunc(Integer, (datasize-1)/(grp_size-1)))
            grp = trunc(Integer, (i - 1) / step + 1)
            scatter!(plt, tsteps[i:i+step], pred[grp][1, :], label = "grp" * string(grp))
        end
    end
end

callback = function (p, l, pred, predictions; doplot = true)
    display(l)
    if doplot
        # plot the original data
        plt = scatter(tsteps[1:size(pred, 2)], ode_data[1, 1:size(pred, 2)], label = "data")

        # plot the different predictions for individual shoot
        plot_function_for_multiple_shoot(plt, predictions, grp_size_param)

        # plot a single shooting performance of our multiple shooting training
        # (this is what the solver predicts after the training is done)
        # scatter!(plt, tsteps[1:size(pred, 2)], pred[1, :], label = "pred")

        display(plot(plt))
    end
    return false
end

# Define parameters for Multiple Shooting
grp_size_param = 1
loss_multiplier_param = 100

neural_ode_f(u, p, t) = dudt2(u, p)
prob_param = ODEProblem(neural_ode_f, u0, tspan, initial_params(dudt2))

function loss_function_param(ode_data, pred)::Float32
    return sum(abs2, (ode_data .- pred))^2
end

function loss_neuralode(p)
    return multiple_shoot(p, ode_data, tsteps, prob_param, loss_function_param, grp_size_param, loss_multiplier_param)
end

result_neuralode = DiffEqFlux.sciml_train(loss_neuralode, prob_neuralode.p,
                                          ADAM(0.05), cb = callback,
                                          maxiters = 300)
callback(result_neuralode.minimizer, loss_neuralode(result_neuralode.minimizer)...; doplot = true)

result_neuralode_2 = DiffEqFlux.sciml_train(loss_neuralode, result_neuralode.minimizer,
                                            BFGS(), cb = callback,
                                            maxiters = 100, allow_f_increases = true)
callback(result_neuralode_2.minimizer, loss_neuralode(result_neuralode_2.minimizer)...; doplot = true)
```

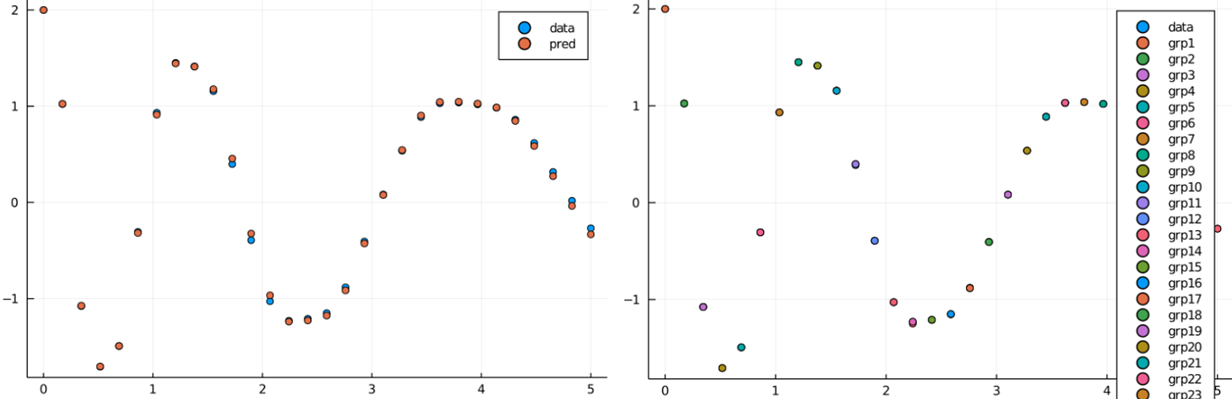

| 104 | +Here's the plots that we get from above |

| 105 | + |

| 106 | + |

| 107 | +The picture on the left shows how well our Neural Network does on a single shoot |

| 108 | +after training it through `multiple_shoot`. |

| 109 | +The picture on the right shows the predictions of each group (Notice that there |

| 110 | +are overlapping points as well. These are the points we are trying to co-incide.) |

| 111 | + |

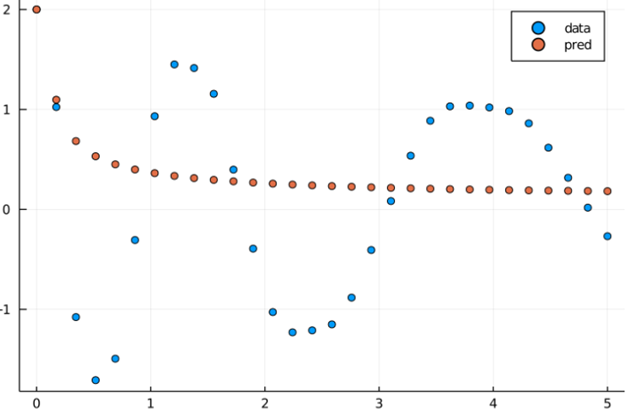

| 112 | +Here is an output with `grp_size` = 30 (which is same as solving on the whole |

| 113 | +interval without splitting also called single shooting) |

| 114 | + |

| 115 | + |

It is clear from the above picture that single shooting does not perform well
on this ODE problem and gets stuck in a local minimum.
