Best settings for making a video game overworld model #1626

Unanswered

Daviljoe193 asked this question in Q&A

Replies: 2 comments 3 replies

-

Send me the dataset. When I have time, I'll try to find the right settings for this type of style.

-

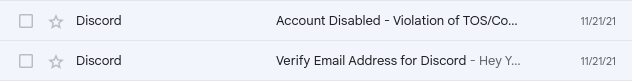

Do you have a Discord? I'll send the LoRA to you there.

-

I've tried for a good while now to get this right, but I just can't: the output is either too different from the dataset, or kind of blocky and weird, almost like something from VQGAN+CLIP. The best I have to show is this model, which is far from optimal: the results on the page were very cherry-picked, and I could barely get it to produce the same outputs the day after, so it was a dead-end model. I don't remember what settings I used to train it. What I do remember is that it was trained back on January 10th (latest everything as of that date, since I always replace the drive cell with `!mkdir -p /content/gdrive/MyDrive`), that the dataset was made up of 20 overworld images (sharing a theme of no NPCs and a completely stock camera angle, with the only somewhat recurring element being a save block in 7 of the 20 images), and that it was trained on top of the 768 2.1 model. This output (which I made after creating the HF page) captures the exact kind of aesthetic I was going for: something that could be mistaken for unused/cut content from a certain game, in this case Paper Mario: The Thousand-Year Door.

TL;DR: a bit over a month ago, I lost days of sleep attempting (and failing) to nail the settings for a video game overworld model, and I'd like some good baseline settings and guidance for a second attempt.

Additional info I just remembered: before that, I tried using just 10/12 images, and while I no longer have the results on hand, I remember them looking really bad. Even at the cost of training time, I'd rather use more images, since that will likely give the model both more thematic consistency and more flexibility.
