Replies: 6 comments 17 replies

-

|

Hi and thanks, use the latest notebook https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb |

Beta Was this translation helpful? Give feedback.

-

|

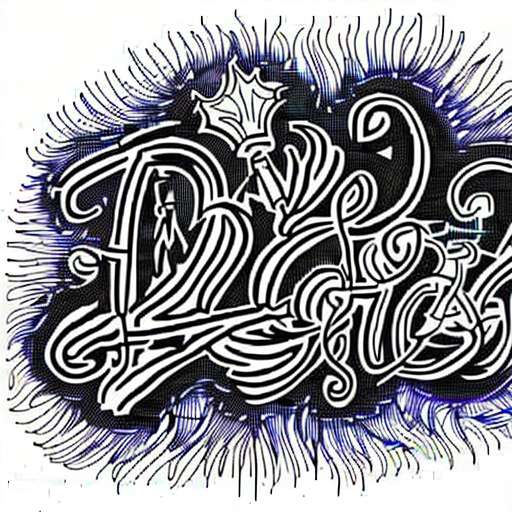

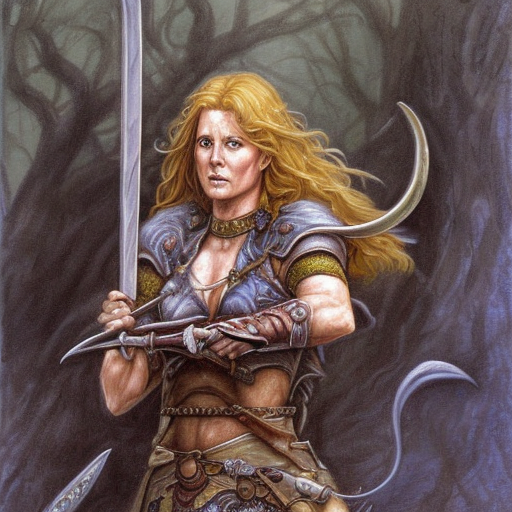

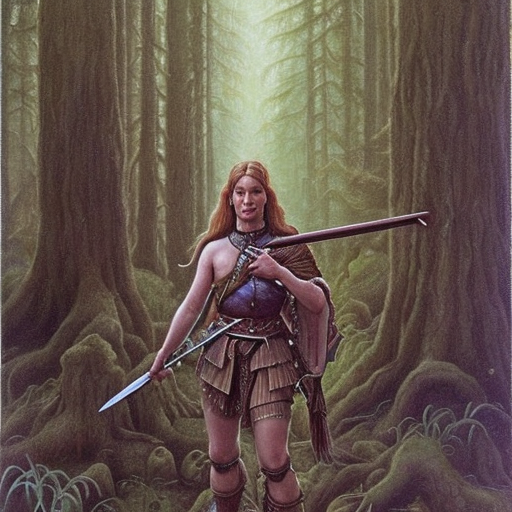

Howdy. Just sent you a tip on the Ko-Fi thing. Have a good coffee or 2! ;-) So back to the issue. I tried what you suggested but am not having much luck. As I mentioned, around Oct. 29, I trained a .ckpt on photos of my friend Ed and it worked incredibly well. Last night, I trained as you suggested on photos of his wife Danette and am not having near the success. The source image files were named 'ouedbkr (1).png' and 'dntbkr.png (1)' and so forth respectively. I think I had 22 images for Ed and 24 for Danette. For the photos of Ed, I followed the basic instructions as listed on that build's notebook. For the training last night, I followed the settings you mentioned above. Comparison below: Staying relatively close to "photo of x" produces more or less expected results. But, once you stray away from that, it quickly falls apart. I used the same settings and seed for both images. Sampler: Euler, resolution: 512x512, Steps: 100, CFG: 7.5, seed: 424242. Other than the subject, 'ouedbkr' or 'dntbkr,' the prompts are exactly the same. Prompt: color photo of x in New York City Prompt: a black and white newspaper photo of x from the 1950s Prompt: a pen and ink drawing of x Prompt: a fantasy painting of x dressed as a warrior by John Howe As you can see, the result is profoundly better from the Oct. 29 build in comparison to my current results with the settings you mentioned. Any thoughts or ideas? Would it be possible to re-post the Oct 29 build as a separate Colab Notebook in the meantime? Thanks again for your great work! Much appreciated!! |

Beta Was this translation helpful? Give feedback.

-

|

Hi and thank you for the tip |

Beta Was this translation helpful? Give feedback.

-

|

I had a similar issue which is why I switch to Shiv's Settings for Now, there is something wrong with the new method of training with both suggested and pre-defined settings (20 Images 3k Steps default txt encoder, also tried with 100% txt encoder, also tried with 4k Steps as suggested). |

Beta Was this translation helpful? Give feedback.

-

Beta Was this translation helpful? Give feedback.

-

|

Yeah, I would say I got the training thing worked out!! :-) Thanks again for the great work!! |

Beta Was this translation helpful? Give feedback.

Uh oh!

There was an error while loading. Please reload this page.

-

Howdy!! First off, I want to thank you for the you're putting into this! I appreciate it greatly and I'm sure the community at large does as well!

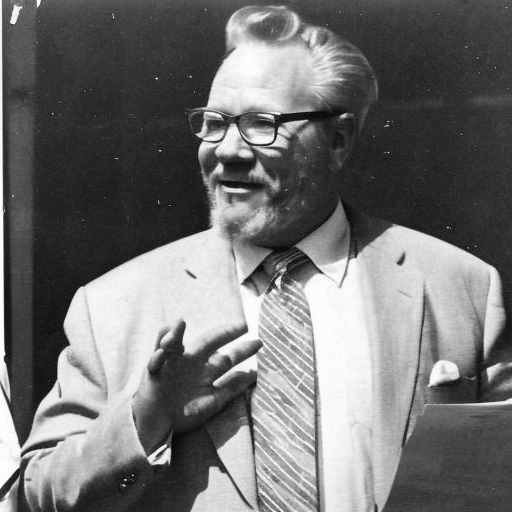

So, here's my issue: I trained a couple of things prior to Halloween and it worked brilliantly. October 29 would've been the actual date I ran them. One was from a photo of an old friend of mine, 22 images. The other was 24 various images of a Fender Stratocaster guitar. I typed in my prompt, and just about any style I put it to worked wonderfully! I followed the tips in the instructions, which at that time was 100 steps per image and so forth. I'm attaching a couple examples below.

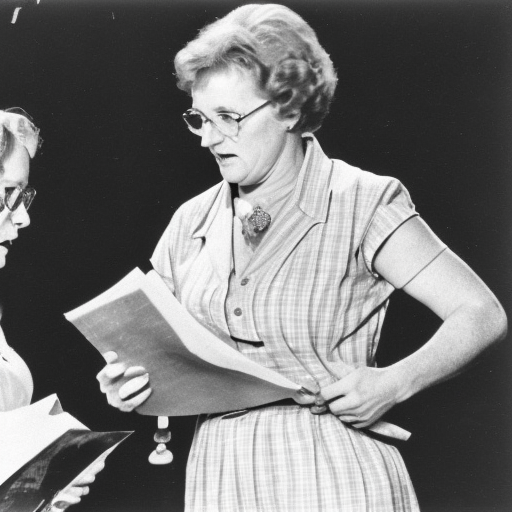

I've done a couple training sessions on some of the more recent builds that have the "text encoder training" thing. I run as you suggested using 200 steps per photo of a different friend (24 photos) with the text encoder set to 10 in hopes that it'll work more or less as it did before... successfully! ;-)

If I keep my prompt close to the model (dntbkr) it creates a pretty good likeness of her. e.g. prompts like "photo of dntbkr" or just "dntbkr" and so forth but if I stray much from that it's not even close. For example... this is something like "a black and white photo of dntbkr dressed as a hippy:

She doesn't have a beard... and if she did... she still wouldn't look anything like that lol. However, a very similar prompt of on my friend's photo, something like "color photo of rklcke dressed as a hippy at Woodstock" turned out like this training with whatever build was up around Oct. 29.

So... I don't know if it's something in the build that changed that's now no longer giving me wonderful results, or if I'm not using the "text encoder training" settings correctly but either way, I sure as hell would like to get back to the awesome results I was getting from your notebook back around Oct. 29!!! I've tried doing additional training on the model to no avail. Same sort of deal... any sort of style just doesn't take.

Thanks again for the awesome work though! I mostly do animation stuff using Deforum and folks like you and them have opened up incredible new methods of creativity for me. This was my most recent animation endeavor set to a Beats Antique song for Halloween.

[https://youtu.be/Ltvt3zDPvD0](Devil Dance by Beats Antique on YouTube)

Cheers!

Beta Was this translation helpful? Give feedback.

All reactions