Why throw it away? The results are exactly the same between fp16 and fp32.

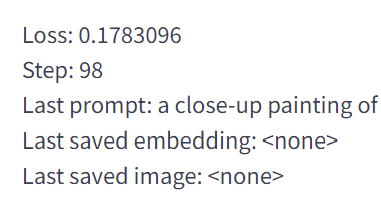

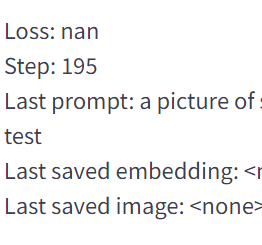

I've tried to train an embedding on an SD 2.1 fp16 ckpt and I am getting "nan" as the loss. On a different SD 2.1 fp32 model I get normal loss readouts. Is embedding training possible on fp16? If not, how do I generate a 4GB ckpt out of a 2GB one?
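
On the second question: a .ckpt file is a pickled PyTorch object, so one way to get a full-size file from an fp16 one is to load it, cast every floating-point tensor to float32 and save it again. This is a minimal sketch, assuming the weights live under a `state_dict` key (as in many SD checkpoints) and falling back to the top-level dict otherwise; `upcast_checkpoint` is a hypothetical helper name, not part of the webui. Upcasting only changes the storage type: it roughly doubles the file size but cannot recover precision that was already lost when the weights were saved in fp16.

```python
import torch


def upcast_checkpoint(src_path: str, dst_path: str) -> int:
    """Load a checkpoint, cast floating-point tensors to float32, save a copy.

    Returns how many tensors were converted. Hypothetical helper for illustration.
    """
    # weights_only=False allows pickled non-tensor entries to load
    # (keyword available in PyTorch >= 1.13); only use it on files you trust.
    ckpt = torch.load(src_path, map_location="cpu", weights_only=False)

    # Assumption: weights sit under "state_dict"; otherwise use the top-level dict.
    state_dict = ckpt.get("state_dict", ckpt)

    converted = 0
    for key, value in state_dict.items():
        # Leave integer tensors and non-tensor entries (e.g. step counters) alone.
        if (
            torch.is_tensor(value)
            and value.is_floating_point()
            and value.dtype != torch.float32
        ):
            state_dict[key] = value.float()
            converted += 1

    # state_dict was modified in place, so saving ckpt keeps any other top-level keys.
    torch.save(ckpt, dst_path)
    return converted
```

For example, `upcast_checkpoint("model-fp16.ckpt", "model-fp32.ckpt")`, where both file names are placeholders.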

I apologize, I was mistaken (although the whole 2.0/2.1 environment is quite confusing at the moment). I don't know about training embeddings on 2GB checkpoints; I haven't tested it yet on an SD 1.5 model. However, the embedding issue above is not caused by the 2GB checkpoint but by a training issue with SD 2.1 overall. More info here: AUTOMATIC1111/stable-diffusion-webui#5715. I will be dropping SD 2.0/2.1 for a while until it stabilizes; there are too many bugs and issues at the moment.

Hi,

I just realized I need to throw away a session because it was trained in fp16 and I hadn't noticed that the tickbox is gone (I am aware I can change the option in code, but I didn't realize I needed to, because the option is gone).

Please bring it back.

TYVM
