I want to test how robust my trained network is by passing simulated frames through it and comparing its performance across a variety of datasets. The simulated frames I'm using are sampled from the `sim_train` or `sim_test` simulation objects, but their tensor values look different from those of real data and from what the parameter file specifies: the sampled frames don't seem to have a baseline or emitter intensity matching the parameter file, so the model finds no emitters when they are passed through it. So my question is: what do the values inside the frame tensor of the simulation objects represent? Are they photon numbers or camera intensity values, and have they already undergone some processing? How would one go about passing a simulated image through the model? My workflow is below:

Thank you.
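To make the distinction between photon numbers and camera intensity values concrete, here is a minimal sketch of a generic EMCCD camera model (Poisson shot noise, Gamma-distributed EM gain, Gaussian read noise, ADU conversion plus a baseline offset). This is not DECODE's code: the parameter names and values in `CAMERA` and the functions `expected_adu` and `photons_to_adu` are hypothetical and chosen only for illustration. It shows why a frame in photon units has no camera baseline, while a camera frame does.

```python
import numpy as np

# Hypothetical EMCCD parameters (assumed values, NOT read from any parameter file).
CAMERA = dict(qe=1.0, spur=0.002, em_gain=100.0, e_per_adu=45.0,
              baseline=100.0, read_sigma=74.4)


def expected_adu(photons, cam):
    """Mean camera reading (ADU) for a given expected photon count per pixel."""
    photons = np.asarray(photons, dtype=float)
    return (photons * cam["qe"] + cam["spur"]) * cam["em_gain"] / cam["e_per_adu"] + cam["baseline"]


def photons_to_adu(photons, cam, rng):
    """Sample a noisy camera frame (ADU) from a frame of expected photon counts."""
    photons = np.asarray(photons, dtype=float)
    # Shot noise: Poisson-distributed photoelectrons plus spurious charge.
    electrons = rng.poisson(photons * cam["qe"] + cam["spur"])
    # EM gain register: Gamma(shape=electrons, scale=gain); shape 0 yields 0.
    amplified = rng.gamma(electrons, cam["em_gain"])
    # Gaussian read noise in electrons, then conversion to ADU plus the offset.
    amplified = amplified + rng.normal(0.0, cam["read_sigma"], size=photons.shape)
    return amplified / cam["e_per_adu"] + cam["baseline"]


rng = np.random.default_rng(0)
photon_frame = np.full((200, 200), 100.0)  # flat background of 100 photons/px (assumed)
adu_frame = photons_to_adu(photon_frame, CAMERA, rng)

print(photon_frame.mean())                      # 100.0 (photon units, no baseline)
print(round(expected_adu(100.0, CAMERA), 1))    # 322.2 (ADU, includes the 100 ADU baseline)
print(adu_frame.mean())                         # close to the expected ADU value
```

Under these assumptions the same scene reads about 100 in photon units but about 322 in camera units, so comparing a photon-unit frame against the baseline and intensities in a camera parameter file will always look "wrong".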

---

Hey,

thanks for sharing the question here.

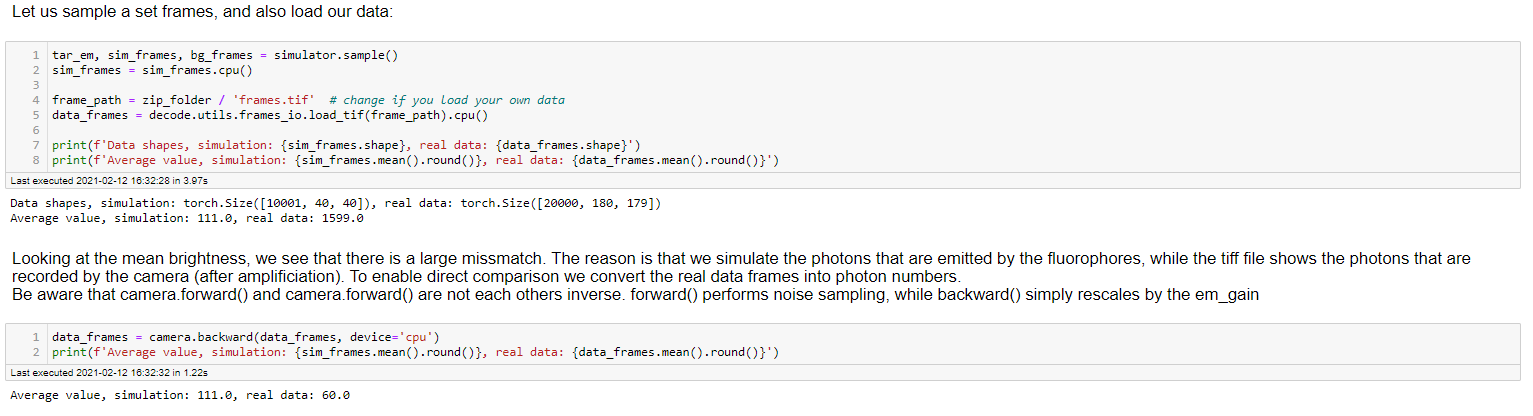

Indeed, the simulated frames contain photon numbers. You can also check the Training.ipynb notebook, where we explain the simulation procedure, specifically in this section:

The network always takes frames containing photon numbers as input. That's why, when you work with real images, the frame processing includes the line

```python
decode.neuralfitter.utils.processing.wrap_callable(camera.backward)
```

which converts the camera values back into photon numbers. If you want to test the network on simulated data that is already in photon units, you can simply remove that line and it should work.
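A minimal sketch of the idea, assuming a generic EMCCD model: `adu_to_photons` below is a hypothetical stand-in for what a camera "backward" step does (remove baseline, undo ADU conversion and EM gain, subtract spurious charge, divide by quantum efficiency), and `prepare_frames` is a hypothetical helper that applies it only to frames still in camera units. DECODE's own `camera.backward` may differ in details such as clamping, and the `CAMERA` values are illustrative only.

```python
import numpy as np

# Hypothetical camera parameters (assumed values, not from a real parameter file).
CAMERA = dict(qe=1.0, spur=0.002, em_gain=100.0, e_per_adu=45.0, baseline=100.0)


def adu_to_photons(adu, cam):
    """Approximate inverse of a generic EMCCD model: camera counts (ADU) -> photons."""
    x = np.asarray(adu, dtype=float) - cam["baseline"]  # remove the camera offset
    x = np.clip(x, 0.0, None)                            # no negative counts
    x = x * cam["e_per_adu"] / cam["em_gain"]           # ADU -> electrons before EM gain
    x = x - cam["spur"]                                  # subtract spurious charge
    return x / cam["qe"]                                 # photoelectrons -> photons


def prepare_frames(frames, frames_are_photons, cam):
    """Convert to photons only if the frames are still in camera units."""
    if frames_are_photons:
        return np.asarray(frames, dtype=float)  # simulated frames: use as-is
    return adu_to_photons(frames, cam)          # real frames: ADU -> photons


# A real-camera pixel reading that corresponds to 100 photons under CAMERA.
real_frame = np.full((4, 4), (100.0 * 1.0 + 0.002) * 100.0 / 45.0 + 100.0)
sim_frame = np.full((4, 4), 10.0)  # simulated background, already in photons

print(prepare_frames(real_frame, False, CAMERA)[0, 0])  # approximately 100
print(prepare_frames(sim_frame, True, CAMERA)[0, 0])    # 10.0

# Converting an already-photon frame a second time wipes out the background:
wrong = adu_to_photons(sim_frame, CAMERA)
print(wrong.max())  # negative: the 100 ADU "baseline" removed everything
```

The last line illustrates the symptom from the question: applying a camera-to-photon conversion to frames that are already in photons subtracts a baseline that was never there and rescales the signal, so the network sees an almost empty frame.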