
We have several HRAs and RunnerDeployments deployed in different namespaces.

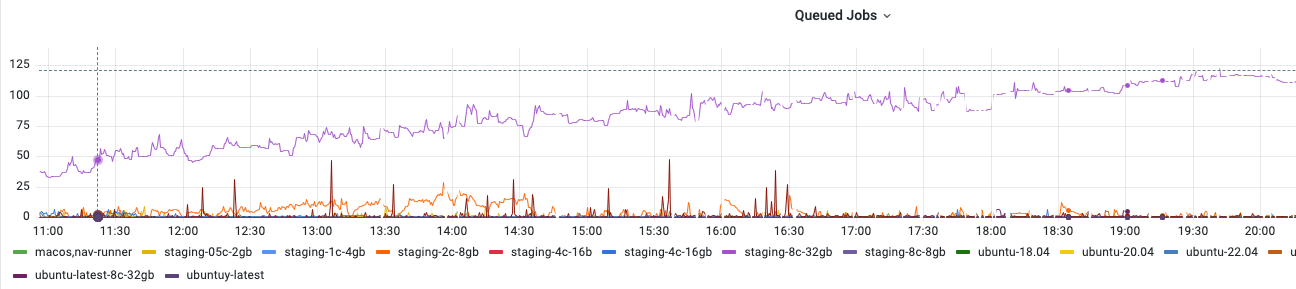

We are seeing jobs stay queued for a long time ("Waiting for a runner to pick up this job").

This might be a broad question, but I would like to clarify one thing: what could cause so many jobs to sit in the queue when maxReplicas is set to 100 but only 20 pods/runners are available? We have enough node resources, and cluster autoscaling is enabled.

Here are the logs; I'm not sure whether they are related to scaling.

Controller logs ->

2023-02-22T22:05:30Z INFO runnerdeployment The newest runnerreplicaset is 100% available. Deleting old runnerreplicasets {"runnerdeployment": "staging-8c-32gb/gha-staging-8c-32gb", "newest_runnerreplicaset": "staging-8c-32gb/gha-staging-8c-32gb-zbhzj", "newest_runnerreplicaset_replicas_ready": 11, "newest_runnerreplicaset_replicas_desired": 11, "old_runnerreplicasets_count": 2}

2023-02-22T22:05:35Z INFO runnerpod Runner pod is annotated to wait for completion, and the runner container is not restarting {"runnerpod": "staging-8c-32gb/gha-staging-8c-32gb-mplvl-bl52k"}

2023-02-22T22:05:35Z INFO runnerpod Runner pod is annotated to wait for completion, and the runner container is not restarting {"runnerpod": "staging-8c-32gb/gha-staging-8c-32gb-mplvl-nwxmn"}

2023-02-22T22:05:35Z INFO runnerpod Runner pod is annotated to wait for completion, and the runner container is not restarting {"runnerpod": "staging-8c-32gb/gha-staging-8c-32gb-mplvl-9rwfg"}

2023-02-22T22:05:35Z INFO runnerpod Runner pod is annotated to wait for completion, and the runner container is not restarting {"runnerpod": "staging-8c-32gb/gha-staging-8c-32gb-mplvl-lrd6n"}

2023-02-22T22:06:02Z INFO runnerpod Runner pod is annotated to wait for completion, and the runner container is not restarting {"runnerpod": "staging-8c-32gb/gha-staging-8c-32gb-vdv95-qskkt"}

2023-02-22T22:06:02Z INFO runnerpod Runner pod is annotated to wait for completion, and the runner container is not restarting {"runnerpod": "staging-8c-32gb/gha-staging-8c-32gb-vdv95-8t8vr"}
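
In case it helps with reading these logs, here is a minimal Python sketch that pulls the JSON fields out of a controller line. The helper name `parse_controller_line` is made up for illustration, and it assumes the space-separated layout pasted above (the raw controller output may use different separators). Run on the first line, it shows the newest RunnerReplicaSet had 11 desired and 11 ready replicas at that moment, which is well below maxReplicas.

```python
import json


def parse_controller_line(line: str) -> dict:
    """Split one controller log line into its header parts and JSON fields.

    Assumes the layout seen in the pasted logs:
    <timestamp> <level> <logger> <free-text message> {<json fields>}
    """
    brace = line.index("{")  # the JSON payload starts at the first brace
    head, payload = line[:brace].strip(), line[brace:]
    timestamp, level, logger, message = head.split(" ", 3)
    return {
        "timestamp": timestamp,
        "level": level,
        "logger": logger,
        "message": message,
        "fields": json.loads(payload),
    }


# First controller line from the logs above, copied verbatim.
sample = (
    '2023-02-22T22:05:30Z INFO runnerdeployment The newest runnerreplicaset is '
    '100% available. Deleting old runnerreplicasets '
    '{"runnerdeployment": "staging-8c-32gb/gha-staging-8c-32gb", '
    '"newest_runnerreplicaset": "staging-8c-32gb/gha-staging-8c-32gb-zbhzj", '
    '"newest_runnerreplicaset_replicas_ready": 11, '
    '"newest_runnerreplicaset_replicas_desired": 11, '
    '"old_runnerreplicasets_count": 2}'
)

entry = parse_controller_line(sample)
fields = entry["fields"]
print(
    entry["logger"],
    "desired:", fields["newest_runnerreplicaset_replicas_desired"],
    "ready:", fields["newest_runnerreplicaset_replicas_ready"],
)
```

Comparing the desired count against maxReplicas over time should at least show whether the autoscaler is asking for more runners and not getting them, or simply not asking.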


The HRA and RunnerDeployment in question are the ones for staging-8c-32gb.
