diff --git a/README.md b/README.md

index ac58d5aab0..5db7d9f488 100644

--- a/README.md

+++ b/README.md

@@ -31,36 +31,58 @@ Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuni

- Example: [Mixture of SFT and GRPO](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html)

* 📊 For data engineers. [[tutorial]](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_operator.html)

- - Create task-specific datasets and build data pipelines for cleaning, augmentation, and human-in-the-loop scenarios.

+ - Create datasets and build data pipelines for cleaning, augmentation, and human-in-the-loop scenarios.

- Example: [Data Processing](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html)

## 🌟 Key Features

* **Flexible RFT Modes:**

- - Supports synchronous/asynchronous, on-policy/off-policy, and online/offline training. Rollout and training can run separately and scale independently across devices.

+ - Supports synchronous/asynchronous, on-policy/off-policy, and online/offline RL.

+ - Rollout and training can run separately and scale independently across devices.

+ - Boosts sample and time efficiency through experience replay.

-* **General Agentic-RL Support:**

- - Supports both concatenated and general multi-turn agentic workflows. Able to directly train agent applications developed using agent frameworks like AgentScope.

+* **Agentic RL Support:**

+ - Supports both concatenated and general multi-step agentic workflows.

+ - Able to directly train agent applications developed using agent frameworks like AgentScope.

-* **Full Lifecycle Data Pipelines:**

- - Enables pipeline processing of rollout and experience data, supporting active management (prioritization, cleaning, augmentation) throughout the RFT lifecycle.

+* **Full-Lifecycle Data Pipelines:**

+ - Enables pipeline processing of rollout tasks and experience samples.

+ - Active data management (e.g., prioritization, cleaning, augmentation) throughout the RFT lifecycle.

+ - Native support for multi-task joint learning.

-

* **User-Friendly Design:**

- - Modular, decoupled architecture for easy adoption and development. Rich graphical user interfaces enable low-code usage.

+ - Plug-and-play modules and decoupled architecture, facilitating easy adoption and development.

+ - Rich graphical user interfaces enable low-code usage.

+## 🔨 Tutorials and Guidelines

+

+

+| Category | Tutorial / Guideline |
+| --- | --- |
+| Run diverse RFT modes | + [Quick example: GRPO on GSM8k](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)<br>+ [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_advanced.html)<br>+ [Fully asynchronous RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_async_mode.html)<br>+ [Offline learning by DPO or SFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_dpo.html) |
+| Multi-step agentic scenarios | + [Concatenated multi-turn workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_multi_turn.html)<br>+ [General multi-step workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_step_wise.html)<br>+ [ReAct workflow with an agent framework](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_react.html) |
+| Advanced data pipelines | + [Rollout task mixing and selection](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_selector.html)<br>+ [Experience replay](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [Advanced data processing & human-in-the-loop](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html) |
+| Algorithm development / research | + [RL algorithm development with Trinity-RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html) ([paper](https://arxiv.org/pdf/2508.11408))<br>+ Non-verifiable domains: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [trainable RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward)<br>+ [Research project: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) ([paper](https://arxiv.org/abs/2509.24203)) |
+| Going deeper into Trinity-RFT | + [Full configurations](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html)<br>+ [Benchmark toolkit for quick verification and experimentation](./benchmark/README.md)<br>+ [Understand the coordination between explorer and trainer](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/synchronizer.html) |

+

+

+> [!NOTE]

+> For more tutorials, please refer to the [Trinity-RFT documentation](https://modelscope.github.io/Trinity-RFT/).

+

+

## 🚀 News

-* [2025-10] ✨ [[Release Notes](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.1)] Trinity-RFT v0.3.1 released: multi-stage training support, improved agentic RL examples, LoRA support, debug mode and new RL algorithms.

+* [2025-10] [[Release Notes](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.1)] Trinity-RFT v0.3.1 released: multi-stage training support, improved agentic RL examples, LoRA support, debug mode and new RL algorithms.

* [2025-09] [[Release Notes](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.0)] Trinity-RFT v0.3.0 released: enhanced Buffer, FSDP2 & Megatron support, multi-modal models, and new RL algorithms/examples.

* [2025-08] Introducing [CHORD](https://github.com/modelscope/Trinity-RFT/tree/main/examples/mix_chord): dynamic SFT + RL integration for advanced LLM fine-tuning ([paper](https://arxiv.org/pdf/2508.11408)).

* [2025-08] [[Release Notes](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.2.1)] Trinity-RFT v0.2.1 released.

@@ -73,16 +95,13 @@ Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuni

---

-## Table of contents

-

+## Table of Contents

- [Quick Start](#quick-start)

- [Step 1: installation](#step-1-installation)

- [Step 2: prepare dataset and model](#step-2-prepare-dataset-and-model)

- [Step 3: configurations](#step-3-configurations)

- [Step 4: run the RFT process](#step-4-run-the-rft-process)

-- [Further tutorials](#further-tutorials)

-- [Upcoming features](#upcoming-features)

- [Contribution guide](#contribution-guide)

- [Acknowledgements](#acknowledgements)

- [Citation](#citation)

@@ -101,7 +120,7 @@ Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuni

Before installing, make sure your system meets the following requirements:

- **Python**: version 3.10 to 3.12 (inclusive)

-- **CUDA**: version 12.4 to 12.8 (inclusive)

+- **CUDA**: version >= 12.6

- **GPUs**: at least 2 GPUs
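
The updated requirements (Python 3.10 to 3.12, CUDA >= 12.6, at least 2 GPUs) can be checked before installation. The following is a minimal sketch, not part of Trinity-RFT: the function names `python_supported`, `cuda_supported`, and `enough_gpus` are hypothetical helpers introduced here for illustration, and the CUDA version and GPU count are assumed to be supplied by the caller.

```python
import sys


def python_supported(version=None):
    """Return True if (major, minor) lies in the 3.10 to 3.12 range, inclusive."""
    major, minor = (version or sys.version_info)[:2]
    return (3, 10) <= (major, minor) <= (3, 12)


def cuda_supported(version_string):
    """Return True if a CUDA version string such as '12.6' is at least 12.6."""
    parts = version_string.strip().split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return (major, minor) >= (12, 6)


def enough_gpus(count):
    """Return True if at least 2 GPUs are available."""
    return count >= 2
```

If PyTorch is already installed, `torch.version.cuda` and `torch.cuda.device_count()` are one possible source for the last two inputs; note that `torch.version.cuda` is `None` on CPU-only builds.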

@@ -276,7 +295,9 @@ ray start --head

ray start --address=

```

-(Optional) Log in to [wandb](https://docs.wandb.ai/quickstart/) for better monitoring:

+(Optional) You may use [Wandb](https://docs.wandb.ai/quickstart/), [TensorBoard](https://www.tensorflow.org/tensorboard), or [MLflow](https://mlflow.org) for better monitoring.

+Please refer to [this documentation](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html#monitor-configuration) for the corresponding configurations.

+For example, to log in to Wandb:

```shell

export WANDB_API_KEY=

@@ -298,54 +319,8 @@ trinity run --config examples/grpo_gsm8k/gsm8k.yaml

For studio users, click "Run" in the web interface.

-## Further tutorials

-

-> [!NOTE]

-> For more tutorials, please refer to the [Trinity-RFT Documentation](https://modelscope.github.io/Trinity-RFT/).

-

-

-Tutorials for running different RFT modes:

-

-+ [Quick example: GRPO on GSM8k](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)

-+ [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_advanced.html)

-+ [Fully asynchronous RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_async_mode.html)

-+ [Offline learning by DPO or SFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_dpo.html)

-

-

-Tutorials for adapting Trinity-RFT to multi-step agentic scenarios:

-

-+ [Concatenated multi-turn workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_multi_turn.html)

-+ [General multi-step workflow](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_step_wise.html)

-+ [ReAct workflow with an agent framework](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_react.html)

-

-

-Tutorials for data-related functionalities:

-

-+ [Advanced data processing & human-in-the-loop](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html)

-

-

-Tutorials for RL algorithm development/research with Trinity-RFT:

-

-+ [RL algorithm development with Trinity-RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html)

-

-

-Guidelines for full configurations:

-

-+ See [this document](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html)

-

-

-Guidelines for developers and researchers:

-

-+ [Benchmark Toolkit for quick verification and experimentation](./benchmark/README.md)

-+ [Understand the coordination between explorer and trainer](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/synchronizer.html)

-

-

-## Upcoming features

-

-A tentative roadmap: [#51](https://github.com/modelscope/Trinity-RFT/issues/51)

-

-## Contribution guide

+## Contribution Guide

This project is currently under active development, and we welcome contributions from the community!

@@ -356,7 +331,7 @@ See [CONTRIBUTING.md](./CONTRIBUTING.md) for detailed contribution guidelines.

This project is built upon many excellent open-source projects, including:

-+ [verl](https://github.com/volcengine/verl) and [PyTorch's FSDP](https://pytorch.org/docs/stable/fsdp.html) for LLM training;

++ [verl](https://github.com/volcengine/verl), [FSDP](https://pytorch.org/docs/stable/fsdp.html) and [Megatron-LM](https://github.com/NVIDIA/Megatron-LM) for LLM training;

+ [vLLM](https://github.com/vllm-project/vllm) for LLM inference;

+ [Data-Juicer](https://github.com/modelscope/data-juicer?tab=readme-ov-file) for data processing pipelines;

+ [AgentScope](https://github.com/agentscope-ai/agentscope) for agentic workflow;

diff --git a/README_zh.md b/README_zh.md

index 8dadb307ef..1122f4cf88 100644

--- a/README_zh.md

+++ b/README_zh.md

@@ -28,38 +28,62 @@ Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RF

* 🧠 面向 RL 算法研究者。[[教程]](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_algorithm.html)

- 在简洁、可插拔的类中设计和验证新的 RL 算法

- - 示例:[SFT/GRPO混合算法](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_mix_algo.html)

+ - 示例:[SFT/RL 混合算法](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_mix_algo.html)

* 📊 面向数据工程师。[[教程]](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/develop_operator.html)

- - 设计任务定制数据集,构建数据流水线以支持清洗、增强和人类参与场景

+ - 设计针对任务定制的数据集,构建处理流水线以支持数据清洗、增强以及人类参与场景

- 示例:[数据处理](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_data_functionalities.html)

# 🌟 核心特性

* **灵活的 RFT 模式:**

- - 支持同步/异步、on-policy/off-policy 以及在线/离线训练。采样与训练可分离运行,并可在多设备上独立扩展。

+ - 支持同步/异步、on-policy/off-policy 以及在线/离线强化学习

+ - 采样与训练可分离运行,并可在多设备上独立扩展

+ - 支持经验回放,进一步提升样本与时间效率

-* **通用 Agentic-RL:**

- - 支持拼接式和通用多轮交互,能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用。

+* **Agentic RL 支持:**

+ - 支持拼接式多轮和通用多轮交互

+ - 能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用

* **全流程的数据流水线:**

- - 支持 rollout 和经验数据的流水线处理,贯穿 RFT 生命周期实现主动管理(优先级、清洗、增强等)。

+ - 支持 rollout 任务和经验数据的流水线处理

+ - 贯穿 RFT 生命周期的主动数据管理(优先级排序、清洗、增强等)

+ - 原生支持多任务联合训练

-

* **用户友好的框架设计:**

- - 模块化、解耦架构,便于快速上手和二次开发。丰富的图形界面支持低代码使用。

+ - 即插即用模块与解耦式架构,便于快速上手和二次开发

+ - 丰富的图形界面,支持低代码使用

+

+## 🔨 教程与指南

+

+

+| Category | Tutorial / Guideline |
+| --- | --- |
+| 运行各种 RFT 模式 | + [快速开始:在 GSM8k 上运行 GRPO](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)<br>+ [Off-policy RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_advanced.html)<br>+ [全异步 RFT](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_async_mode.html)<br>+ [通过 DPO 或 SFT 进行离线学习](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_dpo.html) |
+| 多轮智能体场景 | + [拼接多轮任务](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_multi_turn.html)<br>+ [通用多轮任务](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_step_wise.html)<br>+ [调用智能体框架中的 ReAct 工作流](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_react.html) |
+| 数据流水线进阶能力 | + [Rollout 任务混合与选取](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/develop_selector.html)<br>+ [经验回放](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [高级数据处理能力 & Human-in-the-loop](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_data_functionalities.html) |
+| RL 算法开发/研究 | + [使用 Trinity-RFT 进行 RL 算法开发](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_mix_algo.html) ([论文](https://arxiv.org/pdf/2508.11408))<br>+ 不可验证的领域:[RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [可训练 RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward)<br>+ [研究项目: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) ([论文](https://arxiv.org/abs/2509.24203)) |
+| 深入认识 Trinity-RFT | + [完整配置指南](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html)<br>+ [用于快速验证和实验的 Benchmark 工具](./benchmark/README.md)<br>+ [理解 explorer-trainer 同步逻辑](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/synchronizer.html) |

+

+

+> [!NOTE]

+> 更多教程请参考 [Trinity-RFT 文档](https://modelscope.github.io/Trinity-RFT/)。

+

+

+

## 🚀 新闻

-* [2025-10] ✨ [[发布说明](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.1)] Trinity-RFT v0.3.1 发布:多阶段训练支持、改进的智能体 RL 示例、LoRA 支持、调试模式和全新 RL 算法。

+* [2025-10] [[发布说明](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.1)] Trinity-RFT v0.3.1 发布:多阶段训练支持、改进的智能体 RL 示例、LoRA 支持、调试模式和全新 RL 算法。

* [2025-09] [[发布说明](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.3.0)] Trinity-RFT v0.3.0 发布:增强的 Buffer、FSDP2 & Megatron 支持,多模态模型,以及全新 RL 算法/示例。

* [2025-08] 推出 [CHORD](https://github.com/modelscope/Trinity-RFT/tree/main/examples/mix_chord):动态 SFT + RL 集成,实现进阶 LLM 微调([论文](https://arxiv.org/pdf/2508.11408))。

* [2025-08] [[发布说明](https://github.com/modelscope/Trinity-RFT/releases/tag/v0.2.1)] Trinity-RFT v0.2.1 发布。

@@ -79,8 +103,6 @@ Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RF

- [第二步:准备数据集和模型](#第二步准备数据集和模型)

- [第三步:准备配置文件](#第三步准备配置文件)

- [第四步:运行 RFT 流程](#第四步运行-rft-流程)

-- [更多教程](#更多教程)

-- [开发路线图](#开发路线图)

- [贡献指南](#贡献指南)

- [致谢](#致谢)

- [引用](#引用)

@@ -99,7 +121,7 @@ Trinity-RFT 是一个灵活、通用的大语言模型(LLM)强化微调(RF

在安装之前,请确保您的系统满足以下要求:

- **Python**:版本 3.10 至 3.12(含)

-- **CUDA**:版本 12.4 至 12.8(含)

+- **CUDA**:版本 >= 12.6

- **GPU**:至少 2 块 GPU

## 源码安装(推荐)

@@ -272,7 +294,9 @@ ray start --head

ray start --address=

```

-(可选)登录 [wandb](https://docs.wandb.ai/quickstart/) 以便更好地监控 RFT 过程:

+(可选)您可以使用 [Wandb](https://docs.wandb.ai/quickstart/) / [TensorBoard](https://www.tensorflow.org/tensorboard) / [MLflow](https://mlflow.org) 等工具,更方便地监控训练流程。

+相应的配置方法请参考 [这个文档](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/trinity_configs.html#monitor-configuration)。

+比如使用 Wandb 时,您需要先登录:

```shell

export WANDB_API_KEY=

@@ -294,53 +318,6 @@ trinity run --config examples/grpo_gsm8k/gsm8k.yaml

对于 Studio 用户,在 Web 界面中点击“运行”。

-## 更多教程

-

-> [!NOTE]

-> 更多教程请参考 [Trinity-RFT 文档](https://modelscope.github.io/Trinity-RFT/)。

-

-运行不同 RFT 模式的教程:

-

-+ [快速开始:在 GSM8k 上运行 GRPO](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_reasoning_basic.html)

-+ [Off-Policy RFT](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_reasoning_advanced.html)

-+ [全异步 RFT](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_async_mode.html)

-+ [通过 DPO 或 SFT 进行离线学习](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_dpo.html)

-

-

-将 Trinity-RFT 适配到新的多轮智能体场景的教程:

-

-+ [拼接多轮任务](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_multi_turn.html)

-+ [通用多轮任务](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_step_wise.html)

-+ [调用智能体框架中的 ReAct 工作流](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_react.html)

-

-

-数据相关功能的教程:

-

-+ [高级数据处理及 Human-in-the-loop](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_data_functionalities.html)

-

-

-使用 Trinity-RFT 进行 RL 算法开发/研究的教程:

-

-+ [使用 Trinity-RFT 进行 RL 算法开发](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/example_mix_algo.html)

-

-

-完整配置指南:

-

-+ 请参阅[此文档](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/trinity_configs.html)

-

-

-面向开发者和研究人员的指南:

-

-+ [用于快速验证实验的 Benchmark 工具](./benchmark/README.md)

-+ [理解 explorer-trainer 同步逻辑](https://modelscope.github.io/Trinity-RFT/zh/main/tutorial/synchronizer.html)

-

-

-

-## 开发路线图

-

-路线图:[#51](https://github.com/modelscope/Trinity-RFT/issues/51)

-

-

## 贡献指南

@@ -356,9 +333,9 @@ trinity run --config examples/grpo_gsm8k/gsm8k.yaml

本项目基于许多优秀的开源项目构建,包括:

-+ [verl](https://github.com/volcengine/verl) 和 [PyTorch's FSDP](https://pytorch.org/docs/stable/fsdp.html) 用于大模型训练;

++ [verl](https://github.com/volcengine/verl),[FSDP](https://pytorch.org/docs/stable/fsdp.html) 和 [Megatron-LM](https://github.com/NVIDIA/Megatron-LM) 用于大模型训练;

+ [vLLM](https://github.com/vllm-project/vllm) 用于大模型推理;

-+ [Data-Juicer](https://github.com/modelscope/data-juicer?tab=readme-ov-file) 用于数据处理管道;

++ [Data-Juicer](https://github.com/modelscope/data-juicer?tab=readme-ov-file) 用于数据处理流水线;

+ [AgentScope](https://github.com/agentscope-ai/agentscope) 用于智能体工作流;

+ [Ray](https://github.com/ray-project/ray) 用于分布式系统;

+ 我们也从 [OpenRLHF](https://github.com/OpenRLHF/OpenRLHF)、[TRL](https://github.com/huggingface/trl) 和 [ChatLearn](https://github.com/alibaba/ChatLearn) 等框架中汲取了灵感;

diff --git a/docs/sphinx_doc/assets/trinity_data_process.png b/docs/sphinx_doc/assets/trinity_data_process.png

index a99584943b..3e0ed69f87 100644

Binary files a/docs/sphinx_doc/assets/trinity_data_process.png and b/docs/sphinx_doc/assets/trinity_data_process.png differ

diff --git a/docs/sphinx_doc/source/main.md b/docs/sphinx_doc/source/main.md

index 2ed44e5b54..9c6857e237 100644

--- a/docs/sphinx_doc/source/main.md

+++ b/docs/sphinx_doc/source/main.md

@@ -11,33 +11,52 @@ Trinity-RFT is a flexible, general-purpose framework for reinforcement fine-tuni

- Example: [Mixture of SFT and GRPO](/tutorial/example_mix_algo.md)

* 📊 For data engineers. [[tutorial]](/tutorial/develop_operator.md)

- - Create task-specific datasets and build data pipelines for cleaning, augmentation, and human-in-the-loop scenarios.

+ - Create datasets and build data pipelines for cleaning, augmentation, and human-in-the-loop scenarios.

- Example: [Data Processing](/tutorial/example_data_functionalities.md)

## 🌟 Key Features

* **Flexible RFT Modes:**

- - Supports synchronous/asynchronous, on-policy/off-policy, and online/offline training. Rollout and training can run separately and scale independently across devices.

+ - Supports synchronous/asynchronous, on-policy/off-policy, and online/offline RL.

+ - Rollout and training can run separately and scale independently across devices.

+ - Boosts sample and time efficiency through experience replay.

-* **General Agentic-RL Support:**

- - Supports both concatenated and general multi-turn agentic workflows. Able to directly train agent applications developed using agent frameworks like AgentScope.

+* **Agentic RL Support:**

+ - Supports both concatenated and general multi-step agentic workflows.

+ - Able to directly train agent applications developed using agent frameworks like AgentScope.

-* **Full Lifecycle Data Pipelines:**

- - Enables pipeline processing of rollout and experience data, supporting active management (prioritization, cleaning, augmentation) throughout the RFT lifecycle.

+* **Full-Lifecycle Data Pipelines:**

+ - Enables pipeline processing of rollout tasks and experience samples.

+ - Active data management (e.g., prioritization, cleaning, augmentation) throughout the RFT lifecycle.

+ - Native support for multi-task joint learning.

-

* **User-Friendly Design:**

- - Modular, decoupled architecture for easy adoption and development. Rich graphical user interfaces enable low-code usage.

+ - Plug-and-play modules and decoupled architecture, facilitating easy adoption and development.

+ - Rich graphical user interfaces enable low-code usage.

+

+## 🔨 Tutorials and Guidelines

+

+

+| Category | Tutorial / Guideline |
+| --- | --- |
+| Run diverse RFT modes | + [Quick example: GRPO on GSM8k](/tutorial/example_reasoning_basic.md)<br>+ [Off-policy RFT](/tutorial/example_reasoning_advanced.md)<br>+ [Fully asynchronous RFT](/tutorial/example_async_mode.md)<br>+ [Offline learning by DPO or SFT](/tutorial/example_dpo.md) |
+| Multi-step agentic scenarios | + [Concatenated multi-turn workflow](/tutorial/example_multi_turn.md)<br>+ [General multi-step workflow](/tutorial/example_step_wise.md)<br>+ [ReAct workflow with an agent framework](/tutorial/example_react.md) |
+| Advanced data pipelines | + [Rollout task mixing and selection](/tutorial/develop_selector.md)<br>+ [Experience replay](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [Advanced data processing & human-in-the-loop](/tutorial/example_data_functionalities.md) |
+| Algorithm development / research | + [RL algorithm development with Trinity-RFT](/tutorial/example_mix_algo.md) ([paper](https://arxiv.org/pdf/2508.11408))<br>+ Non-verifiable domains: [RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [trainable RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward)<br>+ [Research project: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) ([paper](https://arxiv.org/abs/2509.24203)) |
+| Going deeper into Trinity-RFT | + [Full configurations](/tutorial/trinity_configs.md)<br>+ [Benchmark toolkit for quick verification and experimentation](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/README.md)<br>+ [Understand the coordination between explorer and trainer](/tutorial/synchronizer.md) |

+

+

## Acknowledgements

This project is built upon many excellent open-source projects, including:

diff --git a/docs/sphinx_doc/source/tutorial/example_data_functionalities.md b/docs/sphinx_doc/source/tutorial/example_data_functionalities.md

index 905148c936..1ff321b451 100644

--- a/docs/sphinx_doc/source/tutorial/example_data_functionalities.md

+++ b/docs/sphinx_doc/source/tutorial/example_data_functionalities.md

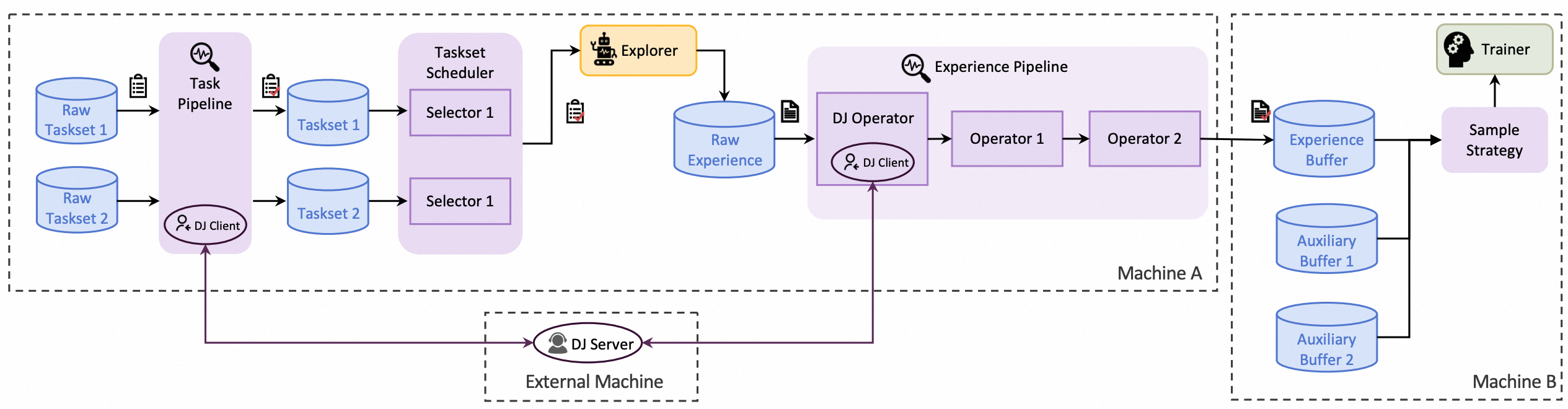

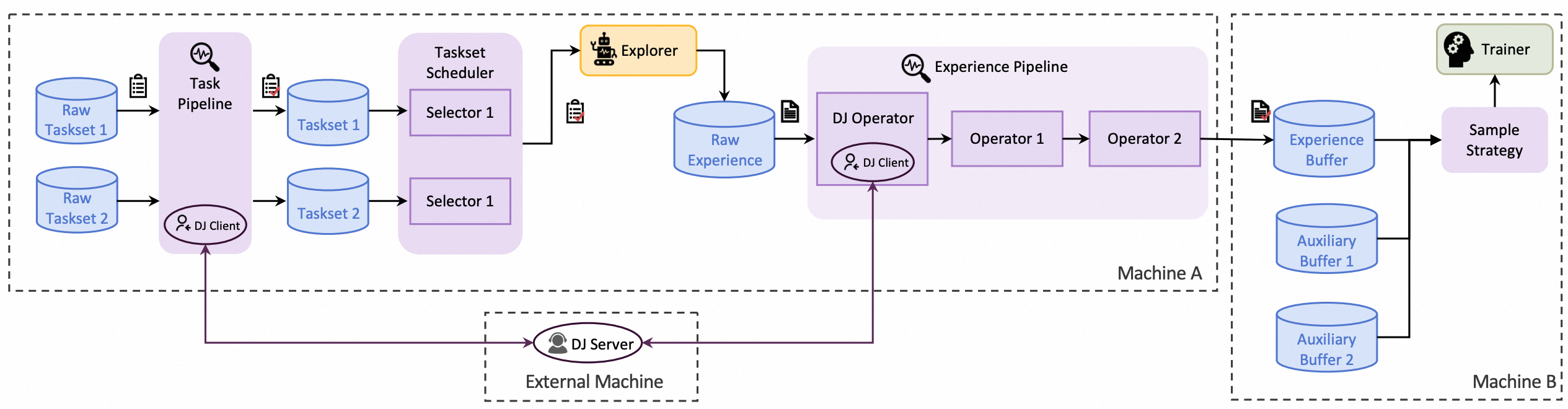

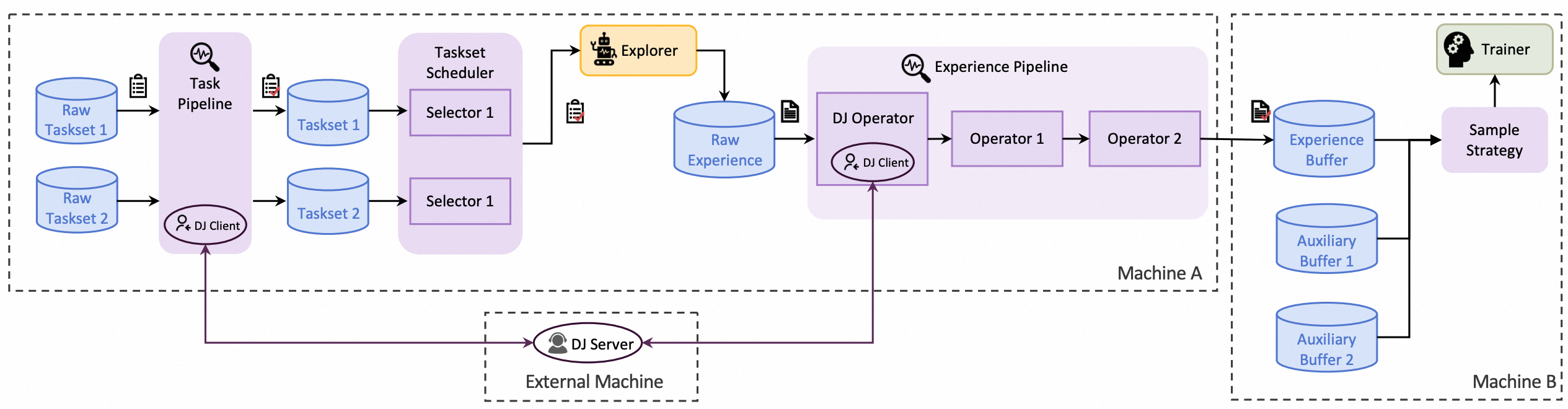

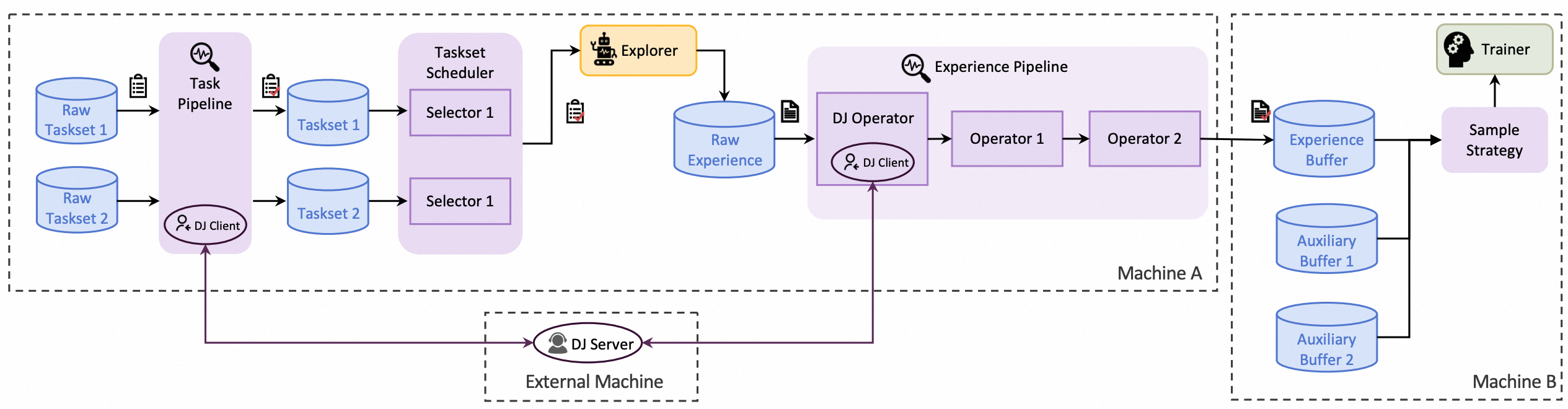

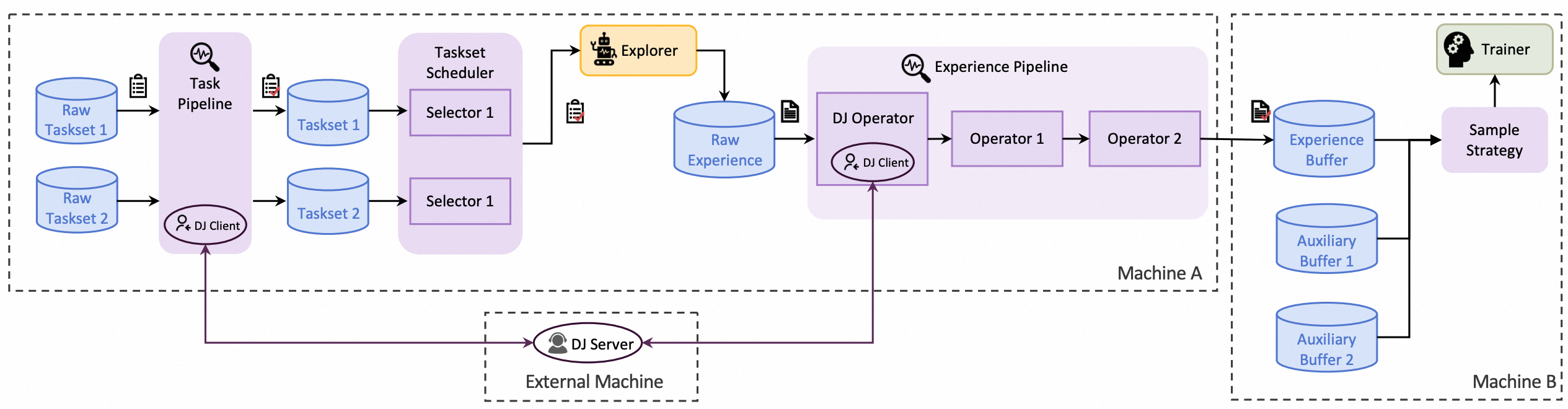

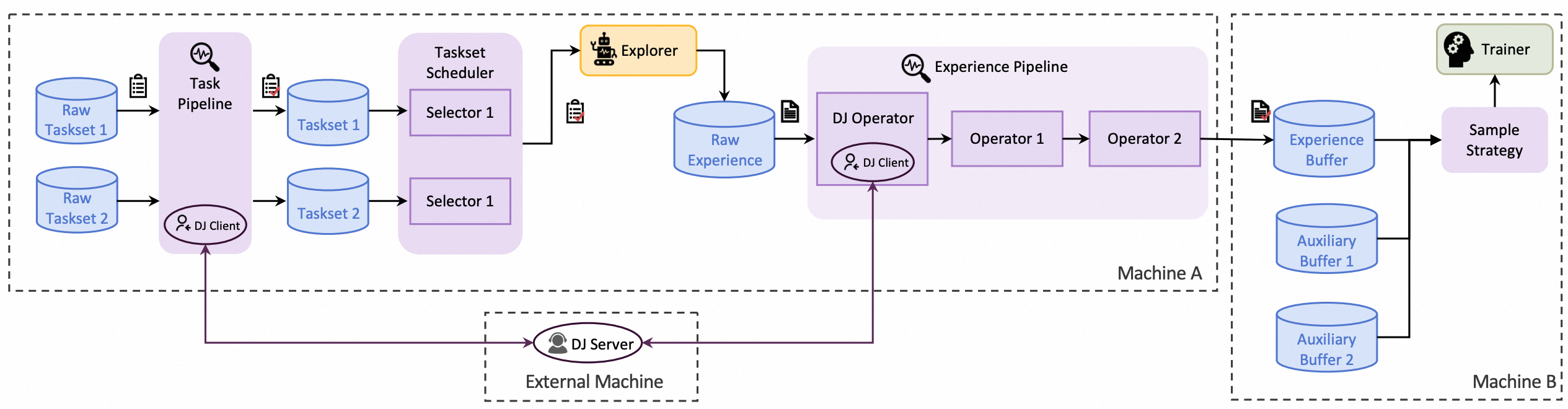

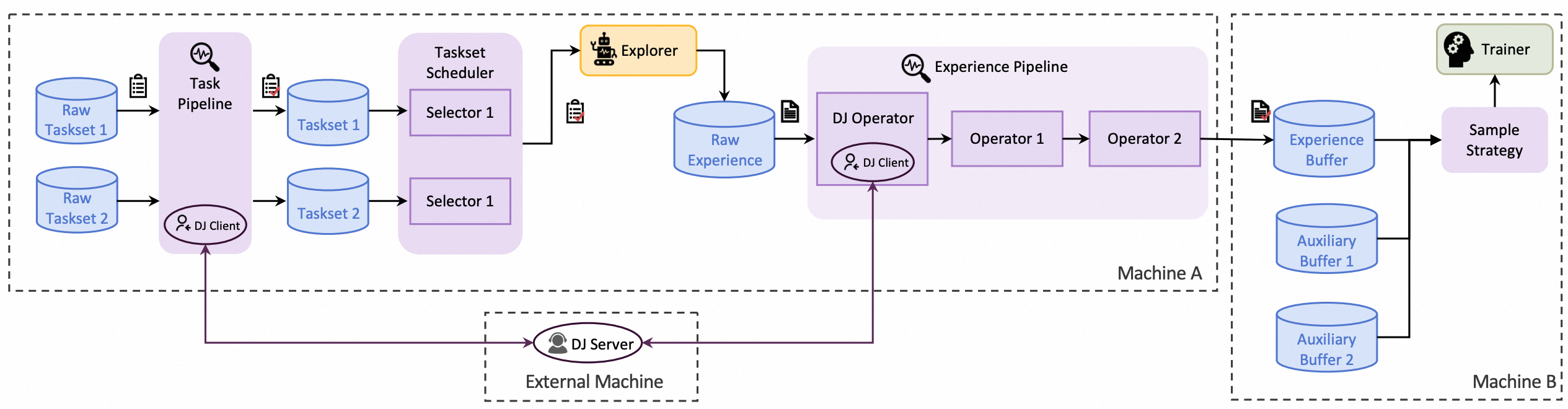

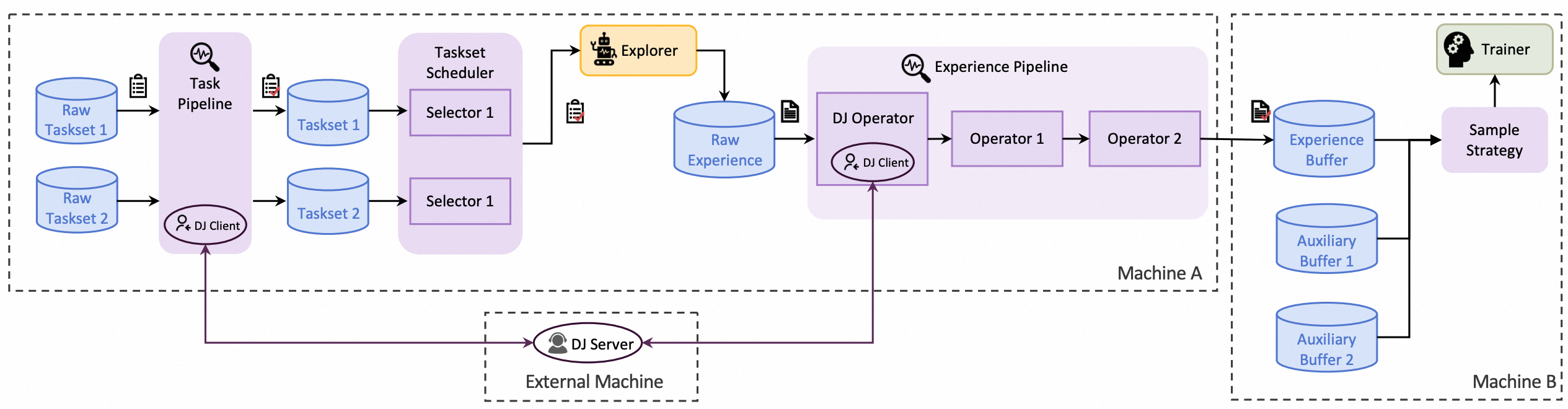

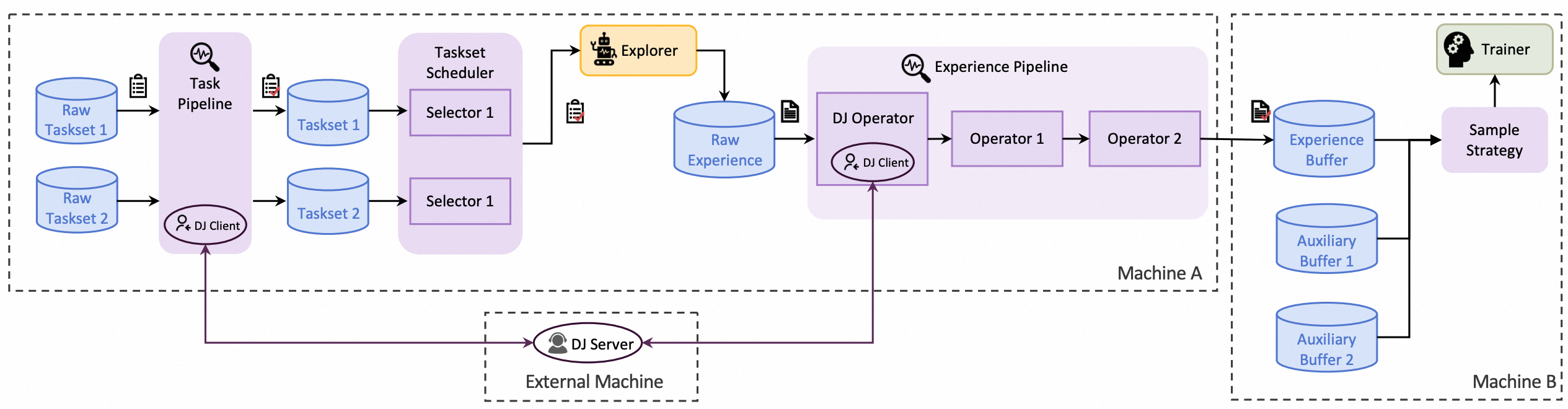

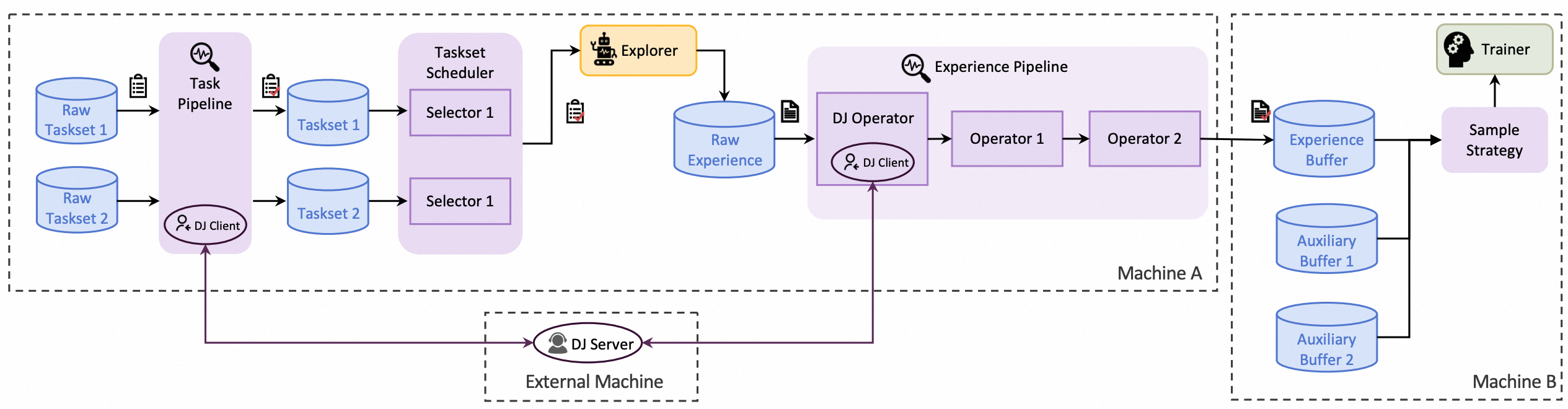

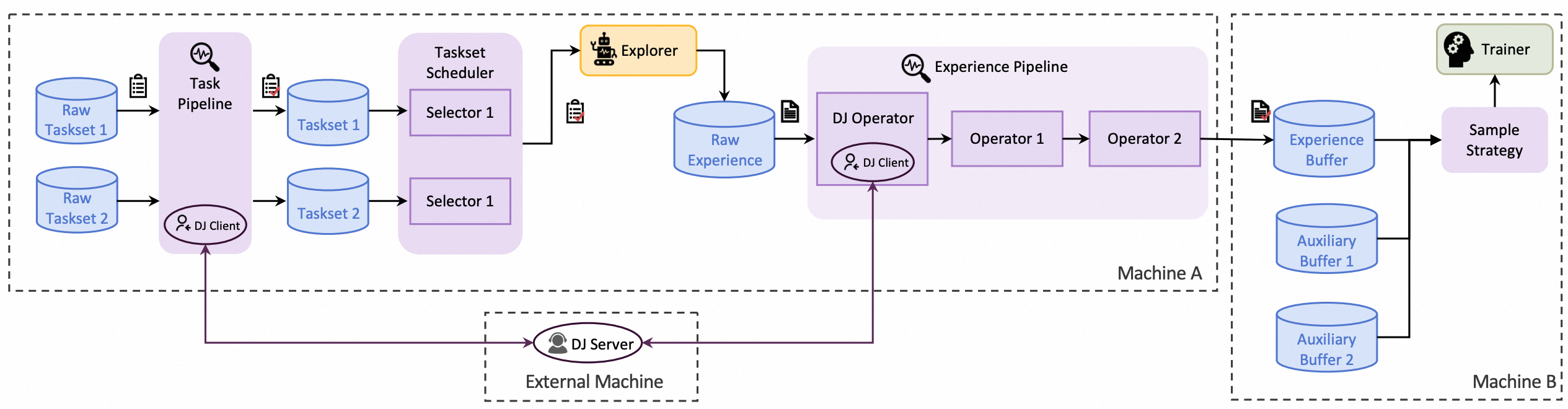

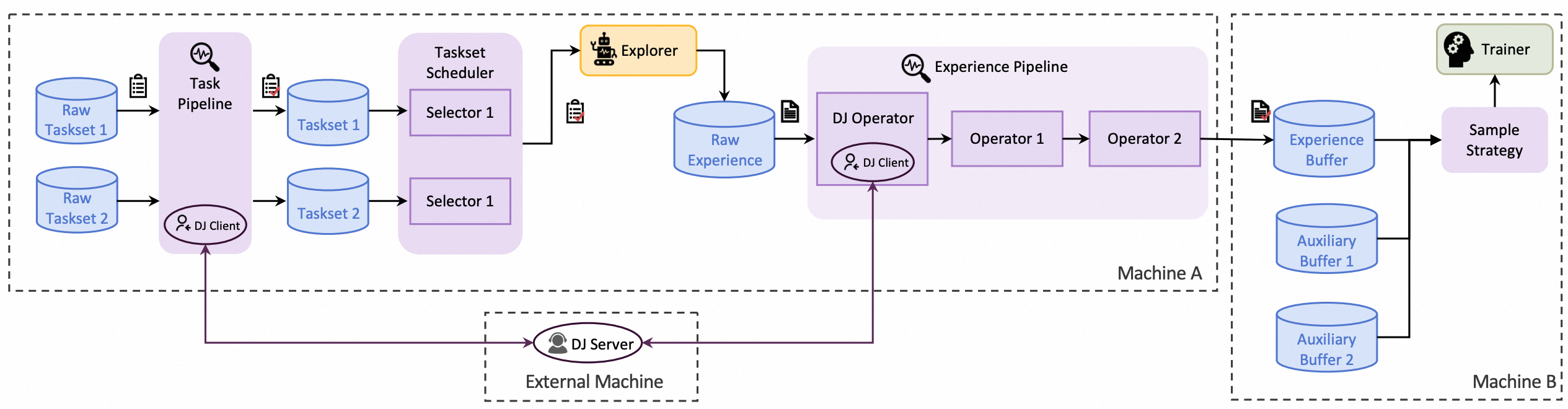

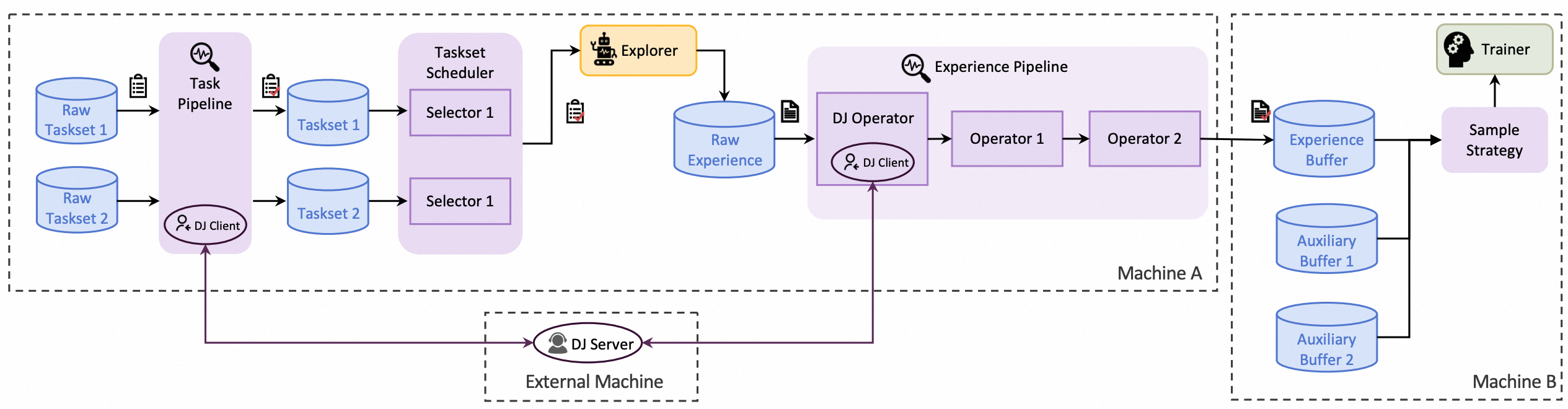

@@ -14,7 +14,7 @@ To support the data processing of Data-Juicer and RFT-related operators, Trinity

An overview of the data processor is shown in the following figure.

-

+

-* **通用 Agentic-RL:**

- - 支持拼接式和通用多轮交互,能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用。

+* **Agentic RL 支持:**

+ - 支持拼接式多轮和通用多轮交互

+ - 能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用

* **全流程的数据流水线:**

- - 支持 rollout 和经验数据的流水线处理,贯穿 RFT 生命周期实现主动管理(优先级、清洗、增强等)。

+ - 支持 rollout 任务和经验数据的流水线处理

+ - 贯穿 RFT 生命周期的主动数据管理(优先级排序、清洗、增强等)

+ - 原生支持多任务联合训练

-

* **用户友好的框架设计:**

- - 模块化、解耦架构,便于快速上手和二次开发。丰富的图形界面支持低代码使用。

+ - 即插即用模块与解耦式架构,便于快速上手和二次开发

+ - 丰富的图形界面,支持低代码使用

+

+

+

+## 🔨 教程与指南

+

+

+| Category | Tutorial / Guideline |
+| --- | --- |
+| 运行各种 RFT 模式 | + [快速开始:在 GSM8k 上运行 GRPO](/tutorial/example_reasoning_basic.md)<br>+ [Off-policy RFT](/tutorial/example_reasoning_advanced.md)<br>+ [全异步 RFT](/tutorial/example_async_mode.md)<br>+ [通过 DPO 或 SFT 进行离线学习](/tutorial/example_dpo.md) |
+| 多轮智能体场景 | + [拼接多轮任务](/tutorial/example_multi_turn.md)<br>+ [通用多轮任务](/tutorial/example_step_wise.md)<br>+ [调用智能体框架中的 ReAct 工作流](/tutorial/example_react.md) |
+| 数据流水线进阶能力 | + [Rollout 任务混合与选取](/tutorial/develop_selector.md)<br>+ [经验回放](https://github.com/modelscope/Trinity-RFT/tree/main/examples/ppo_countdown_exp_replay)<br>+ [高级数据处理能力 & Human-in-the-loop](/tutorial/example_data_functionalities.md) |
+| RL 算法开发/研究 | + [使用 Trinity-RFT 进行 RL 算法开发](/tutorial/example_mix_algo.md) ([论文](https://arxiv.org/pdf/2508.11408))<br>+ 不可验证的领域:[RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_ruler), [可训练 RULER](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_gsm8k_trainable_ruler), [rubric-as-reward](https://github.com/modelscope/Trinity-RFT/tree/main/examples/grpo_rubric_as_reward)<br>+ [研究项目: group-relative REINFORCE](https://github.com/modelscope/Trinity-RFT/tree/main/examples/rec_gsm8k) ([论文](https://arxiv.org/abs/2509.24203)) |
+| 深入认识 Trinity-RFT | + [完整配置指南](/tutorial/trinity_configs.md)<br>+ [用于快速验证和实验的 Benchmark 工具](https://github.com/modelscope/Trinity-RFT/tree/main/benchmark/README.md)<br>+ [理解 explorer-trainer 同步逻辑](/tutorial/synchronizer.md) |

+

+

+

## 致谢

本项目基于许多优秀的开源项目构建,包括:

-+ [verl](https://github.com/volcengine/verl) 和 [PyTorch's FSDP](https://pytorch.org/docs/stable/fsdp.html) 用于大模型训练;

++ [verl](https://github.com/volcengine/verl),[FSDP](https://pytorch.org/docs/stable/fsdp.html) 和 [Megatron-LM](https://github.com/NVIDIA/Megatron-LM) 用于大模型训练;

+ [vLLM](https://github.com/vllm-project/vllm) 用于大模型推理;

-+ [Data-Juicer](https://github.com/modelscope/data-juicer?tab=readme-ov-file) 用于数据处理管道;

++ [Data-Juicer](https://github.com/modelscope/data-juicer?tab=readme-ov-file) 用于数据处理流水线;

+ [AgentScope](https://github.com/agentscope-ai/agentscope) 用于智能体工作流;

+ [Ray](https://github.com/ray-project/ray) 用于分布式系统;

+ 我们也从 [OpenRLHF](https://github.com/OpenRLHF/OpenRLHF)、[TRL](https://github.com/huggingface/trl) 和 [ChatLearn](https://github.com/alibaba/ChatLearn) 等框架中汲取了灵感;

diff --git a/docs/sphinx_doc/source_zh/tutorial/example_data_functionalities.md b/docs/sphinx_doc/source_zh/tutorial/example_data_functionalities.md

index 35117c8547..673a428713 100644

--- a/docs/sphinx_doc/source_zh/tutorial/example_data_functionalities.md

+++ b/docs/sphinx_doc/source_zh/tutorial/example_data_functionalities.md

@@ -14,7 +14,7 @@ Trinity-RFT 提供了一个统一的数据处理器,用于处理 task 流水

数据处理器的整体架构如下图所示:

-

+

-* **通用 Agentic-RL:**

- - 支持拼接式和通用多轮交互,能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用。

+* **Agentic RL 支持:**

+ - 支持拼接式多轮和通用多轮交互

+ - 能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用

-* **通用 Agentic-RL:**

- - 支持拼接式和通用多轮交互,能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用。

+* **Agentic RL 支持:**

+ - 支持拼接式多轮和通用多轮交互

+ - 能够直接训练使用 AgentScope 等智能体框架开发的 Agent 应用

* **全流程的数据流水线:**

- - 支持 rollout 和经验数据的流水线处理,贯穿 RFT 生命周期实现主动管理(优先级、清洗、增强等)。

+ - 支持 rollout 任务和经验数据的流水线处理

+ - 贯穿 RFT 生命周期的主动数据管理(优先级排序、清洗、增强等)

+ - 原生支持多任务联合训练

-

* **全流程的数据流水线:**

- - 支持 rollout 和经验数据的流水线处理,贯穿 RFT 生命周期实现主动管理(优先级、清洗、增强等)。

+ - 支持 rollout 任务和经验数据的流水线处理

+ - 贯穿 RFT 生命周期的主动数据管理(优先级排序、清洗、增强等)

+ - 原生支持多任务联合训练

-  +

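+

* **User-Friendly Framework Design:**

- - Modular, decoupled architecture for quick onboarding and custom development. Rich graphical interfaces support low-code usage.

+ - Plug-and-play modules and a decoupled architecture for quick onboarding and custom development

+ - Rich graphical interfaces that support low-code usage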

+

+## 🔨 Tutorials and Guidelines

+

+

+| Category | Tutorial / Guideline |

+| --- | --- |

+| Run diverse RFT modes | + [Quick start: GRPO on GSM8k](https://modelscope.github.io/Trinity-RFT/en/main/tutorial/example_reasoning_basic.html)

+

-* **General Agentic-RL Support:**

- - Supports both concatenated and general multi-turn agentic workflows. Able to directly train agent applications developed using agent frameworks like AgentScope.

+* **Agentic RL Support:**

+ - Supports both concatenated and general multi-step agentic workflows.

+ - Able to directly train agent applications developed using agent frameworks like AgentScope.

-* **Full Lifecycle Data Pipelines:**

- - Enables pipeline processing of rollout and experience data, supporting active management (prioritization, cleaning, augmentation) throughout the RFT lifecycle.

+* **Full-Lifecycle Data Pipelines:**

+ - Enables pipeline processing of rollout tasks and experience samples.

+ - Active data management (e.g., prioritization, cleaning, augmentation) throughout the RFT lifecycle.

+ - Native support for multi-task joint learning.

-

-  +

+

* **User-Friendly Design:**

- - Modular, decoupled architecture for easy adoption and development. Rich graphical user interfaces enable low-code usage.

+ - Plug-and-play modules and decoupled architecture, facilitating easy adoption and development.

+ - Rich graphical user interfaces enable low-code usage.

+

+## 🔨 Tutorials and Guidelines

+

+

+| Category | Tutorial / Guideline |

+| --- | --- |

+| Run diverse RFT modes | + [Quick example: GRPO on GSM8k](/tutorial/example_reasoning_basic.md)

+

+

+

+

+

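-* **General Agentic-RL:**

- - Supports both concatenated and general multi-turn interaction, and can directly train agent applications developed with agent frameworks such as AgentScope.

+* **Agentic RL Support:**

+ - Supports both concatenated multi-turn and general multi-turn interaction

+ - Can directly train agent applications developed with agent frameworks such as AgentScope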

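* **Full-Lifecycle Data Pipelines:**

- - Supports pipeline processing of rollout and experience data, with active management (prioritization, cleaning, augmentation, etc.) throughout the RFT lifecycle.

+ - Supports pipeline processing of rollout tasks and experience data

+ - Active data management (prioritization, cleaning, augmentation, etc.) throughout the RFT lifecycle

+ - Native support for multi-task joint training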

-

-  +

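+

* **User-Friendly Framework Design:**

- - Modular, decoupled architecture for quick onboarding and custom development. Rich graphical interfaces support low-code usage.

+ - Plug-and-play modules and a decoupled architecture for quick onboarding and custom development

+ - Rich graphical interfaces that support low-code usage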

+

+

+

+## 🔨 Tutorials and Guidelines

+

+

+| Category | Tutorial / Guideline |

+| --- | --- |

+| Run diverse RFT modes | + [Quick start: GRPO on GSM8k](/tutorial/example_reasoning_basic.md)

+

+

+

+

+

+

+