Apache Kyuubi 1.5.1 failed to submit the Spark task? #4068

-


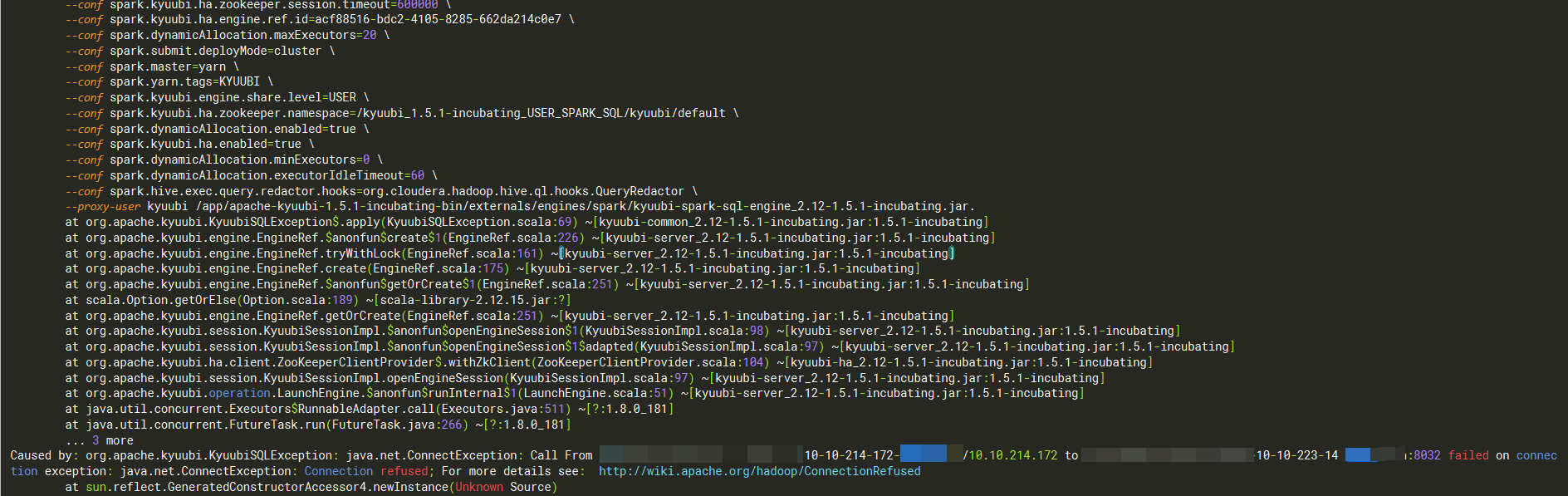

Describe the bug

Hello, I'm a new user of Apache Kyuubi. Our cluster runs Spark 2.4.8, and I only recently learned about Kyuubi. After installing it, I found that it could not be used: the log reports that it cannot connect to the ResourceManager on port 8032. What is the reason? `export HADOOP_CONF_DIR=/etc/hadoop/conf` is already set in the kyuubi-env.sh configuration file.

Affects Version(s)

Apache Kyuubi 1.5.1

Kyuubi Server Log Output

No response

Kyuubi Engine Log Output

16:59:58.704 INFO org.apache.curator.framework.imps.CuratorFrameworkImpl: backgroundOperationsLoop exiting

16:59:58.708 INFO org.apache.zookeeper.ZooKeeper: Session: 0x2016353d5c9001e closed

16:59:58.708 INFO org.apache.zookeeper.ClientCnxn: EventThread shut down for session: 0x2016353d5c9001e

16:59:58.709 INFO org.apache.kyuubi.operation.LaunchEngine: Processing kyuubi's query[4a095da4-66cf-42c2-8f0a-e8c82abc2711]: RUNNING_STATE -> ERROR_STATE, statement: LAUNCH_ENGINE, time taken: 180.456 seconds

16:59:58.710 ERROR org.apache.kyuubi.server.KyuubiTBinaryFrontendService: Error executing statement:

java.util.concurrent.ExecutionException: org.apache.kyuubi.KyuubiSQLException: Timeout(180000 ms) to launched SPARK_SQL engine with /opt/cloudera/parcels/SPARK24-2.4.8-bin-hadoop-3.0.0-cdh6.0.0-1/lib/spark24/bin/spark-submit \

--class org.apache.kyuubi.engine.spark.SparkSQLEngine \

--conf spark.kyuubi.session.engine.idle.timeout=PT10H \

--conf spark.kyuubi.ha.zookeeper.quorum=10.10.214.168:2181,10.10.214.169:2181,10.10.214.172:2181 \

--conf spark.kyuubi.client.ip=10.10.214.172 \

--conf spark.hive.query.redaction.rules=/opt/cloudera/parcels/CDH-6.0.0-1.cdh6.0.0.p0.537114/bin/../lib/hive/conf/redaction-rules.json \

--conf spark.kyuubi.engine.submit.time=1672736218275 \

--conf spark.kyuubi.ha.zookeeper.client.port=2181 \

--conf spark.app.name=kyuubi_USER_SPARK_SQL_kyuubi_default_acf88516-bdc2-4105-8285-662da214c0e7 \

--conf spark.kyuubi.ha.zookeeper.session.timeout=600000 \

--conf spark.kyuubi.ha.engine.ref.id=acf88516-bdc2-4105-8285-662da214c0e7 \

--conf spark.dynamicAllocation.maxExecutors=20 \

--conf spark.submit.deployMode=cluster \

--conf spark.master=yarn \

--conf spark.yarn.tags=KYUUBI \

--conf spark.kyuubi.engine.share.level=USER \

--conf spark.kyuubi.ha.zookeeper.namespace=/kyuubi_1.5.1-incubating_USER_SPARK_SQL/kyuubi/default \

--conf spark.dynamicAllocation.enabled=true \

--conf spark.kyuubi.ha.enabled=true \

--conf spark.dynamicAllocation.minExecutors=0 \

--conf spark.dynamicAllocation.executorIdleTimeout=60 \

--conf spark.hive.exec.query.redactor.hooks=org.cloudera.hadoop.hive.ql.hooks.QueryRedactor \

--proxy-user kyuubi /app/apache-kyuubi-1.5.1-incubating-bin/externals/engines/spark/kyuubi-spark-sql-engine_2.12-1.5.1-incubating.jar.

at java.util.concurrent.FutureTask.report(FutureTask.java:122) ~[?:1.8.0_181]

at java.util.concurrent.FutureTask.get(FutureTask.java:192) ~[?:1.8.0_181]

at org.apache.kyuubi.session.KyuubiSessionImpl.$anonfun$waitForEngineLaunched$1(KyuubiSessionImpl.scala:131) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.session.KyuubiSessionImpl.$anonfun$waitForEngineLaunched$1$adapted(KyuubiSessionImpl.scala:127) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at scala.Option.foreach(Option.scala:407) ~[scala-library-2.12.15.jar:?]

at org.apache.kyuubi.session.KyuubiSessionImpl.waitForEngineLaunched(KyuubiSessionImpl.scala:127) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.session.KyuubiSessionImpl.runOperation(KyuubiSessionImpl.scala:117) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.session.AbstractSession.$anonfun$executeStatement$1(AbstractSession.scala:122) ~[kyuubi-common_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.session.AbstractSession.withAcquireRelease(AbstractSession.scala:75) ~[kyuubi-common_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.session.AbstractSession.executeStatement(AbstractSession.scala:119) ~[kyuubi-common_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.service.AbstractBackendService.executeStatement(AbstractBackendService.scala:66) ~[kyuubi-common_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.server.KyuubiServer$$anon$1.org$apache$kyuubi$server$BackendServiceMetric$$super$executeStatement(KyuubiServer.scala:142) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.server.BackendServiceMetric.$anonfun$executeStatement$1(BackendServiceMetric.scala:62) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.metrics.MetricsSystem$.timerTracing(MetricsSystem.scala:111) ~[kyuubi-metrics_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.server.BackendServiceMetric.executeStatement(BackendServiceMetric.scala:62) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.server.BackendServiceMetric.executeStatement$(BackendServiceMetric.scala:55) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.server.KyuubiServer$$anon$1.executeStatement(KyuubiServer.scala:142) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.service.TFrontendService.ExecuteStatement(TFrontendService.scala:232) ~[kyuubi-common_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$ExecuteStatement.getResult(TCLIService.java:1557) ~[hive-service-rpc-3.1.2.jar:3.1.2]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$ExecuteStatement.getResult(TCLIService.java:1542) ~[hive-service-rpc-3.1.2.jar:3.1.2]

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) ~[libthrift-0.9.3.jar:0.9.3]

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) ~[libthrift-0.9.3.jar:0.9.3]

at org.apache.kyuubi.service.authentication.TSetIpAddressProcessor.process(TSetIpAddressProcessor.scala:36) ~[kyuubi-common_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) ~[libthrift-0.9.3.jar:0.9.3]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_181]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_181]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_181]

Caused by: org.apache.kyuubi.KyuubiSQLException: Timeout(180000 ms) to launched SPARK_SQL engine with /opt/cloudera/parcels/SPARK24-2.4.8-bin-hadoop-3.0.0-cdh6.0.0-1/lib/spark24/bin/spark-submit \

--class org.apache.kyuubi.engine.spark.SparkSQLEngine \

--conf spark.kyuubi.session.engine.idle.timeout=PT10H \

--conf spark.kyuubi.ha.zookeeper.quorum=10.10.214.168:2181,10.10.214.169:2181,10.10.214.172:2181 \

--conf spark.kyuubi.client.ip=10.10.214.172 \

--conf spark.hive.query.redaction.rules=/opt/cloudera/parcels/CDH-6.0.0-1.cdh6.0.0.p0.537114/bin/../lib/hive/conf/redaction-rules.json \

--conf spark.kyuubi.engine.submit.time=1672736218275 \

--conf spark.kyuubi.ha.zookeeper.client.port=2181 \

--conf spark.app.name=kyuubi_USER_SPARK_SQL_kyuubi_default_acf88516-bdc2-4105-8285-662da214c0e7 \

--conf spark.kyuubi.ha.zookeeper.session.timeout=600000 \

--conf spark.kyuubi.ha.engine.ref.id=acf88516-bdc2-4105-8285-662da214c0e7 \

--conf spark.dynamicAllocation.maxExecutors=20 \

--conf spark.submit.deployMode=cluster \

--conf spark.master=yarn \

--conf spark.yarn.tags=KYUUBI \

--conf spark.kyuubi.engine.share.level=USER \

--conf spark.kyuubi.ha.zookeeper.namespace=/kyuubi_1.5.1-incubating_USER_SPARK_SQL/kyuubi/default \

--conf spark.dynamicAllocation.enabled=true \

--conf spark.kyuubi.ha.enabled=true \

--conf spark.dynamicAllocation.minExecutors=0 \

--conf spark.dynamicAllocation.executorIdleTimeout=60 \

--conf spark.hive.exec.query.redactor.hooks=org.cloudera.hadoop.hive.ql.hooks.QueryRedactor \

--proxy-user kyuubi /app/apache-kyuubi-1.5.1-incubating-bin/externals/engines/spark/kyuubi-spark-sql-engine_2.12-1.5.1-incubating.jar.

at org.apache.kyuubi.KyuubiSQLException$.apply(KyuubiSQLException.scala:69) ~[kyuubi-common_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.engine.EngineRef.$anonfun$create$1(EngineRef.scala:226) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.engine.EngineRef.tryWithLock(EngineRef.scala:161) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.engine.EngineRef.create(EngineRef.scala:175) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.engine.EngineRef.$anonfun$getOrCreate$1(EngineRef.scala:251) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at scala.Option.getOrElse(Option.scala:189) ~[scala-library-2.12.15.jar:?]

at org.apache.kyuubi.engine.EngineRef.getOrCreate(EngineRef.scala:251) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.session.KyuubiSessionImpl.$anonfun$openEngineSession$1(KyuubiSessionImpl.scala:98) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.session.KyuubiSessionImpl.$anonfun$openEngineSession$1$adapted(KyuubiSessionImpl.scala:97) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.ha.client.ZooKeeperClientProvider$.withZkClient(ZooKeeperClientProvider.scala:104) ~[kyuubi-ha_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.session.KyuubiSessionImpl.openEngineSession(KyuubiSessionImpl.scala:97) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.operation.LaunchEngine.$anonfun$runInternal$1(LaunchEngine.scala:51) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_181]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_181]

... 3 more

Caused by: org.apache.kyuubi.KyuubiSQLException: java.net.ConnectException: Call From 10-10-214-172/10.10.214.172 to 10-10-223-14:8032 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.GeneratedConstructorAccessor4.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731)

at org.apache.hadoop.ipc.Client.call(Client.java:1474)

at org.apache.hadoop.ipc.Client.call(Client.java:1401)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)

at com.sun.proxy.$Proxy12.getClusterMetrics(Unknown Source)

at org.apache.hadoop.yarn.api.impl.pb.client.ApplicationClientProtocolPBClientImpl.getClusterMetrics(ApplicationClientProtocolPBClientImpl.java:202)

at sun.reflect.GeneratedMethodAccessor2.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy13.getClusterMetrics(Unknown Source)

at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.getYarnClusterMetrics(YarnClientImpl.java:461)

at org.apache.spark.deploy.yarn.Client$$anonfun$submitApplication$1.apply(Client.scala:165)

at org.apache.spark.deploy.yarn.Client$$anonfun$submitApplication$1.apply(Client.scala:165)

at org.apache.spark.internal.Logging$class.logInfo(Logging.scala:54)

at org.apache.spark.deploy.yarn.Client.logInfo(Client.scala:60)

at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:164)

at org.apache.spark.deploy.yarn.Client.run(Client.scala:1135)

at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1530)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:855)

at org.apache.spark.deploy.SparkSubmit$$anon$3.run(SparkSubmit.scala:146)

at org.apache.spark.deploy.SparkSubmit$$anon$3.run(SparkSubmit.scala:144)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1692)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:144)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:930)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:939)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:609)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:707)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:370)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1523)

at org.apache.hadoop.ipc.Client.call(Client.java:1440)

See more: /app/kyuubi/work/kyuubi/kyuubi-spark-sql-engine.log.16

at org.apache.kyuubi.KyuubiSQLException$.apply(KyuubiSQLException.scala:69) ~[kyuubi-common_2.12-1.5.1-incubating.jar:1.5.1-incubating]

at org.apache.kyuubi.engine.ProcBuilder.$anonfun$start$1(ProcBuilder.scala:165) ~[kyuubi-server_2.12-1.5.1-incubating.jar:1.5.1-incubating]

... 1 more

Kyuubi Server Configurations

kyuubi.authentication=NONE

kyuubi.engine.share.level=USER

kyuubi.frontend.bind.host=0.0.0.0

kyuubi.frontend.bind.port=10009

kyuubi.ha.zookeeper.quorum=10.10.214.168:2181,10.10.214.169:2181,10.10.214.172:2181

kyuubi.ha.zookeeper.client.port=2181

kyuubi.ha.zookeeper.session.timeout=600000

kyuubi.ha.zookeeper.namespace=kyuubi

kyuubi.session.engine.idle.timeout=PT10H

kyuubi.ha.enabled=true

spark.master=yarn

spark.submit.deployMode=cluster

spark.dynamicAllocation.enabled=true

spark.dynamicAllocation.minExecutors=0

spark.dynamicAllocation.maxExecutors=20

spark.dynamicAllocation.executorIdleTimeout=60

Kyuubi Engine Configurations

spark.authenticate=false

spark.driver.log.dfsDir=/user/spark/driver24Logs

spark.driver.log.persistToDfs.enabled=true

spark.dynamicAllocation.enabled=true

spark.dynamicAllocation.executorIdleTimeout=60

spark.dynamicAllocation.minExecutors=0

spark.dynamicAllocation.schedulerBacklogTimeout=1

spark.eventLog.enabled=true

spark.io.encryption.enabled=false

spark.lineage.enabled=true

spark.network.crypto.enabled=false

spark.serializer=org.apache.spark.serializer.KryoSerializer

spark.shuffle.service.enabled=true

spark.shuffle.service.port=7337

spark.ui.enabled=true

spark.ui.killEnabled=true

spark.master=yarn

spark.submit.deployMode=client

spark.eventLog.dir=hdfs://nameservice1/user/spark/spark24ApplicationHistory

spark.yarn.historyServer.address=http://10-10-223-21:18090

spark.yarn.jars=local:/opt/cloudera/parcels/SPARK24/lib/spark24/jars/*,local:/opt/cloudera/parcels/SPARK24/lib/spark24/hive/*

spark.driver.extraLibraryPath=/opt/cloudera/parcels/CDH-6.0.0-1.cdh6.0.0.p0.537114/lib/hadoop/lib/native

spark.executor.extraLibraryPath=/opt/cloudera/parcels/CDH-6.0.0-1.cdh6.0.0.p0.537114/lib/hadoop/lib/native

spark.yarn.am.extraLibraryPath=/opt/cloudera/parcels/CDH-6.0.0-1.cdh6.0.0.p0.537114/lib/hadoop/lib/native

spark.yarn.config.gatewayPath=/opt/cloudera/parcels

spark.yarn.config.replacementPath={{HADOOP_COMMON_HOME}}/../../..

spark.yarn.appMasterEnv.PYSPARK_PYTHON=/opt/cloudera/parcels/Anaconda-5.1.0.1/bin/python

spark.yarn.appMasterEnv.PYSPARK_DRIVER_PYTHON=/opt/cloudera/parcels/Anaconda-5.1.0.1/bin/python

spark.yarn.historyServer.allowTracking=true

spark.yarn.appMasterEnv.MKL_NUM_THREADS=1

spark.executorEnv.MKL_NUM_THREADS=1

spark.yarn.appMasterEnv.OPENBLAS_NUM_THREADS=1

spark.executorEnv.OPENBLAS_NUM_THREADS=1

Additional context

No response

Are you willing to submit PR?
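Since the root failure is a plain TCP `Connection refused` to 10-10-223-14:8032, one way to narrow it down is to check which ResourceManager address the client configuration in HADOOP_CONF_DIR actually points to, and whether anything is listening there. The Python sketch below is a minimal, hypothetical helper (the function names are illustrative, not part of Kyuubi, Spark, or Hadoop). It assumes yarn-site.xml lives in HADOOP_CONF_DIR (/etc/hadoop/conf in this post), reads the standard `yarn.resourcemanager.*` keys, falls back to YARN's default port 8032, and does not expand `${...}` variables.

```python
import os
import socket
import xml.etree.ElementTree as ET

DEFAULT_RM_PORT = 8032  # default port of yarn.resourcemanager.address


def parse_yarn_site(xml_data):
    """Return {name: value} for every <property> in a yarn-site.xml document."""
    props = {}
    for prop in ET.fromstring(xml_data).iter("property"):
        name, value = prop.findtext("name"), prop.findtext("value")
        if name is not None and value is not None:
            props[name.strip()] = value.strip()
    return props


def rm_client_addresses(props):
    """List the host:port pairs a YARN client would try for the ResourceManager.

    Covers the single-RM layout and the HA layout (yarn.resourcemanager.ha.rm-ids
    plus per-id keys). ${...} variable substitution is NOT performed.
    """
    if props.get("yarn.resourcemanager.ha.enabled", "false").lower() == "true":
        raw_ids = props.get("yarn.resourcemanager.ha.rm-ids", "").split(",")
        addrs = []
        for rm_id in (i.strip() for i in raw_ids if i.strip()):
            addr = props.get(f"yarn.resourcemanager.address.{rm_id}")
            host = props.get(f"yarn.resourcemanager.hostname.{rm_id}")
            if addr is None and host is not None:
                addr = f"{host}:{DEFAULT_RM_PORT}"
            if addr is not None:
                addrs.append(addr)
        return addrs
    addr = props.get("yarn.resourcemanager.address")
    if addr is None:
        # yarn-default.xml: hostname defaults to 0.0.0.0, address to <hostname>:8032
        addr = f"{props.get('yarn.resourcemanager.hostname', '0.0.0.0')}:{DEFAULT_RM_PORT}"
    return [addr]


def probe(addr, timeout=3.0):
    """Open and close a plain TCP connection; return None on success, else the error."""
    host, _, port = addr.rpartition(":")
    try:
        with socket.create_connection((host, int(port)), timeout=timeout):
            return None
    except OSError as exc:  # ConnectionRefusedError, timeouts, DNS failures
        return str(exc)


if __name__ == "__main__":
    conf_dir = os.environ.get("HADOOP_CONF_DIR", "/etc/hadoop/conf")
    path = os.path.join(conf_dir, "yarn-site.xml")
    if not os.path.exists(path):
        print(f"no yarn-site.xml at {path}")
    else:
        with open(path, "rb") as f:
            props = parse_yarn_site(f.read())
        for addr in rm_client_addresses(props):
            err = probe(addr)
            print(addr, "OK" if err is None else f"FAILED: {err}")
```

Running it on the Kyuubi host (10.10.214.172 in the log), as the same user that starts Kyuubi, mirrors where spark-submit was launched from.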


Replies: 4 comments 14 replies

-

Hello @2416210017,

-

The error message guides you to check
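In the engine log above, the trace nests three `Caused by:` sections. The innermost one (`java.net.ConnectException: Connection refused`) and the `Call From ... to 10-10-223-14:8032` message are the informative parts, because they show spark-submit itself could not reach the ResourceManager. A small, hypothetical Python helper for pulling those out of an engine log such as /app/kyuubi/work/kyuubi/kyuubi-spark-sql-engine.log.16 (the function names are illustrative only):

```python
import re
import sys

CAUSED_BY = re.compile(r"^\s*Caused by:\s*(.+)$")
REFUSED = re.compile(r"Call From \S+ to ([^\s:]+):(\d+) failed on connection exception")


def cause_chain(log_text):
    """Messages of every 'Caused by:' line, outermost first."""
    chain = []
    for line in log_text.splitlines():
        m = CAUSED_BY.match(line)
        if m:
            chain.append(m.group(1).strip())
    return chain


def root_cause(log_text):
    """Innermost cause (usually the most specific one), or None if there is none."""
    chain = cause_chain(log_text)
    return chain[-1] if chain else None


def refused_endpoints(log_text):
    """(host, port) pairs from Hadoop IPC 'Call From X to HOST:PORT failed ...' messages."""
    return [(m.group(1), int(m.group(2))) for m in REFUSED.finditer(log_text)]


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        text = f.read()
    print("root cause:", root_cause(text))
    print("refused endpoints:", refused_endpoints(text))
```

Pass the log path as the first argument; without one, the script does nothing.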


-

Kyuubi supports Spark 3.0 and above; it is not expected to work with Spark 2.4.
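Given that requirement, a quick sanity check is to compare the Spark version Kyuubi launches against 3.0. A minimal Python sketch with hypothetical helper names; it simply takes the first `x.y.z` it finds in the text, which for the spark-submit path in the log above is 2.4.8:

```python
import re

MIN_SPARK = (3, 0)  # per the reply: Kyuubi supports Spark 3.0 and above


def parse_spark_version(text):
    """First major.minor.patch found in text, e.g. a spark-submit path."""
    m = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if m is None:
        raise ValueError(f"no x.y.z version in {text!r}")
    return tuple(int(g) for g in m.groups())


def is_supported(version, minimum=MIN_SPARK):
    """Compare only as many components as `minimum` has."""
    return tuple(version[:len(minimum)]) >= minimum


if __name__ == "__main__":
    path = "/opt/cloudera/parcels/SPARK24-2.4.8-bin-hadoop-3.0.0-cdh6.0.0-1/lib/spark24/bin/spark-submit"
    v = parse_spark_version(path)
    print(".".join(map(str, v)), "supported" if is_supported(v) else "NOT supported")
```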


-

Problems of Spark environment
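The engine log is consistent with that: the exception is thrown inside `SparkSubmit` and `org.apache.spark.deploy.yarn.Client.submitApplication` (while fetching YARN cluster metrics), before Kyuubi's engine class ever starts, so the same failure should be reproducible with a plain spark-submit to YARN. A hedged Python sketch that builds such a smoke-test command from the spark-submit path in the log and `HADOOP_CONF_DIR=/etc/hadoop/conf` from kyuubi-env.sh. The examples-jar path is a placeholder you must replace, and SparkPi is just a convenient stock Spark example, not something Kyuubi uses.

```python
import os
import shlex
import subprocess

# spark-submit path copied from the Kyuubi engine log.
SPARK_SUBMIT = "/opt/cloudera/parcels/SPARK24-2.4.8-bin-hadoop-3.0.0-cdh6.0.0-1/lib/spark24/bin/spark-submit"
# PLACEHOLDER: point this at the Spark examples jar on your gateway host.
EXAMPLES_JAR = "/path/to/spark-examples.jar"


def build_smoke_test(spark_submit=SPARK_SUBMIT, jar=EXAMPLES_JAR):
    """argv for a SparkPi run against YARN with the same master/deploy mode Kyuubi used."""
    return [
        spark_submit,
        "--master", "yarn",
        "--deploy-mode", "cluster",
        "--class", "org.apache.spark.examples.SparkPi",
        jar,
        "10",  # number of partitions for SparkPi
    ]


def smoke_test(run=False, hadoop_conf_dir="/etc/hadoop/conf"):
    """Print the command; optionally run it with the HADOOP_CONF_DIR from kyuubi-env.sh."""
    cmd = build_smoke_test()
    print(f"HADOOP_CONF_DIR={hadoop_conf_dir} {shlex.join(cmd)}")
    if not run:
        return None
    env = dict(os.environ, HADOOP_CONF_DIR=hadoop_conf_dir)
    return subprocess.run(cmd, env=env, check=False).returncode


if __name__ == "__main__":
    smoke_test(run=False)  # set run=True on the Kyuubi host to actually submit
```

If this plain submission also fails with a ConnectException to port 8032, the problem lies in the Spark/YARN client setup on that host rather than in Kyuubi.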
