diff --git a/enterprise_versioned_docs/version-3.2.2/background-information/key-concepts/ssl-certificates.md b/enterprise_versioned_docs/version-3.2.2/background-information/key-concepts/ssl-certificates.md

deleted file mode 100644

index 7d6dd652..00000000

--- a/enterprise_versioned_docs/version-3.2.2/background-information/key-concepts/ssl-certificates.md

+++ /dev/null

@@ -1,153 +0,0 @@

----

-title: SSL Certificates

-slug: /key-concepts/ssl-certificates

----

-

-In this document, you will learn the basic concept of SSL certificate objects in APISIX and the scenarios where you may need them, including configuring TLS or mTLS between client applications, APISIX, and upstream servers. The document begins with the basics of SSL/TLS to help you understand when to use SSL certificate objects in APISIX.

-

-Explore additional resources at the end of the document for more information on related topics.

-

-## Overview

-

-_TLS (Transport Layer Security)_, the successor to the SSL (Secure Sockets Layer) protocol, is a cryptographic protocol designed to secure communication between two parties, such as a web browser and a web server. It runs on top of a reliable transport protocol, such as TCP, and secures application protocols, such as HTTP, by establishing a connection through a TLS handshake and encrypting data in transit.

-

-The following is a high-level overview of the **one-way TLS handshake** in [TLS v1.2](https://www.rfc-editor.org/rfc/rfc5246) and [TLS v1.3](https://www.rfc-editor.org/rfc/rfc8446)—the two most commonly used TLS versions:

-

-

-

-

-

-| | **TLS** | **mTLS** |

-|----------------------------------|---------------|----------|

-| **Client Application –– APISIX** | Required | Required |

-| **APISIX –– Upstream** | Not Required | Optional |

-

-

-

-You will learn about use cases and non-use cases of SSL objects for those scenarios.

-

-## TLS Between Client Applications and APISIX

-

-It is common practice to enforce TLS between client applications and APISIX, as data in this segment typically travels over the public internet and is therefore at a higher risk of eavesdropping.

-

-The following diagram illustrates the usage of an SSL object in implementing TLS over HTTP (also known as HTTPS) between client applications and APISIX, where APISIX is deployed at an arbitrary IP address `132.69.xxx.xxx` behind the domain name `foodservice.com` and acts as a gatekeeper between public traffic and internal services:

-

-

-

-

-

-

-

-Here are the key steps that took place in the illustration:

-

-1. The client application initiates a request to `https://foodservice.com`.

-

-2. The request first goes through a DNS server, which resolves the domain name to an IP address and returns the IP address to the client application.

-

-3. The client application sends the request for `foodservice.com` to the resolved IP address. During this process, the client application performs a [TLS handshake](#overview) with APISIX, in which APISIX sends its certificate `server.crt` to the client for authentication.

-

-4. As `foodservice.com` is included in the SNI list of the APISIX SSL object, the TLS handshake succeeds. The communication between the client application and APISIX is now encrypted with TLS.

-

-5. APISIX routes and forwards the request to the corresponding upstream services over HTTP. Note that the upstream services are exposed on the default port 80 and TLS is terminated at APISIX in this example.

-

-[//]:

-[//]:

-

-For detailed instructions on how to configure HTTPS between client and APISIX, please refer to the [how-to guide](../how-to-guide/traffic-management/tls-and-mtls/configure-https-between-client-and-apisix).

-

-## TLS Between APISIX and Upstreams

-

-Upstream services may require TLS in cases where the traffic between the API gateway and upstreams is not secure or private. In a one-way TLS setup between APISIX and upstreams, upstream servers are responsible for presenting the certificate and key. On the APISIX side, you only need to configure [upstreams](./upstreams.md) to use the HTTPS scheme and port 443 (or another designated port).

-

-For detailed instructions on how to configure TLS between APISIX and upstreams, please refer to the [how-to guide](../how-to-guide/traffic-management/tls-and-mtls/configure-upstream-https).

-

-## mTLS Between Client Applications and APISIX

-

-In closed systems where general access to back-end services is restricted, it is important for the server to verify the identity of the client to ensure that only authenticated and authorized clients are allowed to access the back-end services. One way to achieve this is to configure mTLS between the client and server. With mTLS, the client presents a certificate to the server during the TLS handshake process, and the server uses the certificate to verify the identity of the client. If the client is not authenticated, the server will reject the request.

-

-To configure mTLS between client applications and APISIX, in addition to the configuration required for TLS, you should also:

-

-1. Generate and configure certificates and keys on the client applications.

-

-2. Add the [Certificate Authority (CA)](https://en.wikipedia.org/wiki/Certificate_authority) certificate to the `client.ca` field in APISIX's SSL object, such as the following:

-

- ```json

- {

- "type": "server",

- "sni": "foodservice.com",

- "cert": "",

- "key": "",

- # highlight-start

- "client": {

- "ca": ""

- }

- # highlight-end

- }

- ```

-

- where the CA certificate is used to verify the digital signatures on client certificates issued by the CA, thereby verifying the identity of client applications.

-

-For detailed instructions on how to configure mTLS between client and APISIX, please refer to the [how-to guide](../how-to-guide/traffic-management/tls-and-mtls/configure-mtls-between-client-and-apisix).

-

-[//]:

-[//]:

-

-## mTLS Between APISIX and Upstreams

-

-mTLS between an API gateway and its upstream services is typically implemented in high-security environments by organizations, such as financial institutions, who need to stay compliant with relevant security standards and regulations.

-

-In APISIX, whether to use an SSL object in configuring mTLS between APISIX and its upstream services is determined by whether the configuration will be repetitive.

-

-If the certificate is valid for only one domain, you can choose to directly configure the certificate and key in the upstream object:

-

-

-

-

-

-When a certificate, such as a wildcard certificate, is valid for multiple domains, it is recommended to create a single SSL object to store the certificate and key and avoid the repetitive TLS configurations on upstreams:

-

-

-

-

-

-

-

-For detailed instructions on how to configure mTLS between APISIX and upstreams, please refer to the how-to guide (coming soon).

-

-## Additional Resource(s)

-

-* Key Concepts

-

- * [Routes](./routes.md)

- * [Upstreams](./upstreams.md)

-

-[//]:

-[//]:

-[//]:

-[//]:

diff --git a/enterprise_versioned_docs/version-3.2.2/background-information/key-concepts/upstreams.md b/enterprise_versioned_docs/version-3.2.2/background-information/key-concepts/upstreams.md

deleted file mode 100644

index 970f6686..00000000

--- a/enterprise_versioned_docs/version-3.2.2/background-information/key-concepts/upstreams.md

+++ /dev/null

@@ -1,73 +0,0 @@

----

-title: Upstreams

-slug: /key-concepts/upstreams

----

-

-In this document, you will learn the basic concept of an upstream object in API7 Enterprise Edition and why you would want to use it. You will be introduced to a few relevant features, including load balancing, service discovery, and upstream health checking.

-

-Explore additional resources at the end for more information on related topics.

-

-## Overview

-

-An _upstream_ object in API7 Enterprise Edition is a logical abstraction of a set containing one or more upstream addresses. It is required in [services](../key-concepts/services.md) to specify **where** requests flow to and **how** they are distributed.

-

-Here is an example of such a configuration in service routes, where the same upstream address is repeated across three different service routes:

-

-

-

-

-

-

-

-As you can probably see, large-scale systems with many services would benefit significantly from configuring identical groups of upstream addresses in upstream objects, reducing redundant information and operational costs.

-

-## Load Balancing

-

-An important use case of upstreams is to help [enable load balancing](../../getting-started/load-balancing.md) - that is, outlining where client requests are forwarded to and how they are distributed among back-end replicas.

-

-In upstreams, there are four load-balancing algorithms available to choose from:

-

-* `Round Robin` - weighted round robin

-* `Consistent Hash` - consistent hashing

-* `Exponentially Weighted Moving Average (EWMA)` - exponentially weighted moving average

-* `Least Connection` - least connections

-

-For detailed instructions and explanation about load balancing in API7 Enterprise Edition, please refer to the load balancing how-to guide and API Reference (coming soon).

-

-[//]:

-[//]:

-

-## Service Discovery

-

-While it is straightforward to configure upstream addresses statically, in microservice-based architectures, upstream addresses are often dynamically assigned and therefore change during autoscaling, failures, and updates. Static configurations are less than ideal in this case.

-

-Service discovery comes to the rescue. It describes the process of automatically detecting the available upstream services and keeping their addresses in a database (called a service registry) for others to reference. That way, an API gateway can always fetch the latest list of upstream addresses through the registry, ensuring all requests are forwarded to healthy upstream nodes.

-

-API7 Enterprise Edition supports integrations with many service registries, such as Consul, Eureka, Nacos, Kubernetes service discovery, and more.

-

-For more details about how to integrate with third-party service registries, please see Service Discovery (coming soon).

-

-[//]:

-

-## Upstream Health Checking

-

-API7 Enterprise Edition provides active and passive health checking options to probe if upstream nodes are online (a.k.a. healthy). Unhealthy upstream nodes will be ignored until they recover and are deemed healthy again.

-

-Upstream health checking can be configured in the `checks` parameter in an upstream object.

-

-More details about how to configure upstream health checking will be provided in Active and Passive Health Checking (coming soon).

-

-[//]:

-[//]:

-

-## Additional Resource(s)

-

-* Getting Started - [Load Balancing](../../getting-started/load-balancing.md)

-[//]:

-[//]:

-[//]:

-[//]:

diff --git a/enterprise_versioned_docs/version-3.2.2/enterprise-edition/features/audit.md b/enterprise_versioned_docs/version-3.2.2/enterprise-edition/features/audit.md

deleted file mode 100644

index 37859229..00000000

--- a/enterprise_versioned_docs/version-3.2.2/enterprise-edition/features/audit.md

+++ /dev/null

@@ -1,6 +0,0 @@

----

-title: Audit

-slug: /enterprise-edition/audit

----

-

-Coming soon.

diff --git a/enterprise_versioned_docs/version-3.2.2/enterprise-edition/features/rbac.md b/enterprise_versioned_docs/version-3.2.2/enterprise-edition/features/rbac.md

deleted file mode 100644

index 9da97349..00000000

--- a/enterprise_versioned_docs/version-3.2.2/enterprise-edition/features/rbac.md

+++ /dev/null

@@ -1,6 +0,0 @@

----

-title: RBAC

-slug: /enterprise-edition/rabc

----

-

-Coming soon.

diff --git a/enterprise_versioned_docs/version-3.2.2/enterprise-edition/install-enterprise-trial.md b/enterprise_versioned_docs/version-3.2.2/enterprise-edition/install-enterprise-trial.md

deleted file mode 100644

index 22fb4334..00000000

--- a/enterprise_versioned_docs/version-3.2.2/enterprise-edition/install-enterprise-trial.md

+++ /dev/null

@@ -1,10 +0,0 @@

----

-title: Install API7 Enterprise

-slug: /enterprise-edition/install

----

-

-You can install API7 Enterprise with a trial option to start for free.

-

-Visit [API7 Enterprise](https://api7.ai/try?product=enterprise) and complete the download form to obtain a trial license, which should be sent to the email you provided.

-

-Proceeding with the download, you will be redirected to the installation instructions.

diff --git a/enterprise_versioned_docs/version-3.2.2/getting-started/configure-routes.md b/enterprise_versioned_docs/version-3.2.2/getting-started/configure-routes.md

deleted file mode 100644

index fbcdfc8e..00000000

--- a/enterprise_versioned_docs/version-3.2.2/getting-started/configure-routes.md

+++ /dev/null

@@ -1,67 +0,0 @@

----

-title: Configure Routes

-slug: /getting-started/configure-routes

----

-

-Apache APISIX provides flexible gateway management capabilities based on routes, where routing paths and targets are defined for requests.

-

-This tutorial guides you on how to create a route and validate it. You will complete the following steps:

-

-1. Create a route with a sample _upstream_ that points to [httpbin.org](http://httpbin.org).

-2. Use _cURL_ to send a test request to see how APISIX proxies and forwards the request.

-

-## What Is a Route

-

-A _route_ is a routing path to upstream targets. In [Apache APISIX](https://api7.ai/apisix), routes are responsible for matching client requests based on defined rules, loading and executing the corresponding plugins, and forwarding requests to the specified upstream services.

-

-In APISIX, a simple route can be set up with a path-matching URI and a corresponding upstream address.

-

-## What Is an Upstream

-

-An _upstream_ is a set of target nodes that serve the same function. It defines a virtual host abstraction that performs load balancing on a given set of service nodes according to the configured rules.

-

-## Prerequisite(s)

-

-1. Complete [Get APISIX](./) to install APISIX.

-

-## Create a Route

-

-In this section, you will create a route that forwards client requests to [httpbin.org](http://httpbin.org), a public HTTP request and response service.

-

-The following command creates a route, which should forward all requests sent to `http://127.0.0.1:9080/ip` to [httpbin.org/ip](http://httpbin.org/ip):

-

-[//]:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes" -X PUT -d '

-{

- "id": "getting-started-ip",

- "uri": "/ip",

- "upstream": {

- "type": "roundrobin",

- "nodes": {

- "httpbin.org:80": 1

- }

- }

-}'

-```

-

-You will receive an `HTTP/1.1 201 Created` response if the route was created successfully.

-

-## Validate

-

-```shell

-curl "http://127.0.0.1:9080/ip"

-```

-

-The expected response is similar to the following:

-

-```text

-{

- "origin": "183.94.122.205"

-}

-```

-

-## What's Next

-

-This tutorial creates a route with only one target node. In the next tutorial, you will learn how to configure load balancing with multiple target nodes.

diff --git a/enterprise_versioned_docs/version-3.2.2/getting-started/get-apisix.md b/enterprise_versioned_docs/version-3.2.2/getting-started/get-apisix.md

deleted file mode 100644

index c0e6b56f..00000000

--- a/enterprise_versioned_docs/version-3.2.2/getting-started/get-apisix.md

+++ /dev/null

@@ -1,62 +0,0 @@

----

-title: Get APISIX

-slug: /getting-started/

----

-

-Apache APISIX is a dynamic, real-time, and high-performance API Gateway. It is a [top-level project](https://projects.apache.org/project.html?apisix) of the Apache Software Foundation.

-

-You can use APISIX API Gateway as a traffic entrance to process all business data. It offers features including dynamic routing, dynamic upstream, dynamic certificates, A/B testing, canary release, blue-green deployment, limit rate, defense against malicious attacks, metrics, monitoring alarms, service observability, service governance, and more.

-

-This tutorial uses a script to quickly install [Apache APISIX](https://api7.ai/apisix) in your local environment and verifies the installation through the Admin API. You can also use [API7 Cloud](https://api7.ai/cloud), a cloud-hosted service, to manage APISIX.

-

-## Prerequisite(s)

-

-* Install [Docker](https://docs.docker.com/get-docker/) to be used in the quickstart script to create containerized **etcd** and **APISIX**.

-* Install [cURL](https://curl.se/) to be used in the quickstart script and to send requests to APISIX for validation.

-

-## Get APISIX

-

-:::caution

-

-To provide a better experience in this tutorial, the requirement of Admin API key is switched off by default. Please turn on the API key requirement of Admin API in the production environment.

-

-:::

-

-APISIX can be easily installed and started with the quickstart script:

-

-```shell

-curl -sL https://run.api7.ai/apisix/quickstart-v3.2.0 | sh

-```

-

-The script starts two Docker containers, `apisix-quickstart` and `etcd-quickstart` in the `apisix-quickstart-net` Docker network, where etcd is used to store APISIX configurations.

-

-You should see the following message once APISIX is ready:

-

-```text

-✔ APISIX is ready!

-```

-

-## Validate

-

-Once APISIX is running, you can use curl to send a request to see if APISIX is working properly:

-

-```shell

-curl -sI "http://127.0.0.1:9080" | grep Server

-```

-

-If everything is ok, you will get the APISIX version similar to the following:

-

-```text

-Server: APISIX/3.2.0

-```

-

-APISIX is now installed and running.

-

-## Next Steps

-

-The following tutorials build on this running APISIX instance. Please keep everything running and move on to the next step.

-

-* [Configure Routes](configure-routes.md)

-* [Load Balancing](load-balancing.md)

-* [Rate Limiting](rate-limiting.md)

-* [Key Authentication](key-authentication.md)

diff --git a/enterprise_versioned_docs/version-3.2.2/getting-started/install-api7-ee.md b/enterprise_versioned_docs/version-3.2.2/getting-started/install-api7-ee.md

new file mode 100644

index 00000000..f4064930

--- /dev/null

+++ b/enterprise_versioned_docs/version-3.2.2/getting-started/install-api7-ee.md

@@ -0,0 +1,4 @@

+---

+title: Install API7 Enterprise Edition

+slug: /getting-started/install-api7-ee

+---

\ No newline at end of file

diff --git a/enterprise_versioned_docs/version-3.2.2/getting-started/key-authentication.md b/enterprise_versioned_docs/version-3.2.2/getting-started/key-authentication.md

deleted file mode 100644

index 3c81cf38..00000000

--- a/enterprise_versioned_docs/version-3.2.2/getting-started/key-authentication.md

+++ /dev/null

@@ -1,148 +0,0 @@

----

-title: Key Authentication

-slug: /getting-started/key-authentication

----

-

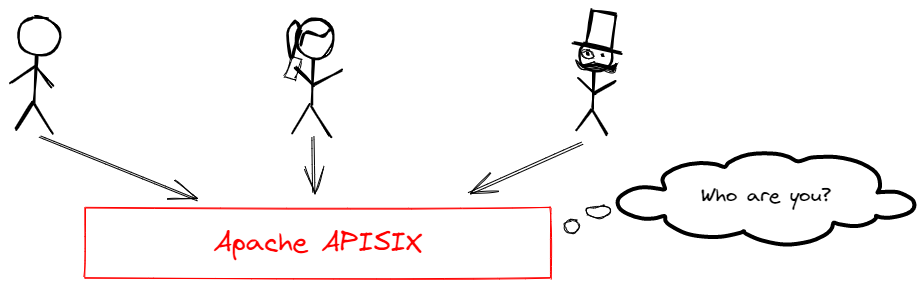

-An API gateway's primary role is to connect API consumers and providers. For security reasons, it should authenticate and authorize consumers before granting access to internal resources.

-

-

-

-APISIX has a flexible plugin extension system and a number of existing plugins for user authentication and authorization. For example:

-

-- [Key Authentication](https://apisix.apache.org/docs/apisix/plugins/key-auth/)

-- [Basic Authentication](https://apisix.apache.org/docs/apisix/plugins/basic-auth/)

-- [JSON Web Token (JWT) Authentication](https://apisix.apache.org/docs/apisix/plugins/jwt-auth/)

-- [Keycloak](https://apisix.apache.org/docs/apisix/plugins/authz-keycloak/)

-- [Casdoor](https://apisix.apache.org/docs/apisix/plugins/authz-casdoor/)

-- [Wolf RBAC](https://apisix.apache.org/docs/apisix/plugins/wolf-rbac/)

-- [OpenID Connect](https://apisix.apache.org/docs/apisix/plugins/openid-connect/)

-- [Central Authentication Service (CAS)](https://apisix.apache.org/docs/apisix/plugins/cas-auth/)

-- [HMAC](https://apisix.apache.org/docs/apisix/plugins/hmac-auth/)

-- [Casbin](https://apisix.apache.org/docs/apisix/plugins/authz-casbin/)

-- [LDAP](https://apisix.apache.org/docs/apisix/plugins/ldap-auth/)

-- [Open Policy Agent (OPA)](https://apisix.apache.org/docs/apisix/plugins/opa/)

-- [Forward Authentication](https://apisix.apache.org/docs/apisix/plugins/forward-auth/)

-

-In this tutorial, you will create a consumer with key authentication, and learn how to enable and disable key authentication.

-

-## What Is a Consumer

-

-A _consumer_ is an application or a developer who consumes the API.

-

-In APISIX, a consumer requires a unique `username` to be created. As part of the key authentication configuration, you would also add one of the authentication plugins from the list above to the consumer's `plugins` field.

-

-## What Is Key Authentication

-

-Key authentication is a relatively simple but widely used authentication approach. The idea is as follows:

-

-1. Administrator adds an authentication key (API key) to the Route.

-2. API consumers add the key to the query string or headers for authentication when sending requests.

-

-## Enable Key Authentication

-

-### Prerequisite(s)

-

-1. Complete [Get APISIX](./) to install APISIX.

-2. Complete [Configure Routes](./configure-routes#whats-a-route).

-

-### Create a Consumer

-

-Create a consumer named `tom` and enable the `key-auth` plugin with an API key `secret-key`. All requests sent with the key `secret-key` should be authenticated as `tom`.

-

-:::caution

-

-Please use a complex key in the Production environment.

-

-:::

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/consumers" -X PUT -d '

-{

- "username": "tom",

- "plugins": {

- "key-auth": {

- "key": "secret-key"

- }

- }

-}'

-```

-

-You will receive an `HTTP/1.1 201 Created` response if the consumer was created successfully.

-

-### Enable Authentication

-

-Re-using the same route `getting-started-ip` from [Configure Routes](./configure-routes), you can use the `PATCH` method to add the `key-auth` plugin to the route:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes/getting-started-ip" -X PATCH -d '

-{

- "plugins": {

- "key-auth": {}

- }

-}'

-```

-

-You will receive an `HTTP/1.1 200 OK` response if the plugin was added successfully.

-

-### Validate

-

-Validate if authentication is successfully enabled in the following steps.

-

-#### 1. Send a request without any key

-

-Send a request without the `apikey` header.

-

-```shell

-curl -i "http://127.0.0.1:9080/ip"

-```

-

-Since the key is not provided, you will receive an `HTTP/1.1 401 Unauthorized` response.

-

-#### 2. Send a request with a wrong key

-

-Send a request with a wrong key in the `apikey` header.

-

-```shell

-curl -i "http://127.0.0.1:9080/ip" -H 'apikey: wrong-key'

-```

-

-Since the key is incorrect, you will receive an `HTTP/1.1 401 Unauthorized` response.

-

-#### 3. Send a request with the correct key

-

-Send a request with the correct key in the `apikey` header.

-

-```shell

-curl -i "http://127.0.0.1:9080/ip" -H 'apikey: secret-key'

-```

-

-Since the correct key is provided, you will receive an `HTTP/1.1 200 OK` response.

-

-### Disable Authentication

-

-Disable the key authentication plugin by setting the `_meta.disable` parameter to `true`.

-

-```shell

-curl "http://127.0.0.1:9180/apisix/admin/routes/getting-started-ip" -X PATCH -d '

-{

- "plugins": {

- "key-auth": {

- "_meta": {

- "disable": true

- }

- }

- }

-}'

-```

-

-Send a request without any key to validate:

-

-```shell

-curl -i "http://127.0.0.1:9080/ip"

-```

-

-Since key authentication is disabled, you will receive an `HTTP/1.1 200 OK` response.

-

-## What's Next

-

-You have learned how to configure key authentication for a route. In the next tutorial, you will learn how to configure rate limiting.

diff --git a/enterprise_versioned_docs/version-3.2.2/getting-started/load-balancing.md b/enterprise_versioned_docs/version-3.2.2/getting-started/load-balancing.md

deleted file mode 100644

index d79e93c1..00000000

--- a/enterprise_versioned_docs/version-3.2.2/getting-started/load-balancing.md

+++ /dev/null

@@ -1,75 +0,0 @@

----

-title: Load Balancing

-slug: /getting-started/load-balancing

----

-

-Load balancing is a technique used to distribute network request loads. It is a key consideration in designing systems that need to handle a large volume of traffic, allowing for improved system performance, scalability, and reliability.

-

-Apache APISIX supports a variety of [load balancing algorithms](../background-information/key-concepts/upstreams.md#load-balancing), one of which is the weighted round-robin algorithm. This algorithm distributes incoming requests over a set of servers in a cyclical pattern.

-

-In this tutorial, you will create a route with two upstream services and use the round-robin load balancing algorithm to distribute requests between them.

-

-## Prerequisite(s)

-

-1. Complete [Get APISIX](./) to install APISIX.

-2. Understand APISIX [Route and Upstream](./configure-routes#whats-a-route).

-

-## Enable Load Balancing

-

-Create a route that distributes requests across two upstream services, [httpbin.org](https://httpbin.org/headers) and [mock.api7.ai](https://mock.api7.ai/headers). Both services respond with the request headers when receiving a request at `/headers`:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes" -X PUT -d '

-{

- "id": "getting-started-headers",

- "uri": "/headers",

- "upstream" : {

-# highlight-start

- // Annotate 1

- "type": "roundrobin",

- // Annotate 2

- "nodes": {

-# highlight-end

- "httpbin.org:443": 1,

- "mock.api7.ai:443": 1

- },

-# highlight-start

- // Annotate 3

- "pass_host": "node",

- // Annotate 4

- "scheme": "https"

-# highlight-end

- }

-}'

-```

-

-❶ `type`: use `roundrobin` as the load balancing algorithm.

-

-❷ `nodes`: upstream services.

-

-❸ `pass_host`: use `node` to pass the host header to the upstream.

-

-❹ `scheme`: use `https` to enable TLS with upstream.

-

-You should receive an `HTTP/1.1 201 Created` response if the route was created successfully.

-

-## Validate

-

-Generate 50 consecutive requests to APISIX `/headers` route to see the load-balancing effect:

-

-```shell

-resp=$(seq 50 | xargs -I{} curl "http://127.0.0.1:9080/headers" -sL) && \

- count_httpbin=$(echo "$resp" | grep "httpbin.org" | wc -l) && \

- count_mockapi7=$(echo "$resp" | grep "mock.api7.ai" | wc -l) && \

- echo httpbin.org: $count_httpbin, mock.api7.ai: $count_mockapi7

-```

-

-The command counts the number of requests handled by each of the two services. The output shows that requests were distributed across the two services:

-

-```text

-httpbin.org: 23, mock.api7.ai: 27

-```

-

-## What's Next

-

-You have learned how to configure load balancing. In the next tutorial, you will learn how to configure key authentication.

diff --git a/enterprise_versioned_docs/version-3.2.2/getting-started/rate-limiting.md b/enterprise_versioned_docs/version-3.2.2/getting-started/rate-limiting.md

deleted file mode 100644

index e8988c9d..00000000

--- a/enterprise_versioned_docs/version-3.2.2/getting-started/rate-limiting.md

+++ /dev/null

@@ -1,104 +0,0 @@

----

-title: Rate Limiting

-slug: /getting-started/rate-limiting

----

-

-APISIX is a unified control point, managing the ingress and egress of API and microservice traffic. In addition to legitimate client requests, this traffic may also include unwanted requests generated by web crawlers as well as cyber attacks, such as DDoS.

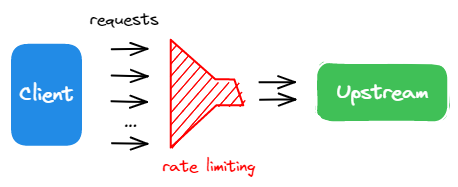

-

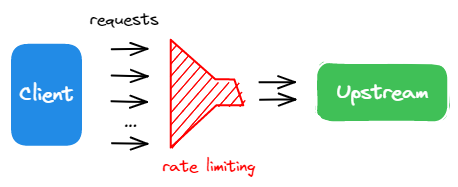

-APISIX offers rate limiting capabilities to protect APIs and microservices by limiting the number of requests sent to upstream services in a given period of time. Requests are counted in memory, which keeps latency low and performance high.

-

-

-

-

-

-

-In this tutorial, you will enable the `limit-count` plugin to set a rate limiting constraint on the incoming traffic.

-

-## Prerequisite(s)

-

-1. Complete the [Get APISIX](./) step to install APISIX first.

-2. Complete the [Configure Routes](./configure-routes#whats-a-route) step.

-

-## Enable Rate Limiting

-

-The following route `getting-started-ip` is inherited from [Configure Routes](./configure-routes). You only need to use the `PATCH` method to add the `limit-count` plugin to the route:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes/getting-started-ip" -X PATCH -d '

-{

- "plugins": {

- "limit-count": {

- "count": 2,

- "time_window": 10,

- "rejected_code": 429

- }

- }

-}'

-```

-

-You will receive an `HTTP/1.1 200 OK` response if the plugin was added successfully. The above configuration limits the incoming requests to a maximum of 2 requests within 10 seconds.

-

-### Validate

-

-Generate 50 consecutive requests to see the rate limiting plugin in effect.

-

-```shell

-resp=$(seq 50 | xargs -I{} curl "http://127.0.0.1:9080/ip" -o /dev/null -s -w "%{http_code}\n") && \

- count_200=$(echo "$resp" | grep "200" | wc -l) && \

- count_429=$(echo "$resp" | grep "429" | wc -l) && \

- echo "200": $count_200, "429": $count_429

-```

-

-The results are as expected: out of the 50 requests, 2 requests were sent successfully (status code `200`) while the others were rejected (status code `429`).

-

-```text

-"200": 2, "429": 48

-```

-

-## Disable Rate Limiting

-

-Disable rate limiting by setting the `_meta.disable` parameter to `true`:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes/getting-started-ip" -X PATCH -d '

-{

- "plugins": {

- "limit-count": {

- "_meta": {

- "disable": true

- }

- }

- }

-}'

-```

-

-### Validate

-

-Generate 50 requests again to validate if it is disabled:

-

-```shell

-resp=$(seq 50 | xargs -I{} curl "http://127.0.0.1:9080/ip" -o /dev/null -s -w "%{http_code}\n") && \

- count_200=$(echo "$resp" | grep "200" | wc -l) && \

- count_429=$(echo "$resp" | grep "429" | wc -l) && \

- echo "200": $count_200, "429": $count_429

-```

-

-The results below show that all of the requests were sent successfully:

-

-```text

-"200": 50, "429": 0

-```

-

-## More

-

-[//]:

-[//]:

-

-You can use [APISIX variables](../reference/built-in-variables.md#apisix-variables), such as `$host` and `$uri`, to configure fine-grained matching rules for rate limiting. In addition, APISIX also supports rate limiting at the cluster level using Redis.

-

-## What's Next

-

-Congratulations! You have learned how to configure rate limiting and completed the Getting Started tutorials.

-

-You can continue to explore other documentation to customize APISIX and meet your production needs.

diff --git a/enterprise_versioned_docs/version-3.2.2/getting-started/set-up-and-launch-init-api.md b/enterprise_versioned_docs/version-3.2.2/getting-started/set-up-and-launch-init-api.md

new file mode 100644

index 00000000..79c9dafc

--- /dev/null

+++ b/enterprise_versioned_docs/version-3.2.2/getting-started/set-up-and-launch-init-api.md

@@ -0,0 +1,4 @@

+---

+title: Set Up and Launch Your Initial API

+slug: /getting-started/set-up-and-launch-init-api

+---

\ No newline at end of file

diff --git a/enterprise_versioned_docs/version-3.2.2/how-to-guide/authentication/set-up-sso-with-oidc-and-keycloak.md b/enterprise_versioned_docs/version-3.2.2/how-to-guide/authentication/set-up-sso-with-oidc-and-keycloak.md

deleted file mode 100644

index ada5e0a8..00000000

--- a/enterprise_versioned_docs/version-3.2.2/how-to-guide/authentication/set-up-sso-with-oidc-and-keycloak.md

+++ /dev/null

@@ -1,340 +0,0 @@

----

-title: Set Up SSO with OIDC and Keycloak

-slug: /how-to-guide/authentication/set-up-sso-with-oidc-and-keycloak

----

-

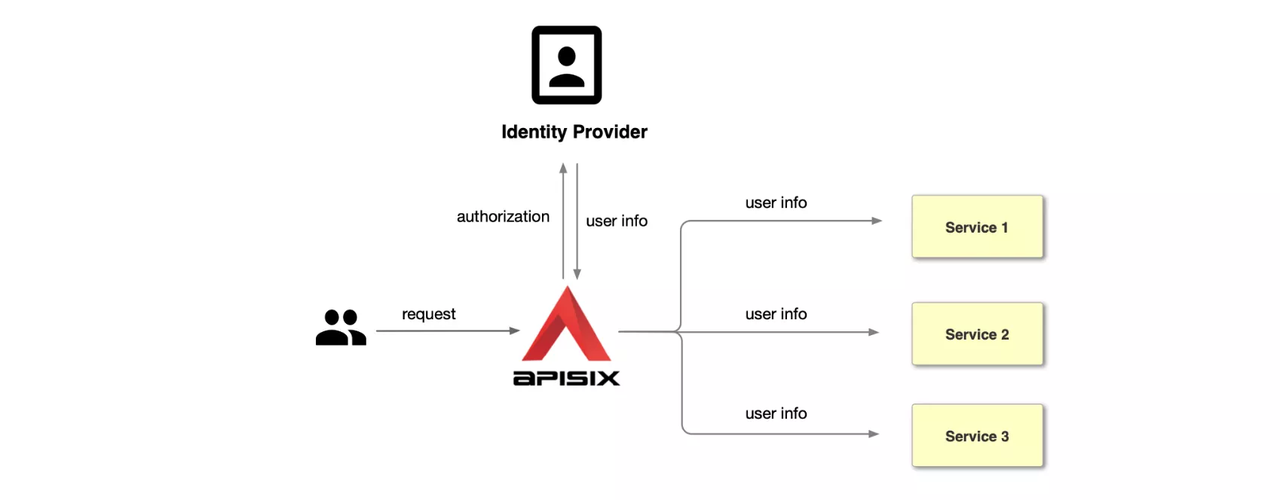

-[OpenID Connect (OIDC)](https://openid.net/connect/) is a simple identity layer on top of the [OAuth 2.0 protocol](https://www.rfc-editor.org/rfc/rfc6749). It allows clients to verify the identity of end users based on the authentication performed by the identity provider, as well as to obtain basic profile information about end users in an interoperable and REST-like manner.

-

-[Keycloak](https://www.keycloak.org/) is an open source identity and access management solution for modern applications and services. Keycloak supports single sign-on (SSO), which enables services to interface with Keycloak through protocols such as OIDC and OAuth 2.0. Keycloak also supports delegating authentication to third-party identity providers such as Facebook and Google.

-

-APISIX supports SSO with OIDC to protect APIs by integrating Keycloak as an identity provider.

-

-This guide will show you how to use the plugin `openid-connect` to integrate APISIX with Keycloak. There are [two types of use cases](https://www.keycloak.org/docs/latest/securing_apps/#openid-connect-2) when using OIDC and Keycloak:

-

-* Applications ask the Keycloak server to authenticate users. It is described in [Authentication With User Credentials](#authentication-with-user-credentials).

-* Clients want to gain access to remote services. It is described in [Authentication With Access Token](#authentication-with-access-token).

-

-

-

-## Prerequisite(s)

-

-* Install [Docker](https://docs.docker.com/get-docker/).

-* Install [cURL](https://curl.se/) to send requests to the services for validation.

-* Follow the [Getting Started tutorial](../../getting-started/) to start a new APISIX instance in Docker.

-

-## Configure Keycloak

-

-Start a Keycloak instance named `apisix-quickstart-keycloak` with the administrator name `quickstart-admin` and password `quickstart-admin-pass` in [development mode](https://www.keycloak.org/server/configuration#_starting_keycloak_in_development_mode) in Docker. The exposed port is mapped to 8080 on the host machine:

-

-```shell

-docker run -d --name "apisix-quickstart-keycloak" \

-# highlight-start

- -e 'KEYCLOAK_ADMIN=quickstart-admin' \

- -e 'KEYCLOAK_ADMIN_PASSWORD=quickstart-admin-pass' \

- -p 8080:8080 \

-# highlight-end

- quay.io/keycloak/keycloak:18.0.2 start-dev

-```

-

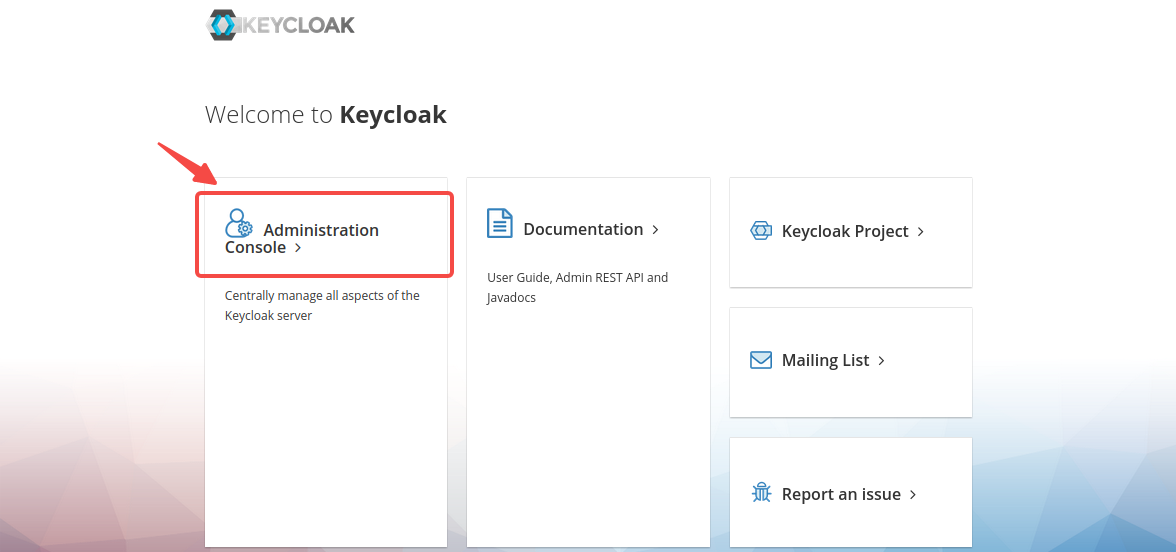

-Keycloak provides an easy-to-use web UI to help the administrator manage all resources, such as clients, roles, and users.

-

-Navigate to `http://localhost:8080` in a browser to access the Keycloak web page, then click __Administration Console__:

-

-

-

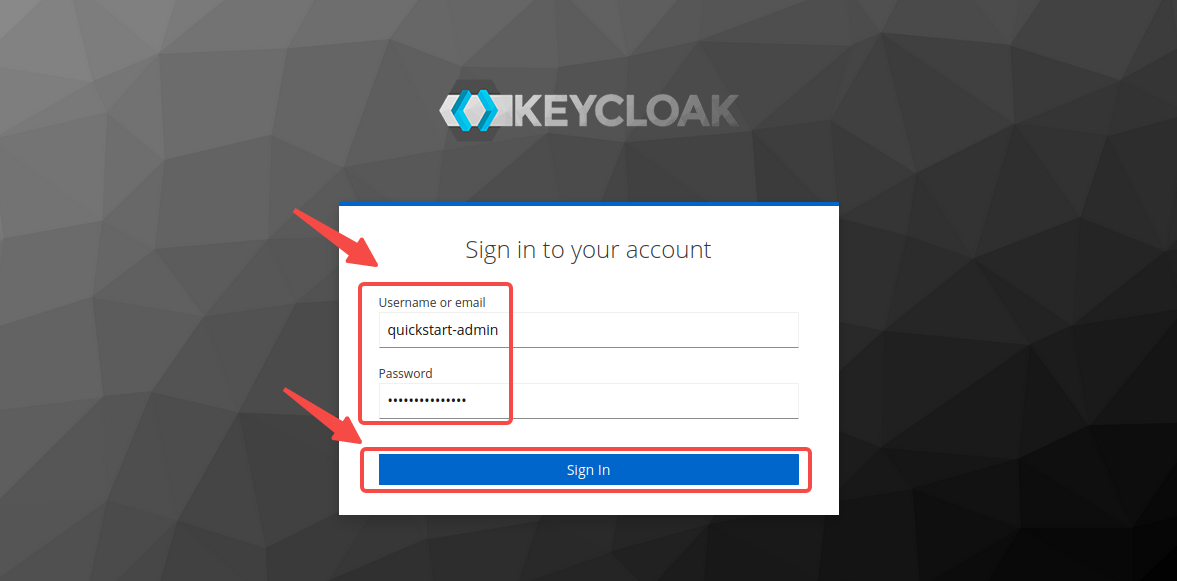

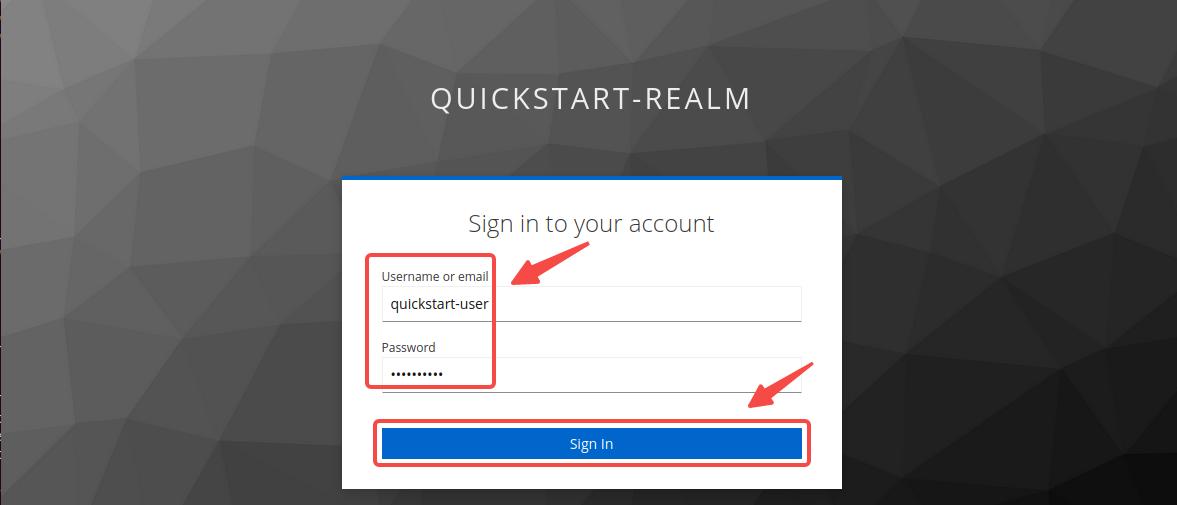

-Enter the administrator’s username `quickstart-admin` and password `quickstart-admin-pass` and sign in:

-

-

-

-You need to maintain the login status to configure Keycloak during the following steps.

-

-### Create a Realm

-

-_Realms_ in Keycloak are workspaces to manage resources such as users, credentials, and roles. The resources in different realms are isolated from each other. You need to create a realm named `quickstart-realm` for APISIX.

-

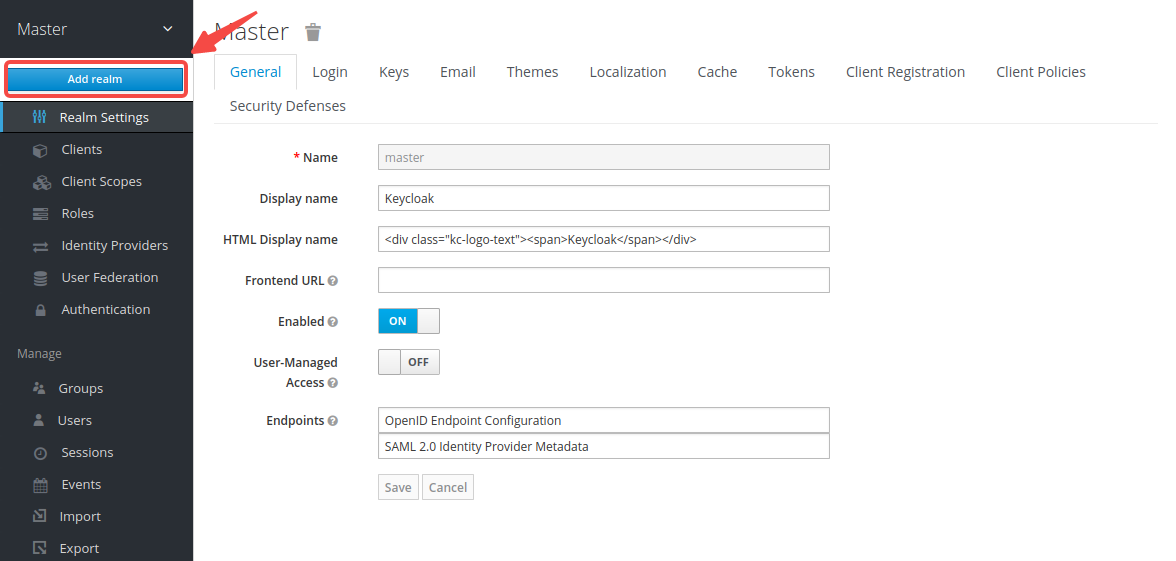

-From the left menu, select __Add realm__:

-

-

-

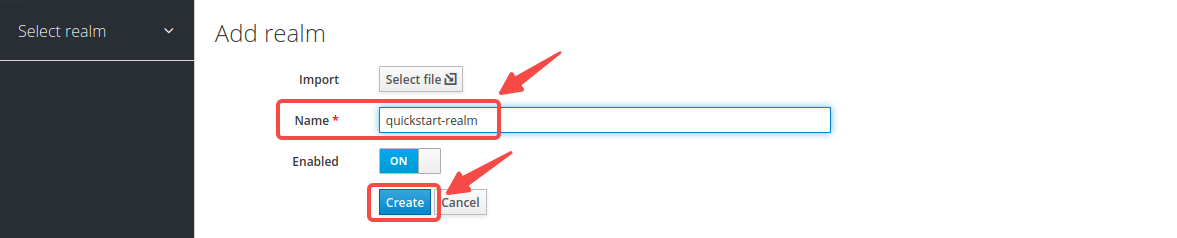

-Enter the realm name `quickstart-realm` and click __Create__ to create it:

-

-

-

-### Create a Client

-

-_Clients_ in Keycloak are entities that request Keycloak to authenticate a user. More often, clients are applications that want to use Keycloak to secure themselves and provide a single sign-on solution. APISIX is equivalent to a client that is responsible for initiating authentication requests to Keycloak, so you need to create its corresponding client named `apisix-quickstart-client`.

-

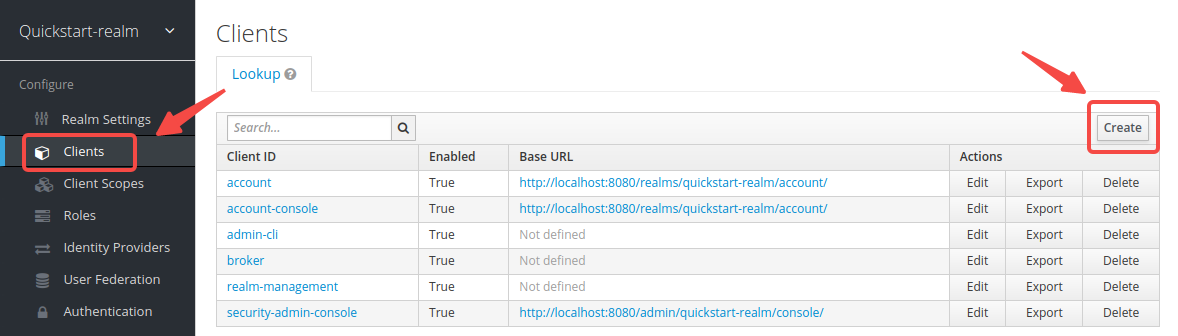

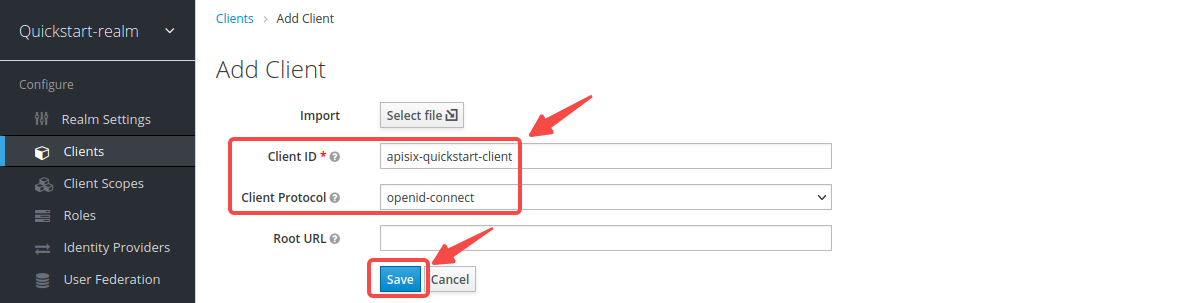

-Click __Clients__ > __Create__ to open the __Add Client__ page:

-

-

-

-Enter __Client ID__ as `apisix-quickstart-client`, then select __Client Protocol__ as `openid-connect` and __Save__:

-

-

-

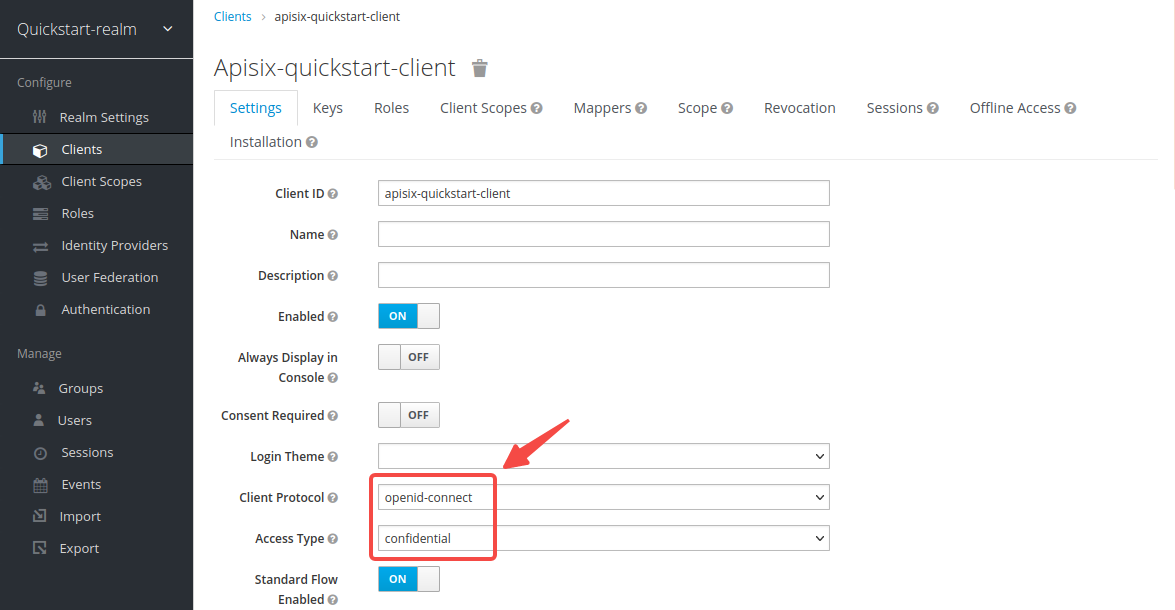

-The client `apisix-quickstart-client` is created. After you are redirected to the details page, select `confidential` as the __Access Type__:

-

-

-

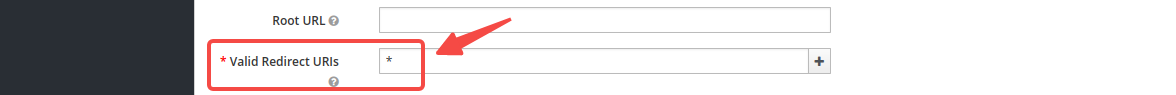

-When a user logs in successfully during SSO, Keycloak redirects the client, along with the state and code, to one of the addresses in __Valid Redirect URIs__. To simplify the setup, enter the wildcard `*` so that any URI is considered valid:

-

-

-

-Select __Save__ to apply custom configurations.

-

-### Create a User

-

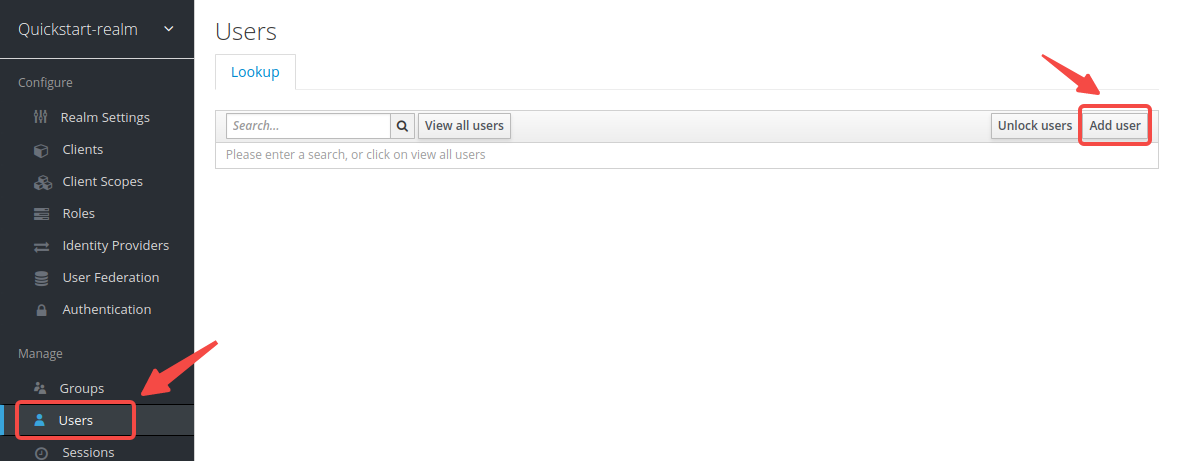

-Users in Keycloak are entities that are able to log into the system. They can have attributes associated with themselves, such as username, email, and address. You need to create a user for login authentication.

-

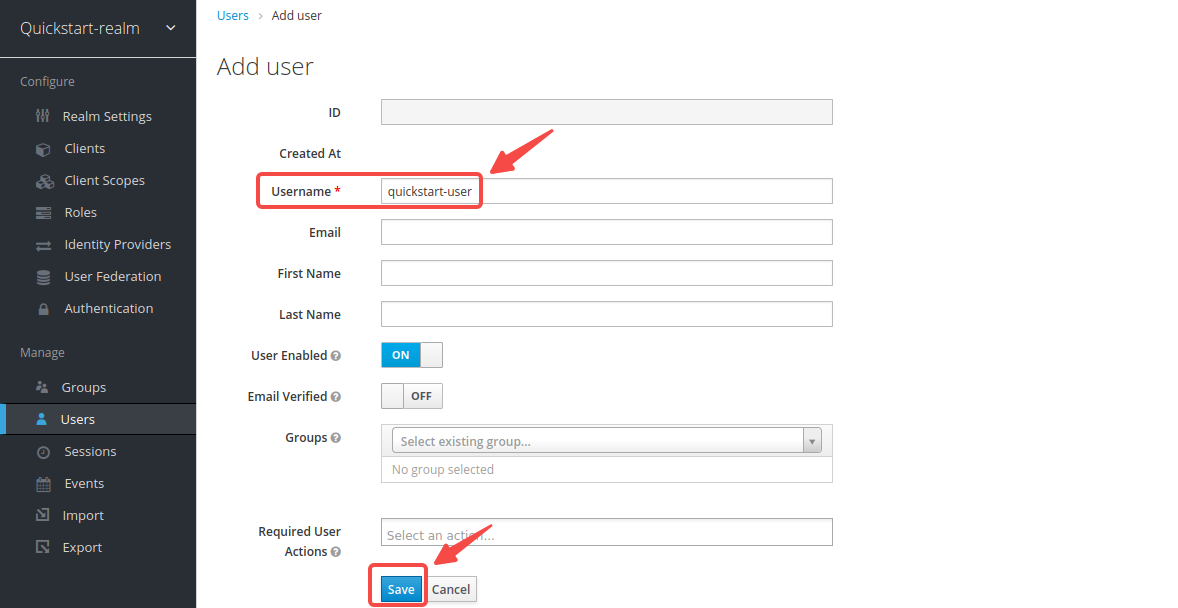

-Click __Users__ > __Add user__ to open the __Add user__ page:

-

-

-

-Enter the __Username__ as `quickstart-user` and select __Save__:

-

-

-

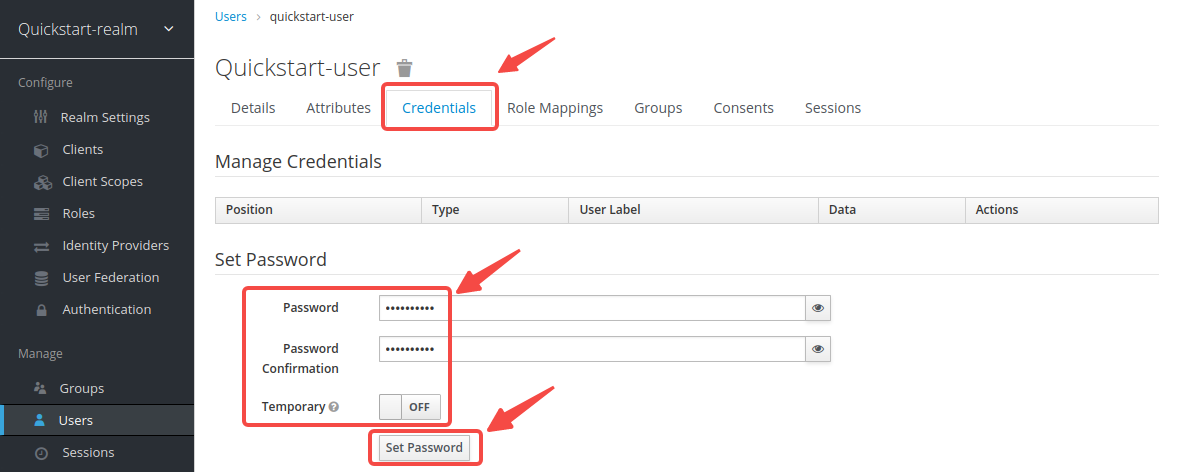

-Click on __Credentials__, then set the __Password__ as `quickstart-user-pass`. Switch __Temporary__ to `OFF` to turn off the restriction, so that you do not need to change the password the first time you log in:

-

-

-

-## Obtain the OIDC Configuration

-

-In this section, you will obtain the key OIDC configuration values from Keycloak and define them as shell variables. Later steps use these variables to configure OIDC through shell commands.

-

-:::info

-

-Open a separate terminal to follow the steps and define the related shell variables. Later steps can then use the defined variables directly.

-

-:::

-

-### Get Discovery Endpoint

-

-Click __Realm Settings__, then right click __OpenID Endpoints Configuration__ and copy the link.

-

-

-

-The link should be the same as the following:

-

-```text

-http://localhost:8080/realms/quickstart-realm/.well-known/openid-configuration

-```

-

-Both APISIX and your client (browser and terminal) need to access the discovery URI during the OIDC authentication process. Replace `localhost` with the actual host IP so that the APISIX instance in Docker can access the discovery URI successfully.

-

-Define a variable named `KEYCLOAK_IP` to store the real machine IP, then define a variable named `OIDC_DISCOVERY` to store the URI of discovery:

-

-```shell

-KEYCLOAK_IP=192.168.42.145 # Replace this value with your ip

-OIDC_DISCOVERY=http://${KEYCLOAK_IP}:8080/realms/quickstart-realm/.well-known/openid-configuration

-```

-

-### Get Client ID and Secret

-

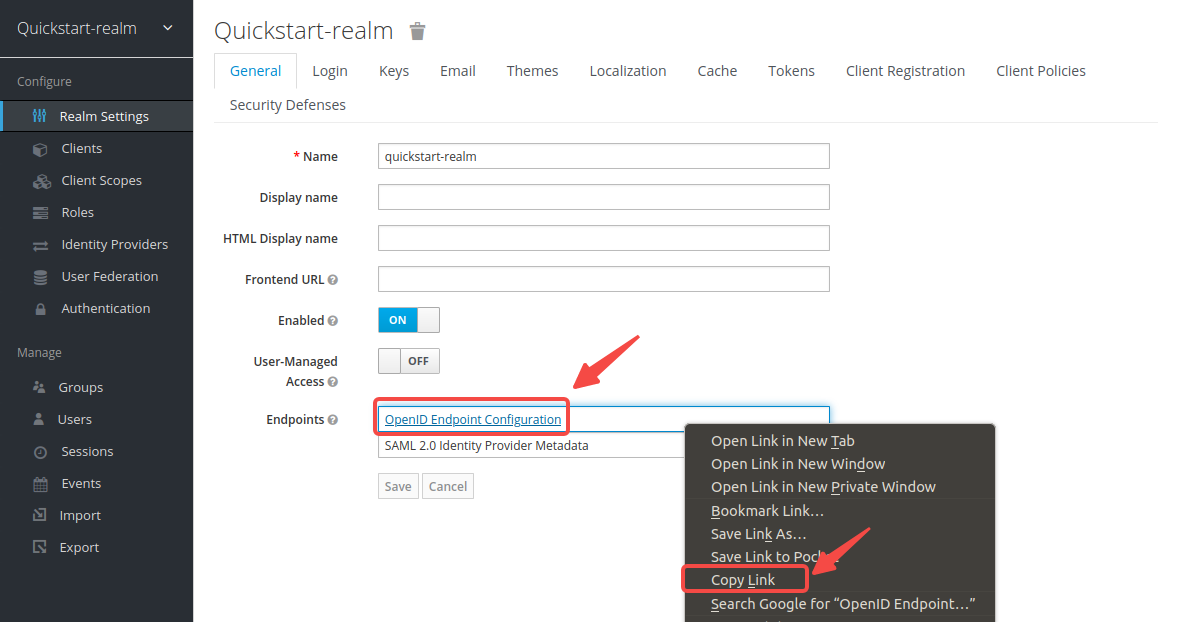

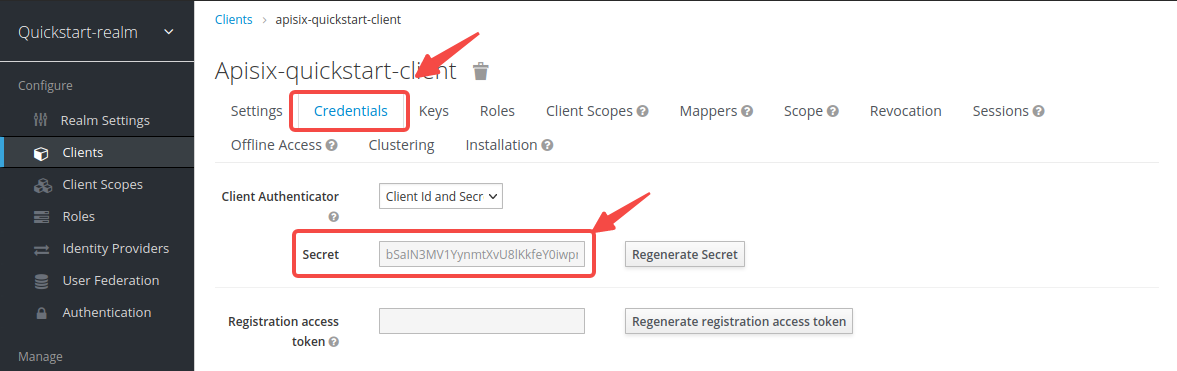

-Click __Clients__ > `apisix-quickstart-client` > __Credentials__, then copy the client secret from __Secret__:

-

-

-

-

-

-Define the variables `OIDC_CLIENT_ID` and `OIDC_CLIENT_SECRET` to store client id and secret, respectively. You need to replace the secret with yours:

-

-```shell

-OIDC_CLIENT_ID=apisix-quickstart-client

-OIDC_CLIENT_SECRET=bSaIN3MV1YynmtXvU8lKkfeY0iwpr9cH # Replace this value with yours

-```

-

-## Authentication With User Credentials

-

-In this section, you will create a route with OIDC that forwards client requests to [httpbin.org](http://httpbin.org/), a public HTTP request and response service.

-

-The route `/anything/{anything}` of `httpbin.org` returns everything passed in the request, such as methods, arguments, and headers, in JSON format.

-

-### Enable OIDC Plugin

-

-Create a route with id `auth-with-oidc` and enable the plugin `openid-connect`, which forwards all requests sent to `/anything/*` to the upstream `httpbin.org`:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes" -X PUT -d '

-{

- "id": "auth-with-oidc",

- "uri":"/anything/*",

- "plugins": {

- "openid-connect": {

-# highlight-start

- // Annotate 1

- "client_id": "'"$OIDC_CLIENT_ID"'",

- // Annotate 2

- "client_secret": "'"$OIDC_CLIENT_SECRET"'",

- // Annotate 3

- "discovery": "'"$OIDC_DISCOVERY"'",

-# highlight-end

- "scope": "openid profile",

-# highlight-start

- // Annotate 4

- "redirect_uri": "http://localhost:9080/anything/callback"

-# highlight-end

- }

- },

- "upstream":{

- "type":"roundrobin",

- "nodes":{

- "httpbin.org:80":1

- }

- }

-}'

-```

-

-❶ `client_id`: OAuth client ID.

-

-❷ `client_secret`: OAuth client secret.

-

-❸ `discovery`: Discovery endpoint URL of the identity server.

-

-❹ `redirect_uri`: URI to which the identity provider redirects back.

-

-After users sign in, the identity provider redirects requests to the pre-configured URI in `redirect_uri` for token exchange. Here you can use `http://localhost:9080/anything/callback`, a valid URI matched by this route. More details can be found in the [Keycloak documentation on securing apps](https://www.keycloak.org/docs/latest/securing_apps/#redirect-uris).

-

-### Test With Correct Credentials

-

-Navigate to `http://127.0.0.1:9080/anything/test` in a browser. The request will be redirected to a login page:

-

-

-

-Sign in with the correct username `quickstart-user` and password `quickstart-user-pass`. If the authentication is successful, APISIX forwards the request to the upstream `httpbin.org`. A valid response similar to the following verifies that the OIDC plugin works:

-

-```json

-{

- "args": {},

- "data": "",

- "files": {},

- "form": {},

- "headers": {

- "Accept": "text/html..."

- ...

- },

- "json": null,

- "method": "GET",

- "origin": "127.0.0.1, 59.71.244.81",

- "url": "http://127.0.0.1/anything/test"

-}

-```

-

-### Test With Wrong Credentials

-

-Sign in with a wrong password:

-

-

-

-A failed authentication verifies that the OIDC plugin works and rejects requests with wrong credentials.

-

-## Authentication With Access Token

-

-In this section, you will create a route with OIDC similar to the previous section [Authentication With User Credentials](#authentication-with-user-credentials), but authenticate with an access token in the request headers instead of asking the Keycloak server to authenticate the user.

-

-### Enable OIDC Plugin

-

-Create a route with id `auth-with-oidc` and enable the plugin `openid-connect`, this time adding the parameter `bearer_only` with a value of `true`:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes" -X PUT -d '

-{

- "id": "auth-with-oidc",

- "uri":"/anything/*",

- "plugins": {

- "openid-connect": {

-# highlight-start

- // Annotate 1

- "client_id": "'"$OIDC_CLIENT_ID"'",

- // Annotate 2

- "client_secret": "'"$OIDC_CLIENT_SECRET"'",

- // Annotate 3

- "discovery": "'"$OIDC_DISCOVERY"'",

-# highlight-end

- "scope": "openid profile",

-# highlight-start

- // Annotate 4

- "bearer_only": true,

- // Annotate 5

- "redirect_uri": "http://127.0.0.1:9080/anything/callback"

-# highlight-end

- }

- },

- "upstream":{

- "type":"roundrobin",

- "nodes":{

- "httpbin.org:80":1

- }

- }

-}'

-```

-

-❶ `client_id`: OAuth client ID.

-

-❷ `client_secret`: OAuth client secret.

-

-❸ `discovery`: Discovery endpoint URL of the identity server.

-

-❹ `bearer_only`: If its value is `true`, APISIX only checks whether the authorization header in the request carries a valid bearer token.

-

-❺ `redirect_uri`: URI to which the identity provider redirects back.

-

-### Test With Valid Access Token

-

-Call the Keycloak [Token endpoint](https://www.keycloak.org/docs/latest/securing_apps/#token-endpoint) to obtain the access token with parameters client ID, client secret, username, and password:

-

-```shell

-OIDC_USER=quickstart-user

-OIDC_PASSWORD=quickstart-user-pass

-curl -i "http://$KEYCLOAK_IP:8080/realms/quickstart-realm/protocol/openid-connect/token" -X POST \

- -d 'grant_type=password' \

- -d "client_id=$OIDC_CLIENT_ID&client_secret=$OIDC_CLIENT_SECRET" \

- -d "username=$OIDC_USER&password=$OIDC_PASSWORD"

-```

-

-The expected response is similar to the following:

-

-```text

-{"access_token":"eyJhbGciOiJSUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICJ6U3FFaXN6VlpuYi1sRWMzZkp0UHNpU1ZZcGs4RGN3dXI1Mkx5V05aQTR3In0.eyJleHAiOjE2ODAxNjA5NjgsImlhdCI6MTY4MDE2MDY2OCwianRpIjoiMzQ5MTc4YjQtYmExZC00ZWZjLWFlYTUtZGY2MzJiMDJhNWY5IiwiaXNzIjoiaHR0cDovLzE5Mi4xNjguNDIuMTQ1OjgwODAvcmVhbG1zL3F1aWNrc3RhcnQtcmVhbG0iLCJhdWQiOiJhY2NvdW50Iiwic3ViIjoiMTg4MTVjM2EtNmQwNy00YTY2LWJjZjItYWQ5NjdmMmIwMTFmIiwidHlwIjoiQmVhcmVyIiwiYXpwIjoiYXBpc2l4LXF1aWNrc3RhcnQtY2xpZW50Iiwic2Vzc2lvbl9zdGF0ZSI6ImIxNmIyNjJlLTEwNTYtNDUxNS1hNDU1LWYyNWUwNzdjY2I3NiIsImFjciI6IjEiLCJyZWFsbV9hY2Nlc3MiOnsicm9sZXMiOlsiZGVmYXVsdC1yb2xlcy1xdWlja3N0YXJ0LXJlYWxtIiwib2ZmbGluZV9hY2Nlc3MiLCJ1bWFfYXV0aG9yaXphdGlvbiJdfSwicmVzb3VyY2VfYWNjZXNzIjp7ImFjY291bnQiOnsicm9sZXMiOlsibWFuYWdlLWFjY291bnQiLCJtYW5hZ2UtYWNjb3VudC1saW5rcyIsInZpZXctcHJvZmlsZSJdfX0sInNjb3BlIjoicHJvZmlsZSBlbWFpbCIsInNpZCI6ImIxNmIyNjJlLTEwNTYtNDUxNS1hNDU1LWYyNWUwNzdjY2I3NiIsImVtYWlsX3ZlcmlmaWVkIjpmYWxzZSwicHJlZmVycmVkX3VzZXJuYW1lIjoicXVpY2tzdGFydC11c2VyIn0.uD_7zfZv5182aLXu9-YBzBDK0nr2mE4FWb_4saTog2JTqFTPZZa99Gm8AIDJx2ZUcZ_ElkATqNUZ4OpWmL2Se5NecMw3slJReewjD6xgpZ3-WvQuTGpoHdW5wN9-Rjy8ungilrnAsnDA3tzctsxm2w6i9KISxvZrzn5Rbk-GN6fxH01VC5eekkPUQJcJgwuJiEiu70SjGnm21xDN4VGkNRC6jrURoclv3j6AeOqDDIV95kA_MTfBswDFMCr2PQlj5U0RTndZqgSoxwFklpjGV09Azp_jnU7L32_Sq-8coZd0nj5mSdbkJLJ8ZDQDV_PP3HjCP7EHdy4P6TyZ7oGvjw","expires_in":300,"refresh_expires_in":1800,"refresh_token":"eyJhbGciOiJIUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICI0YjFiNTQ3Yi0zZmZjLTQ5YzQtYjE2Ni03YjdhNzIxMjk1ODcifQ.eyJleHAiOjE2ODAxNjI0NjgsImlhdCI6MTY4MDE2MDY2OCwianRpIjoiYzRjNjNlMTEtZTdlZS00ZmEzLWJlNGYtNDMyZWQ4ZmY5OTQwIiwiaXNzIjoiaHR0cDovLzE5Mi4xNjguNDIuMTQ1OjgwODAvcmVhbG1zL3F1aWNrc3RhcnQtcmVhbG0iLCJhdWQiOiJodHRwOi8vMTkyLjE2OC40Mi4xNDU6ODA4MC9yZWFsbXMvcXVpY2tzdGFydC1yZWFsbSIsInN1YiI6IjE4ODE1YzNhLTZkMDctNGE2Ni1iY2YyLWFkOTY3ZjJiMDExZiIsInR5cCI6IlJlZnJlc2giLCJhenAiOiJhcGlzaXgtcXVpY2tzdGFydC1jbGllbnQiLCJzZXNzaW9uX3N0YXRlIjoiYjE2YjI2MmUtMTA1Ni00NTE1LWE0NTUtZjI1ZTA3N2NjYjc2Iiwic2NvcGUiOiJwcm9maWxlIGVtYWlsIiwic2lkIjoiYjE2YjI2MmUtMTA1Ni00NTE1LWE0NTUtZjI1ZTA3N2NjYjc2In0.8xYP4bhDg1U9B5cTaEVD7B4oxNp8wwAYEynUne_Jm78","token_type":"Bearer","not-before-policy":0,"session_state":"b16b262e-1056-4515-a455-f25e077ccb76","scope":"profile email"}

-```

-

-Define the variable `OIDC_ACCESS_TOKEN` to store the token:

-

-```shell

-# Replace the token with yours

-OIDC_ACCESS_TOKEN="eyJhbGciOiJSUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICJ6U3FFaXN6VlpuYi1sRWMzZkp0UHNpU1ZZcGs4RGN3dXI1Mkx5V05aQTR3In0.eyJleHAiOjE2ODAxNjA5NjgsImlhdCI6MTY4MDE2MDY2OCwianRpIjoiMzQ5MTc4YjQtYmExZC00ZWZjLWFlYTUtZGY2MzJiMDJhNWY5IiwiaXNzIjoiaHR0cDovLzE5Mi4xNjguNDIuMTQ1OjgwODAvcmVhbG1zL3F1aWNrc3RhcnQtcmVhbG0iLCJhdWQiOiJhY2NvdW50Iiwic3ViIjoiMTg4MTVjM2EtNmQwNy00YTY2LWJjZjItYWQ5NjdmMmIwMTFmIiwidHlwIjoiQmVhcmVyIiwiYXpwIjoiYXBpc2l4LXF1aWNrc3RhcnQtY2xpZW50Iiwic2Vzc2lvbl9zdGF0ZSI6ImIxNmIyNjJlLTEwNTYtNDUxNS1hNDU1LWYyNWUwNzdjY2I3NiIsImFjciI6IjEiLCJyZWFsbV9hY2Nlc3MiOnsicm9sZXMiOlsiZGVmYXVsdC1yb2xlcy1xdWlja3N0YXJ0LXJlYWxtIiwib2ZmbGluZV9hY2Nlc3MiLCJ1bWFfYXV0aG9yaXphdGlvbiJdfSwicmVzb3VyY2VfYWNjZXNzIjp7ImFjY291bnQiOnsicm9sZXMiOlsibWFuYWdlLWFjY291bnQiLCJtYW5hZ2UtYWNjb3VudC1saW5rcyIsInZpZXctcHJvZmlsZSJdfX0sInNjb3BlIjoicHJvZmlsZSBlbWFpbCIsInNpZCI6ImIxNmIyNjJlLTEwNTYtNDUxNS1hNDU1LWYyNWUwNzdjY2I3NiIsImVtYWlsX3ZlcmlmaWVkIjpmYWxzZSwicHJlZmVycmVkX3VzZXJuYW1lIjoicXVpY2tzdGFydC11c2VyIn0.uD_7zfZv5182aLXu9-YBzBDK0nr2mE4FWb_4saTog2JTqFTPZZa99Gm8AIDJx2ZUcZ_ElkATqNUZ4OpWmL2Se5NecMw3slJReewjD6xgpZ3-WvQuTGpoHdW5wN9-Rjy8ungilrnAsnDA3tzctsxm2w6i9KISxvZrzn5Rbk-GN6fxH01VC5eekkPUQJcJgwuJiEiu70SjGnm21xDN4VGkNRC6jrURoclv3j6AeOqDDIV95kA_MTfBswDFMCr2PQlj5U0RTndZqgSoxwFklpjGV09Azp_jnU7L32_Sq-8coZd0nj5mSdbkJLJ8ZDQDV_PP3HjCP7EHdy4P6TyZ7oGvjw"

-```
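Copying a long token by hand is error-prone. As a minimal sketch, assuming the token endpoint returns single-line JSON like the response shown above, the `access_token` field could be pulled out with `sed`. The function name `extract_access_token` is hypothetical and is not part of APISIX or Keycloak:

```shell
# Hypothetical helper: read a token-endpoint JSON response on stdin and
# print the value of its "access_token" field.
extract_access_token() {
  # Match the quoted key, capture everything up to the next double quote.
  sed -n 's/.*"access_token":"\([^"]*\)".*/\1/p'
}

# Example with a shortened, made-up response body.
echo '{"access_token":"abc.def.ghi","expires_in":300}' | extract_access_token
```

With such a helper, the token request could be sent with `curl -s` instead of `curl -i` (so that only the JSON body is printed), piped into `extract_access_token`, and the result assigned to `OIDC_ACCESS_TOKEN`.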

-

-Send a request to the route `/anything/test` with the `Authorization` header:

-

-```shell

-curl -i "http://127.0.0.1:9080/anything/test" -H "Authorization: Bearer $OIDC_ACCESS_TOKEN"

-```

-

-An `HTTP/1.1 200 OK` response verifies that the OIDC plugin works and accepts requests with a valid access token.

-

-### Test With Invalid Access Token

-

-Send a request to `http://127.0.0.1:9080/anything/test` with an invalid access token:

-

-```shell

-curl -i "http://127.0.0.1:9080/anything/test" -H "Authorization: Bearer invalid-access-token"

-```

-

-An `HTTP/1.1 401 Unauthorized` response verifies that the OIDC plugin works and rejects requests with an invalid access token.

-

-### Test Without Access Token

-

-Send a request to `http://127.0.0.1:9080/anything/test` without an access token:

-

-```shell

-curl -i "http://127.0.0.1:9080/anything/test"

-```

-

-An `HTTP/1.1 401 Unauthorized` response verifies that the OIDC plugin works and rejects requests without an access token.
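The status checks in this section can also be scripted rather than read off the `curl -i` output. The following is a sketch only: `check_status` is a hypothetical helper name, and it relies on the standard curl options `-s`, `-o`, and `-w '%{http_code}'` to print just the status code.

```shell
# Hypothetical helper: send a request with curl and compare the HTTP status
# code with the expected one.
# Usage: check_status EXPECTED_CODE URL [extra curl options...]
check_status() {
  expected="$1"
  shift
  # -s silences progress output, -o /dev/null discards the response body,
  # -w '%{http_code}' prints only the numeric status code.
  actual="$(curl -s -o /dev/null -w '%{http_code}' "$@")"
  if [ "$actual" = "$expected" ]; then
    echo "PASS: got $actual"
  else
    echo "FAIL: expected $expected, got $actual"
    return 1
  fi
}
```

For example, `check_status 401 "http://127.0.0.1:9080/anything/test"` would cover the request without a token, and adding `-H "Authorization: Bearer $OIDC_ACCESS_TOKEN"` with an expected code of `200` would cover the valid-token case.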

-

-## Next Steps

-

-APISIX supports more OIDC identity providers, such as Okta, Auth0, and Azure AD (coming soon).

-

-APISIX also supports other authentication approaches, such as basic authentication, JWT, and key authentication (coming soon).

diff --git a/enterprise_versioned_docs/version-3.2.2/how-to-guide/observability/log-with-clickhouse.md b/enterprise_versioned_docs/version-3.2.2/how-to-guide/observability/log-with-clickhouse.md

deleted file mode 100644

index abc97e4e..00000000

--- a/enterprise_versioned_docs/version-3.2.2/how-to-guide/observability/log-with-clickhouse.md

+++ /dev/null

@@ -1,240 +0,0 @@

----

-title: Log with ClickHouse

-slug: /how-to-guide/observability/log-with-clickhouse

----

-

-import Tabs from '@theme/Tabs';

-import TabItem from '@theme/TabItem';

-

-APISIX supports collecting route access information and recording it as logs, such as host, client IP, and request timestamp. This key information will be of great help in troubleshooting related problems.

-

-[ClickHouse](https://clickhouse.com/) is an open-source column-oriented database management system (DBMS) for online analytical processing (OLAP). It allows users to generate analytical reports such as log analytics using SQL queries in real-time.

-

-This guide will show you how to enable the `clickhouse-logger` plugin to record the APISIX logs into ClickHouse databases.

-

-## Prerequisite(s)

-

-* Install [Docker](https://docs.docker.com/get-docker/).

-* Install [cURL](https://curl.se/) to send requests to the services for validation.

-* Follow the [Getting Started tutorial](./../../getting-started/) to start a new APISIX instance in Docker.

-

-## Configure ClickHouse

-

-Start a ClickHouse instance named `quickstart-clickhouse-server` with a default database `quickstart_db`, a default user `quickstart-user` and password `quickstart-pass` in Docker:

-

-```shell

-docker run -d \

- --name quickstart-clickhouse-server \

- --network=apisix-quickstart-net \

-# highlight-start

- -e CLICKHOUSE_DB=quickstart_db \

- -e CLICKHOUSE_USER=quickstart-user \

- -e CLICKHOUSE_PASSWORD=quickstart-pass \

-# highlight-end

- -e CLICKHOUSE_DEFAULT_ACCESS_MANAGEMENT=1 \

- --ulimit nofile=262144:262144 \

- clickhouse/clickhouse-server

-```

-

-Connect to the ClickHouse instance using the command line tool `clickhouse-client` in Docker:

-

-```shell

-docker exec -it quickstart-clickhouse-server clickhouse-client

-```

-

-Create a table `test` in database `quickstart_db` with fields `host`, `client_ip`, `route_id`, `@timestamp` of `String` type, or adjust the command accordingly based on your needs:

-

-```sql

-CREATE TABLE quickstart_db.test (

- `host` String,

- `client_ip` String,

- `route_id` String,

- `@timestamp` String,

- PRIMARY KEY(`@timestamp`)

-) ENGINE = MergeTree()

-```

-

-If successful, you should see `Ok` in the output.

-

-Enter `exit` to exit from the command line interface in Docker.

-

-## Enable `clickhouse-logger` Plugin

-

-Create a route:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes" -X PUT -d '

-{

- "id": "getting-started-ip",

- "uri": "/ip",

- "upstream": {

- "type": "roundrobin",

- "nodes": {

- "httpbin.org:80": 1

- }

- }

-}'

-```

-

-Enable the `clickhouse-logger` plugin globally for all requests, or on a specific route:

-

-

-

-

-Enable the `clickhouse-logger` plugin on all routes:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/global_rules" -X PUT -d '

-{

- "id": "clickhouse",

- "plugins": {

- "clickhouse-logger": {

-# highlight-start

-// Annotate 1

- "log_format": {

-# highlight-end

- "host": "$host",

- "@timestamp": "$time_iso8601",

- "client_ip": "$remote_addr"

- },

-# highlight-start

-// Annotate 2

- "user": "quickstart-user",

- "password": "quickstart-pass",

- "database": "quickstart_db",

- "logtable": "test",

- "endpoint_addrs": ["http://quickstart-clickhouse-server:8123"]

-# highlight-end

- }

- }

-}'

-```

-

-➊ Specify all fields corresponding to the ClickHouse table in the log format

-

-➋ ClickHouse server information

-

-An `HTTP/1.1 201 Created` response verifies that the `clickhouse-logger` plugin is enabled successfully.

-

-

-

-

-Enable the `clickhouse-logger` plugin on a specific route:

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes/getting-started-ip" -X PATCH -d '

-{

- "plugins": {

- "clickhouse-logger": {

-# highlight-start

-// Annotate 1

- "log_format": {

-# highlight-end

- "host": "$host",

- "@timestamp": "$time_iso8601",

- "client_ip": "$remote_addr"

- },

-# highlight-start

-// Annotate 2

- "user": "quickstart-user",

- "password": "quickstart-pass",

- "database": "quickstart_db",

- "logtable": "test",

- "endpoint_addrs": ["http://quickstart-clickhouse-server:8123"]

-# highlight-end

- }

- }

-}'

-```

-

-➊ Specify all fields corresponding to the ClickHouse table in the log format

-

-➋ ClickHouse server information

-

-An `HTTP/1.1 200 OK` response verifies that the `clickhouse-logger` plugin is enabled successfully.

-

-

-

-

-## Submit Logs in Batches

-

-The `clickhouse-logger` plugin supports using a batch processor to aggregate and process logs in batches. This avoids submitting log entries to ClickHouse too frequently, which would slow down operations.

-

-By default, the batch processor submits data every 5 seconds or when a batch reaches 1000 log entries. You can adjust the submission interval `inactive_timeout` and the maximum batch size `batch_max_size` for the plugin. For example, this is how you can set `inactive_timeout` to 10 seconds and `batch_max_size` to 2000 entries:

-

-

-

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/global_rules/clickhouse" -X PATCH -d '

-{

- "plugins": {

- "clickhouse-logger": {

- "batch_max_size": 2000,

- "inactive_timeout": 10

- }

- }

-}'

-```

-

-

-

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/routes/getting-started-ip" -X PATCH -d '

-{

- "plugins": {

- "clickhouse-logger": {

- "batch_max_size": 2000,

- "inactive_timeout": 10

- }

- }

-}'

-```

-

-

-

-

-## Verify Logging

-

-Send a request to the route to generate an access log entry:

-

-```shell

-curl -i "http://127.0.0.1:9080/ip"

-```

-

-Connect to the ClickHouse instance using the command line tool `clickhouse-client` in Docker:

-

-```shell

-docker exec -it quickstart-clickhouse-server clickhouse-client

-```

-

-Query all records in table `quickstart_db.test`:

-

-```sql

-SELECT * from quickstart_db.test

-```

-

-You should see an access record similar to the following, which verifies that the `clickhouse-logger` plugin works as intended:

-

-```text

-┌─host──────┬─client_ip──┬─route_id───────────┬─@timestamp────────────────┐

-│ 127.0.0.1 │ 172.18.0.1 │ getting-started-ip │ 2023-06-07T15:28:24+00:00 │

-└───────────┴────────────┴────────────────────┴───────────────────────────┘

-```

-

-## Next Steps

-

-See `clickhouse-logger` plugin reference to learn more about the plugin configuration options (coming soon).

-


diff --git a/enterprise_versioned_docs/version-3.2.2/how-to-guide/observability/monitor-apisix-with-prometheus.md b/enterprise_versioned_docs/version-3.2.2/how-to-guide/observability/monitor-apisix-with-prometheus.md

deleted file mode 100644

index a6c70117..00000000

--- a/enterprise_versioned_docs/version-3.2.2/how-to-guide/observability/monitor-apisix-with-prometheus.md

+++ /dev/null

@@ -1,129 +0,0 @@

----

-title: Monitor APISIX with Prometheus

-slug: /how-to-guide/observability/monitor-apisix-with-prometheus

----

-

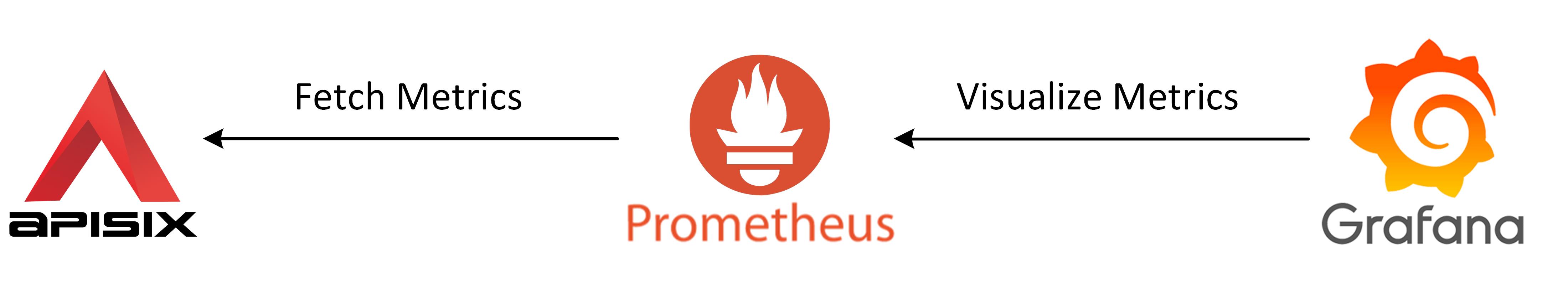

-Prometheus is a popular systems monitoring and alerting toolkit. It collects and stores multi-dimensional time series data like metrics with key-value paired labels.

-

-APISIX offers the capability to expose a significant number of metrics to Prometheus [with low latency](https://api7.ai/blog/1s-to-10ms-reducing-prometheus-delay-in-api-gateway), allowing for continuous monitoring and diagnostics.

-

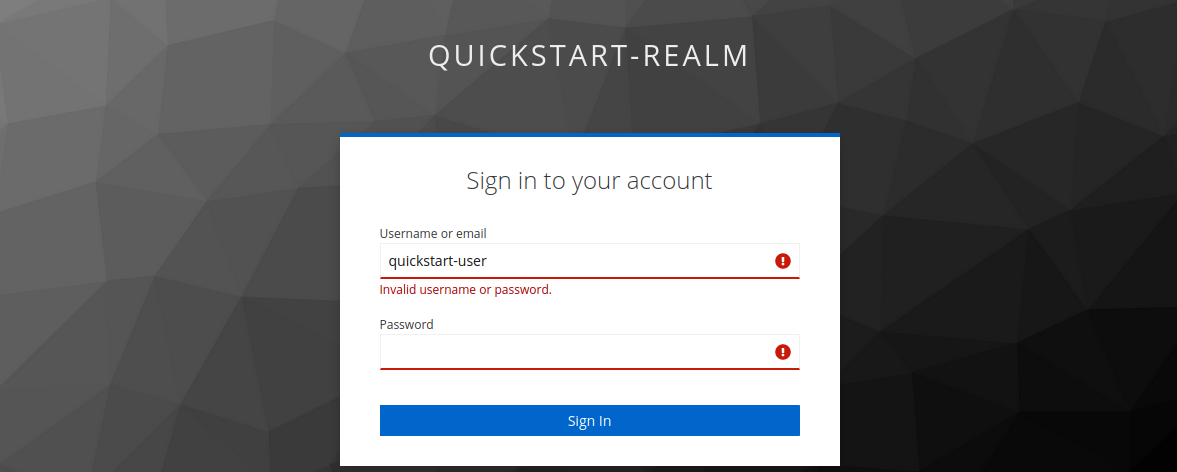

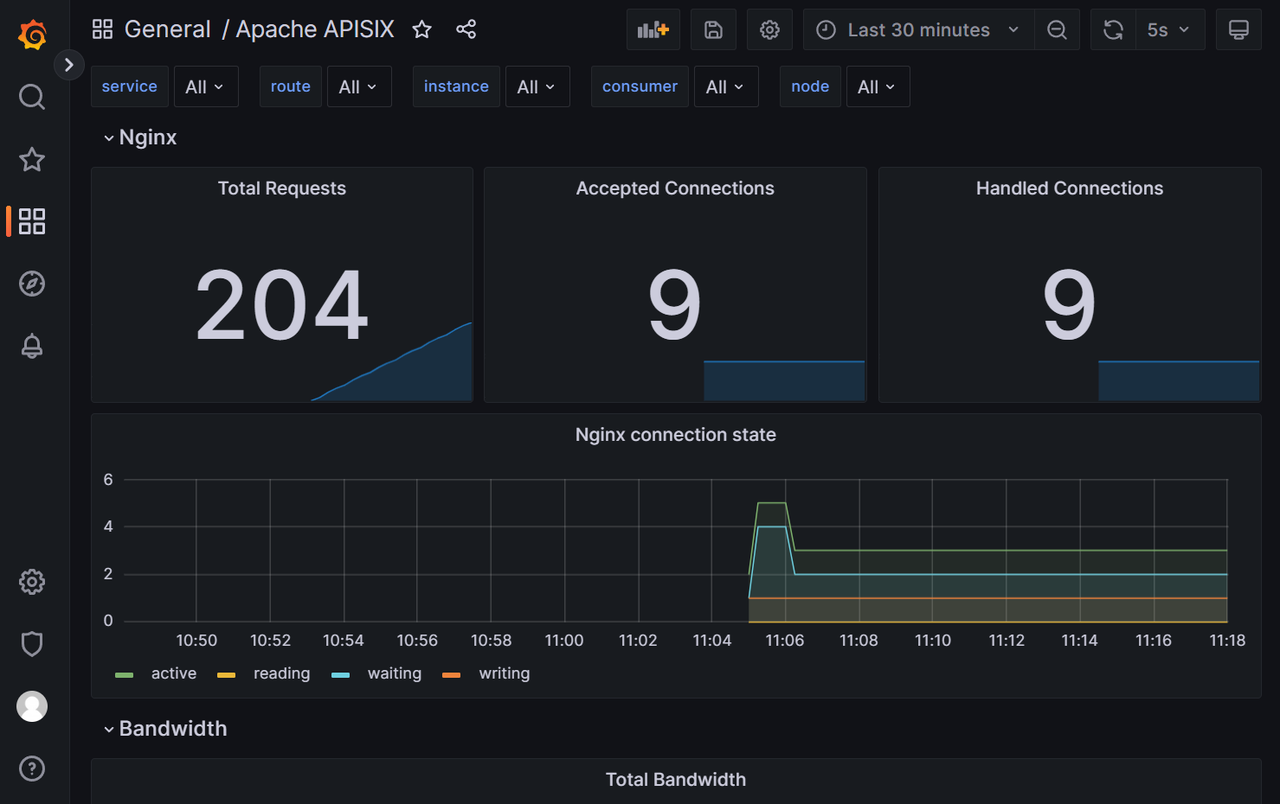

-This guide will show you how to enable the `prometheus` plugin to integrate with Prometheus and Grafana services, where APISIX HTTP metrics are collected and visualized.

-

-

-

-

-

-

-## Prerequisite(s)

-

-* Install [Docker](https://docs.docker.com/get-docker/).

-* Install [cURL](https://curl.se/) to send requests to the services for validation.

-* Follow the [Getting Started tutorial](./../../getting-started/) to start a new APISIX instance in Docker.

-

-## Enable Prometheus Plugin

-

-Create a global rule to enable the `prometheus` plugin on all routes by adding `"prometheus": {}` in the plugins option.

-

-```shell

-curl -i "http://127.0.0.1:9180/apisix/admin/global_rules" -X PUT -d '{

- "id": "rule-for-metrics",

- "plugins": {

- "prometheus":{}

- }

-}'

-```

-

-APISIX gathers internal runtime metrics and exposes them through port `9091` and path `/apisix/prometheus/metrics` by default. Both the port and the path can be customized in APISIX.

-

-Send a request to the route `/apisix/prometheus/metrics` to fetch metrics from APISIX:

-

-```shell

-docker exec apisix-quickstart curl -sL "http://apisix-quickstart:9091/apisix/prometheus/metrics"

-```

-

-The returned metrics are similar to the following and cover all routes, such as the existing route `/ip` here:

-

-```text

-# HELP apisix_etcd_modify_indexes Etcd modify index for APISIX keys

-# TYPE apisix_etcd_modify_indexes gauge

-apisix_etcd_modify_indexes{key="consumers"} 0

-apisix_etcd_modify_indexes{key="global_rules"} 0

-apisix_etcd_modify_indexes{key="max_modify_index"} 16

-apisix_etcd_modify_indexes{key="prev_index"} 15

-apisix_etcd_modify_indexes{key="protos"} 0

-apisix_etcd_modify_indexes{key="routes"} 16

-apisix_etcd_modify_indexes{key="services"} 0

-apisix_etcd_modify_indexes{key="ssls"} 0

-apisix_etcd_modify_indexes{key="stream_routes"} 0

-apisix_etcd_modify_indexes{key="upstreams"} 0

-apisix_etcd_modify_indexes{key="x_etcd_index"} 16

-# HELP apisix_etcd_reachable Config server etcd reachable from APISIX, 0 is unreachable

-# TYPE apisix_etcd_reachable gauge

-apisix_etcd_reachable 1

-...

-# HELP apisix_http_status HTTP status codes per service in APISIX

-# TYPE apisix_http_status counter

-apisix_http_status{code="200",route="ip",matched_uri="/ip",matched_host="",service="",consumer="",node="52.20.124.211"} 1

-...

-```
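To get a quick summary from this text output without Prometheus, the `apisix_http_status` samples can be totalled per status code. This is a sketch under the assumption that the metric lines look like the sample above (a `code="..."` label and the value as the last field); `sum_http_status_by_code` is a hypothetical helper name.

```shell
# Hypothetical helper: read Prometheus text-format metrics on stdin and print
# the total of all apisix_http_status samples per HTTP status code.
sum_http_status_by_code() {
  awk '
    # Only look at sample lines of the apisix_http_status metric
    # (HELP and TYPE lines start with "#" and are skipped).
    index($0, "apisix_http_status{") == 1 {
      # Locate the code label and keep only its digits.
      if (match($0, /code="[0-9]+"/)) {
        code = substr($0, RSTART + 6, RLENGTH - 7)
        total[code] += $NF   # the sample value is the last field
      }
    }
    END { for (c in total) print c, total[c] }
  ' | sort
}
```

The output of the `docker exec ... curl` command above could be piped into this function to print one line per status code with its request count.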

-

-## Configure Prometheus

-

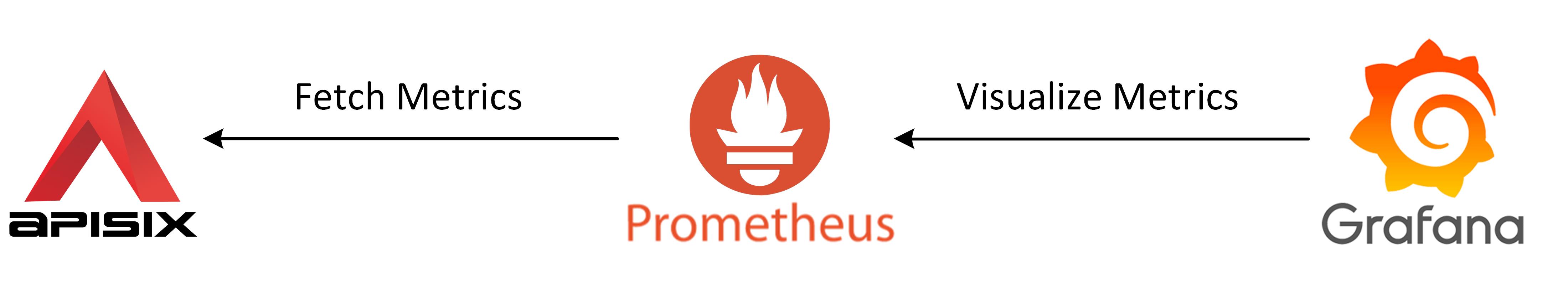

-Targets are monitored objects in Prometheus. You can configure the APISIX metric endpoint as a target in the Prometheus configuration file `prometheus.yml`.

-

-```shell

-echo 'scrape_configs:

-  - job_name: "apisix"

-    scrape_interval: 15s

-    metrics_path: "/apisix/prometheus/metrics"

-    static_configs:

-      - targets: ["apisix-quickstart:9091"]

-' > prometheus.yml

-```

-

-Start a Prometheus instance in Docker. The exposed port is mapped to `9092` on the host because `9090` is reserved for APISIX. The local configuration file `prometheus.yml` is mounted to the Prometheus container.

-

-```shell

-docker run -d --name apisix-quickstart-prometheus \

- -p 9092:9090 \

- --network=apisix-quickstart-net \

- -v $(pwd)/prometheus.yml:/etc/prometheus/prometheus.yml \

- prom/prometheus:latest

-```

-

-You can now check if the state is "UP" on the Prometheus webpage. Prometheus will collect metrics from APISIX by scraping its metric HTTP endpoint.

-

-

-

-## Configure Grafana

-

-Grafana can visualize metrics stored in Prometheus. Start a Grafana instance on port `3000` in Docker.

-

-```shell

-docker run -d --name=apisix-quickstart-grafana \

- -p 3000:3000 \

- --network=apisix-quickstart-net \

- grafana/grafana-oss

-```

-

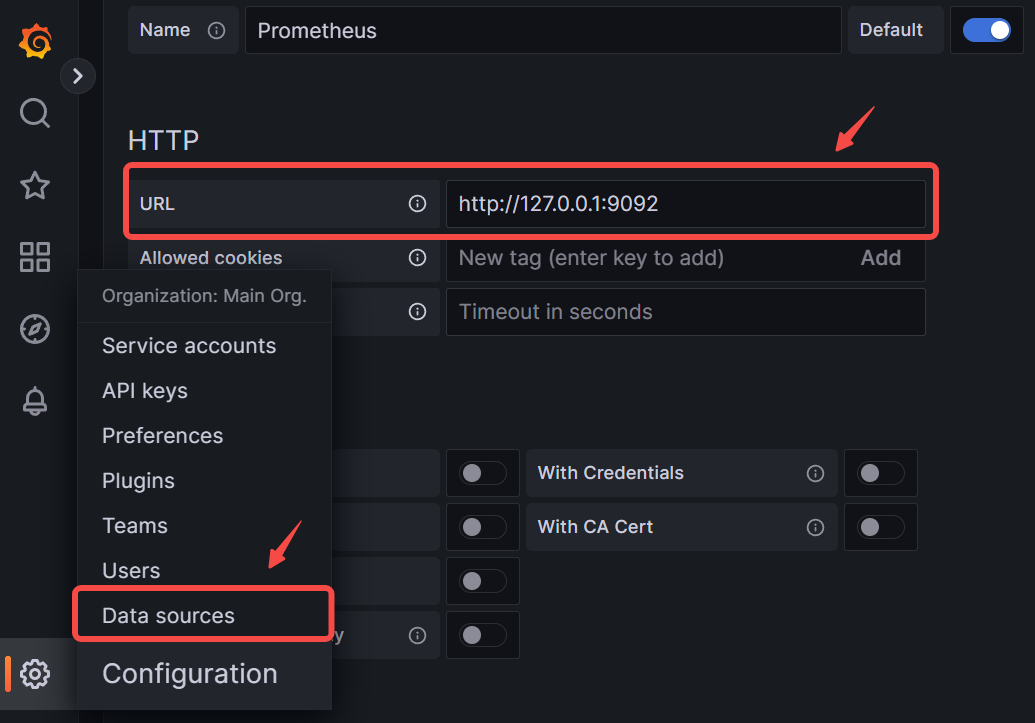

-Add the Prometheus instance created above to Grafana as a data source:

-

-

-

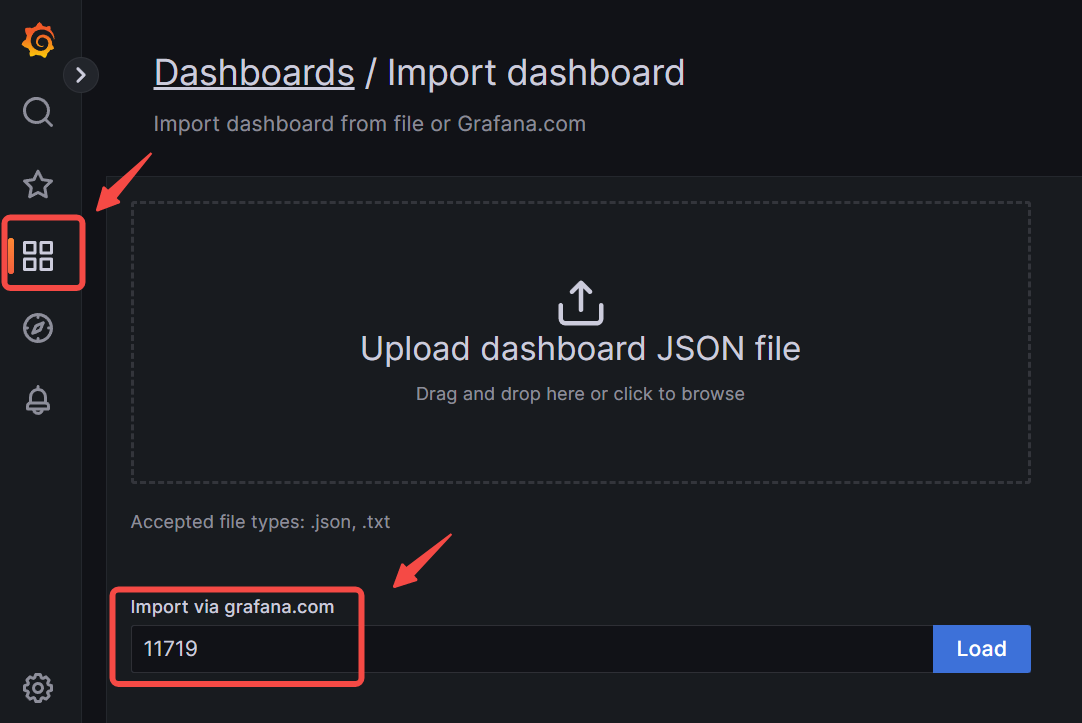

-The official APISIX metric dashboard is published to [Grafana dashboards](https://grafana.com/grafana/dashboards/) with ID [11719](https://grafana.com/grafana/dashboards/11719-apache-apisix/). You can then import the dashboard into Grafana with the ID.

-

-

-

-If everything is OK, the dashboard will automatically visualize metrics in real time.

-

-

-

-## Next Steps

-

-You have now learned how to monitor APISIX metrics with Prometheus and visualize them in Grafana.

-

-Explore other resources in How-To Guides to monitor APISIX logs and traces (coming soon).

-


diff --git a/enterprise_versioned_docs/version-3.2.2/how-to-guide/security/secrets-management/manage-secrets-in-hashicorp-vault.md b/enterprise_versioned_docs/version-3.2.2/how-to-guide/security/secrets-management/manage-secrets-in-hashicorp-vault.md

deleted file mode 100644

index 146f4374..00000000

--- a/enterprise_versioned_docs/version-3.2.2/how-to-guide/security/secrets-management/manage-secrets-in-hashicorp-vault.md

+++ /dev/null

@@ -1,265 +0,0 @@

----

-title: Manage APISIX Secrets in HashiCorp Vault

-slug: /how-to-guide/security/secrets-management/manage-secrets-in-hashicorp-vault

----

-

-[HashiCorp Vault](https://www.vaultproject.io/) is a centralized platform for managing secrets and encryption across different environments and applications. It provides unified secrets management for storing and accessing sensitive data such as API keys, passwords, and certificates.

-

-APISIX supports storing sensitive data in the configuration file as secrets, such as `admin_key` and the etcd `username` and `password`.

-

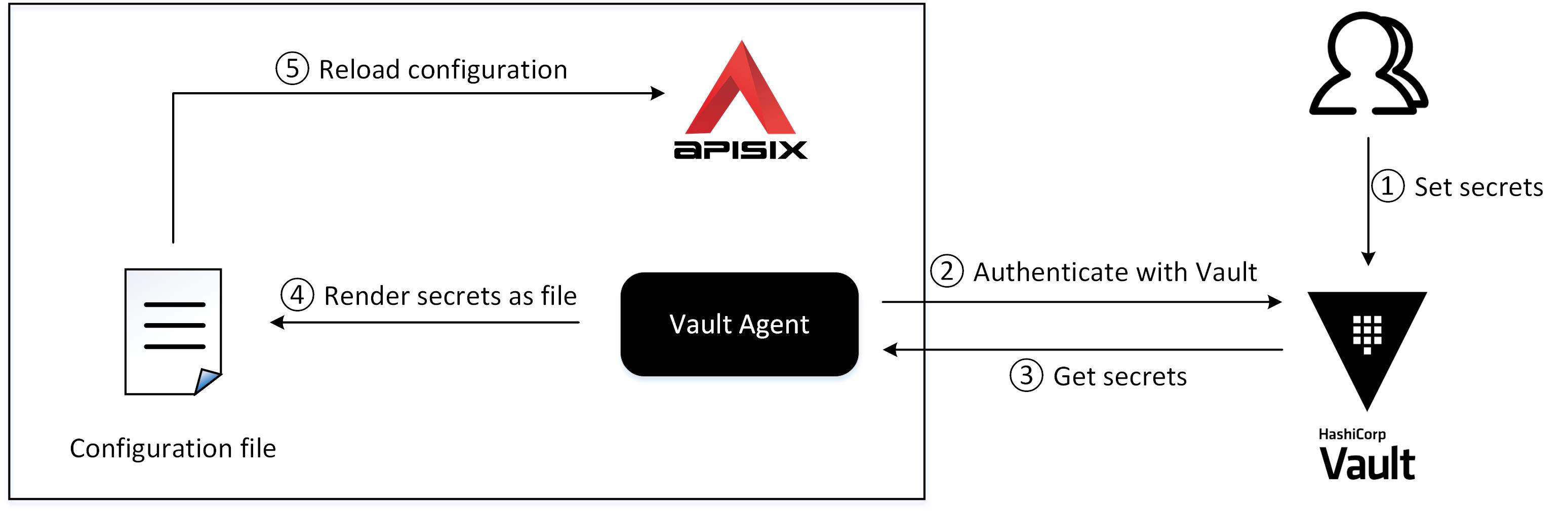

-This guide will show you how to configure HashiCorp Vault as a secrets manager, then store the APISIX `admin_key` in Vault and reference the key in the APISIX configuration file.

-

-

-

-## Prerequisite(s)

-

-* Install [Docker](https://docs.docker.com/get-docker/).

-* Install [cURL](https://curl.se/) to send requests to the services for validation.

-* Install [ZIP](https://infozip.sourceforge.net/Zip.html) to unzip the Vault binary from the [official distributed zipped file](https://developer.hashicorp.com/vault/downloads).

-* Follow the [Getting Started tutorial](../../../getting-started/) to start a new APISIX instance in Docker.

-

-## Configure Vault Server

-

-Start a Vault instance in dev mode in Docker named `apisix-quickstart-vault` with the token `apisix-quickstart-vault-token`. The exposed port is mapped to `8200` on the host machine:

-

-```shell

-docker run -d --cap-add=IPC_LOCK \

- -e 'VAULT_DEV_LISTEN_ADDRESS=0.0.0.0:8200' \

- -e 'VAULT_ADDR=http://127.0.0.1:8200' \

-# highlight-start

- -e 'VAULT_DEV_ROOT_TOKEN_ID=apisix-quickstart-vault-token' \

- -e 'VAULT_TOKEN=apisix-quickstart-vault-token' \

- --network=apisix-quickstart-net \

- --name apisix-quickstart-vault \

-# highlight-end

- -p 8200:8200 vault:1.13.0

-```

-

-APISIX needs permission to access Vault and retrieve secrets. You should create a policy file in [HashiCorp Configuration Language (HCL)](https://github.com/hashicorp/hcl) to generate a Vault access token for APISIX.

-

-Create a Vault policy file named `apisix-policy.hcl` in the Vault instance to grant APISIX read permission on the path `secret/`. You can put secrets under the path `secret/` to allow APISIX to read them:

-

-```shell

-docker exec apisix-quickstart-vault /bin/sh -c "echo '

-# highlight-start

-path \"secret/data/*\" {

- capabilities = [\"read\"]

-}

-# highlight-end

-' > /etc/apisix-policy.hcl"

-```

-

-Apply the policy file to the Vault instance:

-

-```shell

-docker exec apisix-quickstart-vault vault policy write apisix-policy /etc/apisix-policy.hcl

-```

-

-Next, generate the access token attached to the newly defined policy for APISIX to access Vault:

-

-```shell

-docker exec apisix-quickstart-vault vault token create -policy="apisix-policy"

-```

-

-Each run of the above command generates a different token. If successful, the output should be similar to the following:

-

-```text

-Key                  Value

----                  -----

-# highlight-start

-token                hvs.CAESIHUznrV4wgcifUia0FROd6iprK7NjipAiHBYwiZDQP9TGh4KHGh2cy5ndHc5dzBPbXd5Y1pzblZXd2ZuQXA3ZHI

-# highlight-end

-token_accessor       YY4iCj2lICDNd50ZJDsBjvZK

-token_duration       768h

-token_renewable      true

-token_policies       ["apisix-policy" "default"]

-identity_policies    []

-policies             ["apisix-policy" "default"]

-```

-

-Copy the value of `token` and store it in a file named `apisix-vault-token` in the APISIX instance:

-

-```shell

-docker exec apisix-quickstart /bin/sh -c "echo '

-# highlight-start

-hvs.CAESIHUznrV4wgcifUia0FROd6iprK7NjipAiHBYwiZDQP9TGh4KHGh2cy5ndHc5dzBPbXd5Y1pzblZXd2ZuQXA3ZHI

-# highlight-end

-' > /usr/local/apisix/conf/apisix-vault-token"

-```

-

-Vault Agent uses the token stored in `apisix-vault-token` to authenticate with Vault in the next step.

-

-## Configure Vault Agent

-

-Vault Agent is a client daemon that runs with your applications and automates authentication with Vault and token renewal. It acts as a proxy for Vault's API and renders Vault secrets as files.

-

-Sensitive APISIX configuration values, such as the `admin_key`, can be stored in Vault. Vault Agent then reads them and injects them into the APISIX configuration file.

-

-Download the Vault binary and copy it into the APISIX instance:

-

-```shell

-wget https://releases.hashicorp.com/vault/1.13.0/vault_1.13.0_linux_amd64.zip

-unzip vault_1.13.0_linux_amd64.zip

-docker cp vault apisix-quickstart:/usr/local/bin/

-```

-

-Create a file named `vault-agent-apisix.hcl` in the APISIX instance to configure how Vault Agent accesses the Vault server and renders secrets:

-

-```shell

-docker exec apisix-quickstart /bin/sh -c "echo '

-pid_file = \"./pidfile\"

-

-vault {

- # highlight-start

- // Annotate 1

- address = \"http://apisix-quickstart-vault:8200\"

- # highlight-end

- retry {

- num_retries = 5

- }

-}

-

-auto_auth {

- method {

- type = \"token_file\"

- config = {

- # highlight-start

- // Annotate 2

- token_file_path = \"/usr/local/apisix/conf/apisix-vault-token\"

- # highlight-end

- }

- }

-}

-

-# highlight-start

-// Annotate 3

-listener \"tcp\" {

- address = \"127.0.0.1:8100\"

- tls_disable = true

-}

-# highlight-end

-

-template {

- # highlight-start

- // Annotate 4

- source = \"/usr/local/apisix/conf/config.ctmpl\"

- // Annotate 5

- destination = \"/usr/local/apisix/conf/config.yaml\"

- # highlight-end

-}

-' > /usr/local/apisix/conf/vault-agent-apisix.hcl"

-```

-

-❶ Vault address

-

-❷ Token for authentication

-

-❸ Vault Agent daemon listening attributes

-

-❹ Template file for rendering

-

-❺ Rendered APISIX configuration file

-

-Once started, Vault Agent injects secrets into the APISIX configuration file `config.yaml` according to the template file `config.ctmpl`. Both files are stored in the default APISIX configuration path `/usr/local/apisix/conf/`.

-

-## Store a Secret

-

-Create a secret `adminKey=apisix-quickstart-key` and store it at the path `secret/apisix` in Vault:

-

-```shell

-docker exec apisix-quickstart-vault vault kv put secret/apisix adminKey=apisix-quickstart-key

-```

-

-The expected response is similar to the following:

-

-```text

-=== Secret Path ===

-secret/data/apisix

-

-======= Metadata =======

-Key Value

---- -----

-created_time 2023-03-15T11:42:17.123175125Z

-custom_metadata

-deletion_time n/a

-destroyed false

-version 1

-```

-

-## Use the Secret

-

-Create an APISIX configuration template file named `config.ctmpl` in the APISIX instance. Populate the `admin_key` using [Consul Template syntax](https://github.com/hashicorp/consul-template/blob/v0.28.1/docs/templating-language.md); the expression is replaced at runtime with the value of `adminKey` stored at `secret/apisix`:

-

-```shell

-docker exec apisix-quickstart /bin/sh -c "echo '

-deployment:

-  role: traditional

-  role_traditional:

-    config_provider: etcd

-  admin:

-    allow_admin:

-      - 0.0.0.0/0

-    admin_key:

-      -

-        name: admin

-        # highlight-start

-        key: {{ with secret \"secret/apisix\"}} {{ .Data.data.adminKey }} {{ end }}

-        # highlight-end

-        role: admin

-plugin_attr:

-  prometheus:

-    export_addr:

-      ip: 0.0.0.0

-      port: 9091

-' > /usr/local/apisix/conf/config.ctmpl"

-```

-

-The following command starts the Vault Agent daemon in the APISIX instance with the configuration file `vault-agent-apisix.hcl` created above. The Agent retrieves the secret and injects it as the `admin_key` into the APISIX configuration file `config.yaml`.

-

-```shell

-docker exec -d apisix-quickstart vault agent -config=/usr/local/apisix/conf/vault-agent-apisix.hcl

-```

-

-Reload APISIX for the configuration changes to take effect:

-

-```shell

-docker exec apisix-quickstart apisix reload

-```

-

-## Validate

-

-To verify that the new `admin_key` is in effect:

-

-1. Send a request to the Admin API with the correct `admin_key`:

-

- ```shell

- curl -i "http://localhost:9180/apisix/admin/routes" -H 'X-API-KEY: apisix-quickstart-key'

- ```

-

- The expected response is similar to the following:

-

- ```text

- HTTP/1.1 200 OK

- ...

- ```

-

-2. Send a request to the Admin API with a wrong `admin_key`:

-

- ```shell

- curl -i "http://localhost:9180/apisix/admin/routes" -H 'X-API-KEY: wrong-key'

- ```

-

- The expected response is similar to the following:

-

- ```text

- HTTP/1.1 401 Unauthorized

- ...

- ```

-

-## Next Steps

-

-APISIX also supports Vault as a backend for managing other types of secrets, such as JWT tokens and certificates.

-

-See other guides in this chapter (coming soon) to learn more about integrating Vault with APISIX for authentication and certificate management.

diff --git a/enterprise_versioned_docs/version-3.2.2/how-to-guide/traffic-management/tls-and-mtls/configure-https-between-client-and-apisix.md b/enterprise_versioned_docs/version-3.2.2/how-to-guide/traffic-management/tls-and-mtls/configure-https-between-client-and-apisix.md

deleted file mode 100644

index 69886490..00000000

--- a/enterprise_versioned_docs/version-3.2.2/how-to-guide/traffic-management/tls-and-mtls/configure-https-between-client-and-apisix.md

+++ /dev/null

@@ -1,156 +0,0 @@

----

-title: Configure HTTPS Between Client and APISIX

-slug: /how-to-guide/traffic-management/tls-and-mtls/configure-https-between-client-and-apisix

----

-

-_TLS (Transport Layer Security)_ is a cryptographic protocol designed to secure communication between two entities. Enforcing HTTPS between clients and APISIX improves the security and authenticity of data in transit.

-

-This guide will show you how to configure HTTPS between client applications and APISIX.

-

-

-

-

-

-

-## Prerequisite(s)

-