A unified modular hypervisor based on ArceOS.

+

@@ -20,156 +20,82 @@ English | [中文](README_CN.md)

# Introduction

-AxVisor is a Hypervisor implemented based on the ArceOS unikernel framework. Its goal is to leverage the basic operating system functionalities provided by ArceOS as a foundation to build a unified and componentized Hypervisor.

+AxVisor is a Hypervisor built on the ArceOS kernel. Its goal is to use the basic operating system functionality that ArceOS provides as the foundation for a lightweight, unified, and modular Hypervisor.

-**Unified** means using the same codebase to support three architectures—x86_64, Arm (aarch64), and RISC-V—maximizing the reuse of architecture-agnostic code and simplifying development and maintenance efforts.

+- **Unified** means that a single codebase supports three architectures: x86_64, Arm (aarch64), and RISC-V. This maximizes the reuse of architecture-agnostic code and reduces development and maintenance costs.

-**Componentized** means that the Hypervisor's functionalities are decomposed into multiple independently usable components. Each component implements a specific function, and components communicate through standardized interfaces to achieve decoupling and reusability.

+- **Modular** means that the Hypervisor's functionalities are decomposed into multiple independently usable components. Each component implements a specific function, and components communicate through standardized interfaces to achieve decoupling and reusability.

## Architecture

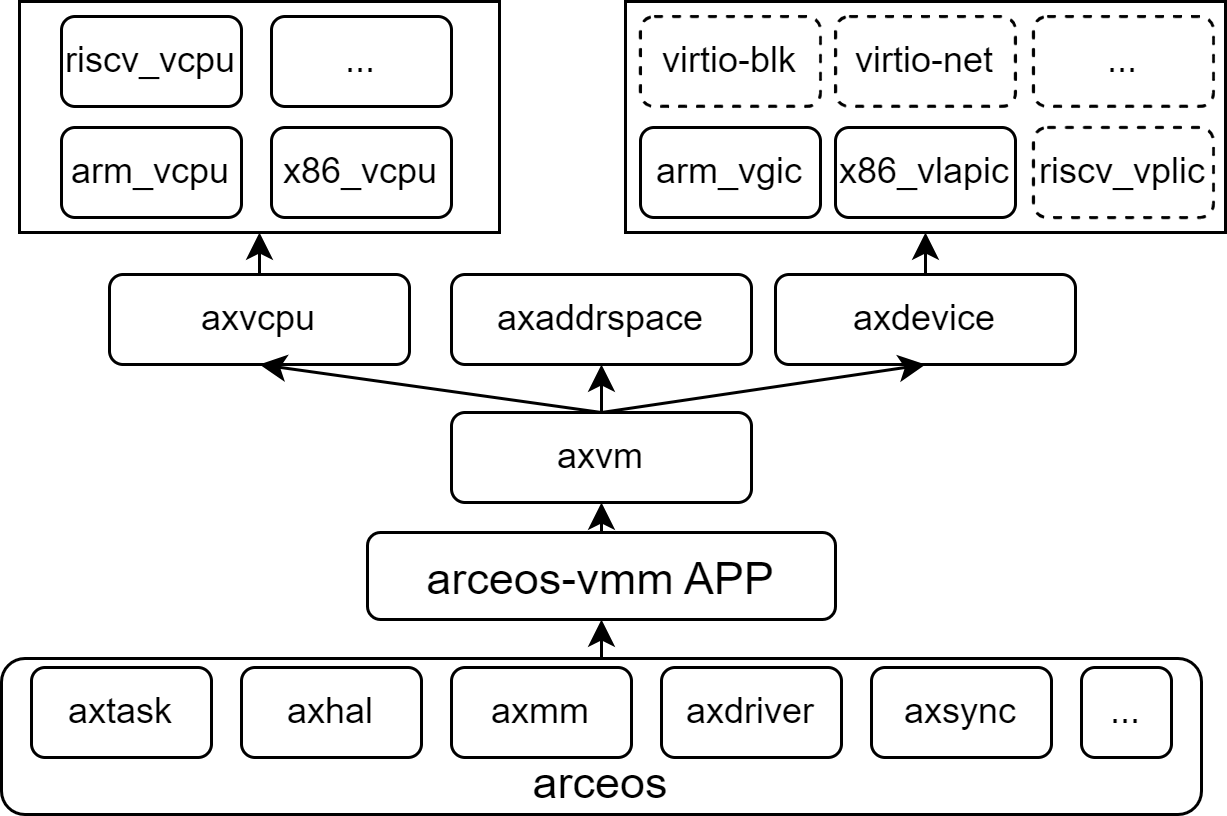

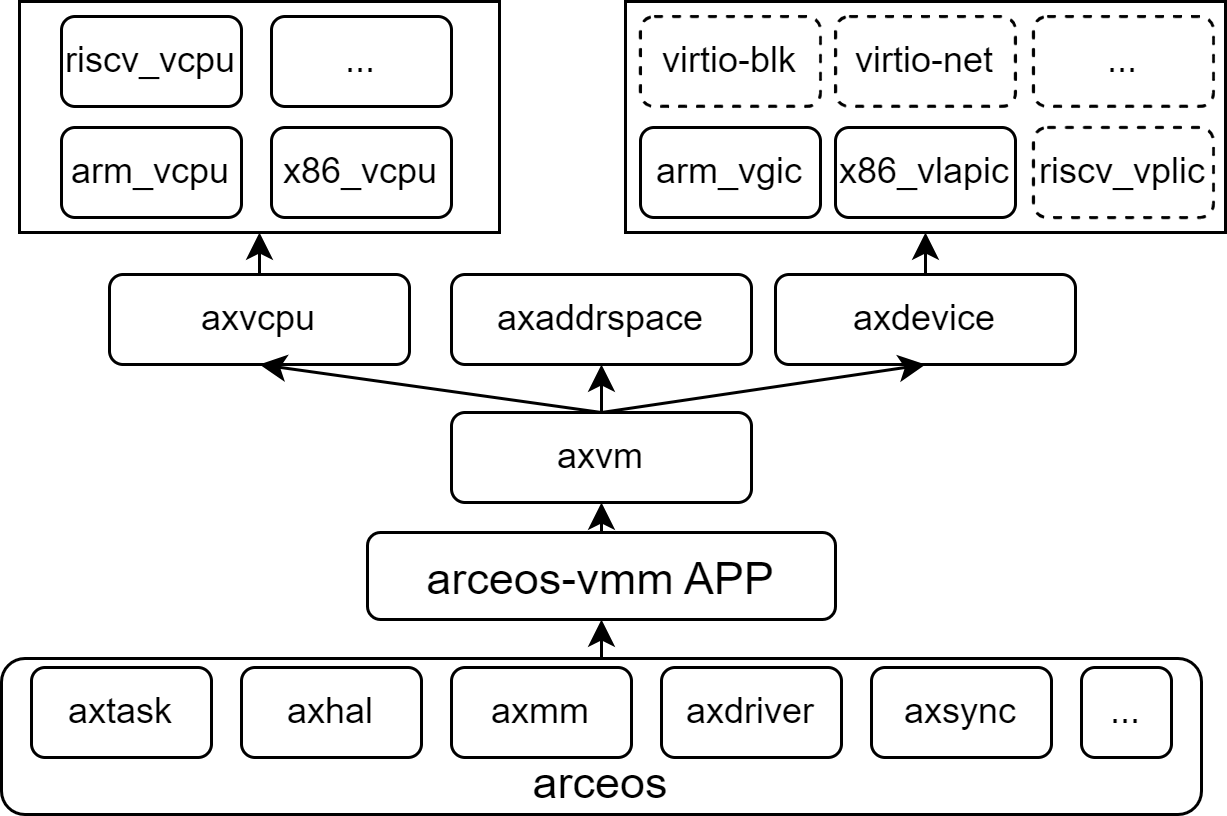

-The software architecture of AxVisor is divided into five layers as shown in the diagram below. Each box represents an independent module, and the modules communicate with each other through standard interfaces.

+The software architecture of AxVisor is divided into five layers as shown in the diagram below. Each box represents an independent component, and components communicate with each other through standard interfaces. The complete architecture description can be found in the [documentation](https://arceos-hypervisor.github.io/axvisorbook/docs/overview).

-The complete architecture description can be found in the [documentation](https://arceos-hypervisor.github.io/doc/arch_cn.html).

+## Hardware Platforms

-## Hardwares

+AxVisor has been verified on multiple hardware platforms, ranging from virtualized environments to physical devices. To speed up deployment, the [axvisor-guest](https://github.com/arceos-hypervisor/axvisor-guest) repository provides one-click build scripts for each platform that automatically generate the corresponding image files.

-Currently, AxVisor has been verified on the following platforms:

+| Platform Name | Architecture Support | Key Features |

+|---------------|---------------------|--------------|

+| QEMU | ARM64, x86_64 | Virtualization platform, supports multiple architectures, for development and testing |

+| Orange Pi 5 Plus | ARM64 | Development board based on Rockchip RK3588, high-performance ARM platform |

+| Phytium Pi | ARM64 | Development board based on the Phytium E2000Q processor, a Chinese-designed ARM platform |

+| ROC-RK3568-PC | ARM64 | Development board based on Rockchip RK3568, suitable for industrial applications |

+| EVM3588 | ARM64 | Evaluation board based on Rockchip RK3588, enterprise-level applications |

-- [x] QEMU ARM64 virt (qemu-max)

-- [x] Rockchip RK3568 / RK3588

-- [x] PhytiumPi

+## Guest Systems

-## Guest VMs

+AxVisor supports multiple operating systems as guests, from lightweight unikernels to mature monolithic-kernel systems. To simplify deployment, the [axvisor-guest](https://github.com/arceos-hypervisor/axvisor-guest) repository provides one-click build scripts for the different guest systems that quickly generate adapted guest images.

-Currently, AxVisor has been verified in scenarios with the following systems as guests:

+| Guest System | System Type | Architecture Support | Feature Description |

+|--------------|-------------|---------------------|-------------------|

+| [ArceOS](https://github.com/arceos-org/arceos) | Unikernel | ARM64, x86_64, RISC-V | Rust-based componentized operating system, lightweight and high-performance |

+| [Starry-OS](https://github.com/Starry-OS) | Monolithic kernel OS | ARM64, x86_64 | Concise Unix-like system, supports POSIX interface |

+| [NimbOS](https://github.com/equation314/nimbos) | RTOS | ARM64, x86_64, RISC-V | Real-time operating system for embedded scenarios |

+| Linux | Monolithic kernel OS | ARM64, x86_64, RISC-V | Mature and stable general-purpose operating system, rich software ecosystem |

-- [ArceOS](https://github.com/arceos-org/arceos)

-- [Starry-OS](https://github.com/Starry-OS)

-- [NimbOS](https://github.com/equation314/nimbos)

-- Linux

+# Build

-## Shell Management

-

-AxVisor provides an interactive shell interface for managing virtual machines and file operations.

-

-For detailed information about shell features, commands, and usage, see: [Shell模块介绍.md](doc/Shell模块介绍.md)

-

-# Build and Run

-

-After AxVisor starts, it loads and starts the guest based on the information in the guest configuration file. Currently, AxVisor supports loading guest images from a FAT32 file system and also supports binding guest images to the hypervisor image through static compilation (using include_bytes).

+AxVisor is built on the Rust ecosystem. Its extended xtask toolchain covers project builds, configuration management, and debugging support, giving developers a unified and efficient workflow.

## Build Environment

-AxVisor is written in the Rust programming language, so you need to install the Rust development environment following the instructions on the official Rust website. Additionally, you need to install cargo-binutils to use tools like rust-objcopy and rust-objdump.

+First, on Linux, install basic development packages such as `libssl-dev`, `gcc`, `libudev-dev`, and `pkg-config`.

-```console

-cargo install cargo-binutils

-```

+Second, AxVisor is written in Rust, so install the Rust development environment by following the instructions on the official Rust website, then run `cargo install cargo-binutils` to install [cargo-binutils](https://github.com/rust-embedded/cargo-binutils), which provides tools such as `rust-objcopy` and `rust-objdump`.

-If necessary, you may also need to install [musl-gcc](http://musl.cc/x86_64-linux-musl-cross.tgz) to build guest applications.

+> If necessary, you may also need to install [musl-gcc](http://musl.cc/x86_64-linux-musl-cross.tgz) to build guest applications.
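A quick way to confirm that the build environment described above is in place is to check that the named tools are on `PATH`. The following is a minimal sketch, not part of the AxVisor tooling: the function name `missing_tools` is hypothetical, and the `apt-get` line in the comment assumes a Debian or Ubuntu host because the package names above follow that convention.

```shell
#!/bin/sh
# Print each given command that is not found on PATH, one per line.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Assumed install commands on a Debian/Ubuntu host (adapt to your distribution):
#   sudo apt-get install libssl-dev gcc libudev-dev pkg-config
#   cargo install cargo-binutils

# rust-objcopy and rust-objdump are the tools provided by cargo-binutils.
missing=$(missing_tools gcc pkg-config cargo rust-objcopy rust-objdump)
if [ -n "$missing" ]; then
    echo "Missing build tools:" $missing
else
    echo "All build tools found."
fi
```

Library packages such as `libssl-dev` and `libudev-dev` do not install a command, so this check only covers the executables.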

## Configuration Files

-Since guest configuration is a complex process, AxVisor chooses to use toml files to manage guest configurations, which include the virtual machine ID, virtual machine name, virtual machine type, number of CPU cores, memory size, virtual devices, and passthrough devices.

-

-- In the source code's `./config/vms` directory, there are some example templates for guest configurations. The configuration files are named in the format `--board_or_cpu-smpx`, where:

- - `` is the guest operating system name

- - `` is the architecture

- - `board_or_cpu` is the name of the hardware development board or CPU (different strings are concatenated with `_`)

- - `smpx` refers to the number of CPUs allocated to the guest, where `x` is the specific value

- - The different components are concatenated with `-` to form the whole name

-

-- Additionally, you can also use the [axvmconfig](https://github.com/arceos-hypervisor/axvmconfig) tool to generate a custom configuration file. For detailed information, please refer to [axvmconfig](https://arceos-hypervisor.github.io/axvmconfig/axvmconfig/index.html).

-

-## Load and run from file system

-

-Loading from the filesystem refers to the method where the AxVisor image, Linux guest image, and its device tree are independently deployed in the filesystem on the storage. After AxVisor starts, it loads the guest image and its device tree from the filesystem to boot the guest.

-

-### NimbOS as guest

-

-1. Execute script to download and prepare NimbOS image.

-

- ```shell

- ./scripts/nimbos.sh --arch aarch64

- ```

-

-2. Execute `./axvisor.sh run --plat aarch64-generic --features fs,ept-level-4 --arceos-args BUS=mmio,BLK=y,DISK_IMG=tmp/nimbos-aarch64.img,LOG=info --vmconfigs configs/vms/nimbos-aarch64-qemu-smp1.toml` to build AxVisor and start it in QEMU.

+AxVisor uses a layered configuration system, including hardware platform configuration and guest configuration, both in TOML format.

-3. After that, you can directly run `./axvisor.sh run` to start it, and modify `.hvconfig.toml` to change the startup parameters.

+### Hardware Platform Configuration

-### More guest

+Hardware platform configuration files are located in the `configs/board/` directory, with each configuration file corresponding to a development board (or QEMU platform architecture) that we have verified. They specify the target architecture, feature sets, driver support, log levels, and build options.

- TODO

+> The guest configuration item `vm_configs` is not set by default; you must set it before use.
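Because `vm_configs` starts out unset, it can help to check the build configuration before building. The sketch below makes several assumptions that the text above does not confirm: that `vm_configs` is a TOML array of guest configuration paths written on a single line, that an unset value looks like `vm_configs = []` or is absent, and that the file to check is `.build.toml`. The function name `has_vm_configs` is hypothetical.

```shell
#!/bin/sh
# Succeed if FILE contains a single-line `vm_configs = [...]` entry that lists
# at least one non-empty quoted path. Multi-line arrays are not handled.
has_vm_configs() {
    grep -Eqs '^[[:space:]]*vm_configs[[:space:]]*=[[:space:]]*\[[^]]*"[^"]+"' "$1"
}

# Check the file given as the first argument, or .build.toml by default.
config="${1:-.build.toml}"
if has_vm_configs "$config"; then
    echo "$config: vm_configs is set"
else
    echo "$config: vm_configs is empty or missing; add at least one guest configuration path"
fi
```

Under these assumptions, a line such as `vm_configs = ["configs/vms/linux-aarch64-qemu-smp1.toml"]` would pass the check.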

-## Load and run from memory

+### Guest Configuration

-Loading from memory refers to a method where the AxVisor image, guest image, and its device tree are already packaged together during the build phase. Only AxVisor itself needs to be deployed in the file system on the storage device. After AxVisor starts, it loads the guest image and its device tree from memory to boot the guest.

+Guest configuration files are located in the `configs/vms/` directory, defining the runtime parameters of guests, including basic information, kernel configuration, memory regions, and device configuration details.

-### linux as guest

+The configuration file naming format is `--board_or_cpu-smpx`, where `` is the guest system name (such as `arceos`, `linux`, `nimbos`), `` is the architecture (such as `aarch64`, `x86_64`, `riscv64`), `board_or_cpu` is the hardware development board or CPU name, and `smpx` is the number of CPUs allocated to the guest.
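The naming format can be illustrated by splitting an existing file name back into its fields. This is a minimal sketch using POSIX parameter expansion; the function name `parse_vm_config_name` is hypothetical, and it assumes that the guest system name and the architecture contain no `-` themselves.

```shell
#!/bin/sh
# Split a guest configuration file name into its four dash-separated fields.
parse_vm_config_name() {
    name=$(basename "$1" .toml)
    os=${name%%-*}         # text before the first '-'
    rest=${name#*-}
    arch=${rest%%-*}       # text before the second '-'
    rest=${rest#*-}
    board=${rest%-smp*}    # everything before the trailing "-smpN"
    cpus=${rest##*-smp}    # the number after "smp"
    printf 'os=%s arch=%s board=%s cpus=%s\n' "$os" "$arch" "$board" "$cpus"
}

parse_vm_config_name configs/vms/linux-aarch64-qemu-smp1.toml
# prints: os=linux arch=aarch64 board=qemu cpus=1
```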

-1. Prepare working directory

- ```console

- mkdir -p tmp

- cp configs/vms/linux-aarch64-qemu-smp1.toml tmp/

- cp configs/vms/linux-aarch64-qemu-smp1.dts tmp/

- ```

+## Compilation

-2. [See Linux build help](https://github.com/arceos-hypervisor/guest-test-linux) to get the guest Image and rootfs.img, then copy them to the `tmp` directory.

+AxVisor uses the xtask tool for build management, supporting multiple hardware platforms and configuration options. To build and run AxVisor quickly, see the [Quick Start](https://arceos-hypervisor.github.io/axvisorbook/docs/category/quickstart) chapter in the accompanying documentation.

-3. Execute `dtc -O dtb -I dts -o tmp/linux-aarch64-qemu-smp1.dtb tmp/linux-aarch64-qemu-smp1.dts` to build the guest device tree file

+1. **Generate Configuration**: Use `cargo xtask defconfig ` to select the target hardware platform configuration from the `configs/board/` directory. This command copies the corresponding board-level configuration to `.build.toml` as the build configuration.

-4. Execute `./axvisor.sh defconfig`, then edit the `.hvconfig.toml` file, set the `vmconfigs` item to your guest machine configuration file path, with the following content:

+2. **Modify Configuration**: Use `cargo xtask menuconfig` to launch the interactive configuration interface, where you can adjust the target architecture, feature sets, log levels, and other parameters.

- ```toml

- arceos_args = [

- "BUS=mmio",

- "BLK=y",

- "MEM=8g",

- "LOG=debug",

- "QEMU_ARGS=\"-machine gic-version=3 -cpu cortex-a72 \"",

- "DISK_IMG=\"tmp/rootfs.img\"",

- ]

- vmconfigs = [ "tmp/linux-aarch64-qemu-smp1.toml"]

- ```

-

-4. Execute `./axvisor.sh run` to build AxVisor and start it in QEMU.

-

-### More guest

-

- TODO

+3. **Execute Build**: Use `cargo xtask build` to compile the project according to the `.build.toml` configuration file, generating the target platform binary file.
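The three steps above can be sketched as a small helper that prints the commands in order for a given board. The helper name `xtask_build_steps` and the board name `qemu-aarch64` are hypothetical; use the name of an actual configuration file under `configs/board/`.

```shell
#!/bin/sh
# Print the three xtask commands for BOARD in the order described above.
xtask_build_steps() {
    board="$1"
    printf '%s\n' \
        "cargo xtask defconfig $board" \
        "cargo xtask menuconfig" \
        "cargo xtask build"
}

# "qemu-aarch64" is a placeholder board name used only for illustration.
xtask_build_steps qemu-aarch64
```

Run the printed commands one at a time from the repository root. `menuconfig` is interactive, so it is not suited to unattended scripts.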

# Contributing

-Feel free to fork this repository and submit a pull request.

-

-You can refer to these [discussions]((https://github.com/arceos-hypervisor/axvisor/discussions)) to gain deeper insights into the project's ideas and future development direction.

-

-## Development

-

-To contribute to AxVisor, you can follow these steps:

-

-1. Fork the repository on GitHub.

-2. Clone your forked repository to your local machine.

-3. Create a new branch for your feature or bug fix.

-4. Make your changes and commit them with clear messages.

-5. Push your changes to your forked repository.

-6. Open a pull request against the main branch of the original repository.

-

-To develop crates used by AxVisor, you can use the following command to build and run the project:

-

-```bash

-cargo install cargo-lpatch

-cargo lpatch -n deps_crate_name

-```

-

-Then you can modify the code in the `crates/deps_crate_name` directory, and it will be automatically used by AxVisor.

-

-## Contributors

-

-This project exists thanks to all the people who contribute.

+Feel free to fork this repository and submit pull requests. This project exists and grows thanks to the support of all its contributors.

@@ -177,9 +103,9 @@ This project exists thanks to all the people who contribute.

# License

-AxVisor uses the following open-source license:

+AxVisor uses the following open-source licenses:

- Apache-2.0

- MulanPubL-2.0

- MulanPSL2

-- GPL-3.0-or-later

+- GPL-3.0-or-later

\ No newline at end of file

diff --git a/README_CN.md b/README_CN.md

index 37cd0102..1ce87af8 100644

--- a/README_CN.md

+++ b/README_CN.md

@@ -4,9 +4,9 @@

@@ -20,236 +20,82 @@

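# Introduction

-AxVisor is a Hypervisor implemented based on the ArceOS unikernel framework. Its goal is to leverage the basic operating system functionalities provided by ArceOS as a foundation to build a unified and componentized Hypervisor.

+AxVisor is a Hypervisor built on the ArceOS kernel. Its goal is to use the basic operating system functionality that ArceOS provides as the foundation for a lightweight, unified, and componentized Hypervisor.

-**Unified** means that a single codebase supports three architectures: x86_64, Arm (aarch64), and RISC-V. This maximizes the reuse of architecture-agnostic code and reduces development and maintenance costs.

+- **Unified** means that a single codebase supports three architectures: x86_64, Arm (aarch64), and RISC-V. This maximizes the reuse of architecture-agnostic code and reduces development and maintenance costs.

-**Componentized** means that the Hypervisor's functionalities are decomposed into multiple independently usable components. Each component implements a specific function, and components communicate through standard interfaces to achieve decoupling and reusability.

+- **Componentized** means that the Hypervisor's functionalities are decomposed into multiple independently usable components. Each component implements a specific function, and components communicate through standard interfaces to achieve decoupling and reusability.

## Architecture

-The software architecture of AxVisor is divided into five layers as shown in the diagram below. Each box represents an independent component, and components communicate with each other through standard interfaces.

+The software architecture of AxVisor is divided into five layers as shown in the diagram below. Each box represents an independent component, and components communicate with each other through standard interfaces. The complete architecture description can be found in the [documentation](https://arceos-hypervisor.github.io/axvisorbook/docs/overview).

-The complete architecture description can be found in the [documentation](https://arceos-hypervisor.github.io/doc/arch_cn.html).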

-

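## Hardware Platforms

-Currently, AxVisor has been verified on the following platforms:

+AxVisor has been verified on multiple hardware platforms, ranging from virtualized environments to physical devices. To speed up deployment, the [axvisor-guest](https://github.com/arceos-hypervisor/axvisor-guest) repository provides one-click build scripts for each platform that automatically generate the corresponding image files.

-- [x] QEMU ARM64 virt (qemu-max)

-- [x] Rockchip RK3568 / RK3588

-- [x] Phytium Pi

+| Platform Name | Architecture Support | Key Features |

+|---------|---------|---------|

+| QEMU | ARM64, x86_64 | Virtualization platform, supports multiple architectures, for development and testing |

+| Orange Pi 5 Plus | ARM64 | Development board based on Rockchip RK3588, high-performance ARM platform |

+| Phytium Pi | ARM64 | Development board based on the Phytium E2000Q processor, a Chinese-designed ARM platform |

+| ROC-RK3568-PC | ARM64 | Development board based on Rockchip RK3568, suitable for industrial applications |

+| EVM3588 | ARM64 | Evaluation board based on Rockchip RK3588, enterprise-level applications |

-## Guest VMs

+## Guest Systems

-Currently, AxVisor has been verified in scenarios with the following systems as guests:

+AxVisor supports multiple operating systems as guests, from lightweight unikernels to mature monolithic-kernel systems. To simplify deployment, the [axvisor-guest](https://github.com/arceos-hypervisor/axvisor-guest) repository provides one-click build scripts for the different guest systems that quickly generate adapted guest images.

-- [ArceOS](https://github.com/arceos-org/arceos)

-- [Starry-OS](https://github.com/Starry-OS)

-- [NimbOS](https://github.com/equation314/nimbos)

-- Linux

+| Guest System | System Type | Architecture Support | Feature Description |

+|-----------|---------|---------|---------|

+| [ArceOS](https://github.com/arceos-org/arceos) | Unikernel | ARM64, x86_64, RISC-V | Rust-based componentized operating system, lightweight and high-performance |

+| [Starry-OS](https://github.com/Starry-OS) | Monolithic kernel OS | ARM64, x86_64 | Concise Unix-like system, supports POSIX interface |

+| [NimbOS](https://github.com/equation314/nimbos) | RTOS | ARM64, x86_64, RISC-V | Real-time operating system for embedded scenarios |

+| Linux | Monolithic kernel OS | ARM64, x86_64, RISC-V | Mature and stable general-purpose operating system, rich software ecosystem |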

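-# Build and Run

+# Build

-After AxVisor starts, it loads and starts the guest based on the information in the guest configuration file. Currently, AxVisor supports loading guest images from a FAT32 file system and also supports binding guest images to the hypervisor image through static compilation (using include_bytes).

+AxVisor is built on the Rust ecosystem. Its extended xtask toolchain covers project builds, configuration management, and debugging support, giving developers a unified and efficient workflow.

## Build Environment

-AxVisor is written in the Rust programming language, so you need to install the Rust development environment following the instructions on the official Rust website. Additionally, you need to install [cargo-binutils](https://github.com/rust-embedded/cargo-binutils) to use tools like `rust-objcopy` and `rust-objdump`.

+First, on Linux, install basic development packages such as `libssl-dev`, `gcc`, `libudev-dev`, and `pkg-config`.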

-```console

-cargo install cargo-binutils

-```

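+Second, AxVisor is written in Rust, so install the Rust development environment by following the instructions on the official Rust website, then run `cargo install cargo-binutils` to install [cargo-binutils](https://github.com/rust-embedded/cargo-binutils), which provides tools such as `rust-objcopy` and `rust-objdump`.

-If necessary, you may also need to install [musl-gcc](http://musl.cc/x86_64-linux-musl-cross.tgz) to build guest applications.

+> If necessary, you may also need to install [musl-gcc](http://musl.cc/x86_64-linux-musl-cross.tgz) to build guest applications.

## Configuration Files

-Since guest configuration is a complex process, AxVisor chooses to use toml files to manage guest configurations, which include the virtual machine ID, virtual machine name, virtual machine type, number of CPU cores, memory size, virtual devices, and passthrough devices.

+AxVisor uses a layered configuration system consisting of hardware platform configuration and guest configuration, both in TOML format.

+

+### Hardware Platform Configuration

+

+Hardware platform configuration files are located in the `configs/board/` directory, with each configuration file corresponding to a development board (or QEMU platform architecture) that we have verified. They specify the target architecture, feature sets, driver support, log levels, and build options.

+

+> The guest configuration item `vm_configs` is not set by default; you must set it before use.

+

+### Guest Configuration

+

+Guest configuration files are located in the `configs/vms/` directory and define the runtime parameters of guests, including basic information, kernel configuration, memory regions, and device configuration details.

+

+The configuration file naming format is `--board_or_cpu-smpx`, where `` is the guest system name (such as `arceos`, `linux`, `nimbos`), `` is the architecture (such as `aarch64`, `x86_64`, `riscv64`), `board_or_cpu` is the hardware development board or CPU name, and `smpx` is the number of CPUs allocated to the guest.

+

+## Compilation

+

+AxVisor uses the xtask tool for build management, supporting multiple hardware platforms and configuration options. To build and run AxVisor quickly, see the [Quick Start](https://arceos-hypervisor.github.io/axvisorbook/docs/category/quickstart) chapter in the accompanying documentation.

+

+1. **Generate Configuration**: Use `cargo xtask defconfig ` to select the target hardware platform configuration from the `configs/board/` directory. This command copies the corresponding board-level configuration to `.build.toml` as the build configuration.

+

+2. **Modify Configuration**: Use `cargo xtask menuconfig` to launch the interactive configuration interface, where you can adjust the target architecture, feature sets, log levels, and other parameters.

+

+3. **Execute Build**: Use `cargo xtask build` to compile the project according to the `.build.toml` configuration file, generating the binary file for the target platform.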

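-- In the source code's `./config/vms` directory, there are some example templates for guest configurations. The configuration files are named in the format `--board_or_cpu-smpx`, where:

- - `` is the guest operating system name

- - `` is the architecture

- - `board_or_cpu` is the name of the hardware development board or CPU (different strings are concatenated with `_`)

- - `smpx` refers to the number of CPUs allocated to the guest, where `x` is the specific value

- - The different components are concatenated with `-` to form the whole name

+# Contributing

-- Additionally, you can also use the [axvmconfig](https://github.com/arceos-hypervisor/axvmconfig) tool to generate a custom configuration file. For detailed information, please refer to [axvmconfig](https://arceos-hypervisor.github.io/axvmconfig/axvmconfig/index.html).

-

-## Load and run from file system

-

-Loading from the file system refers to the method where the AxVisor image, the Linux guest image, and its device tree are deployed independently in the file system on the storage device. After AxVisor starts, it loads the guest image and its device tree from the file system to boot the guest.

-

-### NimbOS as guest

-

-1. Execute the script in the repository to download and prepare the NimbOS image: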

-

- ```bash

- ./scripts/nimbos.sh --arch aarch64

- ```

-

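-2. Execute `./axvisor.sh run --plat aarch64-generic --features fs,ept-level-4 --arceos-args BUS=mmio,BLK=y,DISK_IMG=tmp/nimbos-aarch64.img,LOG=info --vmconfigs configs/vms/nimbos-aarch64-qemu-smp1.toml` to build AxVisor and start the NimbOS guest in QEMU.

-

-3. After that, you can directly run `./axvisor.sh run` to start it, and modify `.hvconfig.toml` to change the startup parameters.

-

-### More guests

-

- TODO

-

-## Load and run from memory

-

-Loading from memory refers to the method where the AxVisor image, the guest image, and its device tree are packaged together at build time, so only AxVisor itself needs to be deployed in the file system on the storage device. After AxVisor starts, it loads the guest image and its device tree from memory to boot the guest.

-

-### Linux as guest

-

-1. Prepare the working directory and related files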

- ```console

- mkdir -p tmp

- cp configs/vms/linux-aarch64-qemu-smp1.toml tmp/

- cp configs/vms/linux-aarch64-qemu-smp1.dts tmp/

- ```

-

-2. [See Linux build help](https://github.com/arceos-hypervisor/guest-test-linux) to get the guest Image and rootfs.img, then copy them to the `tmp` directory.

-

-3. Build the guest device tree: `dtc -O dtb -I dts -o tmp/linux-aarch64-qemu-smp1.dtb tmp/linux-aarch64-qemu-smp1.dts`

-

-4. Execute `./axvisor.sh defconfig`, then edit the `.hvconfig.toml` file and set the `vmconfigs` item to the path of your guest configuration file, for example:

-

- ```toml

- arceos_args = [

- "BUS=mmio",

- "BLK=y",

- "MEM=8g",

- "LOG=debug",

- "QEMU_ARGS=\"-machine gic-version=3 -cpu cortex-a72 \"",

- "DISK_IMG=\"tmp/rootfs.img\"",

- ]

- vmconfigs = [ "tmp/linux-aarch64-qemu-smp1.toml"]

- ```

-

-4. Execute `./axvisor.sh run` to build AxVisor and start it in QEMU.

-

-### More guests

-

- TODO

-

-## Boot Example

-

-```bash

- d8888 .d88888b. .d8888b.

- d88888 d88P" "Y88b d88P Y88b

- d88P888 888 888 Y88b.

- d88P 888 888d888 .d8888b .d88b. 888 888 "Y888b.

- d88P 888 888P" d88P" d8P Y8b 888 888 "Y88b.

- d88P 888 888 888 88888888 888 888 "888

- d8888888888 888 Y88b. Y8b. Y88b. .d88P Y88b d88P

-d88P 888 888 "Y8888P "Y8888 "Y88888P" "Y8888P"

-

-log_level = info

-smp = 1

-

-[ 0.020822 0 axruntime:130] Logging is enabled.

-[ 0.026419 0 axruntime:131] Primary CPU 0 started, dtb = 0x44000000.

-[ 0.028520 0 axruntime:133] Found physcial memory regions:

-[ 0.030673 0 axruntime:135] [PA:0x40080000, PA:0x400d6000) .text (READ | EXECUTE | RESERVED)

-[ 0.033564 0 axruntime:135] [PA:0x400d6000, PA:0x400ef000) .rodata (READ | RESERVED)

-[ 0.035313 0 axruntime:135] [PA:0x400ef000, PA:0x400f5000) .data .tdata .tbss .percpu (READ | WRITE | RESERVED)

-[ 0.037083 0 axruntime:135] [PA:0x400f5000, PA:0x40135000) boot stack (READ | WRITE | RESERVED)

-[ 0.038622 0 axruntime:135] [PA:0x40135000, PA:0x4013b000) .bss (READ | WRITE | RESERVED)

-[ 0.040643 0 axruntime:135] [PA:0x4013b000, PA:0x48000000) free memory (READ | WRITE | FREE)

-[ 0.042907 0 axruntime:135] [PA:0x9000000, PA:0x9001000) mmio (READ | WRITE | DEVICE | RESERVED)

-[ 0.045011 0 axruntime:135] [PA:0x9040000, PA:0x9041000) mmio (READ | WRITE | DEVICE | RESERVED)

-[ 0.047070 0 axruntime:135] [PA:0x9100000, PA:0x9101000) mmio (READ | WRITE | DEVICE | RESERVED)

-[ 0.049093 0 axruntime:135] [PA:0x8000000, PA:0x8020000) mmio (READ | WRITE | DEVICE | RESERVED)

-[ 0.051081 0 axruntime:135] [PA:0xa000000, PA:0xa004000) mmio (READ | WRITE | DEVICE | RESERVED)

-[ 0.053120 0 axruntime:135] [PA:0x10000000, PA:0x3eff0000) mmio (READ | WRITE | DEVICE | RESERVED)

-[ 0.055229 0 axruntime:135] [PA:0x4010000000, PA:0x4020000000) mmio (READ | WRITE | DEVICE | RESERVED)

-[ 0.057642 0 axruntime:208] Initialize global memory allocator...

-[ 0.059377 0 axruntime:209] use TLSF allocator.

-[ 0.072071 0 axmm:60] Initialize virtual memory management...

-[ 0.136312 0 axruntime:150] Initialize platform devices...

-[ 0.137733 0 axhal::platform::aarch64_common::gic:67] Initialize GICv2...

-[ 0.143653 0 axtask::api:73] Initialize scheduling...

-[ 0.151435 0 axtask::api:79] use FIFO scheduler.

-[ 0.152744 0 axruntime:176] Initialize interrupt handlers...

-[ 0.157472 0 axruntime:186] Primary CPU 0 init OK.

-[ 0.159027 0:2 axvisor:17] Starting virtualization...

-[ 0.160968 0:2 axvisor:19] Hardware support: true

-[ 0.168619 0:4 axvisor::vmm::timer:103] Initing HV Timer...

-[ 0.170399 0:4 axvisor::hal:117] Hardware virtualization support enabled on core 0

-[ 0.295531 0:2 axvisor::vmm::config:33] Creating VM [1] "arceos"

-[ 0.301423 0:2 axvm::vm:113] Setting up memory region: [0x40000000~0x41000000] READ | WRITE | EXECUTE

-[ 0.334424 0:2 axvm::vm:156] Setting up passthrough device memory region: [0x8000000~0x8050000] -> [0x8000000~0x8050000]

-[ 0.339431 0:2 axvm::vm:156] Setting up passthrough device memory region: [0x9000000~0x9001000] -> [0x9000000~0x9001000]

-[ 0.341925 0:2 axvm::vm:156] Setting up passthrough device memory region: [0x9010000~0x9011000] -> [0x9010000~0x9011000]

-[ 0.343758 0:2 axvm::vm:156] Setting up passthrough device memory region: [0x9030000~0x9031000] -> [0x9030000~0x9031000]

-[ 0.345559 0:2 axvm::vm:156] Setting up passthrough device memory region: [0xa000000~0xa004000] -> [0xa000000~0xa004000]

-[ 0.348819 0:2 axvm::vm:191] VM created: id=1

-[ 0.350749 0:2 axvm::vm:206] VM setup: id=1

-[ 0.352526 0:2 axvisor::vmm::config:40] VM[1] created success, loading images...

-[ 0.355270 0:2 axvisor::vmm::images:24] Loading VM[1] images from memory

-[ 0.363583 0:2 axvisor::vmm:29] Setting up vcpus...

-[ 0.368014 0:2 axvisor::vmm::vcpus:176] Initializing VM[1]'s 1 vcpus

-[ 0.370802 0:2 axvisor::vmm::vcpus:207] Spawning task for VM[1] Vcpu[0]

-[ 0.374805 0:2 axvisor::vmm::vcpus:219] Vcpu task Task(5, "VM[1]-VCpu[0]") created cpumask: [0, ]

-[ 0.378878 0:2 axvisor::vmm:36] VMM starting, booting VMs...

-[ 0.380775 0:2 axvm::vm:273] Booting VM[1]

-[ 0.382631 0:2 axvisor::vmm:42] VM[1] boot success

-[ 0.387436 0:5 axvisor::vmm::vcpus:240] VM[1] Vcpu[0] waiting for running

-[ 0.390048 0:5 axvisor::vmm::vcpus:243] VM[1] Vcpu[0] running...

-

- d8888 .d88888b. .d8888b.

- d88888 d88P" "Y88b d88P Y88b

- d88P888 888 888 Y88b.

- d88P 888 888d888 .d8888b .d88b. 888 888 "Y888b.

- d88P 888 888P" d88P" d8P Y8b 888 888 "Y88b.

- d88P 888 888 888 88888888 888 888 "888

- d8888888888 888 Y88b. Y8b. Y88b. .d88P Y88b d88P

-d88P 888 888 "Y8888P "Y8888 "Y88888P" "Y8888P"

-

-arch = aarch64

-platform = aarch64-qemu-virt

-target = aarch64-unknown-none-softfloat

-build_mode = release

-log_level = warn

-smp = 1

-

-Hello, world!

-[ 0.416823 0:5 axvisor::vmm::vcpus:288] VM[1] run VCpu[0] SystemDown

-[ 0.419035 0:5 axhal::platform::aarch64_common::psci:98] Shutting down...

-```

-

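-# How to Contribute

-

-Feel free to fork this repository and submit a pull request.

-

-You can refer to these [discussions](https://github.com/arceos-hypervisor/axvisor/discussions) to gain deeper insight into the project's ideas and future development direction.

-

-## Development

-

-To contribute to AxVisor, you can follow these steps:

-

-1. Fork the repository on GitHub.

-2. Clone your forked repository to your local machine.

-3. Create a new branch for your feature or bug fix.

-4. Make your changes and commit them with clear messages.

-5. Push your changes to your forked repository.

-6. Open a pull request against the main branch of the original repository.

-

-To develop crates used by AxVisor, you can use the following commands to build and run the project: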

-

-```bash

-cargo install cargo-lpatch

-cargo lpatch -n deps_crate_name

-```

-

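-Then you can modify the code in the `crates/deps_crate_name` directory, and it will be automatically used by AxVisor.

-

-## Contributors

-

-This project exists thanks to the support of all its contributors.

+Feel free to fork this repository and submit pull requests. This project exists and grows thanks to the support of all its contributors.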