DeePMD on AMD GPUs #1387


Hi,


Yes, our offline package only supports CUDA, so you will have to install from source. Please refer to https://github.com/deepmodeling/deepmd-kit/blob/master/doc/install/install-from-source.md.

Basically, you need to set the environment variable `DP_VARIANT` to `rocm` to compile the deepmd-kit operators with ROCm support. If it is not set, the deepmd-kit operators are compiled for the CPU, although all TensorFlow operators still run on the GPU.

Please contact us if you have any questions about installing deepmd-kit on AMD GPUs.
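The build step described above amounts to running `pip install` from the deepmd-kit source checkout with `DP_VARIANT=rocm` in the environment (roughly `DP_VARIANT=rocm pip install .` in a shell). Below is a minimal Python sketch of that step, assuming you have already cloned deepmd-kit and have a working ROCm toolchain plus a ROCm build of TensorFlow. The helper names `rocm_build_env`, `pip_install_command` and `install_from_source` are hypothetical, not part of deepmd-kit.

```python
import os
import subprocess
import sys


def rocm_build_env(base_env=None):
    """Return a copy of the environment with DP_VARIANT=rocm set.

    DP_VARIANT selects the backend the deepmd-kit operators are compiled
    for; if it is left unset they are compiled for the CPU only.
    """
    # Copy so the caller's mapping (or os.environ) is never mutated.
    env = dict(os.environ if base_env is None else base_env)
    env["DP_VARIANT"] = "rocm"
    return env


def pip_install_command(source_dir="."):
    """Build the pip command that installs deepmd-kit from a source checkout."""
    # Use the current interpreter's pip so the package lands in this environment.
    return [sys.executable, "-m", "pip", "install", source_dir]


def install_from_source(source_dir="."):
    """Compile and install deepmd-kit with ROCm-enabled operators.

    Assumes a ROCm toolchain is available; raises CalledProcessError on failure.
    """
    subprocess.run(pip_install_command(source_dir), env=rocm_build_env(), check=True)
```

Usage: call `install_from_source("path/to/deepmd-kit")` with the path to your own clone (the path here is a placeholder). Passing a modified copy of the environment via `env=` keeps `DP_VARIANT` scoped to the build instead of changing the parent process.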

Beta Was this translation helpful? Give feedback.

-

|

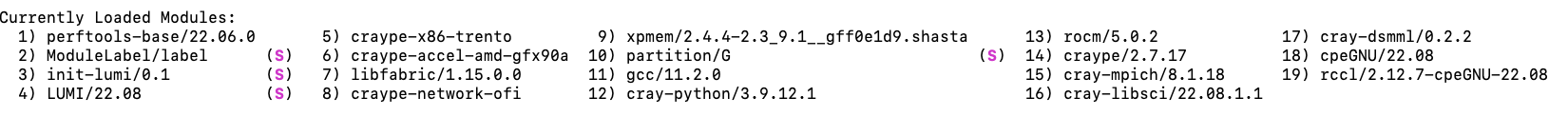

Hi, I am attempting to install deepmd-kit on the LUMI supercomputer, which has MI250X GPUs. I have gotten quite far with the Python interface but failed at the finish line when training. It seems the DeePMD community has run into the same problem: ROCm/ROCm#1623 (comment). Do you have any advice? Also, the LUMI team said it would be great if you provided a ROCm Docker container for their newer ROCm version, rocm/5.0.2.
