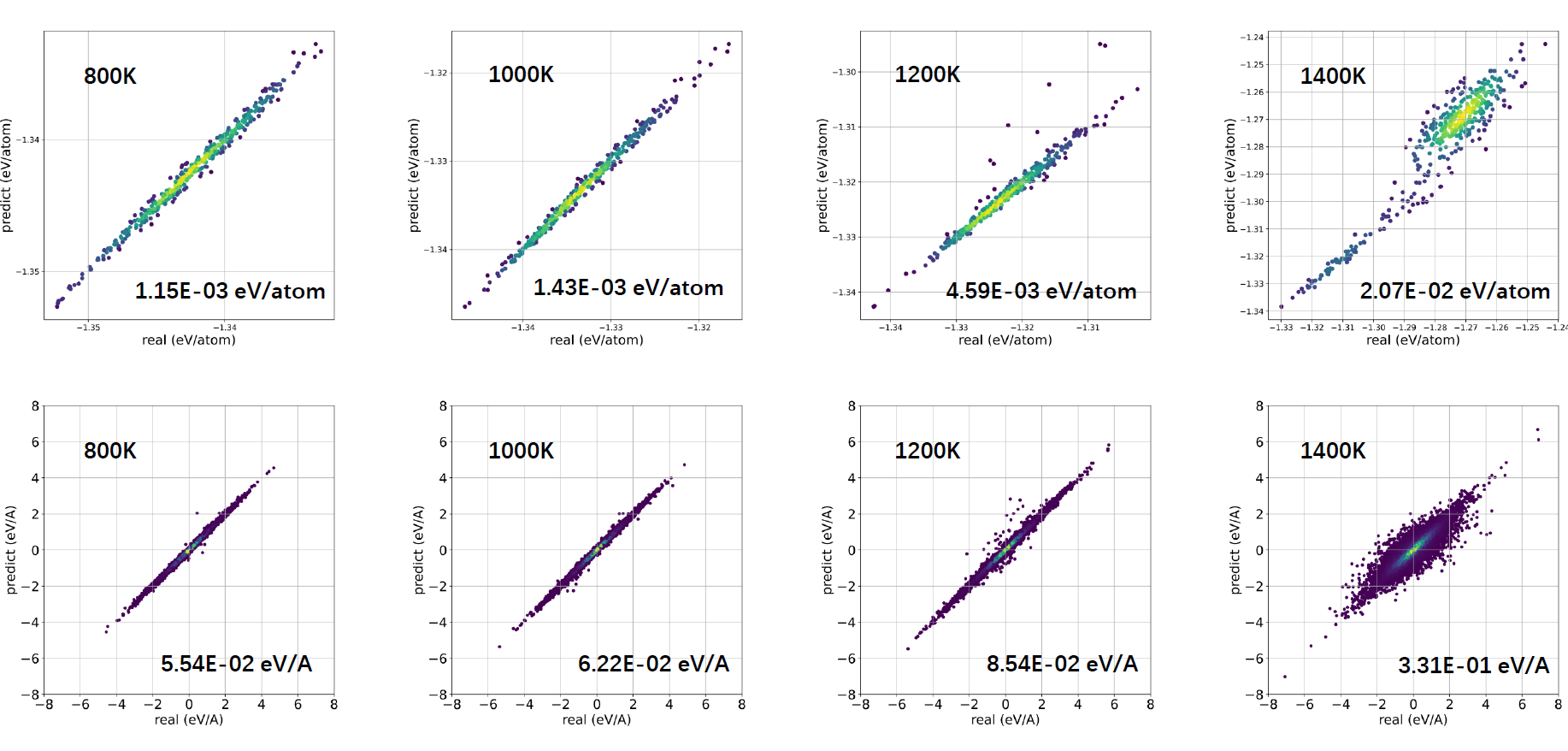

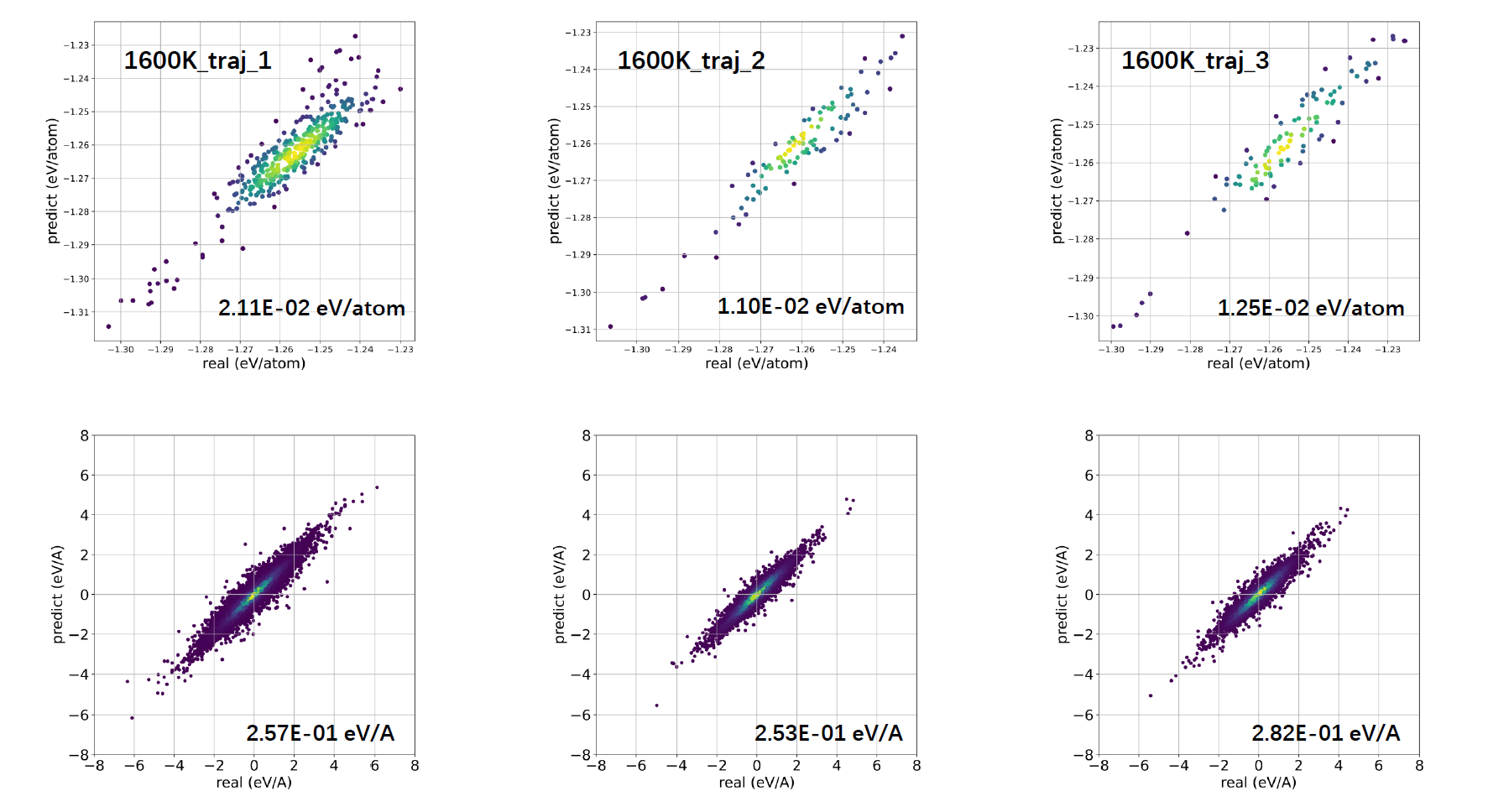

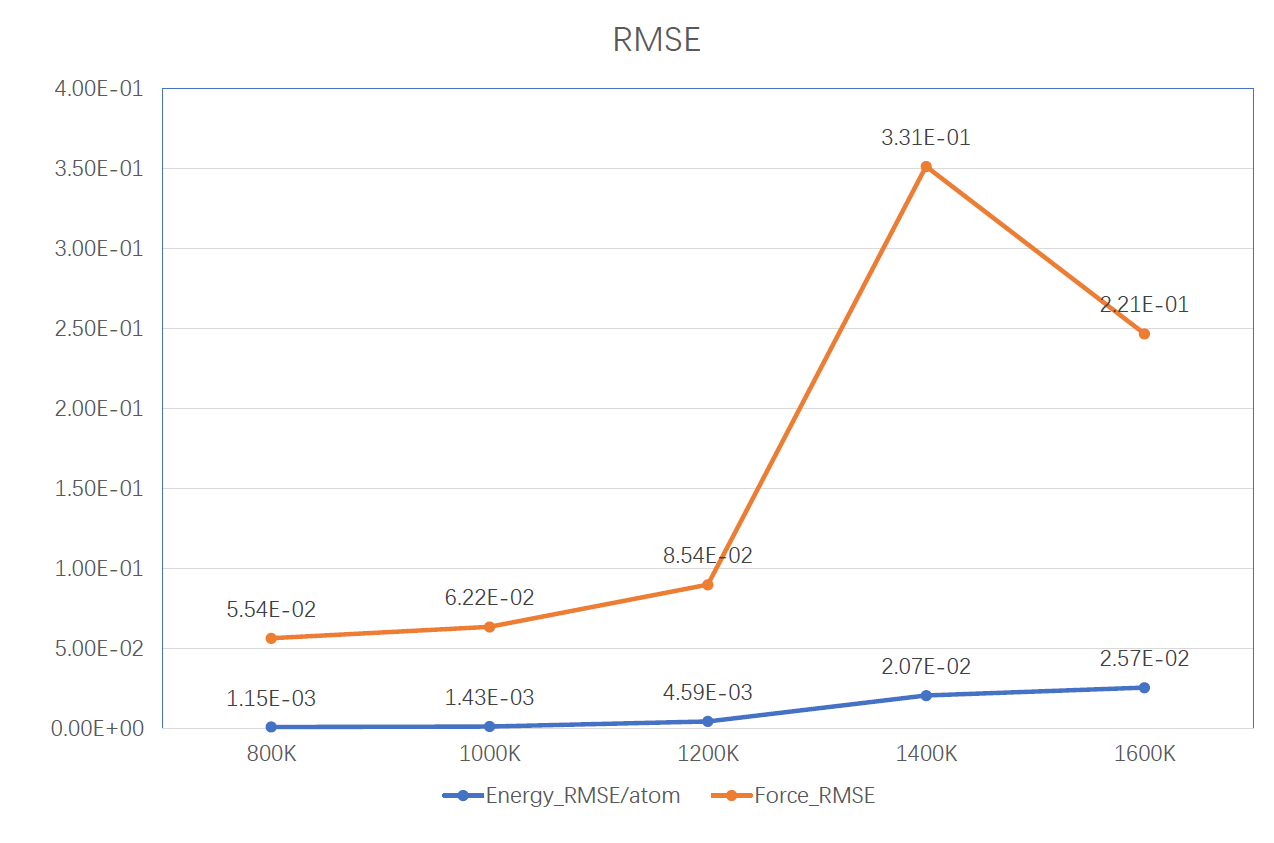

The RMSE of energy/atom and force increased with the temperature of AIMD #2252


Replies: 2 comments 4 replies


This behavior is similar to what I've seen with every MLIAP I've ever run. The configurational space sampled at 50 K is simply smaller than the configurational space sampled at 1600 K. As such, at 1600 K the potential is asked to predict the forces and energies of a more diverse set of structures, and will always have more difficulty doing so, whereas at 50 K the structures sampled in an MD run are going to be nearer to the ground state, and thus easier to predict. To an extent, you're just going to need a fairly large amount of data to allow for accurate interpolation at 1600 K.

If you want to remedy this, you'll need a more diverse training set. You could run additional high(er) temperature ab initio molecular dynamics and add the resulting structures to the training set, or you could run an active-learning-style algorithm, e.g. DP-GEN [1] (listed here because it's designed to interface with DeePMD-kit), to generate a more diverse training set. If you go down the path of rerunning AIMD, the literature generally seems to prefer a large number of short AIMD runs over a few long ones, though I'd recommend an active-learning approach, as you can generally fill in the gaps in the training set using less DFT time that way.

Also, out of curiosity, what is the size of the AIMD simulations (in number of atoms), and how many structures are you training on?

[1] Zhang, Yuzhi, et al. "DP-GEN: A concurrent learning platform for the generation of reliable deep learning based potential energy models." Computer Physics Communications 253 (2020): 107206.

I am not a dev of the project, and thus can't really comment deeply on the DeePMD-kit parameters used here. That said, in every sample training script I've seen for DeePMD-kit, the maximum number of training steps is on the order of 10^6 rather than the 2*10^4 used here, so it could be that the potential isn't fully trained yet. In my own case, I still see improvement in the validation forces up to around 250k training steps. A useful check would be to plot the training loss (in lcurve.out) against the training step and see if it's still decreasing when training finishes.

Edit: the cutoff seems fine. Generally VASP wants 1.3-1.5x the max cutoff for running AIMD/relaxation, and it appears you've satisfied that, assuming you're using standard pseudopotentials, though definitely double-check that. Depending on the size of the simulation, I might worry a tiny bit about the density of the k-point mesh, but I don't think it's the root of the problem here.
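The lcurve.out check suggested above can be sketched roughly as follows. This is only a minimal illustration, not part of DeePMD-kit: it assumes the file is whitespace-separated with a `#`-prefixed header line naming the columns, and the column names in `SAMPLE` (such as `rmse_f_trn`) are assumptions, so check the header of your own lcurve.out. The helper names `parse_lcurve` and `still_decreasing`, the window size, and the tolerance are all hypothetical choices.

```python
from statistics import mean

# Made-up sample in an lcurve.out-like layout (column names are assumed).
SAMPLE = """#  step  rmse_trn  rmse_e_trn  rmse_f_trn  lr
0      10.0  1.00  1.00  1.0e-03
1000    8.0  0.80  0.80  9.0e-04
2000    6.0  0.60  0.60  8.0e-04
3000    5.0  0.50  0.50  7.0e-04
4000    4.0  0.40  0.40  6.0e-04
5000    3.5  0.35  0.35  5.0e-04
6000    3.0  0.30  0.30  4.0e-04
7000    2.8  0.28  0.28  3.0e-04
"""


def parse_lcurve(text):
    """Parse lcurve.out-style text into {column_name: [float, ...]}."""
    names = None
    columns = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#"):
            # The first comment line is treated as the header;
            # any later comment lines are ignored.
            if names is None:
                names = line.lstrip("#").split()
                columns = {name: [] for name in names}
            continue
        if names is None:
            raise ValueError("no '#' header line found before the data")
        values = line.split()
        if len(values) != len(names):
            continue  # skip rows that do not match the header
        for name, value in zip(names, values):
            columns[name].append(float(value))
    return columns


def still_decreasing(values, window=5, rel_tol=0.02):
    """Return True if the mean of the last `window` values is more than
    `rel_tol` (relative) below the mean of the `window` values before them."""
    if len(values) < 2 * window:
        raise ValueError("need at least 2 * window data points")
    previous = mean(values[-2 * window:-window])
    latest = mean(values[-window:])
    return latest < previous * (1.0 - rel_tol)
```

For a real run, something like `cols = parse_lcurve(open("lcurve.out").read())` followed by `still_decreasing(cols["rmse_f_trn"])` would give a quick yes/no answer; if it returns `True` at the end of training, the step count is probably too low. Plotting the same column against `cols["step"]` on a log scale is still worth doing, since a windowed average can hide noise or a late plateau.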


Please read the FAQ entry "Why does a model have low precision".

