
Answered by tuoping on May 13, 2021


I can't say for sure what your problem is, but one thing I notice is that your learning rate decays too aggressively. With stop_batch = 150000 and decay_steps = 5000, the learning rate is decayed only 150000 / 5000 = 30 times in total on its way from 10^-3 to about 10^-8 (stop_lr = 3.51e-8). Each decay therefore multiplies it by decay_rate = (3.51e-8 / 10^-3)^(1/30) ≈ 0.7. The decay_rate should preferably be ≳ 0.95.
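The arithmetic above can be checked with a short Python sketch. It assumes (our understanding of DeePMD-kit's `exp` schedule, not a copy of its code) a staircase decay `lr(step) = start_lr * decay_rate ** (step // decay_steps)`, with decay_rate chosen so that the learning rate reaches stop_lr at stop_batch. The helper names are hypothetical; the numbers come from the input.json quoted in this thread.

```python
import math


def exp_decay_rate(start_lr: float, stop_lr: float,
                   stop_batch: int, decay_steps: int) -> float:
    """Per-decay multiplier that takes lr from start_lr to stop_lr by stop_batch."""
    n_decays = stop_batch / decay_steps  # 150000 / 5000 = 30 decays
    return (stop_lr / start_lr) ** (1.0 / n_decays)


def lr_at(step: int, start_lr: float, decay_rate: float, decay_steps: int) -> float:
    """Assumed staircase schedule: lr is multiplied by decay_rate every decay_steps."""
    return start_lr * decay_rate ** (step // decay_steps)


def max_decay_steps(start_lr: float, stop_lr: float,
                    stop_batch: int, target_rate: float) -> int:
    """Largest decay_steps whose per-decay multiplier is still >= target_rate."""
    # (stop_lr/start_lr) ** (decay_steps/stop_batch) >= target_rate
    #   <=> decay_steps <= stop_batch * log(target_rate) / log(stop_lr/start_lr)
    return math.floor(stop_batch * math.log(target_rate) / math.log(stop_lr / start_lr))


# Values from the quoted input.json
start_lr, stop_lr, stop_batch, decay_steps = 1e-3, 3.51e-8, 150000, 5000

rate = exp_decay_rate(start_lr, stop_lr, stop_batch, decay_steps)
print(f"decay_rate with decay_steps={decay_steps}: {rate:.3f}")
for step in (0, 5000, 10000, 50000, 150000):
    print(f"step {step:>6}: lr = {lr_at(step, start_lr, rate, decay_steps):.2e}")

# How small decay_steps must be (same start_lr/stop_lr) for decay_rate >= 0.95
ds = max_decay_steps(start_lr, stop_lr, stop_batch, 0.95)
print(f"decay_steps <= {ds} gives decay_rate "
      f"{exp_decay_rate(start_lr, stop_lr, stop_batch, ds):.4f}")
```

With the quoted settings this gives decay_rate ≈ 0.71. Keeping start_lr and stop_lr fixed, shrinking decay_steps to 750 (200 decays over 150000 steps) brings the per-decay multiplier up to about 0.95.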


Answer selected by tuoping


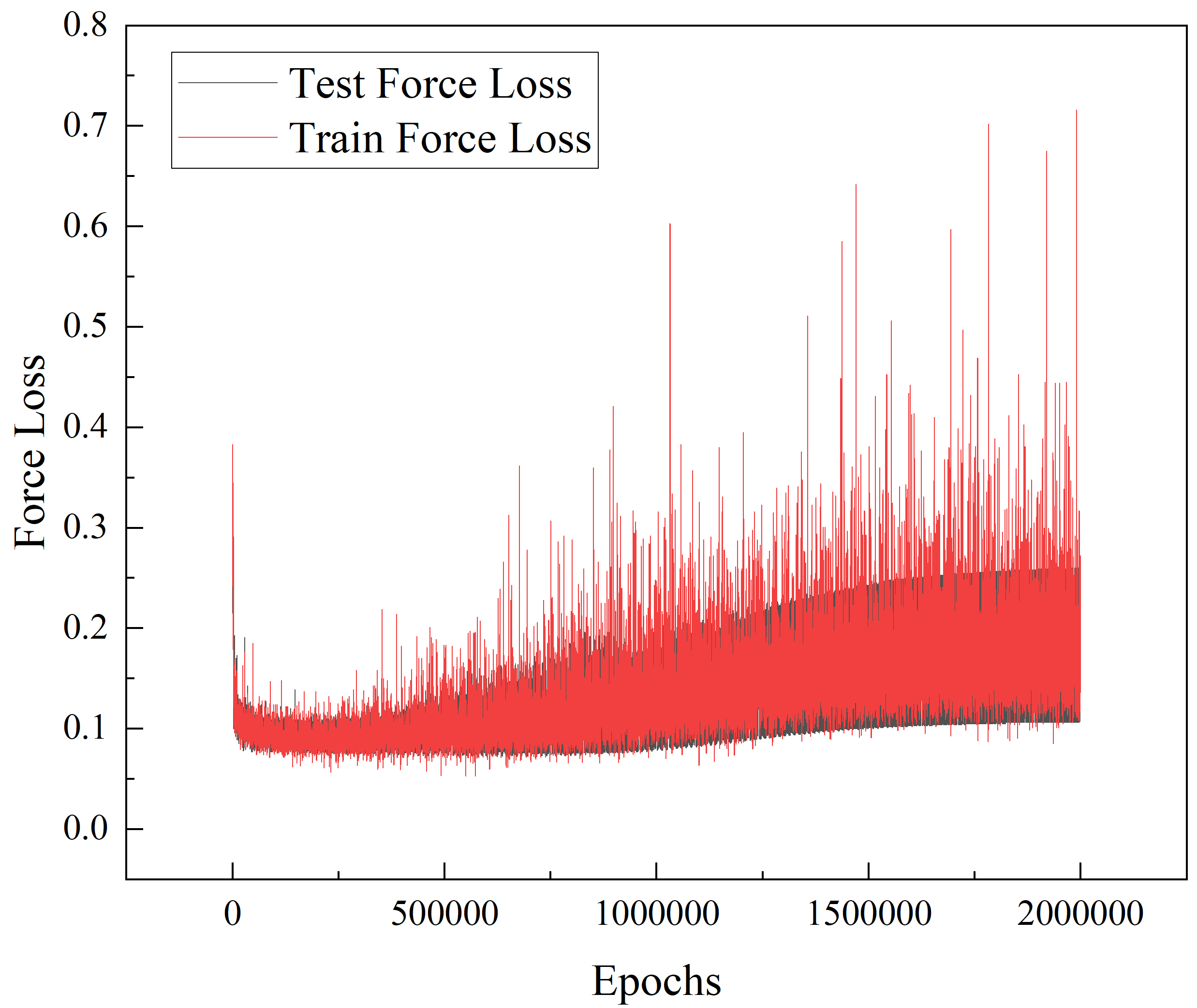

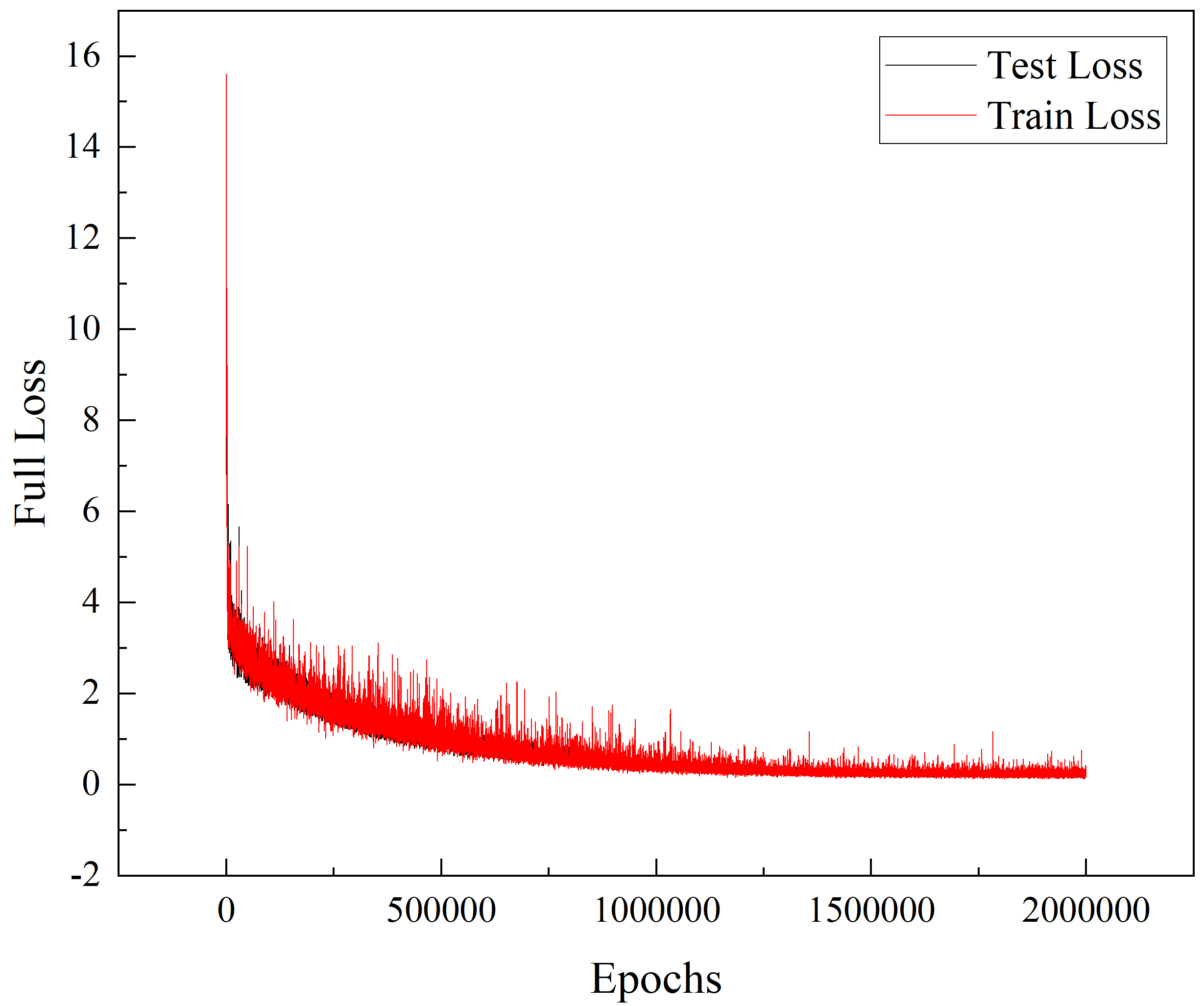

It seems that the force loss is going up, which might suggest that the energy term is contaminating the training process. You could test this by setting the energy prefactors (start_pref_e and limit_pref_e) directly to 0; this will show how well you can fit the forces alone.
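A minimal Python sketch of the suggested test. It assumes, per our reading of the DeePMD-kit documentation, that each loss prefactor moves linearly with the learning rate, `pref = limit_pref * (1 - lr/start_lr) + start_pref * lr/start_lr`; the function names are hypothetical. It prints how the energy and force weights from the quoted config evolve as the learning rate falls, then builds a force-only copy of the "loss" section.

```python
import copy

# The "loss" section from the input.json quoted in this thread
loss = {
    "start_pref_e": 0.05, "limit_pref_e": 1,
    "start_pref_f": 1000, "limit_pref_f": 100,
    "start_pref_v": 0, "limit_pref_v": 0,
}


def prefactor(start_pref: float, limit_pref: float, lr: float, start_lr: float) -> float:
    """Assumed rule: prefactor interpolates linearly in lr / start_lr."""
    ratio = lr / start_lr
    return limit_pref * (1.0 - ratio) + start_pref * ratio


def force_only(loss_section: dict) -> dict:
    """Return a copy of the loss section with both energy prefactors set to 0."""
    new = copy.deepcopy(loss_section)
    new["start_pref_e"] = 0
    new["limit_pref_e"] = 0
    return new


start_lr = 1e-3
for lr in (1e-3, 5e-4, 1e-4, 3.51e-8):
    pe = prefactor(loss["start_pref_e"], loss["limit_pref_e"], lr, start_lr)
    pf = prefactor(loss["start_pref_f"], loss["limit_pref_f"], lr, start_lr)
    print(f"lr={lr:.2e}  pref_e={pe:.3f}  pref_f={pf:.1f}")

print(force_only(loss))  # the original dict is left unchanged
```

In input.json itself, the test amounts to setting "start_pref_e": 0 and "limit_pref_e": 0 in the "loss" section and leaving the force prefactors as they are.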

For the batch size, you may choose to use "auto".
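To see what "auto" would change here, the sketch below assumes the rule described in the DeePMD-kit documentation as we understand it: "auto" picks the smallest batch size for which batch_size × natoms ≥ 32. It also assumes, hypothetically, that the number in each system directory name (Au16 to Au40) is the number of atoms per frame; the helper name is made up for illustration.

```python
# System directories from the quoted input.json. ASSUMPTION (hypothetical):
# the number in each name is the number of atoms per frame in that system.
systems = ["Au16", "Au17", "Au18", "Au19", "Au20", "Au21",
           "Au22", "Au23", "Au24", "Au25", "Au40"]


def auto_batch_size(natoms: int, min_atoms: int = 32) -> int:
    """Smallest batch size with batch_size * natoms >= min_atoms (ceiling division)."""
    return -(-min_atoms // natoms)


for name in systems:
    natoms = int(name[2:])  # hypothetical: atom count parsed from the directory name
    bs = auto_batch_size(natoms)
    print(f"{name}: batch_size=1 -> {natoms} atoms/batch; "
          f"auto -> batch_size={bs}, {bs * natoms} atoms/batch")
```

Under these assumptions, batch_size = 1 gives only 16 to 25 atoms per batch for every system except Au40, while "auto" would use a batch size of 2 for Au16 to Au25 and 1 for Au40.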

On Wed, May 12, 2021 at 1:07 PM caolz wrote:

I am training a model using the "se_a" descriptor type, but the force loss does not converge. What do you advise for better convergence?

I attached plots of the total and force losses from "lcurve.out". I would be thankful if you could help me with this issue.

![Graph1](https://user-images.githubusercontent.com/49866647/117921080-a7c68f80-b322-11eb-8561-1f1a37fc2828.jpg)

![Graph2](https://user-images.githubusercontent.com/49866647/117921092-ab5a1680-b322-11eb-9a1e-0fbfaa821600.jpg)

Below is my input.json file for DeePMD-kit:

```json
{
    "_comment": " model parameters",
    "model": {
        "type_map": ["Au"],
        "descriptor": {
            "type": "se_a",
            "sel": [550],
            "rcut_smth": 5.80,
            "rcut": 6.00,
            "neuron": [25, 50, 100],
            "resnet_dt": false,
            "axis_neuron": 16,
            "seed": 1,
            "_comment": " that's all"
        },
        "fitting_net": {
            "neuron": [240, 240, 240],
            "resnet_dt": true,
            "seed": 1,
            "_comment": " that's all"
        },
        "_comment": " that's all"
    },

    "learning_rate": {
        "type": "exp",
        "decay_steps": 5000,
        "start_lr": 0.001,
        "stop_lr": 3.51e-8,
        "_comment": "that's all"
    },

    "loss": {
        "start_pref_e": 0.05,
        "limit_pref_e": 1,
        "start_pref_f": 1000,
        "limit_pref_f": 100,
        "start_pref_v": 0,
        "limit_pref_v": 0,
        "_comment": " that's all"
    },

    "_comment": " traing controls",
    "training": {
        "systems": ["../1-data/Au16", "../1-data/Au17", "../1-data/Au18",
                    "../1-data/Au19", "../1-data/Au20", "../1-data/Au21",
                    "../1-data/Au22", "../1-data/Au23", "../1-data/Au24",
                    "../1-data/Au25", "../1-data/Au40"],
        "set_prefix": "set",
        "stop_batch": 150000,
        "batch_size": 1,
        "seed": 1,

        "_comment": " display and restart",
        "_comment": " frequencies counted in batch",
        "disp_file": "lcurve.out",
        "disp_freq": 100,
        "numb_test": 10,
        "save_freq": 1000,
        "save_ckpt": "model.ckpt",
        "load_ckpt": "model.ckpt",
        "disp_training": true,
        "time_training": true,
        "profiling": false,
        "profiling_file": "timeline.json",
        "_comment": "that's all"
    },

    "_comment": "that's all"
}
```


In addition, the recommended number of atoms per batch is at least 32.