BEIR evaluation score using Pipeline.eval_beir is lower than the one from the BEIR documentation #3649


huynguyengl99 asked this question in Questions



Hey @huynguyengl99, my guess is that you need to add the `document_store` to your `query_pipeline`.


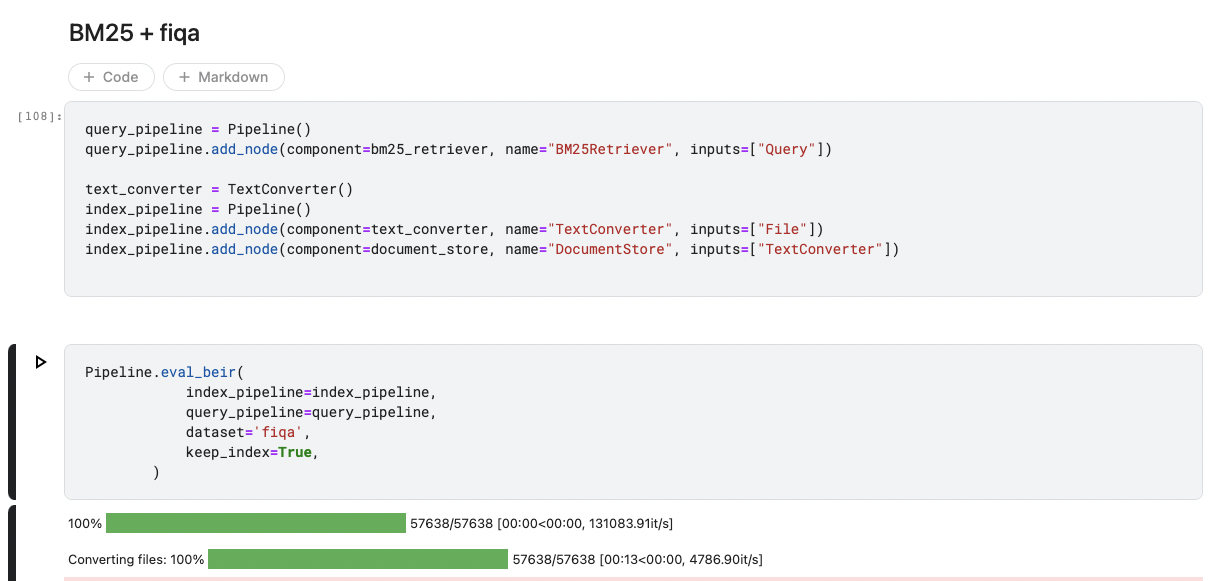

Hi all (sorry, I'm a newbie). I tested `BM25Retriever` and evaluated its pipeline with `Pipeline.eval_beir`, but the final score is lower than the one my friend got by following the BEIR documentation. My code is below. Did I miss a configuration option or make a coding mistake? Thanks for reading, and I'd appreciate any help explaining the difference.

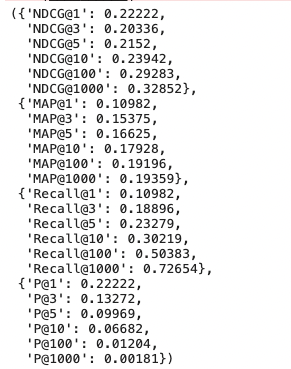

I also tested on the `fiqa` dataset. Here are my code and evaluation results:

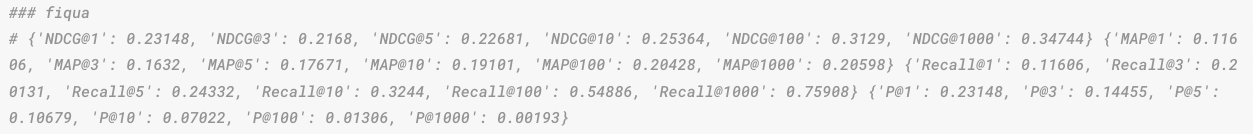

Here is my friend's score, obtained by following the BEIR guide:
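One way to tell whether the gap comes from retrieval or from metric computation is to score both runs with the same function. The sketch below is a minimal reimplementation of NDCG@k, assuming linear gain and a log2(rank + 1) discount, which is my understanding of trec_eval's `ndcg_cut`. It is not the code BEIR or Haystack actually runs. `ndcg_at_k`, `qrels`, and `run` are hypothetical names, and the dict layouts (query id to {doc id: relevance} and query id to {doc id: score}) are assumed to mirror BEIR's qrels and results format. Tie-breaking on equal scores may differ from trec_eval.

```python
import math
from typing import Dict


def ndcg_at_k(
    qrels: Dict[str, Dict[str, int]],
    run: Dict[str, Dict[str, float]],
    k: int = 10,
) -> float:
    """Mean NDCG@k over the queries that appear in qrels (hypothetical helper).

    qrels: query_id -> {doc_id: graded relevance}
    run:   query_id -> {doc_id: retrieval score}
    """
    per_query = []
    for qid, judged in qrels.items():
        # Rank this query's retrieved docs by score, highest first, keep top k.
        # A query missing from the run contributes an NDCG of 0.
        ranked = sorted(
            run.get(qid, {}).items(), key=lambda kv: kv[1], reverse=True
        )[:k]
        # DCG: linear gain divided by log2(rank + 1), with ranks starting at 1.
        dcg = sum(
            judged.get(doc_id, 0) / math.log2(rank + 1)
            for rank, (doc_id, _) in enumerate(ranked, start=1)
        )
        # Ideal DCG: the positive relevance grades sorted best-first.
        ideal = sorted((rel for rel in judged.values() if rel > 0), reverse=True)[:k]
        idcg = sum(rel / math.log2(rank + 1) for rank, rel in enumerate(ideal, start=1))
        per_query.append(dcg / idcg if idcg > 0 else 0.0)
    # Average over all judged queries.
    return sum(per_query) / len(per_query) if per_query else 0.0


if __name__ == "__main__":
    # Toy example: one query, two relevant docs, one irrelevant doc ranked second.
    qrels = {"q1": {"d1": 1, "d3": 1}}
    run = {"q1": {"d1": 0.9, "d2": 0.8, "d3": 0.1}}
    print(round(ndcg_at_k(qrels, run, k=10), 4))  # prints 0.9197
```

If you can export the retrieved document ids and scores from both setups into these dicts, running them through one function separates "the retriever returned different documents" from "the two tools compute NDCG differently".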
