A question about faiss acceleration #4752


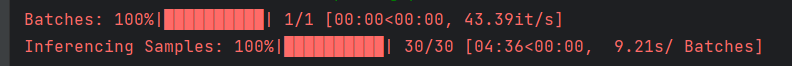

I am using this tutorial: Tutorial: Better Retrieval with Embedded Retrieval When I search for problems on a PDF document, the following step usually takes several minutes: |


Answered by mayankjobanputra on Apr 26, 2023


By the way, my code is identical to the code in the Better Retrieval with Embedding Retrieval tutorial.


@mc112611 Are you using a GPU for inference? That should speed things up considerably. There are other techniques as well, such as using a smaller Retriever model, a different FAISS index type, or a smaller Reader model, but each of these choices may affect the quality of your results.