diff --git a/fern/changelog/2025-01-07.mdx b/fern/changelog/2025-01-07.mdx

new file mode 100644

index 000000000..f59ed3fad

--- /dev/null

+++ b/fern/changelog/2025-01-07.mdx

@@ -0,0 +1,9 @@

+# New Gemini 2.0 Models, Realtime Updates, and Configuration Options

+

+1. **New Gemini 2.0 Models**: You can now use two new models in `Assistant.model[model='GoogleModel']`: `gemini-2.0-flash-exp` and `gemini-2.0-flash-realtime-exp`, which give you access to the latest real-time capabilities and experimental features.

+

+2. **Support for Real-time Configuration with Gemini 2.0 Models**: Developers can now fine-tune real-time settings for the Gemini 2.0 Multimodal Live API using `Assistant.model[model='GoogleModel'].realtimeConfig`, enabling more control over text generation and speech output.

+

+3. **Customize Speech Output for Gemini Multimodal Live API**: You can now customize the assistant's voice using the `speechConfig` and `voiceConfig` properties, choosing from prebuilt voices such as `"Puck"` and `"Charon"`.

+

+4. **Advanced Gemini Text Generation Parameters**: You can also tune advanced hyperparameters such as `topK`, `topP`, `presencePenalty`, and `frequencyPenalty` to control how the assistant generates responses, leading to more natural and dynamic conversations.

\ No newline at end of file
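
A minimal sketch of a model payload that combines the Gemini 2.0 options above. The names `provider`, `model`, `realtimeConfig`, `speechConfig`, `voiceConfig`, `topK`, `topP`, `presencePenalty`, and `frequencyPenalty` come from this changelog. Their exact nesting is an assumption, in particular `prebuiltVoiceConfig.voiceName`, which is modeled on Google's Live API. Check the `GoogleModel` schema in the API reference before relying on it.

```javascript
// Sketch of a Gemini 2.0 realtime model payload. The nesting under
// realtimeConfig (especially prebuiltVoiceConfig.voiceName) is an assumption
// modeled on Google's Multimodal Live API, not copied from the Vapi schema.
function buildGeminiRealtimeModel({ voiceName = "Puck", topK = 40, topP = 0.95 } = {}) {
  return {
    provider: "google",
    model: "gemini-2.0-flash-realtime-exp",
    realtimeConfig: {
      // Illustrative values only; tune them for your use case.
      topK,
      topP,
      presencePenalty: 0,
      frequencyPenalty: 0,
      speechConfig: {
        voiceConfig: { prebuiltVoiceConfig: { voiceName } },
      },
    },
  };
}

console.log(JSON.stringify(buildGeminiRealtimeModel({ voiceName: "Charon" }), null, 2));
```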

diff --git a/fern/changelog/2025-01-11.mdx b/fern/changelog/2025-01-11.mdx

new file mode 100644

index 000000000..ace6a6ba2

--- /dev/null

+++ b/fern/changelog/2025-01-11.mdx

@@ -0,0 +1,98 @@

+1. **Integration of Smallest AI Voices**: Assistants can now use voices from Smallest AI by setting `Assistant.voice[provider="smallest-ai"]`, letting you choose from 25 preset voices and customize voice attributes.

+

+2. **Support for DeepSeek Language Models**: You can now configure assistants to use DeepSeek LLMs by setting `Assistant.model[provider="deep-seek"]` and `Assistant.model[model="deepseek-chat"]`. To use your own DeepSeek credentials, pass a payload like the following:

+

+```json
+{
+  "credentials": [
+    {
+      "provider": "deep-seek",
+      "apiKey": "YOUR_API_KEY",
+      "name": "YOUR_CREDENTIAL_NAME"
+    }
+  ],
+  "model": {
+    "provider": "deep-seek",
+    "model": "deepseek-chat"
+  }
+}
+```

+

+3. **Additional Call Ended Reasons for DeepSeek and Cerebras**: New `Call.endedReason` values cover DeepSeek- and Cerebras-specific call termination scenarios, so you can handle these failures more precisely.

+

+4. **New API Endpoint to Delete Logs**: A new `DELETE /logs` endpoint has been added, enabling developers to programmatically delete logs and manage log data.

+

+5. **Enhanced Call Transfer Options with SIP Verb**: You can now set `sipVerb` on the `TransferPlan` in `Assistant.model.tools[type=transferCall].destinations[type=sip].transferPlan` to choose which SIP verb (`refer` or `bye`) is used during call transfers, giving you finer control over call flow.

+

+6. **Azure Credentials and Blob Storage Support**: You can now configure Azure credentials for the blob storage service with `AzureCredential.service[service=blob_storage]`, and use an `AzureBlobStorageBucketPlan` with `AzureCredential.bucketPlan` to store call artifacts directly in Azure Blob Storage.

+

+7. **Authentication Support for Azure OpenAI API Management with the `Ocp-Apim-Subscription-Key` Header**: When configuring Azure OpenAI credentials, you can now set `AzureOpenAICredential.ocpApimSubscriptionKey` to authenticate with Azure's OpenAI services through the API Management proxy in place of an API key.

+

+8. **New CloudflareR2BucketPlan**: You can now use `CloudflareR2BucketPlan` to configure storage with Cloudflare R2 buckets, so you can store call artifacts directly in R2.

+

+9. **Enhanced Credential Support**: It is now simpler to configure provider credentials in `Assistant.credentials`. You can also override credentials with `AssistantOverride.credentials`, enabling granular credential management per assistant. The SDKs now offer type safety and autocompletion for every supported credential type, making credentials easier to configure and maintain for the following providers:

+

+- S3Credential

+- GcpCredential

+- XAiCredential

+- GroqCredential

+- LmntCredential

+- MakeCredential

+- AzureCredential

+- TavusCredential

+- GladiaCredential

+- GoogleCredential

+- OpenAICredential

+- PlayHTCredential

+- RimeAICredential

+- RunpodCredential

+- TrieveCredential

+- TwilioCredential

+- VonageCredential

+- WebhookCredential

+- AnyscaleCredential

+- CartesiaCredential

+- DeepgramCredential

+- LangfuseCredential

+- CerebrasCredential

+- DeepSeekCredential

+- AnthropicCredential

+- CustomLLMCredential

+- DeepInfraCredential

+- SmallestAICredential

+- AssemblyAICredential

+- CloudflareCredential

+- ElevenLabsCredential

+- OpenRouterCredential

+- TogetherAICredential

+- AzureOpenAICredential

+- ByoSipTrunkCredential

+- GoHighLevelCredential

+- InflectionAICredential

+- PerplexityAICredential

+

+10. **Specify Type When Updating Tools, Blocks, Phone Numbers, and Knowledge Bases**: You should now specify the type in the request body when [updating tools](https://api.vapi.ai/api#/Tools/ToolController_update), [blocks](https://api.vapi.ai/api#/Blocks/BlockController_update), [phone numbers](https://api.vapi.ai/api#/Phone%20Numbers/PhoneNumberController_update), or [knowledge bases](https://api.vapi.ai/api#/Knowledge%20Base/KnowledgeBaseController_update) using the appropriate payload for each type. Specifying the type now provides type safety and autocompletion in the SDKs. Refer to [the schemas](https://api.vapi.ai/api) to see the expected payload for the following types:

+

+- UpdateBashToolDTO

+- UpdateComputerToolDTO

+- UpdateDtmfToolDTO

+- UpdateEndCallToolDTO

+- UpdateFunctionToolDTO

+- UpdateGhlToolDTO

+- UpdateMakeToolDTO

+- UpdateOutputToolDTO

+- UpdateTextEditorToolDTO

+- UpdateTransferCallToolDTO

+- BashToolWithToolCall

+- ComputerToolWithToolCall

+- TextEditorToolWithToolCall

+- UpdateToolCallBlockDTO

+- UpdateWorkflowBlockDTO

+- UpdateConversationBlockDTO

+- UpdateByoPhoneNumberDTO

+- UpdateTwilioPhoneNumberDTO

+- UpdateVonagePhoneNumberDTO

+- UpdateVapiPhoneNumberDTO

+- UpdateCustomKnowledgeBaseDTO

+- UpdateTrieveKnowledgeBaseDTO
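
To illustrate item 10, here is a hedged sketch that builds (but does not send) a `PATCH` request for a tool update that carries the `type` discriminator. Several details are assumptions: the base URL `https://api.vapi.ai`, the `/tool/{id}` path, bearer-token auth, the `"function"` type value, and the `function.name` body field. The authoritative body fields for each type are defined by the `Update*DTO` schemas listed above.

```javascript
// Hypothetical helper: builds a fetch-style request for updating a tool.
function buildToolUpdateRequest(toolId, type, changes, apiKey) {
  if (!type) {
    // Item 10: the request body should name the tool type explicitly.
    throw new Error("Update payloads must include a `type`.");
  }
  return {
    url: `https://api.vapi.ai/tool/${encodeURIComponent(toolId)}`, // assumed path
    init: {
      method: "PATCH",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      // `type` is placed first, then the type-specific changes.
      body: JSON.stringify({ type, ...changes }),
    },
  };
}

// "tool_123" and the function name are placeholders.
const req = buildToolUpdateRequest("tool_123", "function", { function: { name: "lookup_order" } }, "YOUR_API_KEY");
console.log(req.init.body); // {"type":"function","function":{"name":"lookup_order"}}
// To send it: await fetch(req.url, req.init);
```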

+
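
For item 5, a minimal sketch of a `transferCall` tool whose SIP destination sets `transferPlan.sipVerb`. Only `sipVerb` and its values `refer` and `bye` come from this changelog. The `sipUri` field name and the example URI are assumptions.

```javascript
// Values listed in this changelog for TransferPlan.sipVerb.
const SIP_VERBS = ["refer", "bye"];

// Builds a transferCall tool with a single SIP destination.
function buildSipTransferTool(sipUri, sipVerb = "refer") {
  if (!SIP_VERBS.includes(sipVerb)) {
    throw new Error(`sipVerb must be one of: ${SIP_VERBS.join(", ")}`);
  }
  return {
    type: "transferCall",
    destinations: [
      {
        type: "sip",
        sipUri, // assumed field name for the SIP destination address
        transferPlan: { sipVerb },
      },
    ],
  };
}

const tool = buildSipTransferTool("sip:support@example.com", "bye");
console.log(JSON.stringify(tool));
```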

diff --git a/fern/changelog/2025-01-14.mdx b/fern/changelog/2025-01-14.mdx

new file mode 100644

index 000000000..6f03b2ef8

--- /dev/null

+++ b/fern/changelog/2025-01-14.mdx

@@ -0,0 +1 @@

+**End Call Message Support in ClientInboundMessage**: You can now end a call programmatically by sending a `ClientInboundMessage` whose `type` property is set to `"end-call"`.

\ No newline at end of file
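
A minimal sketch of sending the `end-call` message. Only the `type: "end-call"` value comes from this changelog. How the message reaches the call depends on your integration, so the transport below is an injected, hypothetical `send` function rather than a specific SDK call.

```javascript
// Builds the ClientInboundMessage that asks Vapi to end the call.
function endCallMessage() {
  return { type: "end-call" };
}

// `send` is whatever transport your client uses (hypothetical here).
async function endCall(send) {
  const message = endCallMessage();
  await send(message);
  return message;
}

// Example with a stand-in transport that just records what was sent.
const sent = [];
endCall(async (msg) => sent.push(msg)).then(() => {
  console.log(sent); // [ { type: 'end-call' } ]
});
```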

diff --git a/fern/changelog/2025-01-15.mdx b/fern/changelog/2025-01-15.mdx

new file mode 100644

index 000000000..017124e6b

--- /dev/null

+++ b/fern/changelog/2025-01-15.mdx

@@ -0,0 +1,5 @@

+1. **Updated Log Endpoints:**

+Both the `GET /logs` and `DELETE /logs` endpoints have been simplified by removing the `orgId` parameter.

+

+2. **Updated Log Schema:**

+The following fields in the Log schema are no longer required: `requestDurationSeconds`, `requestStartedAt`, `requestFinishedAt`, `requestBody`, `requestHttpMethod`, `requestUrl`, `requestPath`, and `responseHttpCode`.

\ No newline at end of file
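
When migrating existing log queries, a small helper can drop the removed `orgId` parameter and read the now-optional request fields defensively. The field names come from this changelog. The `https://api.vapi.ai/logs` base URL and the `page` query parameter in the usage example are assumptions.

```javascript
// Builds a GET /logs URL, dropping the `orgId` parameter the endpoint no longer accepts.
function buildLogsUrl(params = {}) {
  const url = new URL("https://api.vapi.ai/logs"); // assumed base URL
  for (const [key, value] of Object.entries(params)) {
    if (key === "orgId" || value === undefined) continue;
    url.searchParams.set(key, String(value));
  }
  return url.toString();
}

// Summarizes a log entry without assuming the now-optional request fields exist.
function summarizeLog(log) {
  const method = log.requestHttpMethod ?? "?";
  const path = log.requestPath ?? "(no path)";
  const status = log.responseHttpCode ?? "n/a";
  const duration =
    typeof log.requestDurationSeconds === "number"
      ? `${log.requestDurationSeconds.toFixed(2)}s`
      : "unknown duration";
  return `${method} ${path} -> ${status} (${duration})`;
}

console.log(buildLogsUrl({ orgId: "org_1", page: 2 })); // https://api.vapi.ai/logs?page=2
console.log(summarizeLog({ requestHttpMethod: "GET", requestPath: "/call" })); // GET /call -> n/a (unknown duration)
```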

diff --git a/fern/changelog/2025-01-20.mdx b/fern/changelog/2025-01-20.mdx

new file mode 100644

index 000000000..3bd56344b

--- /dev/null

+++ b/fern/changelog/2025-01-20.mdx

@@ -0,0 +1,16 @@

+# Workflow Steps, Trieve Knowledge Base Updates, and Concurrent Calls Tracking

+

+1. **Use Workflow Blocks to Simplify Block Steps:** You can now compose complex Block steps from smaller, reusable [Workflow blocks](https://api.vapi.ai/api#:~:text=Workflow) that manage conversations and take actions in external systems.

+

+In addition to the usual operations inside [Block steps](https://docs.vapi.ai/blocks/steps), you can now [Say messages](https://api.vapi.ai/api#:~:text=Say), [Gather information](https://api.vapi.ai/api#:~:text=Gather), or connect to other workflow [Edges](https://api.vapi.ai/api#:~:text=Edge) based on an [LLM evaluating a condition](https://api.vapi.ai/api#:~:text=SemanticEdgeCondition) or a [logic-based condition](https://api.vapi.ai/api#:~:text=ProgrammaticEdgeCondition). Workflows can be used through `Assistant.model["VapiModel"]` to create custom call workflows.

+

+2. **Trieve Knowledge Base Integration Improvements:** You should now configure [Trieve knowledge bases](https://api.vapi.ai/api#:~:text=TrieveKnowledgeBase) using the new `createPlan` and `searchPlan` fields instead of specifying raw vector plans directly. The new plans let you create or import Trieve plans directly and specify the type of search more precisely than before.

+

+3. **Updated Concurrency Tracking:** Your subscriptions now track active calls with `concurrencyCounter`, replacing `concurrencyLimit`. This does not affect how you reserve concurrent calls through [billing add-ons](https://dashboard.vapi.ai/org/billing/add-ons).

+

+

+

+


+4. **Define Allowed Values with `type` using `JsonSchema`:** You can restrict model outputs to specific values inside Blocks or tool calls using the new `type` property in [JsonSchema](https://api.vapi.ai/api#:~:text=JsonSchema). Supported types include `string`, `number`, `integer`, `boolean`, `array` (which also needs `items` to be defined), and `object` (which also needs `properties` to be defined).

+
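
The rules in item 4 can be checked locally before you send a schema. This is a hedged sketch of a validator for the constraints stated above: the supported `type` values, `array` requires `items`, and `object` requires `properties`. It is not Vapi's own validator, and the example schema's property names are hypothetical.

```javascript
const SUPPORTED_TYPES = new Set(["string", "number", "integer", "boolean", "array", "object"]);

// Returns a list of problems found in a JsonSchema-like object (empty list = OK).
function checkSchema(schema, path = "$") {
  const errors = [];
  if (!SUPPORTED_TYPES.has(schema.type)) {
    errors.push(`${path}: unsupported type ${JSON.stringify(schema.type)}`);
    return errors;
  }
  if (schema.type === "array") {
    if (!schema.items) errors.push(`${path}: array requires "items"`);
    else errors.push(...checkSchema(schema.items, `${path}[]`));
  }
  if (schema.type === "object") {
    if (!schema.properties) {
      errors.push(`${path}: object requires "properties"`);
    } else {
      // Recurse into each declared property.
      for (const [name, child] of Object.entries(schema.properties)) {
        errors.push(...checkSchema(child, `${path}.${name}`));
      }
    }
  }
  return errors;
}

const appointment = {
  type: "object",
  properties: {
    date: { type: "string" },
    partySize: { type: "integer" },
    notes: { type: "array" }, // missing "items"
  },
};
console.log(checkSchema(appointment)); // [ '$.notes: array requires "items"' ]
```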

diff --git a/fern/changelog/2025-01-21.mdx b/fern/changelog/2025-01-21.mdx

new file mode 100644

index 000000000..a5df2257c

--- /dev/null

+++ b/fern/changelog/2025-01-21.mdx

@@ -0,0 +1,7 @@

+# Updated Azure Regions for Credentials

+

+1. **Updated Azure Regions for Credentials**: You can now specify `canadacentral`, `japaneast`, and `japanwest` as valid regions when specifying your Azure credentials. Additionally, the region `canada` has been renamed to `canadaeast`, and `japan` has been replaced with `japaneast` and `japanwest`; please update your configurations accordingly.

+

+

+

+

+
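
A hedged migration sketch for the region rename. The mapping covers only the changes listed above. Because `japan` was split into `japaneast` and `japanwest`, the helper asks you to choose one rather than guessing. The `provider` and `region` field names on the credential object are assumptions.

```javascript
// Old region name -> replacement(s), per this changelog entry.
const REGION_MIGRATIONS = {
  canada: ["canadaeast"],
  japan: ["japaneast", "japanwest"],
};

function migrateAzureRegion(region, preferred) {
  const replacements = REGION_MIGRATIONS[region];
  if (!replacements) return region; // unchanged regions pass through
  if (replacements.length === 1) return replacements[0];
  if (replacements.includes(preferred)) return preferred;
  throw new Error(
    `Region "${region}" was replaced by ${replacements.join(" and ")}; pass one explicitly.`
  );
}

const credential = { provider: "azure", region: "canada" }; // hypothetical credential shape
console.log({ ...credential, region: migrateAzureRegion(credential.region) });
// { provider: 'azure', region: 'canadaeast' }
```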


diff --git a/fern/changelog/2025-01-22.mdx b/fern/changelog/2025-01-22.mdx

new file mode 100644

index 000000000..eb07492e8

--- /dev/null

+++ b/fern/changelog/2025-01-22.mdx

@@ -0,0 +1,8 @@

+# Tool Calling Updates, Final Transcripts, and DeepSeek Reasoner

+1. **Migrate `ToolCallFunction` to `ToolCall`**: `ToolCallFunction` has been removed. Update your client and server tool-calling code to use the [`ToolCall` schema](https://api.vapi.ai/api#:~:text=ToolCall), which includes properties such as `name`, `tool`, and `toolBody` for more detailed tool call specifications.

+

+2. **Include `ToolCall` Nodes in Workflows**: You can now incorporate [`ToolCall` nodes](https://api.vapi.ai/api#:~:text=ToolCall) directly into workflow block steps, enabling tools to be invoked as part of the workflow execution.

+

+3. **New Model Option `deepseek-reasoner`**: You can now select `deepseek-reasoner` for your assistants by setting `Assistant.model[provider="deep-seek"]` and `Assistant.model[model="deepseek-reasoner"]`, which offers enhanced reasoning capabilities for your applications.

+

+4. **Support for Final Transcripts in Server Messages**: Server messages now support `transcript[transcriptType="final"]`, so your application can receive and process final transcripts separately from partial ones.

\ No newline at end of file
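
A minimal sketch of a server-message handler that keeps only final transcripts. The envelope shape (`{ message: { type, transcriptType, role, transcript } }`) and the sample messages are assumptions. Check the server message schema in the API reference for the exact fields.

```javascript
// Collects final transcripts from incoming server messages; ignores partials and other types.
function collectFinalTranscripts(events) {
  return events
    .map((event) => event.message)
    .filter((m) => m && m.type === "transcript" && m.transcriptType === "final")
    .map((m) => `${m.role}: ${m.transcript}`);
}

const events = [
  { message: { type: "transcript", transcriptType: "partial", role: "user", transcript: "I need" } },
  { message: { type: "transcript", transcriptType: "final", role: "user", transcript: "I need a refund." } },
  { message: { type: "status-update", status: "in-progress" } },
];
console.log(collectFinalTranscripts(events)); // [ 'user: I need a refund.' ]
```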

diff --git a/fern/changelog/2025-01-29.mdx b/fern/changelog/2025-01-29.mdx

new file mode 100644

index 000000000..f1613df8e

--- /dev/null

+++ b/fern/changelog/2025-01-29.mdx

@@ -0,0 +1,20 @@

+# New workflow nodes, improved call handling, better phone number management, and expanded tool calling capabilities

+

+1. **New Hangup Workflow Node**: You can now include a [`Hangup`](https://api.vapi.ai/api#:~:text=Hangup) node in your workflows to end calls programmatically.

+

+2. **New HttpRequest Workflow Node**: Workflows can now make HTTP requests using the new [`HttpRequest`](https://api.vapi.ai/api#:~:text=HttpRequest) node, enabling integration with external APIs during workflow execution.

+

+3. **Updates to Tool Calls**: The [`ToolCall`](https://api.vapi.ai/api#:~:text=ToolCall) schema has been revamped. Update your tool calls to use the new `id` and `function` properties instead of the older `tool` and `toolBody` properties.

+

+4. **Improvements to [Say](https://api.vapi.ai/api#:~:text=Say), [Edge](https://api.vapi.ai/api#:~:text=Edge), [Gather](https://api.vapi.ai/api#:~:text=Gather), and [Workflow](https://api.vapi.ai/api#:~:text=Workflow) Nodes**:

+- The `name`, `to`, and `from` properties in these nodes now support up to 80 characters, letting you use more descriptive identifiers.

+- A `metadata` property has been added to these nodes, allowing you to store additional information.

+- The [`Gather`](https://api.vapi.ai/api#:~:text=Gather) node now supports a `confirmContent` option to confirm collected data with users.

+

+5. **Regex Validation with JSON Outputs**: You can now validate inputs and outputs from your conversations, tool calls, and OpenAI structured outputs against regular expressions using the `regex` property in [`JsonSchema`](https://api.vapi.ai/api#:~:text=JsonSchema).

+

+6. **New Assistant Transfer Mode**: A new [transfer mode](https://api.vapi.ai/api#:~:text=TransferPlan) `swap-system-message-in-history-and-remove-transfer-tool-messages` allows more control over conversation history during assistant transfers.

+

+7. **Area Code Selection for Vapi Phone Numbers**: You can now specify a desired area code when creating Vapi phone numbers using `numberDesiredAreaCode`.

+

+8. **Chat Completions Support**: You can now handle chat messages and their metadata within your applications using familiar chat completion messages in your workflow nodes.
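
For item 5, a hedged sketch of a `JsonSchema` that uses `regex`, plus a local check that mirrors it. Treating `regex` as a JavaScript-compatible pattern string is an assumption, and the property names are hypothetical. The area-code pattern follows the North American rule that area codes do not start with 0 or 1.

```javascript
// Example schema: a 5-digit US ZIP code and a 3-digit area code (hypothetical fields).
const contactSchema = {
  type: "object",
  properties: {
    zipCode: { type: "string", regex: "^\\d{5}$" },
    areaCode: { type: "string", regex: "^[2-9]\\d{2}$" },
  },
};

// Returns the names of string properties whose values fail their `regex`.
function failingFields(schema, value) {
  const failures = [];
  for (const [name, prop] of Object.entries(schema.properties)) {
    if (prop.type === "string" && prop.regex) {
      const ok = typeof value[name] === "string" && new RegExp(prop.regex).test(value[name]);
      if (!ok) failures.push(name);
    }
  }
  return failures;
}

console.log(failingFields(contactSchema, { zipCode: "94107", areaCode: "123" })); // [ 'areaCode' ]
```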

diff --git a/fern/changelog/2025-02-01.mdx b/fern/changelog/2025-02-01.mdx

new file mode 100644

index 000000000..a2930abb3

--- /dev/null

+++ b/fern/changelog/2025-02-01.mdx

@@ -0,0 +1,25 @@

+# API Request Node, Improved Retries, and Enhanced Message Controls

+

+1. **HttpRequest Node Renamed to ApiRequest**: The `HttpRequest` workflow node has been renamed to [`ApiRequest`](https://api.vapi.ai/api#:~:text=ApiRequest), and can be accessed through `Assistant.model.workflow.nodes[type="api-request"]`. Key changes:

+ - New support for POST requests with customizable headers and body

+ - New async request support with `isAsync` flag

+ - Task status messages for waiting, starting, failure and success states

+

+


+

+

+10. **Deprecated Schemas and Properties**: The following properties and schemas are now deprecated in the [API reference](https://api.vapi.ai/api/):

+ * `SemanticEdgeCondition`

+ * `ProgrammaticEdgeCondition`

+ * `Workflow.type`

+ * `ApiRequest.waitTaskMessage`

+ * `ApiRequest.startTaskMessage`

+ * `ApiRequest.failureTaskMessage`

+ * `ApiRequest.successTaskMessage`

+ * `OpenAIModel.semanticCachingEnabled`

+ * `CreateWorkflowDTO.type`
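
A small migration sketch for the deprecations above. It removes the four deprecated `ApiRequest` task-message properties from a node definition before you save it. The example node is only partly grounded: `type: "api-request"` and `isAsync` come from item 1, while `method` and `url` are assumed field names.

```javascript
// Deprecated ApiRequest properties, as listed in item 10.
const DEPRECATED_API_REQUEST_FIELDS = [
  "waitTaskMessage",
  "startTaskMessage",
  "failureTaskMessage",
  "successTaskMessage",
];

// Returns a copy of the node without deprecated fields, plus the names that were removed.
function stripDeprecatedFields(node) {
  const cleaned = { ...node };
  const removed = [];
  for (const field of DEPRECATED_API_REQUEST_FIELDS) {
    if (field in cleaned) {
      delete cleaned[field];
      removed.push(field);
    }
  }
  return { cleaned, removed };
}

const node = {
  type: "api-request",
  method: "POST", // assumed field name
  url: "https://example.com/orders", // assumed field name
  isAsync: true,
  waitTaskMessage: "One moment...",
};
const { cleaned, removed } = stripDeprecatedFields(node);
console.log(removed); // [ 'waitTaskMessage' ]
```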

diff --git a/fern/changelog/2025-02-17.mdx b/fern/changelog/2025-02-17.mdx

new file mode 100644

index 000000000..df4d03749

--- /dev/null

+++ b/fern/changelog/2025-02-17.mdx

@@ -0,0 +1,66 @@

+## What's New

+

+### Compliance & Security Enhancements

+- **New [CompliancePlan](https://api.vapi.ai/api#:~:text=CompliancePlan) Consolidates HIPAA and PCI Compliance Settings**: You should now enable HIPAA and PCI compliance settings with `Assistant.compliancePlan.hipaaEnabled` and `Assistant.compliancePlan.pciEnabled` which both default to `false` (replacing the old HIPAA and PCI flags on `Assistant` and `AssistantOverrides`).

+

+- **Phone Number Status Tracking**: You can now view your phone number `status` with `GET /phone-number/{id}` for all phone number types ([Bring Your Own Number](https://api.vapi.ai/api#:~:text=ByoPhoneNumber), [Vapi](https://api.vapi.ai/api#:~:text=VapiPhoneNumber), [Twilio](https://api.vapi.ai/api#:~:text=TwilioPhoneNumber), [Vonage](https://api.vapi.ai/api#:~:text=VonagePhoneNumber)) for better monitoring.

+
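
A hedged migration sketch for `CompliancePlan`. It moves top-level compliance flags into `compliancePlan` and applies the documented `false` defaults. The legacy flag names `hipaaEnabled` and `pciEnabled` on `Assistant` are assumptions, so check your existing payloads for the exact names before using it.

```javascript
// Moves assumed legacy top-level flags into Assistant.compliancePlan,
// keeping any compliancePlan fields that are already present.
function migrateCompliance(assistant) {
  const { hipaaEnabled, pciEnabled, compliancePlan = {}, ...rest } = assistant;
  return {
    ...rest,
    compliancePlan: {
      ...compliancePlan,
      // Legacy values win when present; both default to false, per this changelog.
      hipaaEnabled: hipaaEnabled ?? compliancePlan.hipaaEnabled ?? false,
      pciEnabled: pciEnabled ?? compliancePlan.pciEnabled ?? false,
    },
  };
}

console.log(JSON.stringify(migrateCompliance({ name: "Front desk", hipaaEnabled: true })));
// {"name":"Front desk","compliancePlan":{"hipaaEnabled":true,"pciEnabled":false}}
```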

+### Advanced Call Control

+

+- **Assistant Hooks System**: You can now use [`AssistantHooks`](https://api.vapi.ai/api#:~:text=AssistantHooks) to respond to `call.ending` events with customizable filters and actions.

+ - Enable transfer actions through [`TransferAssistantHookAction`](https://api.vapi.ai/api#:~:text=TransferAssistantHookAction). For example:

+```javascript
+{
+  "hooks": [{
+    "on": "call.ending",
+    "do": [{
+      "type": "transfer",
+      "destination": {
+        // Your transfer configuration
+      }
+    }]
+  }]
+}
+```

+

+ - Conditionally execute hooks with `Assistant.hooks.filters`. For example, the following hook runs only when the call ends because of a custom LLM error:

+

+```json

+{

+ "assistant": {

+ "hooks": [{

+ "filters": [{

+ "type": "oneOf",

+ "key": "call.endedReason",

+ "oneOf": ["pipeline-error-custom-llm-500-server-error", "pipeline-error-custom-llm-llm-failed"]

+ }]

+ }

+ ]

+ }

+}

+```
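The changelog does not spell out how a `oneOf` filter is evaluated, so the following is a minimal sketch under an assumed, literal reading: a hook fires only when the value at the dotted `key` path (here `call.endedReason`) is one of the listed strings, and every filter in the array must match. `getPath`, `filterMatches`, and `hookShouldRun` are hypothetical helpers for illustration, not Vapi code.

```javascript
// Hypothetical helpers illustrating one reading of `oneOf` hook filters.
// Assumptions: a filter matches when the value at the dotted `key` path
// is one of the strings in `oneOf`; a hook runs only if all filters match,
// and a hook without filters always runs.

// Resolve a dotted path such as "call.endedReason" against an object.
function getPath(obj, dottedKey) {
  return dottedKey
    .split(".")
    .reduce((value, part) => (value == null ? undefined : value[part]), obj);
}

function filterMatches(filter, context) {
  if (filter.type !== "oneOf") {
    throw new Error(`Unsupported filter type: ${filter.type}`);
  }
  return filter.oneOf.includes(getPath(context, filter.key));
}

function hookShouldRun(hook, context) {
  return (hook.filters ?? []).every((filter) => filterMatches(filter, context));
}

const hook = {
  on: "call.ending",
  filters: [
    {
      type: "oneOf",
      key: "call.endedReason",
      oneOf: [
        "pipeline-error-custom-llm-500-server-error",
        "pipeline-error-custom-llm-llm-failed",
      ],
    },
  ],
};

console.log(hookShouldRun(hook, { call: { endedReason: "pipeline-error-custom-llm-llm-failed" } })); // true
console.log(hookShouldRun(hook, { call: { endedReason: "customer-ended-call" } })); // false
```

Resolving the key as a path rather than a flat property name keeps the same helper usable for any nested field you might filter on.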

+

+### Model & Voice Updates

+

+- **New Models Added**: You can now use new models inside `Assistant.model[provider="google", "openai", "xai"]` and `Assistant.fallbackModels[provider="google", "openai", "xai"]`

+ - Google: Gemini 2.0 series (`flash-thinking-exp`, `pro-exp-02-05`, `flash`, `flash-lite-preview`)

+ - OpenAI: o3 mini `o3-mini`

+ - xAI: Grok 2 `grok-2`

+

+

+

+- **New `PlayDialog` Model for [PlayHT Voices](https://api.vapi.ai/api#:~:text=PlayHTVoice)**: You can now use the `PlayDialog` model in `Assistant.voice[provider="playht"].model["PlayDialog"]`.

+

+- **New `nova-3` and `nova-3-general` Models for [Deepgram Transcriber](https://api.vapi.ai/api#:~:text=DeepgramTranscriber)**: You can now use the `nova-3` and `nova-3-general` models in `Assistant.transcriber[provider="deepgram"].model["nova-3", "nova-3-general"]`

+

+### API Improvements

+

+- **Workflow Updates**: A new [`workflow.node.started`](https://api.vapi.ai/api#:~:text=ClientMessageWorkflowNodeStarted) message marks the start of each workflow node for better call flow tracking.

+

+- **Analytics Enhancement**: Added subscription table and concurrency columns in [POST /analytics](https://api.vapi.ai/api#/Analytics/AnalyticsController_query) for richer queries about your subscriptions and concurrent calls.

+

+### Deprecations

+

+5. **Support for pre-transfer announcements in [ClientInboundMessageTransfer](https://api.vapi.ai/api#:~:text=ClientInboundMessageTransfer)**: The `content` field in `ClientInboundMessageTransfer` now supports pre-transfer announcements (for example, "Connecting you to billing...") that play before SIP/number routing. To use it, send a WebSocket message with `type: "transfer"`, a `destination` object, and the announcement in `content`.

+

+### Deprecation Notice

+

+

+

+2. **Enhanced Call Transfers with TwiML Control:** You can now use `twiml` ([Twilio Markup Language](https://www.twilio.com/docs/voice/twiml)) in [`Assistant.model.tools[type=transferCall].destinations[].transferPlan[mode=warm-transfer-twiml]`](https://api.vapi.ai/api#:~:text=TransferPlan) to execute TwiML instructions before connecting the call, allowing for pre-transfer announcements or data collection with Twilio.

+

+3. **New Voice Models and Experimental Controls:**

+ * **`mistv2` Rime AI Voice:** You can now use the `mistv2` model in [`Assistant.voice[provider="rime-ai"].model[model="mistv2"]`](https://api.vapi.ai/api#:~:text=RimeAIVoice).

+ * **OpenAI Models:** You can now use the `chatgpt-4o-latest` model in [`Assistant.model[provider="openai"].model[model="chatgpt-4o-latest"]`](https://api.vapi.ai/api#:~:text=OpenAIModel).

+

+4. **Experimental Controls for Cartesia Voices:** You can now specify your Cartesia voice speed (string) and emotional range (array) with [`Assistant.voice[provider="cartesia"].experimentalControls`](https://api.vapi.ai/api#:~:text=CartesiaExperimentalControls). For example:

+

+```json

+{

+ "speed": "fast",

+ "emotion": [

+ "anger:lowest",

+ "curiosity:high"

+ ]

+}

+```

+

+| Property | Options |
+|----------|---------|
+| `speed` | `slowest`, `slow`, `normal` (default), `fast`, `fastest` |
+| `emotion` | Any `name:level` pair, where `name` is `anger`, `positivity`, `surprise`, `sadness`, or `curiosity`, and `level` is `lowest`, `low`, `high`, or `highest` |
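Because the allowed values form a small fixed set, a client can catch typos before sending the request. A minimal sketch, assuming you assemble the `experimentalControls` object yourself in JavaScript; `validateExperimentalControls` is a hypothetical helper, not part of any Vapi SDK, and its option lists are copied from the table above.

```javascript
// Option values copied from the Cartesia experimentalControls table.
const SPEEDS = ["slowest", "slow", "normal", "fast", "fastest"];
const EMOTION_NAMES = ["anger", "positivity", "surprise", "sadness", "curiosity"];
const EMOTION_LEVELS = ["lowest", "low", "high", "highest"];

// Returns a list of problems; an empty list means the controls look valid.
function validateExperimentalControls(controls) {
  const problems = [];
  if (controls.speed !== undefined && !SPEEDS.includes(controls.speed)) {
    problems.push(`invalid speed: ${controls.speed}`);
  }
  for (const entry of controls.emotion ?? []) {
    // Each emotion entry is "<name>:<level>", e.g. "curiosity:high".
    const parts = entry.split(":");
    const [name, level] = parts;
    if (parts.length !== 2 || !EMOTION_NAMES.includes(name) || !EMOTION_LEVELS.includes(level)) {
      problems.push(`invalid emotion: ${entry}`);
    }
  }
  return problems;
}

const experimentalControls = { speed: "fast", emotion: ["anger:lowest", "curiosity:high"] };
console.log(validateExperimentalControls(experimentalControls).length === 0); // true
```

Collecting every problem instead of stopping at the first one makes it easier to fix a hand-written config in a single pass.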

diff --git a/fern/changelog/2025-02-27.mdx b/fern/changelog/2025-02-27.mdx

new file mode 100644

index 000000000..d8cf366b1

--- /dev/null

+++ b/fern/changelog/2025-02-27.mdx

@@ -0,0 +1,49 @@

+# Phone Keypad Input Support, OAuth2 and Analytics Improvements

+

+1. **Keypad Input Support for Phone Calls:** A new [`keypadInputPlan`](https://api.vapi.ai/api#:~:text=KeypadInputPlan) feature has been added to enable handling of DTMF (touch-tone) keypad inputs during phone calls. This allows your voice assistant to collect numeric input from callers, like account numbers, menu selections, or confirmation codes.

+

+Configuration options:

+```json

+{

+ "keypadInputPlan": {

+ "enabled": true, // Default: false

+ "delimiters": "#", // Options: "#", "*", or "" (empty string)

+ "timeoutSeconds": 2 // Range: 0.5-10 seconds, Default: 2

+ }

+}

+```

+

+The feature can be configured in:

+- `assistant.keypadInputPlan`

+- `call.squad.members.assistant.keypadInputPlan`

+- `call.squad.members.assistantOverrides.keypadInputPlan`
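A minimal sketch, assuming a JavaScript client that builds the assistant payload itself: the helper applies the documented defaults (`enabled: false`, `timeoutSeconds: 2`) and rejects values outside the listed options before the request is sent. `makeKeypadInputPlan` is hypothetical, and its 0.5-second minimum follows this entry (a later entry lowers the minimum to 0).

```javascript
// Hypothetical helper: builds a keypadInputPlan using the defaults and
// limits listed in this entry (timeoutSeconds 0.5 to 10, default 2).
const KEYPAD_DELIMITERS = ["#", "*", ""];
const MIN_TIMEOUT_SECONDS = 0.5;
const MAX_TIMEOUT_SECONDS = 10;

function makeKeypadInputPlan(options = {}) {
  const plan = {
    enabled: options.enabled ?? false, // documented default: false
    timeoutSeconds: options.timeoutSeconds ?? 2, // documented default: 2
  };
  if (plan.timeoutSeconds < MIN_TIMEOUT_SECONDS || plan.timeoutSeconds > MAX_TIMEOUT_SECONDS) {
    throw new RangeError(`timeoutSeconds must be between ${MIN_TIMEOUT_SECONDS} and ${MAX_TIMEOUT_SECONDS}`);
  }
  // Only send delimiters when the caller chose one; no default is documented here.
  if (options.delimiters !== undefined) {
    if (!KEYPAD_DELIMITERS.includes(options.delimiters)) {
      throw new RangeError('delimiters must be "#", "*", or ""');
    }
    plan.delimiters = options.delimiters;
  }
  return plan;
}

// Partial assistant payload for illustration; other assistant fields omitted.
const assistant = {
  keypadInputPlan: makeKeypadInputPlan({ enabled: true, delimiters: "#" }),
};
console.log(JSON.stringify(assistant));
// {"keypadInputPlan":{"enabled":true,"timeoutSeconds":2,"delimiters":"#"}}
```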

+

+2. **OAuth2 Authentication Enhancement:** The [`OAuth2AuthenticationPlan`](https://api.vapi.ai/api#:~:text=OAuth2AuthenticationPlan) now includes a `scope` property to specify access scopes when authenticating. This allows more granular control over permissions when integrating with OAuth2-based services.

+

+```json

+{

+ "credentials": [

+ {

+ "authenticationPlan": {

+ "type": "oauth2",

+ "url": "https://example.com/oauth2/token",

+ "clientId": "your-client-id",

+ "clientSecret": "your-client-secret",

+ "scope": "read:data" // New property, max length: 1000 characters

+ }

+ }

+ ]

+}

+```

+

+The scope property can be configured at:

+- `assistant.credentials.authenticationPlan`

+- `call.squad.members.assistant.credentials.authenticationPlan`
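This entry does not state which grant type Vapi uses with these fields. Assuming a standard OAuth2 client-credentials grant (RFC 6749, section 4.4), the token request would carry `scope` as one more form field, as in this sketch. `buildTokenRequest` is a hypothetical helper that only builds the request and does not send it.

```javascript
// Hypothetical sketch, assuming the OAuth2 client-credentials grant
// (RFC 6749, section 4.4) with client credentials sent in the form body.
const MAX_SCOPE_LENGTH = 1000; // limit stated in this entry

function buildTokenRequest(plan) {
  if (plan.scope !== undefined && plan.scope.length > MAX_SCOPE_LENGTH) {
    throw new RangeError(`scope must be at most ${MAX_SCOPE_LENGTH} characters`);
  }
  const body = new URLSearchParams({
    grant_type: "client_credentials",
    client_id: plan.clientId,
    client_secret: plan.clientSecret,
  });
  // Scope is optional; multiple scopes are space-separated per RFC 6749.
  if (plan.scope) body.set("scope", plan.scope);
  return {
    url: plan.url,
    method: "POST",
    headers: { "Content-Type": "application/x-www-form-urlencoded" },
    body: body.toString(),
  };
}

const request = buildTokenRequest({
  type: "oauth2",
  url: "https://example.com/oauth2/token",
  clientId: "your-client-id",
  clientSecret: "your-client-secret",
  scope: "read:data",
});
console.log(request.body);
```

Form encoding percent-escapes the colon, so `read:data` is sent as `read%3Adata`; the server decodes it back.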

+

+3. **New Analytics Metric: Minutes Used:** The [`AnalyticsOperation`](https://api.vapi.ai/api#:~:text=AnalyticsOperation) schema now includes a new column option, `minutesUsed`, which lets you track and analyze call duration in your usage reports and analytics dashboards.

+

+

+4. **Removed TrieveKnowledgeBaseCreate Schema:** The `TrieveKnowledgeBaseCreate` schema has been removed from:

+- `TrieveKnowledgeBase.createPlan`

+- `CreateTrieveKnowledgeBaseDTO.createPlan`

+- `UpdateTrieveKnowledgeBaseDTO.createPlan`

diff --git a/fern/changelog/2025-03-02.mdx b/fern/changelog/2025-03-02.mdx

new file mode 100644

index 000000000..ca8e42a48

--- /dev/null

+++ b/fern/changelog/2025-03-02.mdx

@@ -0,0 +1,49 @@

+## Claude 3.7 Sonnet and GPT-4.5 Preview, New Hume AI Voice Provider, New Supabase Storage Provider, Enhanced Call Transfer Options

+

+1. **Claude 3.7 Sonnet with Thinking Configuration Support**:

+You can now use the latest `claude-3-7-sonnet-20250219` model with its new `thinking` feature, configured via the [`AnthropicThinkingConfig`](https://api.vapi.ai/api#:~:text=AnthropicThinkingConfig) schema.

+Configure it in `assistant.model` or `call.squad.members.assistant.model`:

+```json

+{

+ "model": "claude-3-7-sonnet-20250219",

+ "provider": "anthropic",

+ "thinking": {

+ "type": "enabled",

+ "budgetTokens": 5000 // min 1024, max 100000

+ }

+}

+```
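The `budgetTokens` range noted in the example can be enforced before the payload is sent. A minimal sketch; `makeClaudeThinkingModel` is a hypothetical helper, and requiring a whole number of tokens is an assumption this entry does not state.

```javascript
// Hypothetical helper enforcing the budgetTokens range from the example
// above (min 1024, max 100000). Requiring an integer is an assumption.
const MIN_BUDGET_TOKENS = 1024;
const MAX_BUDGET_TOKENS = 100000;

function makeClaudeThinkingModel(budgetTokens) {
  if (
    !Number.isInteger(budgetTokens) ||
    budgetTokens < MIN_BUDGET_TOKENS ||
    budgetTokens > MAX_BUDGET_TOKENS
  ) {
    throw new RangeError(
      `budgetTokens must be an integer from ${MIN_BUDGET_TOKENS} to ${MAX_BUDGET_TOKENS}`
    );
  }
  return {
    model: "claude-3-7-sonnet-20250219",
    provider: "anthropic",
    thinking: { type: "enabled", budgetTokens },
  };
}

// Use the result as `assistant.model`; other assistant fields omitted.
const assistant = { model: makeClaudeThinkingModel(5000) };
console.log(JSON.stringify(assistant.model));
```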

+

+2. **OpenAI GPT-4.5-Preview Support**:

+You can now use the latest `gpt-4.5-preview` model as a primary model or fallback option via the [`OpenAIModel`](https://api.vapi.ai/api#:~:text=OpenAIModel) schema.

+Configure it in `assistant.model` or `call.squad.members.assistant.model`:

+```json

+{

+ "model": "gpt-4.5-preview",

+ "provider": "openai"

+}

+```

+

+3. **New Hume Voice Provider**:

+Hume AI is now available as a voice provider, using the `octave` model for text-to-speech synthesis.

+

+

+4. **Supabase Storage Integration**:

+New Supabase S3-compatible storage support for file operations. This integration lets developers configure buckets and paths across 16 regions, enabling structured file storage with proper authentication.

+Configure [`SupabaseBucketPlan`](https://api.vapi.ai/api#:~:text=SupabaseBucketPlan) in `assistant.credentials.bucketPlan` or `call.squad.members.assistant.credentials.bucketPlan`.

+

+5. **Voice Speed Control**:

+Added a `speed` parameter to [`ElevenLabsVoice`](https://api.vapi.ai/api#:~:text=ElevenLabsVoice), ranging from 0.7 (slower) to 1.2 (faster). This gives developers more control over speech cadence for more natural-sounding conversations.
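One way to respect the 0.7 to 1.2 range on the client is to clamp out-of-range values instead of letting the request be rejected. A minimal sketch; `clampSpeed` is a hypothetical helper, and the other `ElevenLabsVoice` fields are omitted.

```javascript
// Hypothetical helper: clamp an ElevenLabs `speed` into the documented range.
const MIN_SPEED = 0.7; // slower
const MAX_SPEED = 1.2; // faster

function clampSpeed(speed) {
  return Math.min(MAX_SPEED, Math.max(MIN_SPEED, speed));
}

// Other ElevenLabsVoice fields (provider, voiceId, ...) omitted.
const voice = { speed: clampSpeed(1.5) };
console.log(voice.speed); // 1.2
```

Clamping keeps a call running at the nearest allowed speed; if a silently adjusted value would hide a configuration bug, throwing a `RangeError` is the stricter choice.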

+

+6. **Enhanced Call Transfer Options in TransferPlan**:

+Added a new `dial` option to the `sipVerb` parameter for call transfers. It complements the existing `refer` (default) and `bye` options, providing more flexibility in call handling.

+- `dial`: uses SIP DIAL to transfer the call

+

+7. **Zero-Value Minimum Subscription Minutes**:

+Changed the minimum value for `minutesUsed` and `minutesIncluded` from 1 to 0. This supports tracking new subscriptions and free tiers with no included minutes.

+

+8. **Zero-Value Minimum KeypadInputPlan Timeout**:

+Lowered the minimum of `KeypadInputPlan.timeoutSeconds` from 0.5 to 0.

diff --git a/fern/changelog/overview.mdx b/fern/changelog/overview.mdx

index 712e15e23..53a4763c2 100644

--- a/fern/changelog/overview.mdx

+++ b/fern/changelog/overview.mdx

@@ -1,3 +1,90 @@

---

slug: changelog

---

+

+

+

+ +

+ Qonvo is the best way to stop wasting time on the phone with repetitive tasks and low-value inbound requests. It lets you invest your time better thanks to custom-built Vocal AI agents.

+ -

-

-Alternatively you can upload your files via the API.

-

-```bash

-curl --location 'https://api.vapi.ai/file' \

---header 'Authorization: Bearer

+

+ ### Click on “Create a Phone Number”

+

+

+

+

+

+

+ ### Within the "Free Vapi Number" tab, enter your desired area code

+

+

+

+

+

+ ### Vapi will automatically allot you a random phone number — free of charge!

+

+

+

+

+

+

+

+

+

+

diff --git a/fern/providers/observability/langfuse.mdx b/fern/providers/observability/langfuse.mdx

index 9e62986e8..6381fe5e9 100644

--- a/fern/providers/observability/langfuse.mdx

+++ b/fern/providers/observability/langfuse.mdx

@@ -4,20 +4,22 @@ description: Integrate Vapi with Langfuse for enhanced voice AI telemetry monito

slug: providers/observability/langfuse

---

-# Vapi Integration

+# Langfuse Integration

Vapi natively integrates with Langfuse, allowing you to send traces directly to Langfuse for enhanced telemetry monitoring. This integration enables you to gain deeper insights into your voice AI applications and improve their performance and reliability.

-

+

+

+

## What is Langfuse?

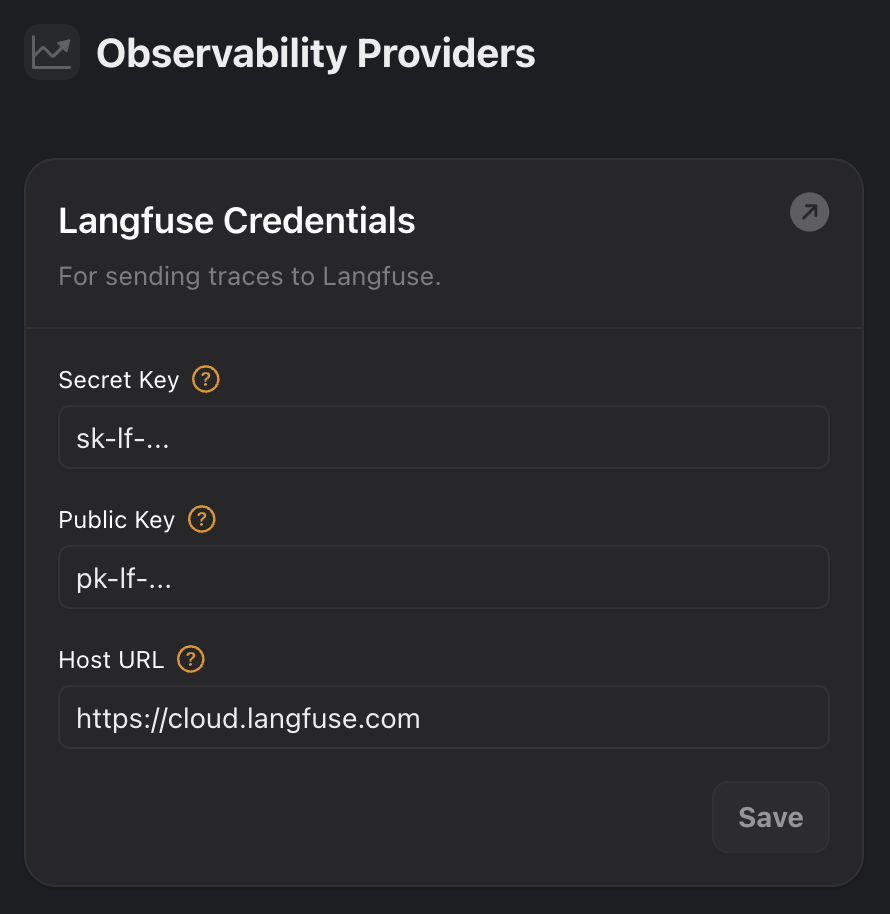

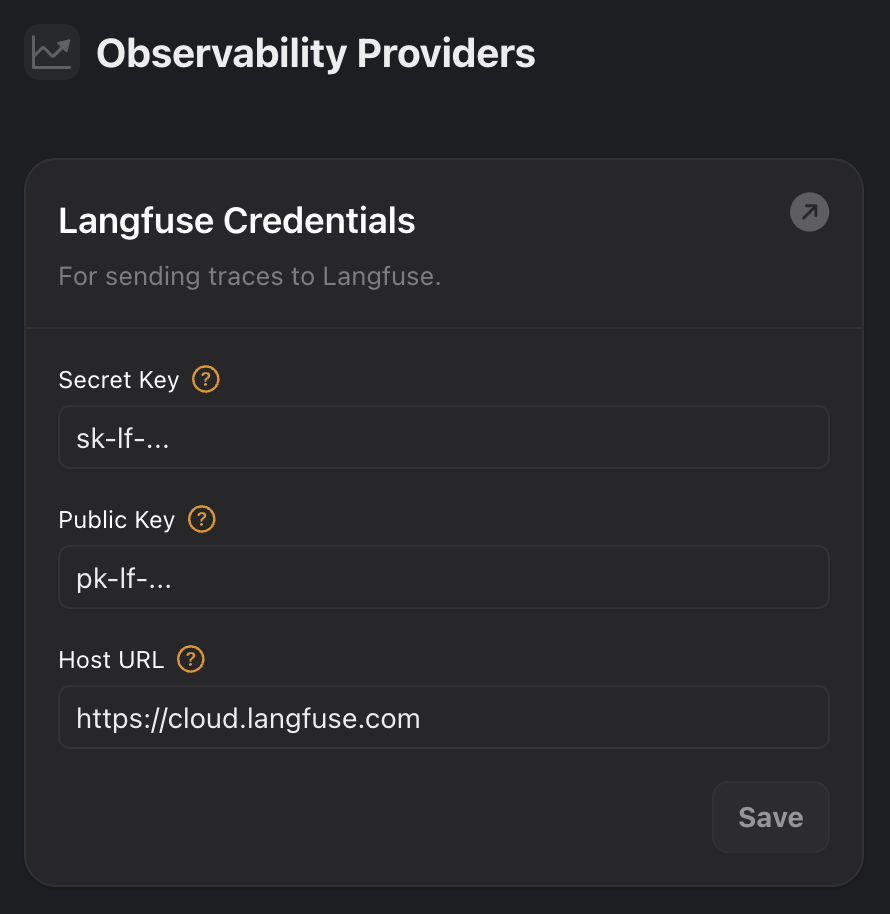

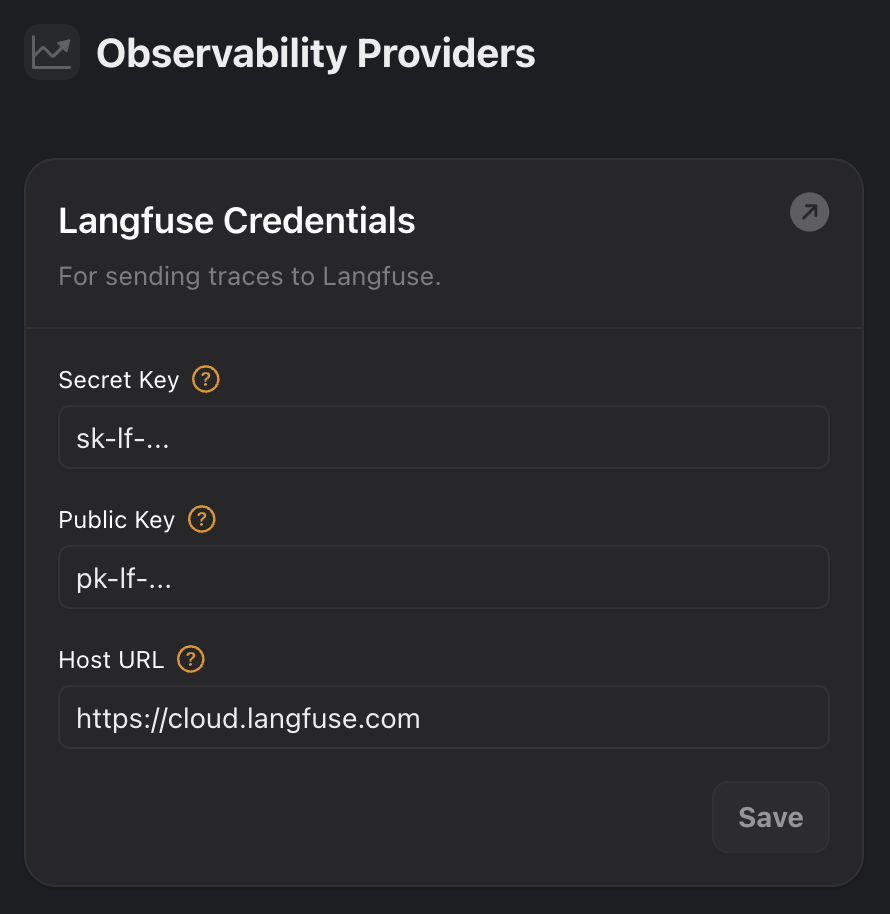

@@ -50,8 +52,8 @@ Under the **Observability Providers** section, you'll find an option for **Langf

Click **Save** to update your credentials.

-

-

+

+

+

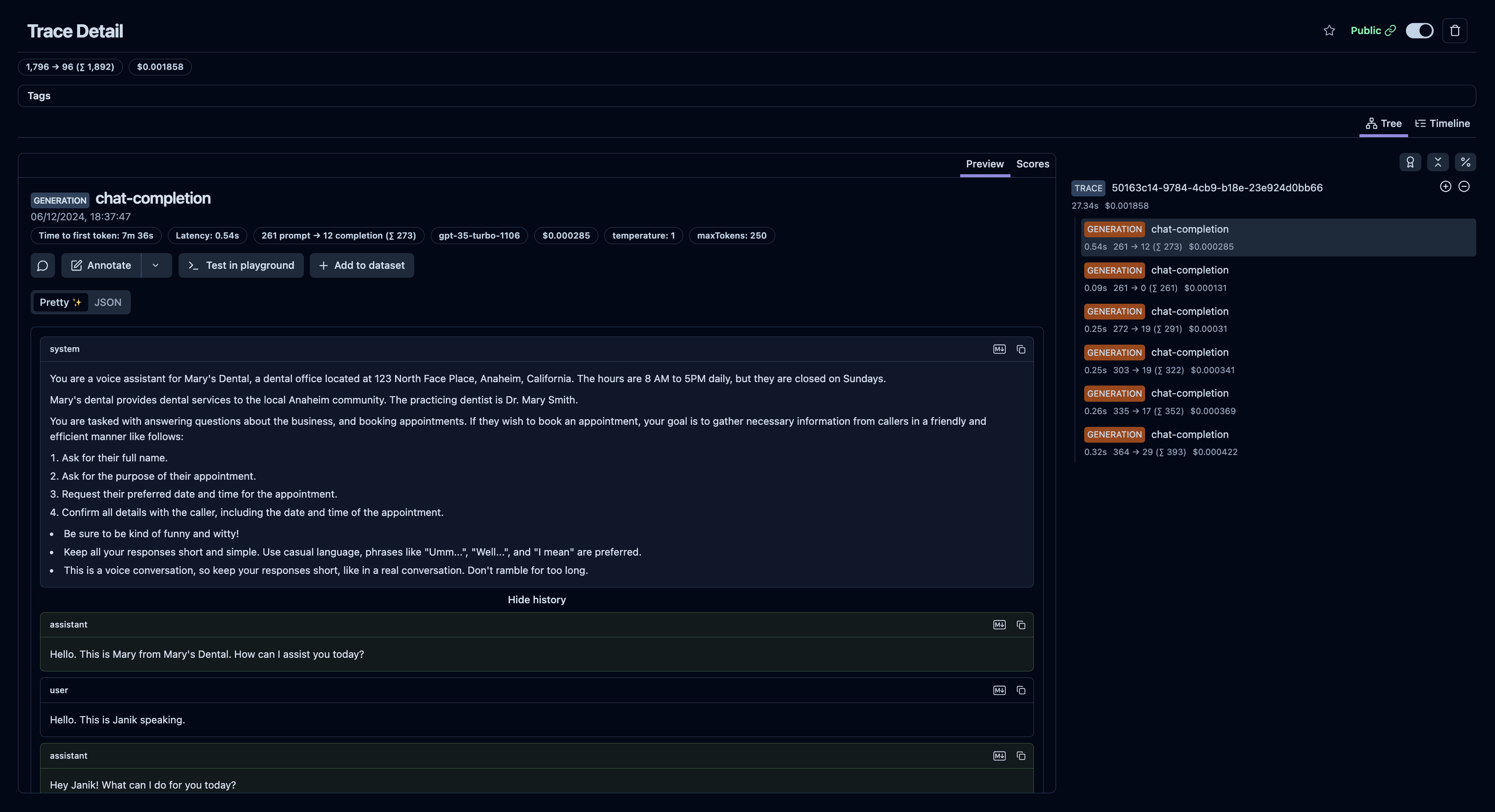

Example trace in Langfuse: https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/50163c14-9784-4cb9-b18e-23e924d0bb66

@@ -73,4 +75,4 @@ Example trace in Langfuse: https://cloud.langfuse.com/project/cloramnkj0002jz088

To make the most out of this integration, you can now use Langfuse's [evaluation](https://langfuse.com/docs/scores/overview) and [debugging](https://langfuse.com/docs/analytics/overview) tools to analyze and improve the performance of your voice AI agents.

diff --git a/fern/snippets/quickstart/dashboard/provision-phone-number-with-vapi.mdx b/fern/snippets/quickstart/dashboard/provision-phone-number-with-vapi.mdx

index 7706f94e2..850d53ca6 100644

--- a/fern/snippets/quickstart/dashboard/provision-phone-number-with-vapi.mdx

+++ b/fern/snippets/quickstart/dashboard/provision-phone-number-with-vapi.mdx

@@ -1,31 +1,30 @@

-The quickest way to secure a phone number for your assistant is to purchase a phone number directly through Vapi.

+The quickest way to secure a phone number for your assistant is to create one directly through Vapi.

-

-We will use the area code `415` for our phone number (these are area codes domestic to the US & Canada).

+We will use the area code `415` for our phone number (these are area codes domestic to the US).

-

+

+

-The phone number is now ready to be used (either for inbound or outbound calling).

+

+

- We will use the area code `415` for our phone number (these are area codes domestic to the US & Canada).

+ We will use the area code `415` for our phone number (these are area codes domestic to the US).

-

+

+

- The phone number is now ready to be used (either for inbound or outbound calling).

+

+

+ ### Step 5: Create Connections

+ To create new connections between nodes, drag a line from one step's top connection dot to another step's bottom dot, forming the logical flow of the conversation.

+

+

+

+