diff --git a/readme.md b/readme.md

index e4e4b26..a13b178 100644

--- a/readme.md

+++ b/readme.md

@@ -6,12 +6,14 @@

[](https://github.com//devlooped/Extensions.AI/blob/main/license.txt)

[](https://github.com/devlooped/Extensions.AI/actions/workflows/build.yml)

-Extensions for [Microsoft.Extensions.AI](https://nuget.org/packages/Microsoft.Extensions.AI).

+## Extensions

-

+

+## Weaving

+

+

-

# Sponsors

diff --git a/src/AI.Tests/GrokTests.cs b/src/AI.Tests/GrokTests.cs

index 6717970..364f484 100644

--- a/src/AI.Tests/GrokTests.cs

+++ b/src/AI.Tests/GrokTests.cs

@@ -64,6 +64,31 @@ public async Task GrokInvokesToolAndSearch()

Assert.Contains("Nasdaq", text, StringComparison.OrdinalIgnoreCase);

}

+ [SecretsFact("XAI_API_KEY")]

+ public async Task GrokInvokesHostedSearchTool()

+ {

+ var messages = new Chat()

+ {

+ { "system", "You are an AI assistant that knows how to search the web." },

+ { "user", "What's Tesla stock worth today? Search X and the news for latest info." },

+ };

+

+ var grok = new GrokClient(Configuration["XAI_API_KEY"]!);

+

+ var options = new ChatOptions

+ {

+ ModelId = "grok-3",

+ Tools = [new HostedWebSearchTool()]

+ };

+

+ var response = await grok.GetResponseAsync(messages, options);

+ var text = response.Text;

+

+ Assert.Contains("TSLA", text);

+ Assert.Contains("$", text);

+ Assert.Contains("Nasdaq", text, StringComparison.OrdinalIgnoreCase);

+ }

+

[SecretsFact("XAI_API_KEY")]

public async Task GrokThinksHard()

{

diff --git a/src/AI/Console/JsonConsoleLoggingChatClient.cs b/src/AI/Console/JsonConsoleLoggingChatClient.cs

deleted file mode 100644

index 7f83a1f..0000000

--- a/src/AI/Console/JsonConsoleLoggingChatClient.cs

+++ /dev/null

@@ -1,57 +0,0 @@

-using System.Runtime.CompilerServices;

-using Microsoft.Extensions.AI;

-using Spectre.Console;

-using Spectre.Console.Json;

-

-namespace Devlooped.Extensions.AI;

-

-///

-/// Chat client that logs messages and responses to the console in JSON format using Spectre.Console.

-///

-///

-public class JsonConsoleLoggingChatClient(IChatClient innerClient) : DelegatingChatClient(innerClient)

-{

- ///

- /// Whether to include additional properties in the JSON output.

- ///

- public bool IncludeAdditionalProperties { get; set; } = true;

-

- ///

- /// Optional maximum length to render for string values. Replaces remaining characters with "...".

- ///

- public int? MaxLength { get; set; }

-

- public override async Task<ChatResponse> GetResponseAsync(IEnumerable<ChatMessage> messages, ChatOptions? options = null, CancellationToken cancellationToken = default)

- {

- AnsiConsole.Write(new Panel(new JsonText(new

- {

- messages = messages.Where(x => x.Role != ChatRole.System).ToArray(),

- options

- }.ToJsonString(MaxLength, IncludeAdditionalProperties))));

-

- var response = await InnerClient.GetResponseAsync(messages, options, cancellationToken);

-

- AnsiConsole.Write(new Panel(new JsonText(response.ToJsonString(MaxLength, IncludeAdditionalProperties))));

- return response;

- }

-

- public override async IAsyncEnumerable<ChatResponseUpdate> GetStreamingResponseAsync(IEnumerable<ChatMessage> messages, ChatOptions? options = null, [EnumeratorCancellation] CancellationToken cancellationToken = default)

- {

- AnsiConsole.Write(new Panel(new JsonText(new

- {

- messages = messages.Where(x => x.Role != ChatRole.System).ToArray(),

- options

- }.ToJsonString(MaxLength, IncludeAdditionalProperties))));

-

- List<ChatResponseUpdate> updates = [];

-

- await foreach (var update in base.GetStreamingResponseAsync(messages, options, cancellationToken))

- {

- updates.Add(update);

- yield return update;

- }

-

- AnsiConsole.Write(new Panel(new JsonText(updates.ToJsonString(MaxLength, IncludeAdditionalProperties))));

- }

-}

-

diff --git a/src/AI/Console/JsonConsoleLoggingExtensions.cs b/src/AI/Console/JsonConsoleLoggingExtensions.cs

index 88d1e41..cb2a773 100644

--- a/src/AI/Console/JsonConsoleLoggingExtensions.cs

+++ b/src/AI/Console/JsonConsoleLoggingExtensions.cs

@@ -1,8 +1,8 @@

using System.ClientModel.Primitives;

using System.ComponentModel;

+using System.Runtime.CompilerServices;

using Devlooped.Extensions.AI;

using Spectre.Console;

-using Spectre.Console.Json;

namespace Microsoft.Extensions.AI;

@@ -17,7 +17,7 @@ public static class JsonConsoleLoggingExtensions

/// console using Spectre.Console rich JSON formatting, but only if the console is interactive.

///

/// The options type to configure for HTTP logging.

- /// <param name="options">The options instance to configure.</param>

+ /// <param name="pipelineOptions">The options instance to configure.</param>

///

/// NOTE: this is the lowest-level logging after all chat pipeline processing has been done.

///

@@ -25,33 +25,20 @@ public static class JsonConsoleLoggingExtensions

/// logging transport to minimize the impact on existing configurations.

///

///

- public static TOptions UseJsonConsoleLogging<TOptions>(this TOptions options)

- where TOptions : ClientPipelineOptions

- => UseJsonConsoleLogging(options, ConsoleExtensions.IsConsoleInteractive);

-

- ///

- /// Sets a that renders HTTP messages to the

- /// console using Spectre.Console rich JSON formatting.

- ///

- /// The options type to configure for HTTP logging.

- /// The options instance to configure.

- /// Whether to confirm logging before enabling it.

- ///

- /// NOTE: this is the lowest-level logging after all chat pipeline processing has been done.

- ///

- /// If the options already provide a transport, it will be wrapped with the console

- /// logging transport to minimize the impact on existing configurations.

- ///

- ///

- public static TOptions UseJsonConsoleLogging<TOptions>(this TOptions options, bool askConfirmation)

+ public static TOptions UseJsonConsoleLogging<TOptions>(this TOptions pipelineOptions, JsonConsoleOptions? consoleOptions = null)

where TOptions : ClientPipelineOptions

{

- if (askConfirmation && !AnsiConsole.Confirm("Do you want to enable rich JSON console logging for HTTP pipeline messages?"))

- return options;

+ consoleOptions ??= JsonConsoleOptions.Default;

+

+ if (consoleOptions.InteractiveConfirm && ConsoleExtensions.IsConsoleInteractive && !AnsiConsole.Confirm("Do you want to enable rich JSON console logging for HTTP pipeline messages?"))

+ return pipelineOptions;

+

+ if (consoleOptions.InteractiveOnly && !ConsoleExtensions.IsConsoleInteractive)

+ return pipelineOptions;

- options.Transport = new ConsoleLoggingPipelineTransport(options.Transport ?? HttpClientPipelineTransport.Shared);

+ pipelineOptions.Transport = new ConsoleLoggingPipelineTransport(pipelineOptions.Transport ?? HttpClientPipelineTransport.Shared, consoleOptions);

- return options;

+ return pipelineOptions;

}

///

@@ -62,36 +49,24 @@ public static TOptions UseJsonConsoleLogging(this TOptions options, bo

/// Confirmation will be asked if the console is interactive, otherwise, it will be

/// enabled unconditionally.

///

- public static ChatClientBuilder UseJsonConsoleLogging(this ChatClientBuilder builder)

- => UseJsonConsoleLogging(builder, ConsoleExtensions.IsConsoleInteractive);

-

- ///

- /// Renders chat messages and responses to the console using Spectre.Console rich JSON formatting.

- ///

- /// The builder in use.

- /// If true, prompts the user for confirmation before enabling console logging.

- /// Optional maximum length to render for string values. Replaces remaining characters with "...".

- public static ChatClientBuilder UseJsonConsoleLogging(this ChatClientBuilder builder, bool askConfirmation = false, Action<JsonConsoleLoggingChatClient>? configure = null)

+ public static ChatClientBuilder UseJsonConsoleLogging(this ChatClientBuilder builder, JsonConsoleOptions? consoleOptions = null)

{

- if (askConfirmation && !AnsiConsole.Confirm("Do you want to enable console logging for chat messages?"))

+ consoleOptions ??= JsonConsoleOptions.Default;

+

+ if (consoleOptions.InteractiveConfirm && ConsoleExtensions.IsConsoleInteractive && !AnsiConsole.Confirm("Do you want to enable rich JSON console logging for chat messages?"))

return builder;

- return builder.Use(inner =>

- {

- var client = new JsonConsoleLoggingChatClient(inner);

- configure?.Invoke(client);

- return client;

- });

+ if (consoleOptions.InteractiveOnly && !ConsoleExtensions.IsConsoleInteractive)

+ return builder;

+

+ return builder.Use(inner => new JsonConsoleLoggingChatClient(inner, consoleOptions));

}

- class ConsoleLoggingPipelineTransport(PipelineTransport inner) : PipelineTransport

+ class ConsoleLoggingPipelineTransport(PipelineTransport inner, JsonConsoleOptions consoleOptions) : PipelineTransport

{

public static PipelineTransport Default { get; } = new ConsoleLoggingPipelineTransport();

- public ConsoleLoggingPipelineTransport() : this(HttpClientPipelineTransport.Shared) { }

-

- protected override PipelineMessage CreateMessageCore() => inner.CreateMessage();

- protected override void ProcessCore(PipelineMessage message) => inner.Process(message);

+ public ConsoleLoggingPipelineTransport() : this(HttpClientPipelineTransport.Shared, JsonConsoleOptions.Default) { }

protected override async ValueTask ProcessCoreAsync(PipelineMessage message)

{

@@ -105,15 +80,52 @@ protected override async ValueTask ProcessCoreAsync(PipelineMessage message)

memory.Position = 0;

using var reader = new StreamReader(memory);

var content = await reader.ReadToEndAsync();

-

- AnsiConsole.Write(new Panel(new JsonText(content)));

+ AnsiConsole.Write(consoleOptions.CreatePanel(content));

}

if (message.Response != null)

{

- AnsiConsole.Write(new Panel(new JsonText(message.Response.Content.ToString())));

+ AnsiConsole.Write(consoleOptions.CreatePanel(message.Response.Content.ToString()));

}

}

+

+ protected override PipelineMessage CreateMessageCore() => inner.CreateMessage();

+ protected override void ProcessCore(PipelineMessage message) => inner.Process(message);

}

+ class JsonConsoleLoggingChatClient(IChatClient inner, JsonConsoleOptions consoleOptions) : DelegatingChatClient(inner)

+ {

+ public override async Task<ChatResponse> GetResponseAsync(IEnumerable<ChatMessage> messages, ChatOptions? options = null, CancellationToken cancellationToken = default)

+ {

+ AnsiConsole.Write(consoleOptions.CreatePanel(new

+ {

+ messages = messages.Where(x => x.Role != ChatRole.System).ToArray(),

+ options

+ }));

+

+ var response = await InnerClient.GetResponseAsync(messages, options, cancellationToken);

+ AnsiConsole.Write(consoleOptions.CreatePanel(response));

+

+ return response;

+ }

+

+ public override async IAsyncEnumerable<ChatResponseUpdate> GetStreamingResponseAsync(IEnumerable<ChatMessage> messages, ChatOptions? options = null, [EnumeratorCancellation] CancellationToken cancellationToken = default)

+ {

+ AnsiConsole.Write(consoleOptions.CreatePanel(new

+ {

+ messages = messages.Where(x => x.Role != ChatRole.System).ToArray(),

+ options

+ }));

+

+ List<ChatResponseUpdate> updates = [];

+

+ await foreach (var update in base.GetStreamingResponseAsync(messages, options, cancellationToken))

+ {

+ updates.Add(update);

+ yield return update;

+ }

+

+ AnsiConsole.Write(consoleOptions.CreatePanel(updates));

+ }

+ }

}

diff --git a/src/AI/Console/JsonConsoleOptions.cs b/src/AI/Console/JsonConsoleOptions.cs

new file mode 100644

index 0000000..4661e70

--- /dev/null

+++ b/src/AI/Console/JsonConsoleOptions.cs

@@ -0,0 +1,153 @@

+using System.Text.Json;

+using System.Text.Json.Nodes;

+using Microsoft.Extensions.AI;

+using Spectre.Console;

+using Spectre.Console.Json;

+using Spectre.Console.Rendering;

+

+namespace Devlooped.Extensions.AI;

+

+///

+/// Options for rendering JSON output to the console.

+///

+public class JsonConsoleOptions

+{

+ static readonly JsonSerializerOptions jsonOptions = new(JsonSerializerDefaults.Web);

+

+ ///

+ /// Default settings for rendering JSON output to the console, which include:

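+ /// * <see cref="IncludeAdditionalProperties"/>: true

+ /// * <see cref="InteractiveConfirm"/>: true if console is interactive, otherwise false

+ /// * <see cref="InteractiveOnly"/>: true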

+ ///

+ public static JsonConsoleOptions Default { get; } = new JsonConsoleOptions();

+

+ ///

+ /// Border kind for the JSON output panel.

+ ///

+ public BoxBorder Border { get; set; } = BoxBorder.Square;

+

+ ///

+ /// Border style for the JSON output panel.

+ ///

+ public Style BorderStyle { get; set; } = Style.Parse("grey");

+

+ ///

+ /// Whether to include additional properties in the JSON output.

+ ///

+ ///

+ /// See and .

+ ///

+ public bool IncludeAdditionalProperties { get; set; } = true;

+

+ ///

+ /// Confirm whether to render JSON output to the console, if the console is interactive. If

+ /// it is non-interactive, JSON output will be rendered conditionally based on the

+ /// <see cref="InteractiveOnly"/> setting.

+ ///

+ public bool InteractiveConfirm { get; set; } = ConsoleExtensions.IsConsoleInteractive;

+

+ ///

+ /// Only render JSON output if the console is interactive.

+ ///

+ ///

+ /// This setting defaults to <see langword="true"/> to avoid cluttering non-interactive console

+ /// outputs with JSON, while also removing the need to conditionally check for console interactivity.

+ ///

+ public bool InteractiveOnly { get; set; } = true;

+

+ ///

+ /// Specifies the length at which long text will be truncated.

+ ///

+ ///

+ /// This setting is useful for trimming long strings in the output for cases where you're more

+ /// interested in the metadata about the request and not so much in the actual content of the messages.

+ ///

+ public int? TruncateLength { get; set; }

+

+ ///

+ /// Specifies the length at which long text will be wrapped automatically.

+ ///

+ ///

+ /// This setting is useful for ensuring that long lines of JSON text are wrapped to fit

+ /// in a narrower width in the console for easier reading.

+ ///

+ public int? WrapLength { get; set; }

+

+ internal Panel CreatePanel(string json)

+ {

+ // Determine if we need to pre-process the JSON string based on the settings.

+ if (TruncateLength.HasValue || !IncludeAdditionalProperties)

+ {

+ json = JsonNode.Parse(json)?.ToShortJsonString(TruncateLength, IncludeAdditionalProperties) ?? json;

+ }

+

+ var panel = new Panel(WrapLength.HasValue ? new WrappedJsonText(json, WrapLength.Value) : new JsonText(json))

+ {

+ Border = Border,

+ BorderStyle = BorderStyle,

+ };

+

+ return panel;

+ }

+

+ internal Panel CreatePanel(object value)

+ {

+ string? json = null;

+

+ // Determine if we need to pre-process the JSON string based on the settings.

+ if (TruncateLength.HasValue || !IncludeAdditionalProperties)

+ {

+ json = value.ToShortJsonString(TruncateLength, IncludeAdditionalProperties);

+ }

+ else

+ {

+ // i.e. we had no pre-processing to do

+ json = JsonSerializer.Serialize(value, jsonOptions);

+ }

+

+ var panel = new Panel(WrapLength.HasValue ? new WrappedJsonText(json, WrapLength.Value) : new JsonText(json))

+ {

+ Border = Border,

+ BorderStyle = BorderStyle,

+ };

+

+ return panel;

+ }

+

+ sealed class WrappedJsonText(string json, int maxWidth) : Renderable

+ {

+ readonly JsonText jsonText = new(json);

+

+ protected override Measurement Measure(RenderOptions options, int availableWidth)

+ {

+ // Clamp the measurement to the desired maxWidth (the primary constructor parameter)

+ return new Measurement(Math.Min(maxWidth, availableWidth), Math.Min(maxWidth, availableWidth));

+ }

+

+ protected override IEnumerable<Segment> Render(RenderOptions options, int availableWidth)

+ {

+ // Render the original JSON text

+ var segments = ((IRenderable)jsonText).Render(options, availableWidth).ToList();

+ var wrapped = new List<Segment>();

+

+ foreach (var segment in segments)

+ {

+ string text = segment.Text;

+ Style style = segment.Style ?? Style.Plain;

+

+ // Split long lines forcibly at maxWidth

+ var idx = 0;

+ while (idx < text.Length)

+ {

+ var len = Math.Min(maxWidth, text.Length - idx);

+ wrapped.Add(new Segment(text.Substring(idx, len), style));

+ idx += len;

+ if (idx < text.Length)

+ wrapped.Add(Segment.LineBreak);

+ }

+ }

+ return wrapped;

+ }

+ }

+}

diff --git a/src/AI/Grok/GrokChatOptions.cs b/src/AI/Grok/GrokChatOptions.cs

index 648c050..4d16f9d 100644

--- a/src/AI/Grok/GrokChatOptions.cs

+++ b/src/AI/Grok/GrokChatOptions.cs

@@ -12,6 +12,10 @@ public class GrokChatOptions : ChatOptions

/// Configures Grok's live search capabilities.

/// See https://docs.x.ai/docs/guides/live-search.

///

+ ///

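+ /// A shortcut to adding a <see cref="GrokSearchTool"/> to the <see cref="ChatOptions.Tools"/> collection,

+ /// or the <see cref="HostedWebSearchTool"/> (which sets the <see cref="GrokSearch.Auto"/> behavior).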

+ ///

public GrokSearch Search { get; set; } = GrokSearch.Auto;

///

diff --git a/src/AI/Grok/GrokClient.cs b/src/AI/Grok/GrokClient.cs

index 0e6be9e..68874dd 100644

--- a/src/AI/Grok/GrokClient.cs

+++ b/src/AI/Grok/GrokClient.cs

@@ -33,17 +33,31 @@ IChatClient GetClient(ChatOptions? options) => clients.GetOrAdd(

ChatOptions? SetOptions(ChatOptions? options)

{

- if (options is null || options is not GrokChatOptions grok)

+ if (options is null)

return null;

options.RawRepresentationFactory = _ =>

{

- var result = new GrokCompletionOptions

+ var result = new GrokCompletionOptions();

+ var grok = options as GrokChatOptions;

+

+ if (options.Tools != null)

+ {

+ if (options.Tools.OfType<GrokSearchTool>().FirstOrDefault() is GrokSearchTool grokSearch)

+ result.Search = grokSearch.Mode;

+ else if (options.Tools.OfType<HostedWebSearchTool>().FirstOrDefault() is HostedWebSearchTool webSearch)

+ result.Search = GrokSearch.Auto;

+

+ // Grok doesn't support any other hosted search tools, so remove remaining ones

+ // so they don't get copied over by the OpenAI client.

+ options.Tools = [.. options.Tools.Where(tool => tool is not HostedWebSearchTool)];

+ }

+ else if (grok is not null)

{

- Search = grok.Search

- };

+ result.Search = grok.Search;

+ }

- if (grok.ReasoningEffort != null)

+ if (grok?.ReasoningEffort != null)

{

result.ReasoningEffortLevel = grok.ReasoningEffort switch

{

diff --git a/src/AI/Grok/GrokSearch.cs b/src/AI/Grok/GrokSearch.cs

deleted file mode 100644

index 552ddcd..0000000

--- a/src/AI/Grok/GrokSearch.cs

+++ /dev/null

@@ -1,3 +0,0 @@

-namespace Devlooped.Extensions.AI;

-

-public enum GrokSearch { Auto, On, Off }

diff --git a/src/AI/Grok/GrokSearchTool.cs b/src/AI/Grok/GrokSearchTool.cs

new file mode 100644

index 0000000..83021d0

--- /dev/null

+++ b/src/AI/Grok/GrokSearchTool.cs

@@ -0,0 +1,21 @@

+using Microsoft.Extensions.AI;

+

+namespace Devlooped.Extensions.AI;

+

+public enum GrokSearch { Auto, On, Off }

+

+///

+/// Configures Grok's live search capabilities.

+/// See https://docs.x.ai/docs/guides/live-search.

+///

+public class GrokSearchTool(GrokSearch mode) : HostedWebSearchTool

+{

+ ///

+ /// Sets the search mode for Grok's live search capabilities.

+ ///

+ public GrokSearch Mode { get; } = mode;

+

+ public override string Name => "Live Search";

+

+ public override string Description => "Performs live search using X.AI";

+}

diff --git a/src/AI/JsonExtensions.cs b/src/AI/JsonExtensions.cs

index b652d2e..67fddc8 100644

--- a/src/AI/JsonExtensions.cs

+++ b/src/AI/JsonExtensions.cs

@@ -10,7 +10,7 @@ static class JsonExtensions

///

/// Recursively truncates long strings in an object before serialization and optionally excludes additional properties.

///

- public static string ToJsonString(this object? value, int? maxStringLength = 100, bool includeAdditionalProperties = true)

+ public static string ToShortJsonString(this object? value, int? maxStringLength = 100, bool includeAdditionalProperties = true)

{

if (value is null)

return "{}";

@@ -19,6 +19,15 @@ public static string ToJsonString(this object? value, int? maxStringLength = 100

return FilterNode(node, maxStringLength, includeAdditionalProperties)?.ToJsonString() ?? "{}";

}

+ public static string ToShortJsonString(this JsonNode? node, int? maxStringLength = 100, bool includeAdditionalProperties = true)

+ {

+ if (node is null)

+ return "{}";

+

+ var filteredNode = FilterNode(node, maxStringLength, includeAdditionalProperties);

+ return filteredNode?.ToJsonString() ?? "{}";

+ }

+

static JsonNode? FilterNode(JsonNode? node, int? maxStringLength = 100, bool includeAdditionalProperties = true)

{

if (node is JsonObject obj)

diff --git a/src/AI/readme.md b/src/AI/readme.md

new file mode 100644

index 0000000..8b92937

--- /dev/null

+++ b/src/AI/readme.md

@@ -0,0 +1,101 @@

+

+Extensions for Microsoft.Extensions.AI

+

+## Grok

+

+Full support for Grok [Live Search](https://docs.x.ai/docs/guides/live-search)

+and [Reasoning](https://docs.x.ai/docs/guides/reasoning) model options.

+

+```csharp

+// Sample X.AI client usage with .NET

+var messages = new Chat()

+{

+ { "system", "You are a highly intelligent AI assistant." },

+ { "user", "What is 101*3?" },

+};

+

+var grok = new GrokClient(Env.Get("XAI_API_KEY")!);

+

+var options = new GrokChatOptions

+{

+ ModelId = "grok-3-mini", // or "grok-3-mini-fast"

+ Temperature = 0.7f,

+ ReasoningEffort = ReasoningEffort.High, // or ReasoningEffort.Low

+ Search = GrokSearch.Auto, // or GrokSearch.On or GrokSearch.Off

+};

+

+var response = await grok.GetResponseAsync(messages, options);

+```

+

+Search can alternatively be configured using a regular `ChatOptions`

+and adding the `HostedWebSearchTool` to the tools collection, which

+sets the live search mode to `auto` like above:

+

+```csharp

+var messages = new Chat()

+{

+ { "system", "You are an AI assistant that knows how to search the web." },

+ { "user", "What's Tesla stock worth today? Search X and the news for latest info." },

+};

+

+var grok = new GrokClient(Env.Get("XAI_API_KEY")!);

+

+var options = new ChatOptions

+{

+ ModelId = "grok-3",

+ Tools = [new HostedWebSearchTool()]

+};

+

+var response = await grok.GetResponseAsync(messages, options);

+```
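
+
+A specific live search mode can presumably also be requested through the tools
+collection by using `GrokSearchTool`, which derives from `HostedWebSearchTool`.
+The following is a minimal sketch, assuming `GrokClient` lives in the
+`Devlooped.Extensions.AI` namespace and reading the key with
+`Environment.GetEnvironmentVariable` instead of `Env.Get`:
+
+```csharp
+using Devlooped.Extensions.AI;   // assumed namespace for GrokClient
+using Microsoft.Extensions.AI;
+
+var grok = new GrokClient(Environment.GetEnvironmentVariable("XAI_API_KEY")!);
+
+var options = new ChatOptions
+{
+    ModelId = "grok-3",
+    // GrokSearchTool carries an explicit mode: GrokSearch.Auto, On or Off
+    Tools = [new GrokSearchTool(GrokSearch.On)]
+};
+
+var response = await grok.GetResponseAsync("What's Tesla stock worth today?", options);
+Console.WriteLine(response.Text);
+```
+
+When both tool kinds are present, the `GrokSearchTool` mode is expected to win,
+since it is checked before the plain `HostedWebSearchTool`.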

+

+## Console Logging

+

+Additional `UseJsonConsoleLogging` extensions for rich JSON-formatted console logging of AI requests

+are provided at two levels:

+

+* Chat pipeline: similar to `UseLogging`.

+* HTTP pipeline: lowest possible layer before the request is sent to the AI service,

+ can capture all requests and responses. Can also be used with other Azure SDK-based

+ clients that leverage `ClientPipelineOptions`.

+

+> [!NOTE]

+> Rich JSON formatting is provided by [Spectre.Console](https://spectreconsole.net/)

+

+The HTTP pipeline logging can be enabled by calling `UseJsonConsoleLogging` on the

+client options passed to the client constructor:

+

+```csharp

+var openai = new OpenAIClient(

+ Env.Get("OPENAI_API_KEY")!,

+ new OpenAIClientOptions().UseJsonConsoleLogging());

+```

+

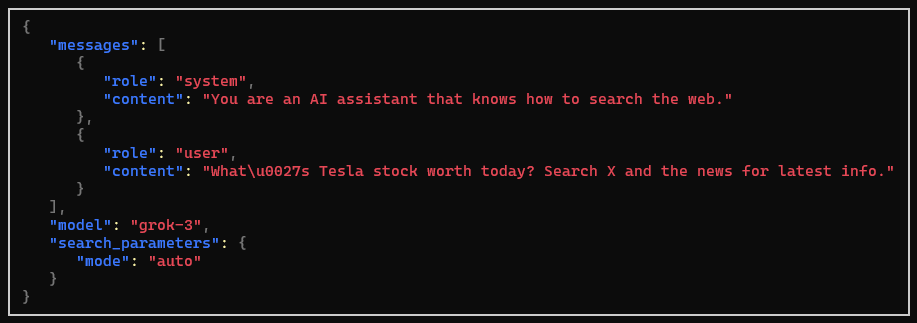

+For a Grok client with search enabled, a request would look like the following:

+

+

+

+Both alternatives receive an optional `JsonConsoleOptions` instance to configure

+the output, including truncating or wrapping long messages, setting panel style,

+and more.
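
+
+As a rough illustration of those options on the HTTP pipeline, the sketch below
+sets every formatting property explicitly. The values are arbitrary examples, and
+the key is read with `Environment.GetEnvironmentVariable` rather than `Env.Get`:
+
+```csharp
+using Devlooped.Extensions.AI;
+using Microsoft.Extensions.AI;
+using OpenAI;
+using Spectre.Console;
+
+var consoleOptions = new JsonConsoleOptions
+{
+    Border = BoxBorder.Rounded,           // panel border kind
+    BorderStyle = Style.Parse("grey"),    // panel border style
+    TruncateLength = 100,                 // shorten long string values
+    WrapLength = 80,                      // wrap long JSON lines
+    IncludeAdditionalProperties = false,  // drop additional properties from the output
+    InteractiveConfirm = false,           // never prompt before enabling logging
+};
+
+var openai = new OpenAIClient(
+    Environment.GetEnvironmentVariable("OPENAI_API_KEY")!,
+    new OpenAIClientOptions().UseJsonConsoleLogging(consoleOptions));
+```
+
+With `InteractiveOnly` left at its default of `true`, nothing is rendered when the
+console is not interactive.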

+

+The chat pipeline logging is added similarly to other pipeline extensions:

+

+```csharp

+IChatClient client = new GrokClient(Env.Get("XAI_API_KEY")!)

+ .AsBuilder()

+ .UseOpenTelemetry()

+ // other extensions...

+ .UseJsonConsoleLogging(new JsonConsoleOptions()

+ {

+ // Formatting options...

+ Border = BoxBorder.None,

+ WrapLength = 80,

+ })

+ .Build();

+```

+

+

+

+

+

\ No newline at end of file

diff --git a/src/Samples/Program.cs b/src/Samples/Program.cs

index 26e5140..28e20ae 100644

--- a/src/Samples/Program.cs

+++ b/src/Samples/Program.cs

@@ -1,20 +1,20 @@

// Sample X.AI client usage with .NET

var messages = new Chat()

{

- { "system", "You are a highly intelligent AI assistant." },

- { "user", "What is 101*3?" },

+ { "system", "You are an AI assistant that knows how to search the web." },

+ { "user", "What's Tesla stock worth today? Search X and the news for latest info." },

};

-var grok = new GrokClient(Env.Get("XAI_API_KEY")!);

+var grok = new GrokClient(Env.Get("XAI_API_KEY")!, new GrokClientOptions()

+ .UseJsonConsoleLogging(new() { WrapLength = 80 }));

-var options = new GrokChatOptions

+var options = new ChatOptions

{

- ModelId = "grok-3-mini", // or "grok-3-mini-fast"

- Temperature = 0.7f,

- ReasoningEffort = ReasoningEffort.High, // or GrokReasoningEffort.Low

- Search = GrokSearch.Auto, // or GrokSearch.On or GrokSearch.Off

+ ModelId = "grok-3",

+ // Enables Live Search

+ Tools = [new HostedWebSearchTool()]

};

var response = await grok.GetResponseAsync(messages, options);

-AnsiConsole.MarkupLine($":robot: {response.Text}");

\ No newline at end of file

+AnsiConsole.MarkupLine($":robot: {response.Text.EscapeMarkup()}");

\ No newline at end of file

diff --git a/src/Weaving/Weaving.csproj b/src/Weaving/Weaving.csproj

index a597c21..078a1ae 100644

--- a/src/Weaving/Weaving.csproj

+++ b/src/Weaving/Weaving.csproj

@@ -11,6 +11,7 @@

+

diff --git a/src/Weaving/readme.md b/src/Weaving/readme.md

index a92996f..d022264 100644

--- a/src/Weaving/readme.md

+++ b/src/Weaving/readme.md

@@ -1 +1,34 @@

+

Run AI-powered C# files with the power of Microsoft.Extensions.AI and Devlooped.Extensions.AI

+

+```csharp

+#:package Weaving@0.*

+

+// Sample X.AI client usage with .NET

+var messages = new Chat()

+{

+ { "system", "You are a highly intelligent AI assistant." },

+ { "user", "What is 101*3?" },

+};

+

+var grok = new GrokClient(Env.Get("XAI_API_KEY")!);

+

+var options = new GrokChatOptions

+{

+ ModelId = "grok-3-mini", // or "grok-3-mini-fast"

+ ReasoningEffort = ReasoningEffort.High, // or ReasoningEffort.Low

+ Search = GrokSearch.Auto, // or GrokSearch.On or GrokSearch.Off

+};

+

+var response = await grok.GetResponseAsync(messages, options);

+

+AnsiConsole.MarkupLine($":robot: {response.Text}");

+```

+

+> [!NOTE]

+> The most useful namespaces and dependencies for developing

+> Microsoft.Extensions.AI-powered applications are automatically referenced and imported when using this package.

+

+

+

+

\ No newline at end of file