N-bit sized int and floating point types [Feature-Request] #1318

Replies: 20 comments

-

Why does the C# language need to change to accommodate this? You can already define your own int256 type and use it today.
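Here is a minimal sketch of the "define your own type" route: a user-defined 128-bit unsigned integer built from two `ulong` halves. The name `MyUInt128` and its members are hypothetical. It only shows addition with carry, equality and hex formatting. A usable type would also need comparison, multiplication, division and parsing.

```csharp
using System;

// Hypothetical user-defined 128-bit unsigned integer made of two 64-bit halves.
public readonly struct MyUInt128 : IEquatable<MyUInt128>
{
    public readonly ulong High; // upper 64 bits
    public readonly ulong Low;  // lower 64 bits

    public MyUInt128(ulong high, ulong low)
    {
        High = high;
        Low = low;
    }

    // Any ulong widens losslessly into the low half.
    public static implicit operator MyUInt128(ulong value) => new MyUInt128(0, value);

    // Add the low halves; if the sum wrapped around, carry 1 into the high half.
    // Overflow of the high half wraps silently (unchecked semantics).
    public static MyUInt128 operator +(MyUInt128 a, MyUInt128 b)
    {
        ulong low = unchecked(a.Low + b.Low);
        ulong carry = low < a.Low ? 1UL : 0UL;
        return new MyUInt128(unchecked(a.High + b.High + carry), low);
    }

    public bool Equals(MyUInt128 other) => High == other.High && Low == other.Low;
    public override bool Equals(object? obj) => obj is MyUInt128 other && Equals(other);
    public override int GetHashCode() => HashCode.Combine(High, Low);
    public static bool operator ==(MyUInt128 a, MyUInt128 b) => a.Equals(b);
    public static bool operator !=(MyUInt128 a, MyUInt128 b) => !a.Equals(b);

    // 32 hex digits: high half first, then low half.
    public override string ToString() => $"0x{High:X16}{Low:X16}";
}
```

For example, adding `1UL` to `new MyUInt128(0, ulong.MaxValue)` carries into the high half and produces `new MyUInt128(1, 0)`.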

-

This is not answering the 'why'; it's just restating the 'what'. You haven't explained why this is actually needed. It's like me saying "I want channels in the language. Why? Because it would give me channels."

-

It would be faster and easier to use if it were part of C# itself.

-

And there are no good floating-point libraries (for types bigger than double) out there that do not just use

-

Why? It would literally be the same to any end consumer: they would write "int128" either way. In other words, what do you think the C# compiler could do here that you could not do yourself?

You could write one :) It's unclear how the C# compiler would do this, or why C# would be responsible for this type. Maybe you meant to suggest this new API on dotnet/corefx? This is the repo for the C# language. Types (like System.Int32/Single/Double/Boolean) belong in the BCL repos. Thanks!

-

Thanks, I will suggest it there soon.

-

@CyrusNajmabadi but then you miss the opportunity to introduce a warm, wonderfully fuzzy, entirely cryptic, language-wide alias. Come on, this might be our best and only shot at getting manifest

Think of the possibilities:

    ref readonly long long int value = ref x;

-

I think the type should be part of the .NET framework, but the syntax should be part of C#.

-

@hamarb123 Sorry, I was imagining what the natural alias might look like if it existed, drawing inspiration from (and poking fun at) C++. I do love C++, though. I was kidding.

-

"Types" smaller than 8 bits are rather dubious. They can't be actual types, since the runtime has no notion of a bit-sized type. You'll never be able to have something like

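A small sketch of the point about bit-sized types, assuming .NET 5 or later for `Unsafe.SizeOf`: every field occupies at least one whole byte, so two one-bit flags only share a byte when they are packed into an integer and masked by hand. `TwoFlags`, `PackedFlags` and `SizeDemo` are hypothetical names.

```csharp
using System.Runtime.CompilerServices;

// Two one-bit flags stored as ordinary fields: each bool still takes a whole byte.
public struct TwoFlags
{
    public bool A;
    public bool B;
}

// The same two flags packed by hand into the low bits of a single byte.
public struct PackedFlags
{
    private byte _bits;

    public bool A
    {
        readonly get => (_bits & 0b01) != 0;
        set => _bits = (byte)(value ? _bits | 0b01 : _bits & ~0b01);
    }

    public bool B
    {
        readonly get => (_bits & 0b10) != 0;
        set => _bits = (byte)(value ? _bits | 0b10 : _bits & ~0b10);
    }
}

public static class SizeDemo
{
    // Unsafe.SizeOf<T>() reports the managed size of a value type in bytes.
    public static int TwoFlagsSize => Unsafe.SizeOf<TwoFlags>();
    public static int PackedFlagsSize => Unsafe.SizeOf<PackedFlags>();
}
```

The packed version is half the size, but only because the "bit fields" are properties over a byte, not real sub-byte types the runtime knows about.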
-

It doesn't matter if it actually uses 8 bits, because

-

But small types such as

-

This can be done without new language syntax; it only needs an analyzer:

Beta Was this translation helpful? Give feedback.

-

|

|

Beta Was this translation helpful? Give feedback.

-

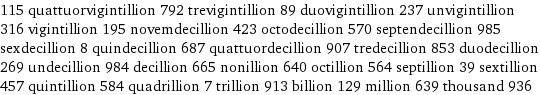

2^1024? That's a very large number; I doubt anyone would need such a number. It sounds like an XY problem. For fun, I asked Wolfram Alpha for 2^256: that's 78 decimal digits, let alone 2^1024, which has 309 decimal digits!
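The digit counts above can also be checked with `System.Numerics.BigInteger`, which the BCL already provides for arbitrary-size integers. `DigitCount` is a hypothetical helper name.

```csharp
using System.Numerics;

public static class DigitCount
{
    // Number of decimal digits in 2^exponent, computed exactly with BigInteger.
    public static int DecimalDigitsOfPowerOfTwo(int exponent) =>
        BigInteger.Pow(2, exponent).ToString().Length;
}
```

`DecimalDigitsOfPowerOfTwo(256)` returns 78 and `DecimalDigitsOfPowerOfTwo(1024)` returns 309. In general, 2^n has ⌊n·log10 2⌋ + 1 decimal digits.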

-

The types int1, int2, int4 and so on are really similar to my proposal for bitfields (#465) or to #457. It can be OK for int1 to really occupy 8 bits when used like this:

    int1 oneBit = 1; // a "restricted" range integer: its only valid values are 0 and 1; the memory actually used is 1 byte

This can be accomplished with an analyzer, as @ufcpp was saying, but when you do this:

    int1[] arr = new int1[8]; // I expect this to occupy exactly 8 bits --> 1 byte, not 64 bits!

or this:

    struct myDouble
    {
        int1 sign;
        int11 exp;
        int52 mantissa;
    }

    myDouble x = Unsafe.As<double, myDouble>(ref aDouble); // 'x' should be the same size as a double!

No CLR change is needed to accomplish these bit arrays / bitfields: the compiler can apply a transformation analogous to the one it already uses when a fixed buffer is declared in a struct. The struct myDouble is changed to this (set_field_value/get_field_value are hypothetical helpers taking a start bit and an exclusive end bit):

    struct myDouble
    {
        private long __compiler_mangled_name_m_value;

        public int sign
        {
            set => set_field_value(ref __compiler_mangled_name_m_value, 0, 1, value);
            get => get_field_value(__compiler_mangled_name_m_value, 0, 1);
        }

        public int exp
        {
            set => set_field_value(ref __compiler_mangled_name_m_value, 1, 12, value);
            get => get_field_value(__compiler_mangled_name_m_value, 1, 12);
        }

        public long mantissa
        {
            set => set_field_value(ref __compiler_mangled_name_m_value, 12, 64, value);
            get => get_field_value(__compiler_mangled_name_m_value, 12, 64);
        }
    }

I'm less sure about the usefulness of float1, float2, float4... float16, yes, is used in some GPU programming, but in my work in the embedded sector I have never seen anything that needs a float4; it is really hard to find a float used there at all. Only integers (sometimes smaller than 8 bits) and strings are used.
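The lowering described in this comment can be written by hand today with shifts and masks. This is a sketch, not the compiler transformation itself. The name `MyDouble` is hypothetical, and the fields are unsigned, so a 1-bit field holds 0 or 1 rather than a signed value. Following the declaration order in the post, sign occupies bit 0, exp bits 1 to 11 and mantissa bits 12 to 63. That is not the IEEE 754 bit layout of a double; it only gives a struct of the same 8-byte size.

```csharp
// Three logical fields (1 + 11 + 52 = 64 bits) packed into one 64-bit integer.
public struct MyDouble
{
    private ulong _value;

    // Read `width` bits starting at bit `start`.
    private readonly ulong Get(int start, int width) =>
        (_value >> start) & ((1UL << width) - 1);

    // Clear the target bits, then OR in the new value masked to the field width,
    // so an oversized value cannot spill into neighboring fields.
    private void Set(int start, int width, ulong bits)
    {
        ulong mask = ((1UL << width) - 1) << start;
        _value = (_value & ~mask) | ((bits << start) & mask);
    }

    public uint Sign { readonly get => (uint)Get(0, 1); set => Set(0, 1, value); }
    public uint Exp { readonly get => (uint)Get(1, 11); set => Set(1, 11, value); }
    public ulong Mantissa { readonly get => Get(12, 52); set => Set(12, 52, value); }
}
```

For example, assigning `0x800` (a 12-bit value) to `Exp` stores 0. The setter truncates the value to the field's 11 bits and leaves `Sign` and `Mantissa` untouched.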

-

Most of the types in the original post are rather arbitrary, and the main benefit of adding them would be having more types for their own sake, which I don't think is a good enough reason. Possible real-world use cases that I can think of (feel free to copy this to the original post):

- Int128
  This is partly a joke, but we could make the type alias
- Int4 (nybble, or half of a byte)

I would personally use Int4 / nybble in my code, so +1 from me. Also: float sizes below 16-bit half precision make no sense at all. Even at half precision you can only store about 3 decimal digits reliably. If you aim for millimeter precision, that means a half-precision float can't get much bigger than
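The "about 3 decimal digits" figure follows from the IEEE 754 binary16 (half-precision) format: 1 sign bit, 5 exponent bits and 10 stored fraction bits, plus an implicit leading 1 for normal numbers. This short derivation assumes normal (non-subnormal) values and gives the precision and the gap between neighboring representable values (ulp):

$$
p = 10 + 1 = 11 \text{ bits} \quad\Rightarrow\quad p \log_{10} 2 = 11 \times 0.30103 \approx 3.31 \text{ decimal digits}
$$

$$
\mathrm{ulp}(x) = 2^{\lfloor \log_2 |x| \rfloor - 10}, \qquad \text{e.g. } 1 \le x < 2 \;\Rightarrow\; \mathrm{ulp}(x) = 2^{-10} \approx 0.000977
$$

The gap doubles with each power of two, so the absolute precision available shrinks as the magnitude grows.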

-

I think there are three things the compiler can do here that a user-defined type cannot do itself. However, I think one of them doesn't matter for user-defined types, and the other two are better addressed by broader language features.

-

Types smaller than a byte are not only used for BCD. In my field, a lot of devices "pack" their diagnostics into a byte, each bit being a different diagnostic status. Devices that give you I/O ports, for example one with 32 inputs, "pack" them all into an int32, where again each bit is the status of a port. The difficult part (maybe) would be the size of these types: when used as a normal variable, one can simply be treated as having a size of 1 byte, but inside a struct or array this no longer holds: a struct with an array of 8 int1 should occupy 4 bytes. Some examples:

    uint1 a;     // 8 bits (1 byte) as a standalone variable
    a = 0;       // OK
    a = 9;       // error: only 0 and 1 are valid

    uint1[] array = new uint1[8]; // 8 bits, not 64!

    struct test
    {
        int4 a;
        int4 b;
    }

    var c = new test(); // 8 bits

Sadly, not having bit arrays / bitfields forces me to use C for those tasks.
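Until such types exist, the packing described in this comment is usually done by hand with shifts and masks. Here is a minimal sketch with hypothetical helper names. It covers one status bit per input port in a 32-bit word, and packed BCD, where each 4-bit nibble holds one decimal digit.

```csharp
using System;

public static class BitPacking
{
    // True if bit `port` (0..31) of the packed input word is set.
    public static bool IsInputActive(uint inputs, int port) =>
        ((inputs >> port) & 1u) != 0;

    // Returns a copy of `inputs` with bit `port` set or cleared.
    public static uint WithInput(uint inputs, int port, bool active) =>
        active ? inputs | (1u << port) : inputs & ~(1u << port);

    // Decodes a packed BCD byte: high nibble = tens digit, low nibble = units digit.
    public static int DecodeBcd(byte packed) =>
        (packed >> 4) * 10 + (packed & 0x0F);

    // Encodes a value in 0..99 as a packed BCD byte.
    public static byte EncodeBcd(int value)
    {
        if (value is < 0 or > 99)
            throw new ArgumentOutOfRangeException(nameof(value));
        return (byte)(((value / 10) << 4) | (value % 10));
    }
}
```

For example, `DecodeBcd(0x42)` returns 42, and `WithInput(0u, 31, true)` returns `0x8000_0000u`.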

-

request too,

-

I propose that we be able to have N-bit int and floating-point types.

Here is how it could work:

Obviously we already have the following:

But what about the following:

The types could be named as follows:

For integers:

- intj, where j is a power of 2

For unsigned integers:

- uintj, where j is a power of 2

For floats:

- floatj, where j is a power of 2

And there could be special names for the floats from float8 to float256: quarter, half, single (already exists), double (already exists), quadruple and octuple.

Why do I need this in my beloved C#?

Won't this ruin my IDE with millions of different type names?
