
-

A few things here. First, C# has had, since the very beginning, the idea that the inner 'best type' wins in cases like this. So, for example, if you have [...] In that regard, switch expressions behave the way constructs in the language have always behaved. That said, what we added later was that if there is no "best common type" of all the "arms", we allow external data to flow into the expression, instead of determining things just from the expression itself.

Sure. But inherently, what's going on is that we have a set of rules that say "if you can figure out the type this way, then do so" (because that's how the language worked from the beginning). But then "if that fails, you can add these extra rules to make the type work". This approach allows more code to succeed, while also maintaining back compat.

The problem with this is back compat. Tons of code was written with the rules prior to us adding support for 'target typing'. That code has meaning today, and changing the rules would absolutely break tons of real-world libs and apps that depend on it. So we have to accept the existing behavior, and only allow the new rules to come into play when the original behavior would result in an error. We can't have the new rules change the meaning of existing code.
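To make the two-step rule described above concrete, here is a minimal sketch. It is not taken from the thread: the variable names are illustrative, and the second assignment assumes C# 9 or later, where a conditional expression without a natural type can be target-typed.

```csharp
using System;

class Program
{
    static void Main()
    {
        bool flag = true; // illustrative condition, not from the thread

        // Step 1: int and double have a best common type (double), so the
        // expression is typed double on its own. The int arm is converted to
        // double first, and only then is the result boxed into object.
        object a = flag ? 1 : 2.5;
        Console.WriteLine(a.GetType()); // System.Double

        // Step 2: int and string have no best common type, so the target
        // type (object) is used and each arm is converted to it separately.
        object b = flag ? 1 : "two";
        Console.WriteLine(b.GetType()); // System.Int32
    }
}
```

In both assignments the target type is `object`, but it only influences the result in the second one, because the first expression already has a type of its own.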


-

Switch expressions were added in C# 8, long after target-based type resolution was added to the language. Instead of using the opportunity to design switch expressions properly, the design team chose to conform to the "broken" behavior of conditional expressions, which also forces [...] The design team decided they would prefer to preserve this behavior as they believed it was somehow "better". This was not a forced decision, and it would not have broken anyone's code if they had decided otherwise. This does not seem like an issue of backwards compatibility, but of perceived semantic "consistency" between a new feature (switch expression) and an old one (conditional expression). However, since switch expressions have multiple cases, it amplifies the original design error several-fold, without providing much benefit to the user. I feel that this perceived "consistency" between the new and old is not really about end-user experience, but more about the "designer experience" or "aesthetic sense" of their language being a "whole" in some way. Most users don't know the language deeply enough to "appreciate" this (non-)feature and they would rather it "just work" as expected. As far as I'm concerned, this behavior means that it doesn't achieve that.

-


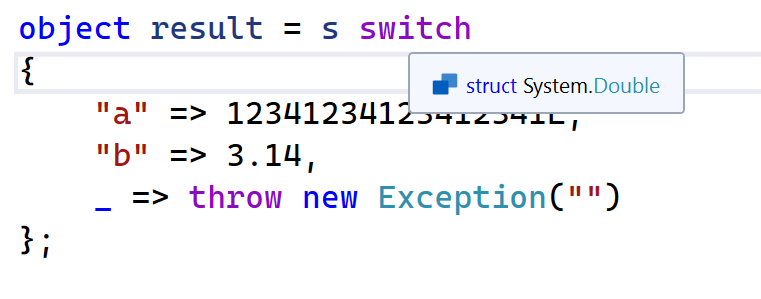

For what it's worth, you can override the default type inference:

```csharp
var s = "a";

var result = s switch
{
    "a" => (object)12341234123412341L,
    "b" => 3.14,
    _ => throw new Exception("")
};

Console.WriteLine(Convert.ToInt64(result)); // Prints "12341234123412341"
```

But I would agree with you that there is a bug in the analyzer as it should be adding that [...]

What cannot happen is for the compiler to suddenly change its type inference rules to work in the way you feel they should. That would seriously break vast amounts of existing code. Whether the way the rules work now is the right design choice or not is a moot point. They are what they are and they cannot change.

-

I did not initially intend to suggest changing this behavior in the language specification. I'm aware this would be a breaking change. Introducing breaking changes seems to be something that mostly Microsoft team members can "dare" to propose, and they do: here is a list of breaking changes in C# 10. I initially thought it was a compiler bug. I also don't personally believe that it would have made any difference had I suggested it during the discussions for C# 8. About two years ago I donated an enormous amount of time and effort providing carefully thought-out suggestions and comments for the record design proposal, and my suggestions were completely ignored by the design team (not a single comment). Since then I have had no interest in participating in language discussions. I only posted here because @CyrusNajmabadi suggested that I do so.

-

Please note that [...]

```csharp
var s = "a";

object result =
    s == "a" ? 12341234123412341L
    : s == "b" ? 3.14
    : s == "c" ? true
    : throw new Exception("");
```

And as @CyrusNajmabadi has also mentioned, [...] In fact, adding the [...] so you had to fix it by explicitly casting one of the branches, for example: [...]

-

I agree that this looks somewhat strange. But it has nothing to do with target-typed new. See the comment from the spec:

The exact rule for how the type of a switch expression is determined: [...]

(The second part allows the "bool arm".) The fix is explained here. And these rules were designed with the mindset that implicit conversions do not cause data loss...
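The quoted rule did not survive in this copy of the thread. As a paraphrase from memory of the C# 8 pattern-matching proposal (an assumption, not a quote): the type of a switch expression is the best common type of its arms if one exists, and otherwise the expression converts to any target type that every arm converts to. The sketch below shows how the `bool` arm moves the expression from the first path to the second, using the values from this thread. The helper names `TwoArms` and `ThreeArms` are made up for illustration.

```csharp
using System;

class Program
{
    // long and double have a best common type (double), so the long arm is
    // converted to double before the result is boxed into object.
    static object TwoArms(string s) => s switch
    {
        "a" => 12341234123412341L,
        "b" => 3.14,
        _ => throw new ArgumentException("unexpected key", nameof(s))
    };

    // long, double and bool have no best common type, so the switch is
    // target-typed to object and each arm is boxed with its own type.
    static object ThreeArms(string s) => s switch
    {
        "a" => 12341234123412341L,
        "b" => 3.14,
        "c" => true,
        _ => throw new ArgumentException("unexpected key", nameof(s))
    };

    static void Main()
    {
        Console.WriteLine(TwoArms("a").GetType());            // System.Double
        Console.WriteLine(Convert.ToInt64(TwoArms("a")));     // 12341234123412340

        Console.WriteLine(ThreeArms("a").GetType());          // System.Int64
        Console.WriteLine(Convert.ToInt64(ThreeArms("a")));   // 12341234123412341
    }
}
```

The last digit changes in the two-arm version because the value is larger than 2^53, where adjacent `double` values are 2 apart, so the odd `long` cannot be represented exactly.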


-

This is definitely an issue of backwards compatibility now. We cannot change this without breaking a lot of code that depends on this behavior. And we cannot break it without also then introducing a different form of inconsistency that would be likewise unfortunate. So this isn't a case where a break is even arguable as strictly better. It's a case where it will improve some cases for some users a bit, but it will also degrade things for others by potentially more. It is unlikely we would go that route without enormous evidence that the current state of affairs is broken and otherwise unsalvageable. However, currently, that does not seem to be the case.

-

In a strong sense yes. :-) Preservation of behavior is a very important tenet for us. As is reusing concepts throughout the language for consistency. Switch expressions, for example, aren't meant to replace existing constructs. They're meant to complement them. If they were meant to replace (say because we considered the prior constructs to be a mistake), then we'd use that opportunity to introduce new and different semantics. However, that's not what happened here. Rather, we still feel the existing constructs are good and have semantics we think are acceptable. So we wanted switch expressions to fit into that family, not create their own new family :-) BTW, for history, at one point we considered making this construct just look like a ternary expression ([...]

-

Sure. But we discussed this sort of thing at length and ultimately made a decision we felt was appropriate, balancing this concern along with a whole host of others. We even considered things like not allowing target typing to work in a case like this. Ultimately though, we felt the best benefit to users would be to be consistent with existing expression forms, while also allowing target typing. We recognized this sort of situation could arise, but we believed that, on the whole, it would be the best outcome for the language and community.

-

This is certainly true. However, the bar for breaking changes is extremely high. First off, there has to be a belief that very few people will be hit by the change. Second, there has to be a belief that almost no code hit by the break was likely to be correct before. This is not the case here. This would likely hit many people, and we would likely get more reports about working code breaking vs the single report here that the behavior we chose is undesirable. We have to balance things out and make sure that the cure is not worse than the disease. :-)

-

Double vs long is another case with enormous history in the language and a huge amount of code that depends on it. However, that case was never even part of my argument. I was simply stating that conditionals allow for the best common type first, over a target type. And in any case where a conversion could happen (including all the cases without data loss), that has been the behavior of the language. We use this 'best common type' concept in many places. It's a core idea the language has been built on, and one we think of positively. It would be extremely strange to not use it given our history, and it would likely feel like we were bifurcating things :-/

-

First, this is not true. We've changed the language several times based on user feedback. We're doing this right now in fact because of user feedback around initializers in structs. And we're going to take a breaking change there (again based on user feedback). There, though, we feel that the time period is short enough that we can change things without impacting too many people, and because the change won't give you different behavior, it will simply make a highly suspect case illegal, and the user can add code to get either behavior they want.

What does "illusion of participation" mean? We are literally discussing the matter, and several language designers are here sharing their thoughts about this as well as giving the history of this topic, the pros/cons that were discussed, why we settled on what we did, and what would be necessary to get a change here. How is that either an illusion or not participation?

As you can see, this did not fall upon deaf ears. The designers here are both listening and giving a ton of information to help explain how we see and process this feedback as well as how we determine how it could go forward. Nothing has been ignored here. It's simply that this is not a trivial thing that can be changed. Nor is it something universally (or even broadly) considered to be a mistake. That's why this is a discussion. So that people from all backgrounds and beliefs can converse on the topic and both listen and share what they think. Thanks!


-

This code was given as a "fix" by a Roslyn analyzer suggestion. It changes the assigned value from `12341234123412341` to `12341234123412340`. The original worked fine: [...]

I've reported this as an analyzer issue and a compiler issue but was told that fundamentally this behavior was "as per the language spec" and not a compiler issue (it was accepted as an analyzer issue).

So now I'm here. The fact that for several years, C#'s own analyzer suggested this code-breaking change as if it were somehow "semantics-preserving" is by itself evidence of how unintuitive and unexpected this behavior is.

Moreover, if I added a `bool`-typed case to the switch expression, it would work fine!

I've wasted hours of bug tracking only to eventually be told that the compiler behaved like this "as per the spec". It's mind-bending that removing one case (here `"c" => true`) would significantly alter the behavior of the code.

Please consider sorting out your specifications to make them more predictable and useful for the programmer, not just a set of arbitrary rules that have to be blindly accepted as gospel. If the target type is `object`, it makes sense that the assigned value and type should be preserved as much as possible. That's the whole point of the `object` type.
